The easiest way to deploy Terrakube is with our Helm chart. To learn more, review the following:

🔨 Helm Chart

You can deploy Terrakube using different authentication providers. For more information check

WIP

WIP

Special thanks to SolomonHD who has created the Traefik setup example to use Terrakube

Original Reference:

https://gist.github.com/SolomonHD/b55be40146b7a53b8f26fe244f5be52e

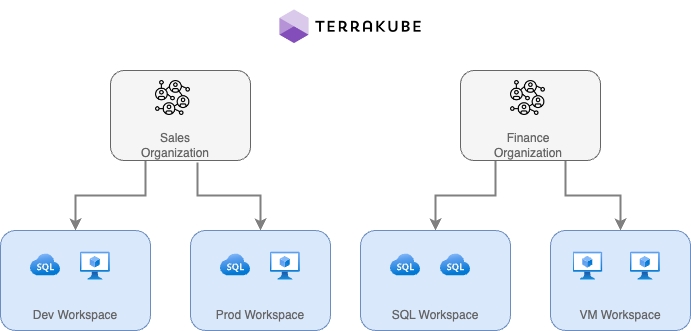

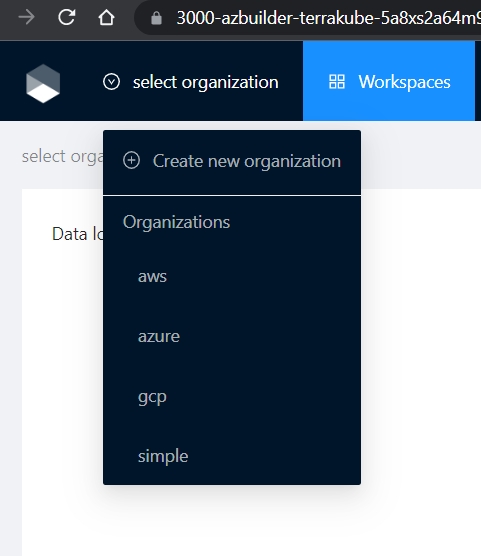

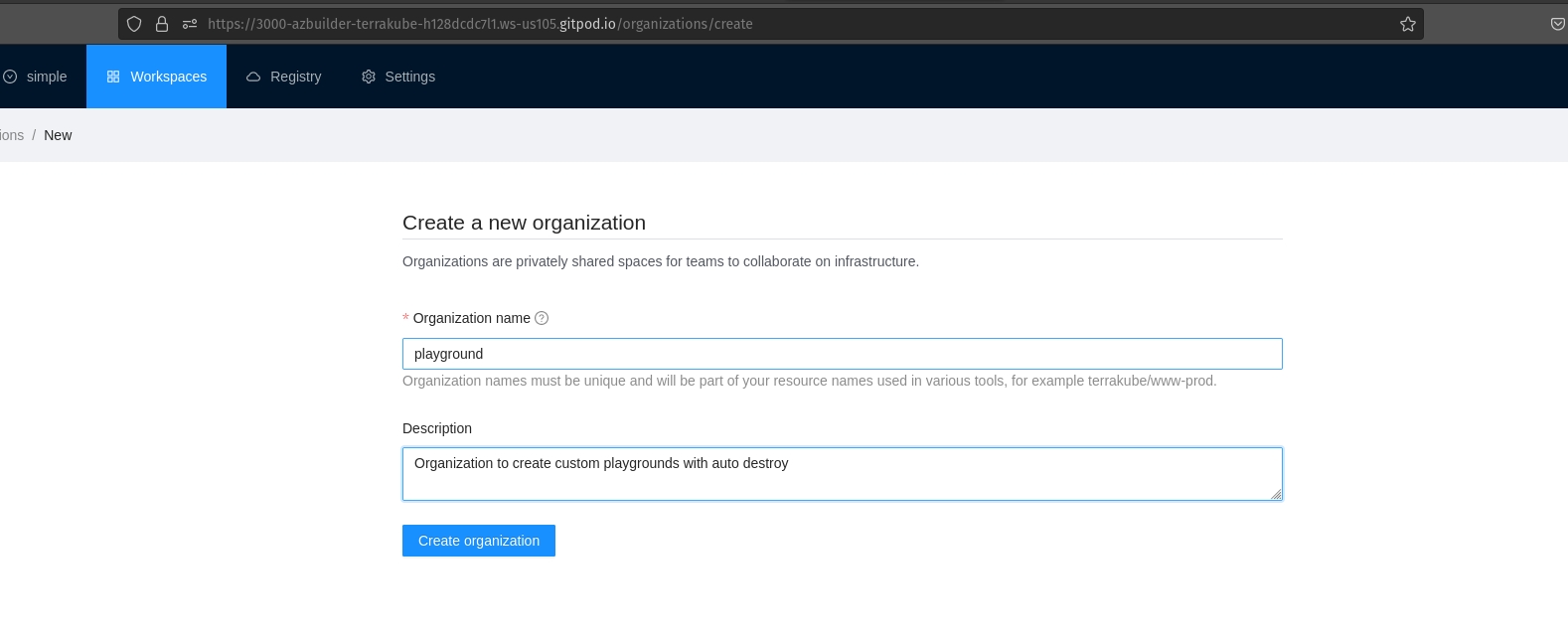

Organizations are privately shared spaces for teams to collaborate on infrastructure. They are logical containers that you can use to mirror your company hierarchy, organize workspaces and private registry modules, and assign fine-grained permissions to your teams.

In this section:

Creating an Organization

Global Variables

Team Management

API Tokens

Templates

Tags

You can deploy Terrakube to any Kubernetes cluster using the Helm chart available in the following repository:

https://github.com/AzBuilder/terrakube-helm-chart

To use the repository do the following:

helm repo add terrakube-repo https://AzBuilder.github.io/terrakube-helm-chart

helm repo update

When Terrakube needs to run behind a corporate proxy, the following environment variable can be set in each container:

JAVA_TOOL_OPTIONS="-Dhttp.proxyHost=your.proxy.net -Dhttp.proxyPort=8080 -Dhttp.nonProxyHosts=XXXXX -Dhttps.proxyHost=your.proxy.net -Dhttps.proxyPort=8080 -Dhttps.nonProxyHosts=XXXXX"

When using the official Helm chart, the environment variable can be set as follows:

api:

env:

- name: JAVA_TOOL_OPTIONS

value: "-Dhttp.proxyHost=your.proxy.net -Dhttp.proxyPort=8080 -Dhttp.nonProxyHosts=XXXXX -Dhttps.proxyHost=your.proxy.net -Dhttps.proxyPort=8080 -Dhttps.nonProxyHosts=XXXXX"

registry:

env:

- name: JAVA_TOOL_OPTIONS

value: "-Dhttp.proxyHost=your.proxy.net -Dhttp.proxyPort=8080 -Dhttp.nonProxyHosts=XXXXX -Dhttps.proxyHost=your.proxy.net -Dhttps.proxyPort=8080 -Dhttps.nonProxyHosts=XXXXX"

executor:

env:

- name: JAVA_TOOL_OPTIONS

value: "-Dhttp.proxyHost=your.proxy.net -Dhttp.proxyPort=8080 -Dhttp.nonProxyHosts=XXXXX -Dhttps.proxyHost=your.proxy.net -Dhttps.proxyPort=8080 -Dhttps.nonProxyHosts=XXXXX"

Terrakube is an open source collaboration platform for running remote infrastructure as code operations using Terraform or OpenTofu. It aims to be a complete replacement for closed-source tools like Terraform Enterprise, Scalr or Env0.

The platform can be installed in any Kubernetes cluster. You can customize it using other open source tools already available for Terraform (for example terratag, infracost and terrascan), and you can integrate it with different authentication providers such as Azure Active Directory, Google Cloud Identity, GitHub, GitLab or any other provider supported by Dex.

Terrakube provides different features like the following:

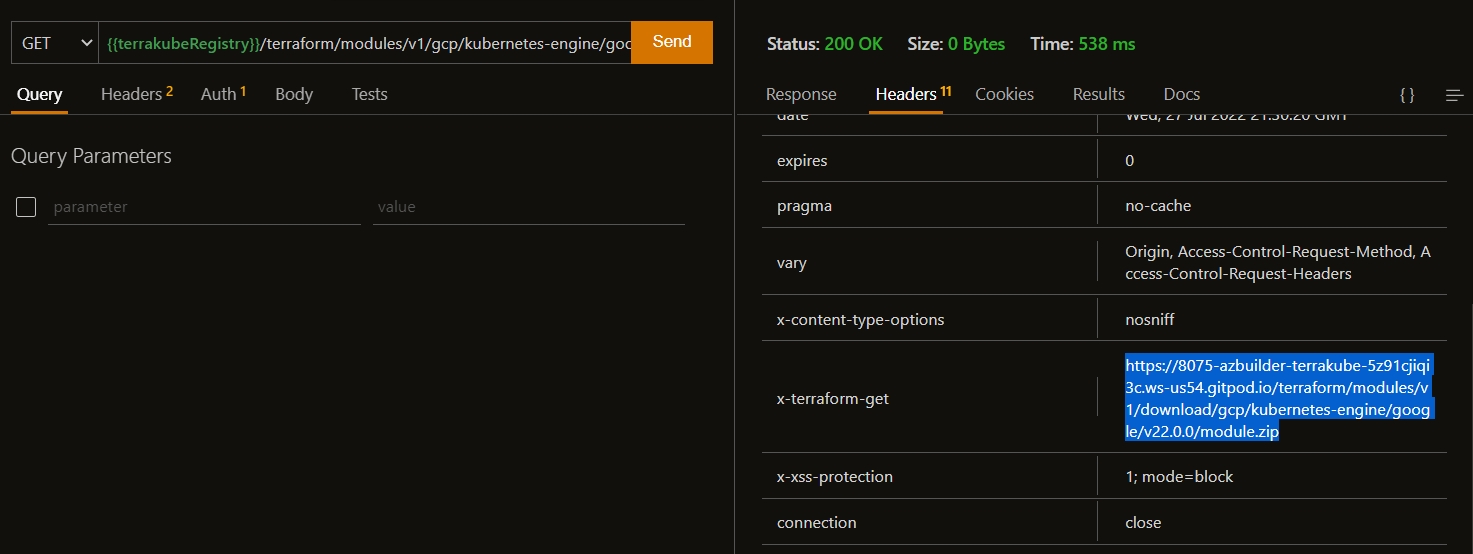

Terraform module protocol:

The first step will be to create one google storage bucket with private access

The first step will be to deploy a minio instance inside minikube in the terrakube namespace

The first step will be to create one Azure storage account with the following containers:

content

To use an H2 database with your Terrakube deployment, create a terrakube.yaml file with the following content:

The H2 database is only for testing. Each time the API pod is restarted, a new database is created.

Setting loadSampleData adds some organizations, workspaces and modules to your database by default. Keep databaseHostname, databaseName, databaseUser and databasePassword empty.

Terrakube uses two secrets internally: one to sign personal access tokens and one internal token for inter-component communication. When using the Helm deployment, you can change these values using the following keys:

Make sure to change the default values in a real kubernetes deployment.

Each secret should be 32 characters long and should be a base64-compatible string.
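One way to produce values that satisfy the length and base64 requirements is sketched below. It is not part of Terrakube; the function name `generate_secret` is hypothetical, and the sketch assumes that standard base64 output (which may contain `+` and `/`) is acceptable for these keys.

```python
import base64
import secrets


def generate_secret() -> str:
    """Return a 32-character, base64-compatible string.

    24 random bytes encode to exactly 32 base64 characters with no padding.
    """
    return base64.b64encode(secrets.token_bytes(24)).decode("ascii")


if __name__ == "__main__":
    # Hypothetical usage: paste the printed values into the security keys.
    print("patSecret:", generate_secret())
    print("internalSecret:", generate_secret())
```

Each call returns a fresh random value, so the two secrets should be generated separately rather than reused.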

You can manage Terrakube users with different authentication providers like the following

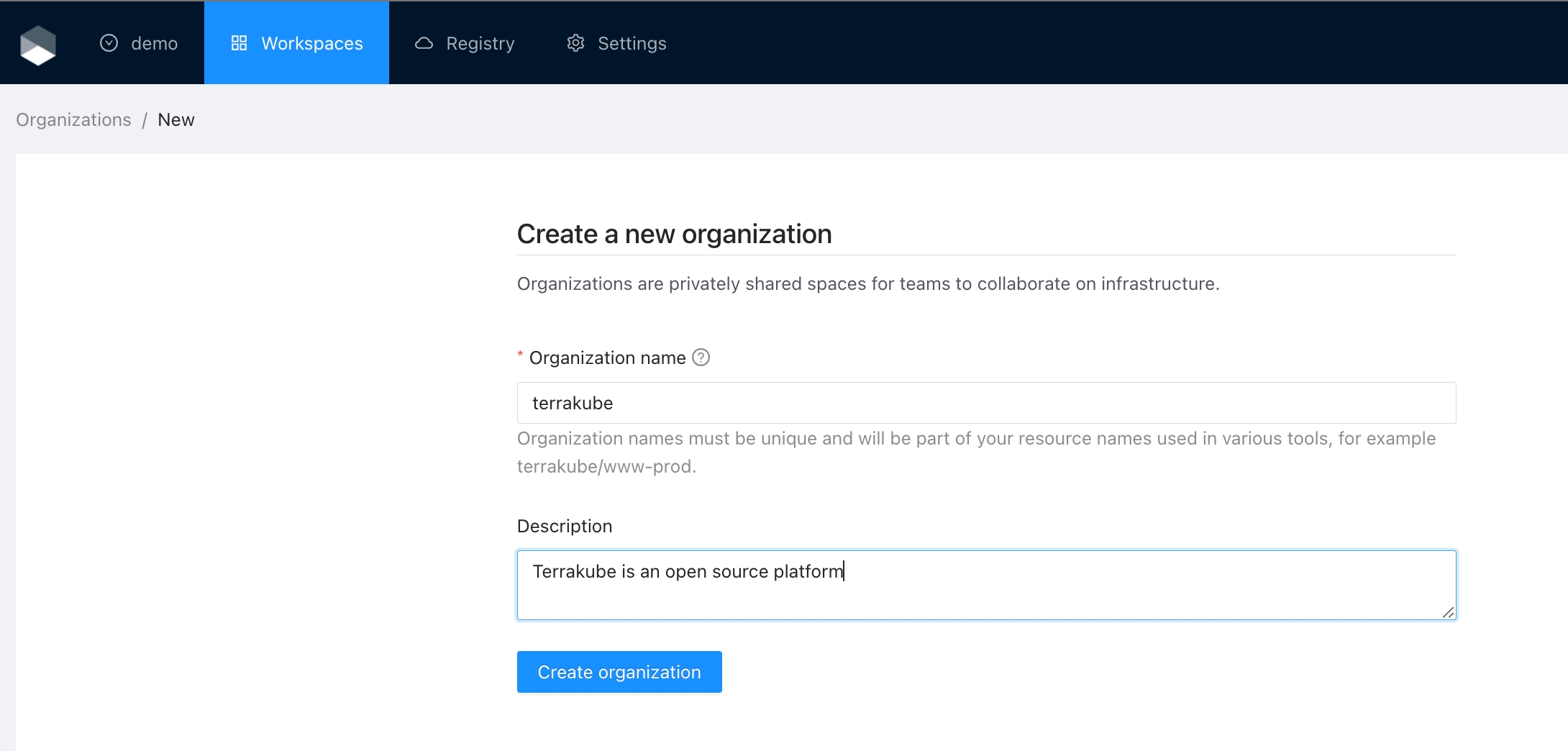

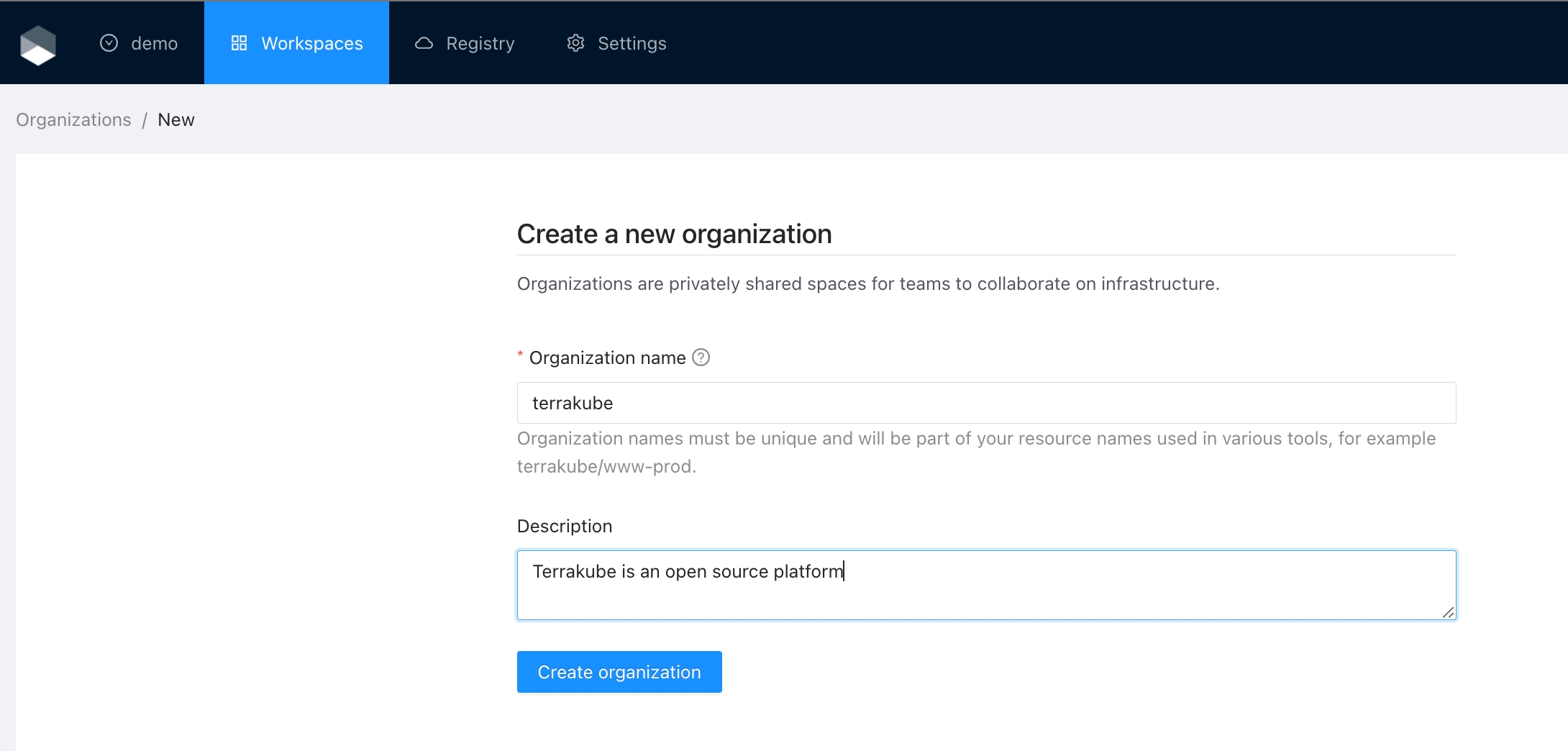

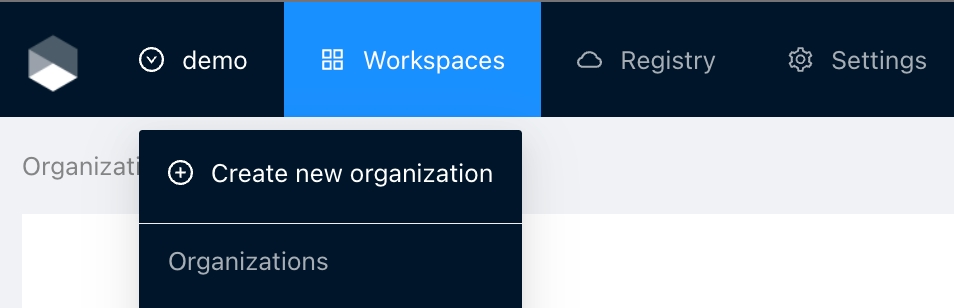

Click the organizations list on the main menu and then click the Create new organization button

Provide organization name and description and click the Create organization button

You will then be redirected to the Organization Settings page, where you can define your teams. This step is important because it lets you assign permissions to users; otherwise you won't be able to create workspaces and modules inside the organization.

The first step will be to create one s3 bucket with private access

Once the s3 bucket is created you will need to get the following:

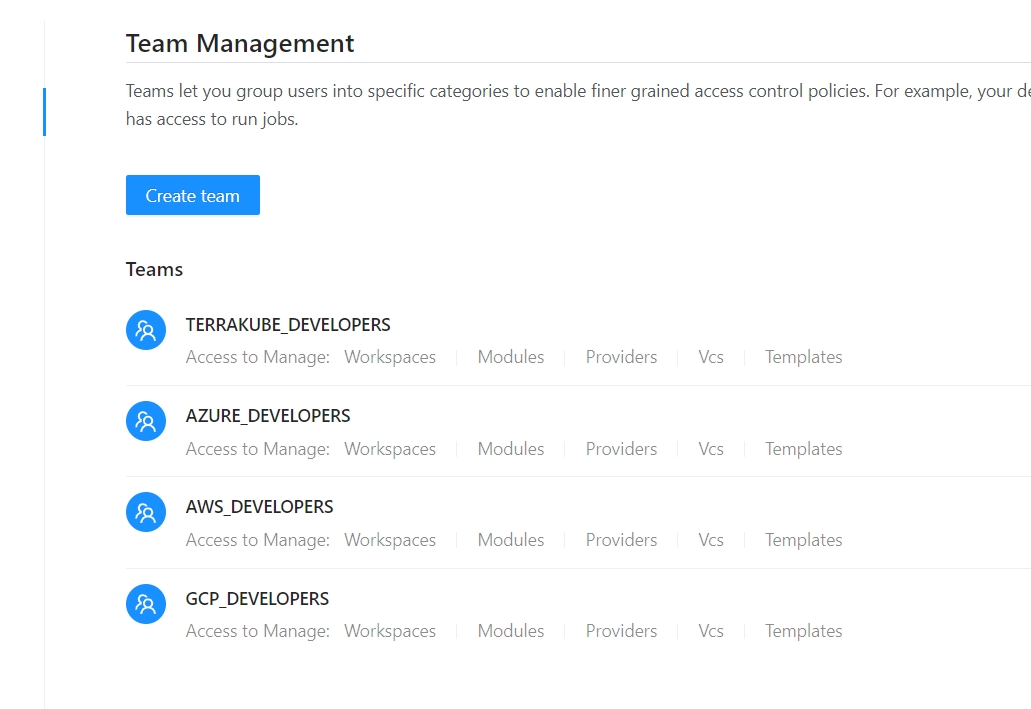

Module

Manage terraform modules inside an organization

VCS

Manage private connections to different VCS like Github, Bitbucket, Azure DevOps and Gitlab and handle SSH keys

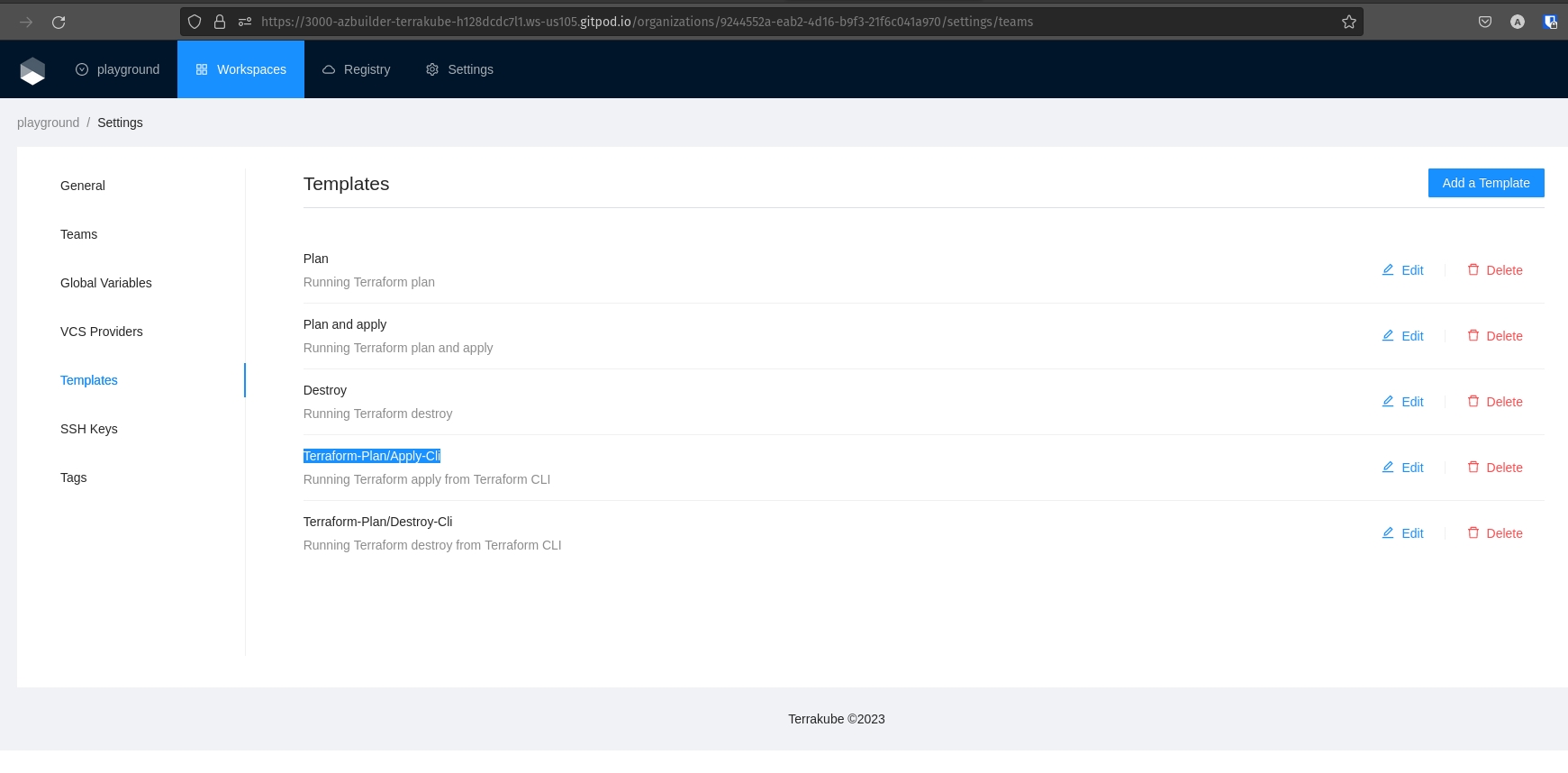

Template

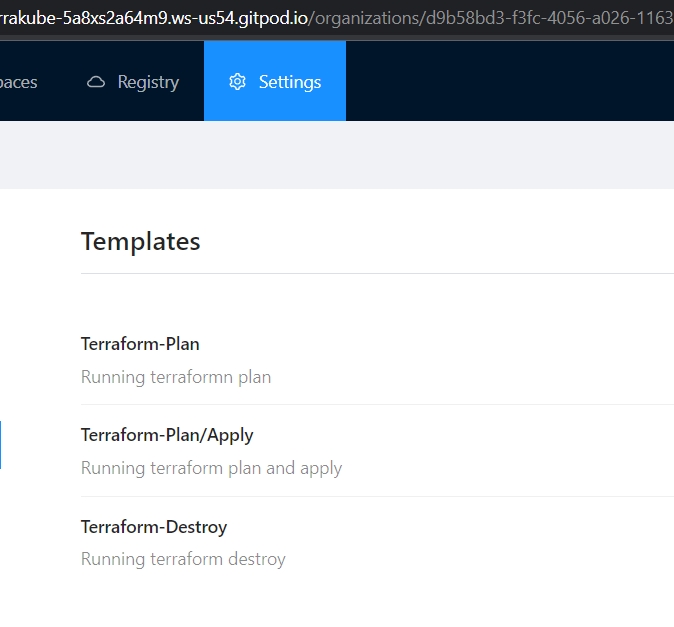

Manage the custom flows written in Terrakube Configuration Language when running any job inside the platform

Workspaces

Manage the terraform workspaces to run remote terraform operations.

Providers

Manage the terraform providers available inside the platform

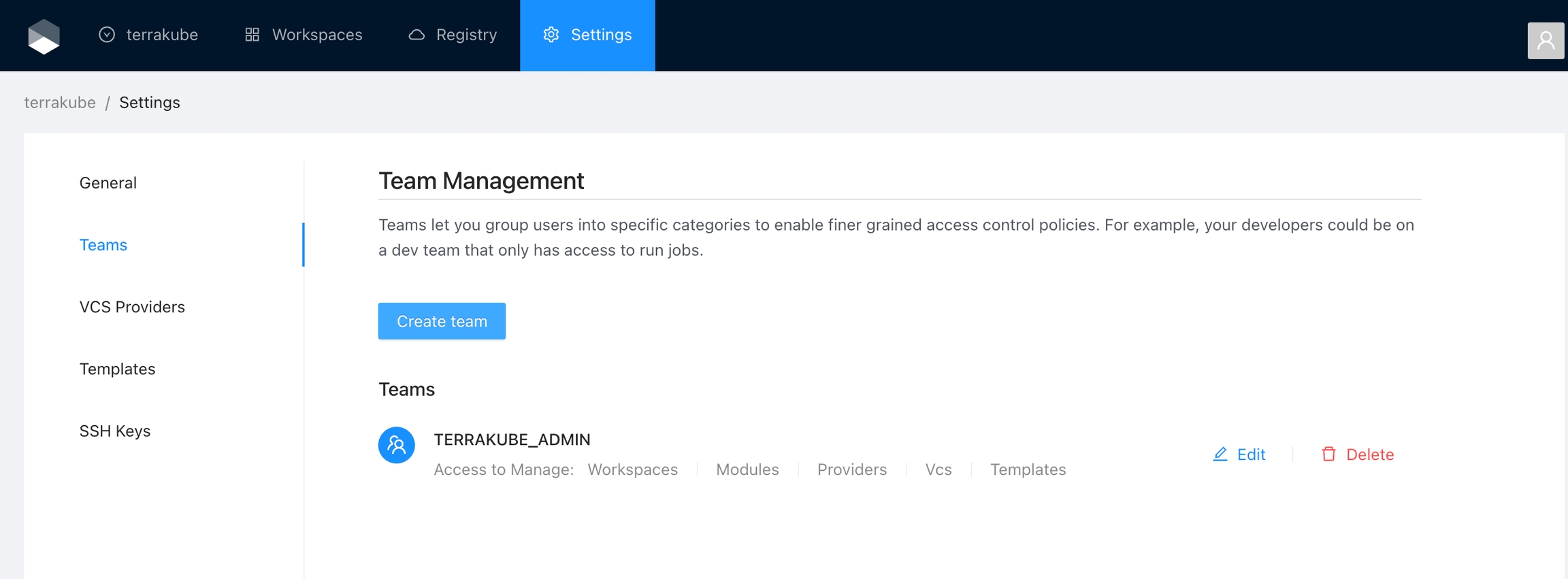

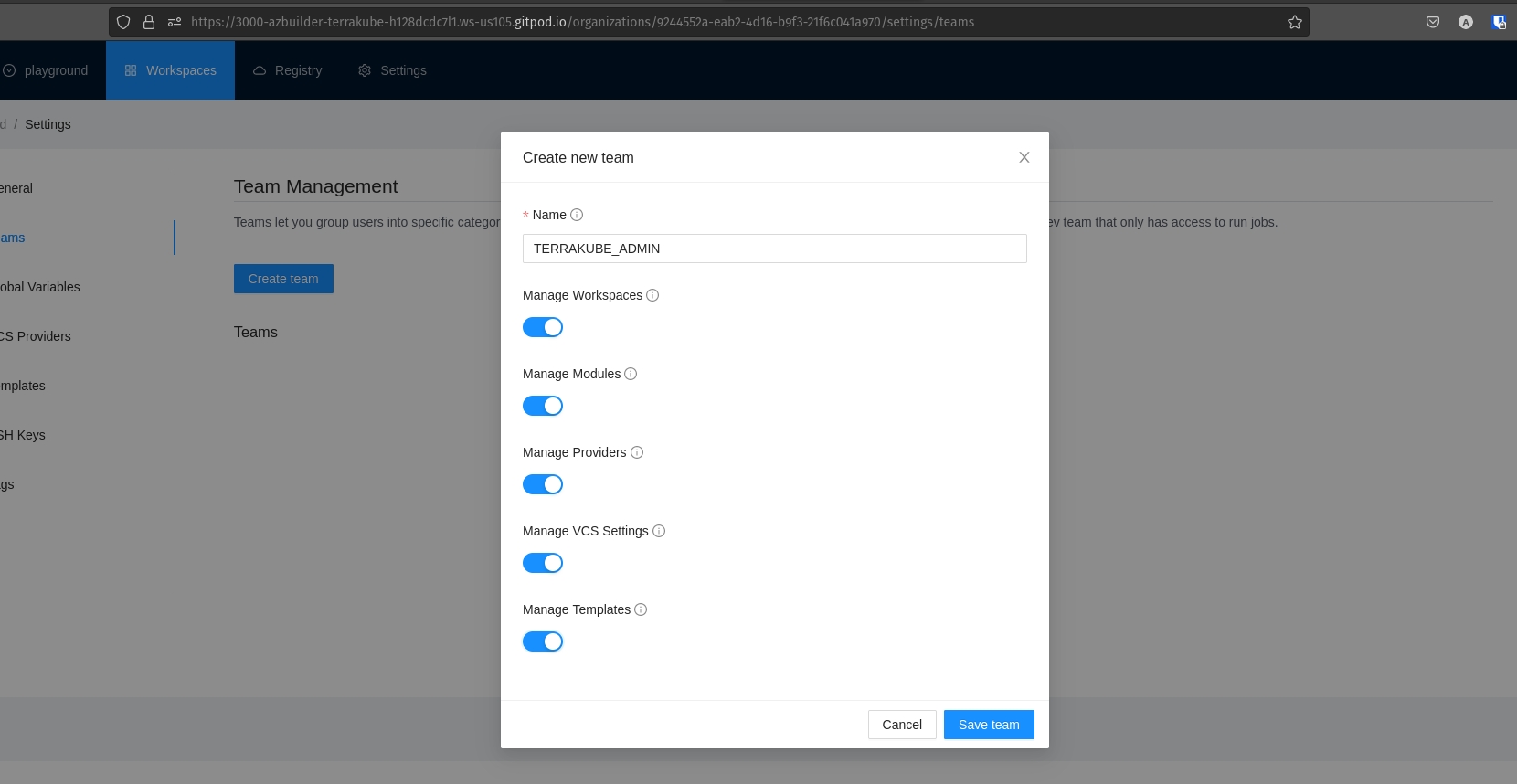

Adding a group to an organization grants access to read the content inside the organization. To manage any option such as modules, workspaces, templates, providers or VCS, a Terrakube administrator needs to grant the corresponding permission.

There is one special group inside Terrakube called TERRAKUBE_ADMIN. This is the only group that can create organizations and grant teams access to manage the different organization features. You can also customize the group name if you want to use a different one, depending on which Dex connector you use when running Terrakube.

This allows Terrakube to expose an open source terraform registry that is protected and is private by default using different Dex connectors for the authentication with different providers.

Terraform provider protocol:

This allows Terrakube to expose an open source Terraform registry where you can create your own private Terraform providers or mirror the Terraform providers available in the public HashiCorp registry.

Handle your infrastructure as code using organizations, as in closed-source tools like Terraform Enterprise and Scalr.

Easily extended by integrating with many open source tools available today for the Terraform CLI.

Support for running the platform with any RDBMS supported by Liquibase (MySQL, PostgreSQL, Oracle, SQL Server, etc).

Plugin support to extend functionality using different cloud providers and open source tools with the Terrakube Configuration Language (TCL).

Easily extend the platform using common technologies like React, Java, Spring, Elide, Liquibase, Groovy, Bash and Go.

Native integration with the most popular VCS like:

Github.

Azure DevOps

Bitbucket

Gitlab

Support for SSH key (RSA and ED25519)

Handle Personal Access Token

Extend and create custom flows when running Terraform remote operations, with custom logic like:

Approvals

Budget reviews

Security reviews

Custom logic that you can build with groovy and bash scripts

security:

patSecret: "AAAAAAAAAAAAAAAAAAAA"

internalSecret: "BBBBBBBBBBBBBBBBB"

To use MySQL with your Terrakube deployment, create a terrakube.yaml file with the following content:

## Terrakube API properties

api:

defaultDatabase: false

loadSampleData: false

properties:

databaseType: "MYSQL"

databaseHostname: "server_name.database.mysql.com"

databaseName: "database_name"

databaseUser: "database_user"

databasePassword: "database_password"

Now you can install terrakube using the command.

helm install --values terrakube.yaml terrakube terrakube-repo/terrakube -n terrakube

To use SQL Azure with your Terrakube deployment, create a terrakube.yaml file with the following content:

## Terrakube API properties

api:

defaultDatabase: false

loadSampleData: false

properties:

databaseType: "SQL_AZURE"

databaseHostname: "server_name.database.windows.net"

databaseName: "database_name"

databaseUser: "database_user"

databasePassword: "database_password"

databaseSchema: "dbo"

Now you can install terrakube using the command.

helm install --values terrakube.yaml terrakube terrakube-repo/terrakube -n terrakube

project id

bucket name

JSON GCP credentials file with access to the storage bucket

Now you have all the information we will need to create a terrakube.yaml for our terrakube deployment with the following content:

Now you can install terrakube using the command:

## Terrakube Storage

storage:

defaultStorage: false

gcp:

projectId: "sample project"

bucketName: "sampledata"

credentials: |

{

"type": "service_account",

"project_id": "XXXXXXX",

......

}

helm install --values terrakube.yaml terrakube terrakube-repo/terrakube -n terrakube

Once the minio storage is installed, let's get the service name.

The service name for the minio storage should be "miniostorage"

Once minio is installed with a bucket you will need to get the following:

access key

secret key

bucket name

endpoint (http://miniostorage:9000)

Now you have all the information we will need to create a terrakube.yaml for our terrakube deployment with the following content:

Now you can install terrakube using the command:

auth:

rootUser: "admin"

rootPassword: "superadmin"

defaultBuckets: "terrakube"

helm install --values minio-setup.yaml miniostorage bitnami/minio -n terrakube

tfoutput

tfstate

Once the storage account is created you will have to get the "Access Key"

Now you have all the information we will need to create a terrakube.yaml for our terrakube deployment with the following content:

Now you can install terrakube using the command

## Terrakube Storage

storage:

defaultStorage: false

azure:

storageAccountName: "<<STORAGE ACCOUNT NAME>>"

storageAccountResourceGroup: "<< RESOURCE GROUP >>"

storageAccountAccessKey: "<< STORAGE ACCOUNT ACCESS KEY >>"

## Terrakube API properties

api:

defaultDatabase: false

loadSampleData: true

properties:

databaseType: "H2"

databaseHostname: ""

databaseName: ""

databaseUser: ""

databasePassword: ""

helm install --values terrakube.yaml terrakube terrakube-repo/terrakube -n terrakube

access key

secret key

bucket name

region

Now you have all the information we will need to create a terrakube.yaml for our terrakube deployment with the following content:

Now you can install terrakube using the command:

## Terrakube Storage

storage:

defaultStorage: false

aws:

accessKey: "rqerqw"

secretKey: "sadfasfdq"

bucketName: "qerqw"

region: "us-east-1"

helm install --values terrakube.yaml terrakube terrakube-repo/terrakube -n terrakube

kubectl get svc -o wide -n terrakube

## Terrakube Storage

storage:

defaultStorage: false

minio:

accessKey: "admin"

secretKey: "superadmin"

bucketName: "terrakube"

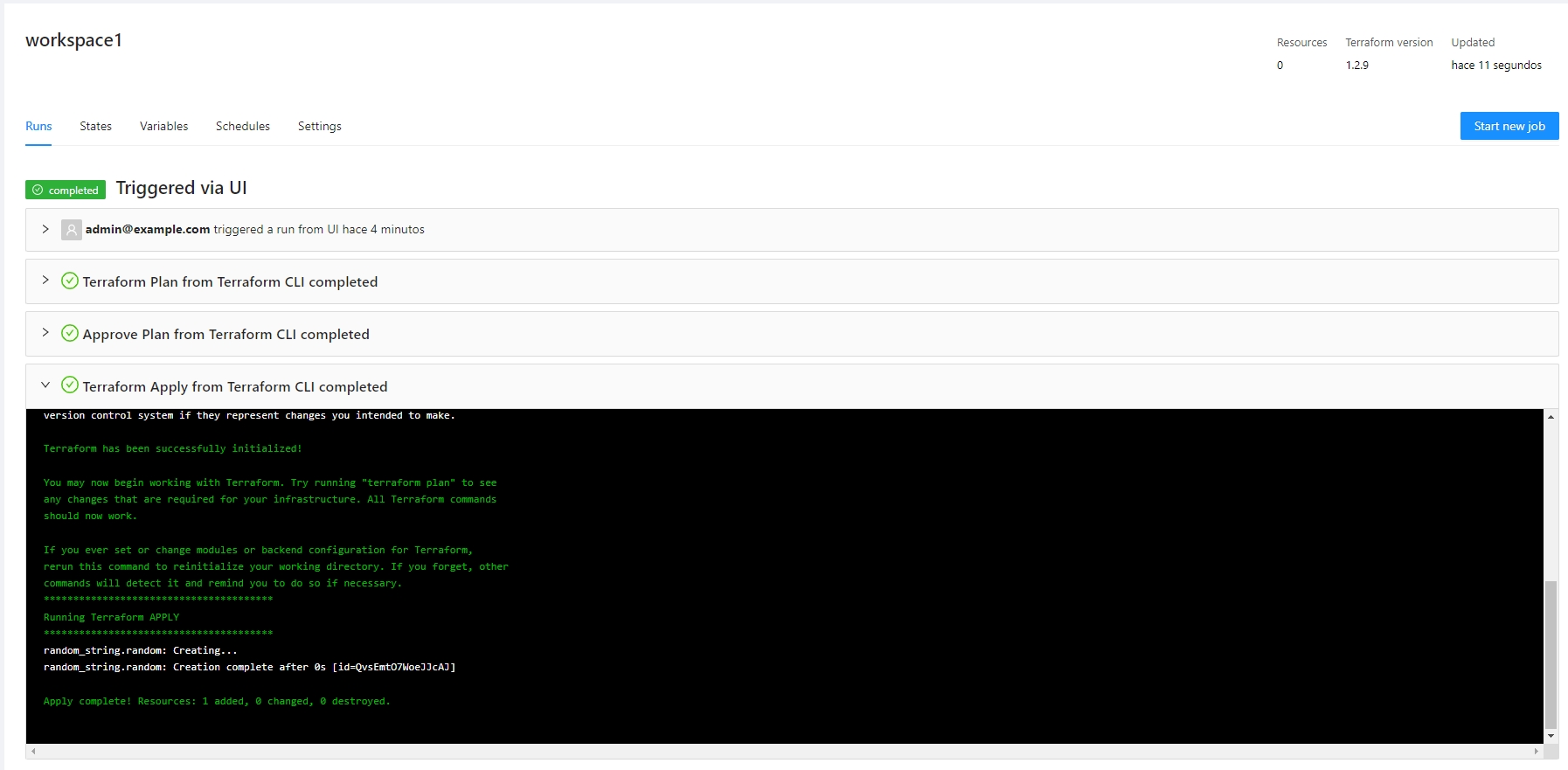

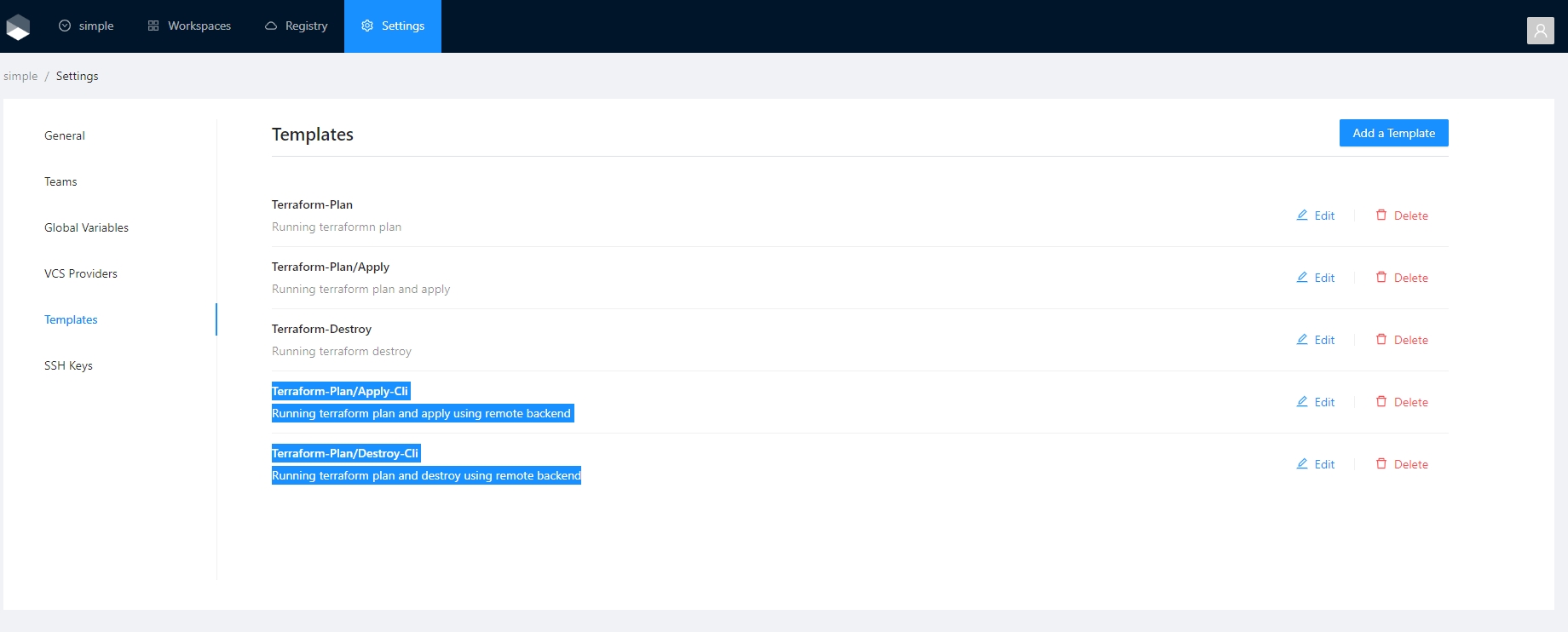

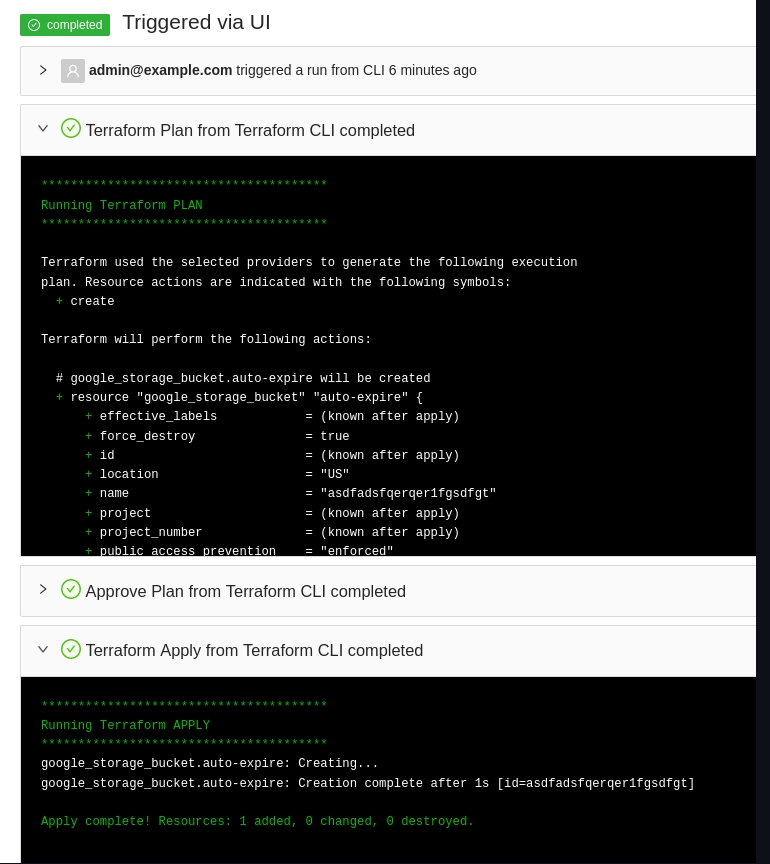

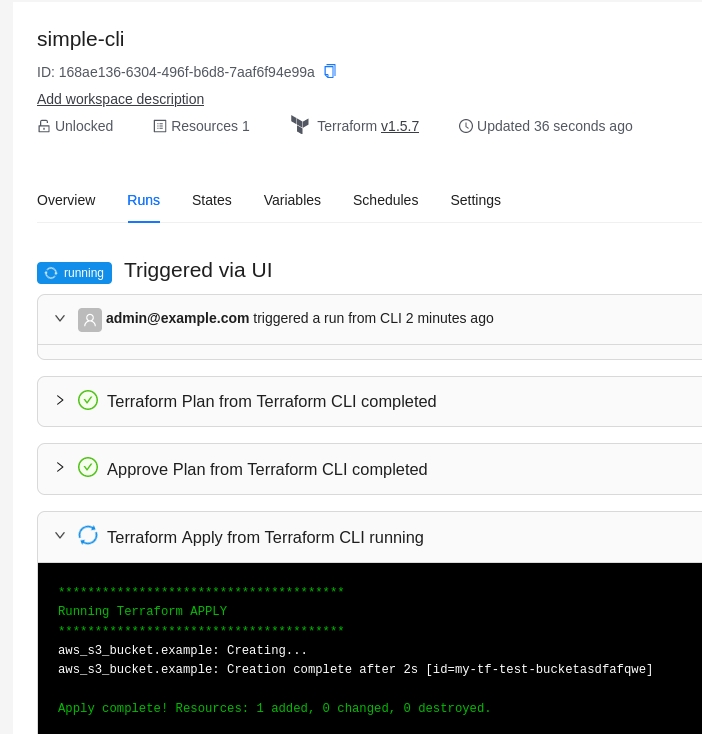

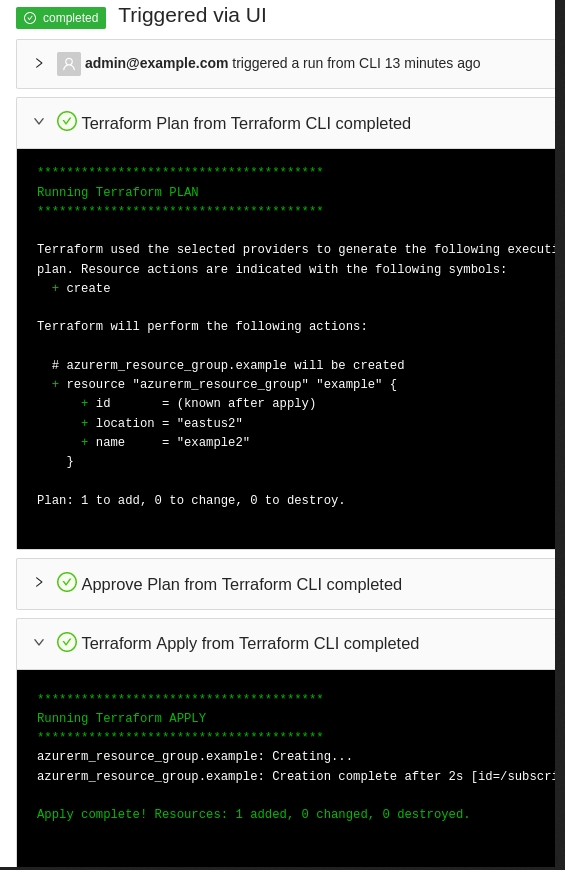

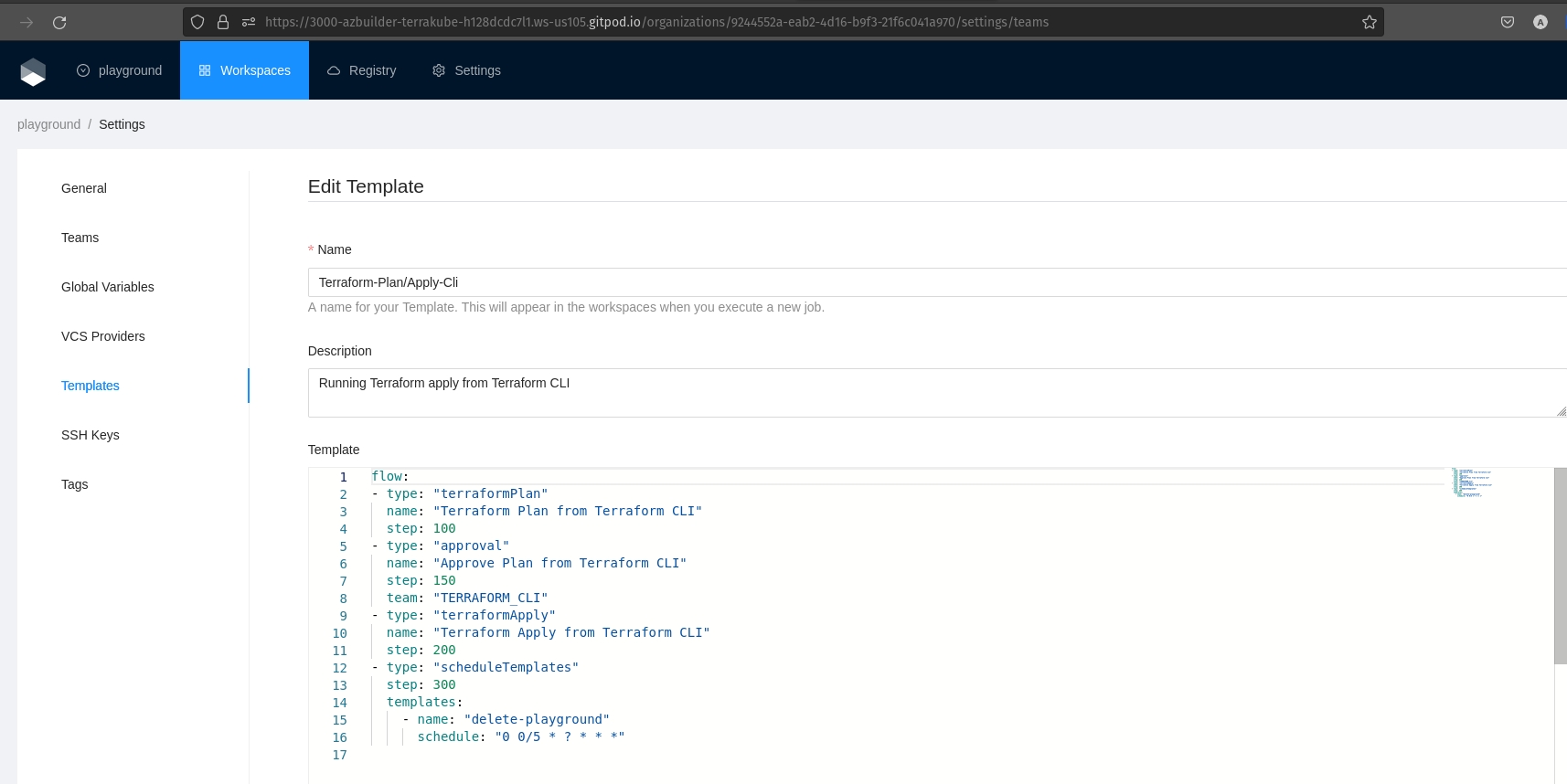

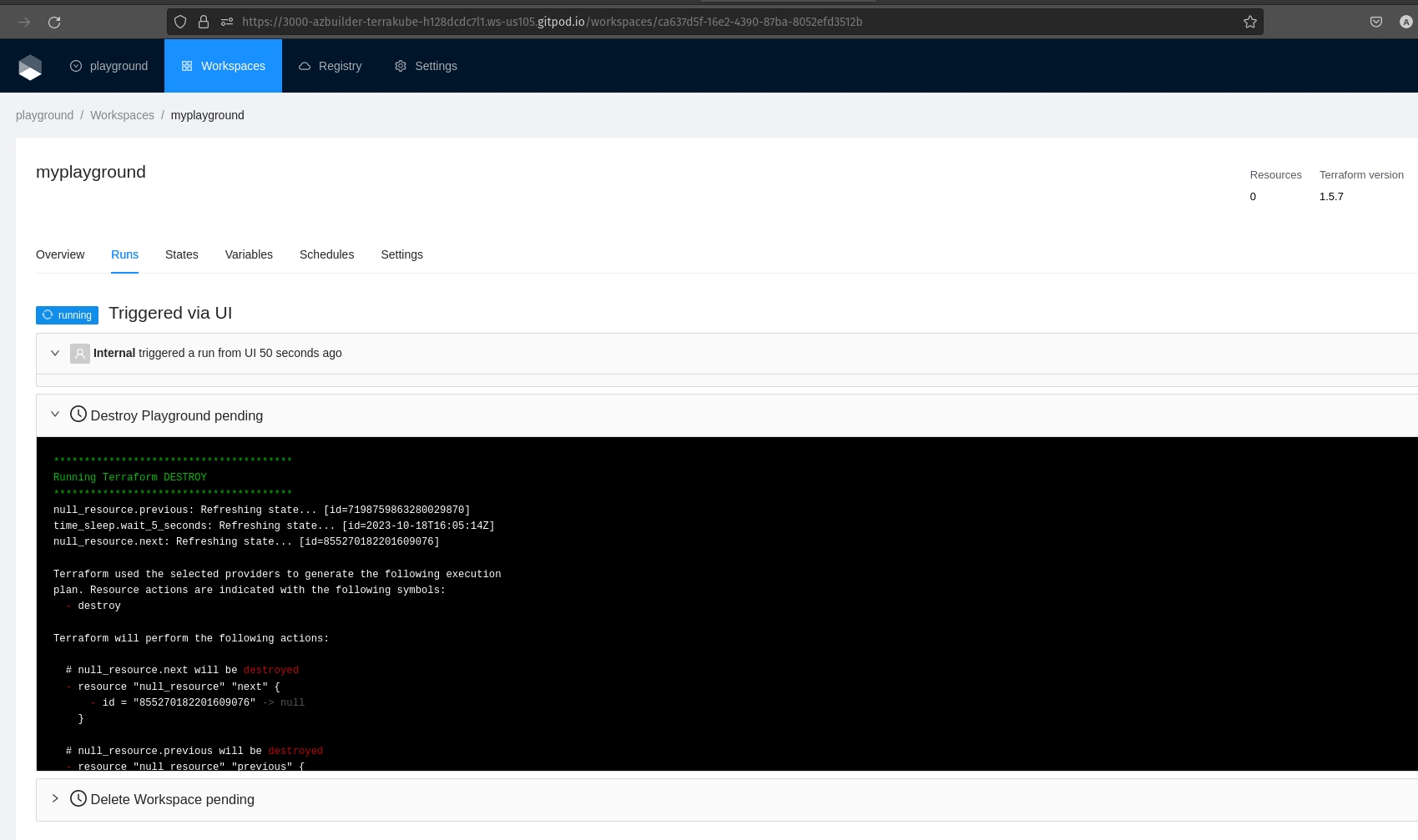

endpoint: "http://miniostorage:9000"

helm install --values terrakube.yaml terrakube terrakube-repo/terrakube -n terrakube

Will be used when you execute terraform apply using the Terraform CLI

Will be used when you execute terraform destroy using the Terraform CLI

These templates can be updated if needed, but for the Terraform remote state backend to work properly the step numbers and the template names should not be changed. So if you delete or modify these templates

flow:

- type: "terraformPlan"

name: "Terraform Plan from Terraform CLI"

step: 100

- type: "approval"

name: "Approve Plan from Terraform CLI"

step: 150

team: "TERRAFORM_CLI"

- type: "terraformApply"

name: "Terraform Apply from Terraform CLI"

step: 200

Images for Terrakube components can be found in the following links:

Terrakube API

Terrakube Registry

Terrakube Executor

Terrakube UI

Default Images are based on Ubuntu Jammy (linux/amd64)

The docker images are generated using cloud native buildpacks with the maven plugin, more information can be found in these links:

From Terrakube 2.17.0 we generate 3 additional Docker images for the API, Registry and Executor components. They are based on Alpaquita Linux, which is designed and optimized for Spring Boot applications.

The Alpaquita Linux images for the Executor component do not include Git, so Terraform modules that use remote sources like the following won't work:

Update the /etc/hosts file adding the following entries:

Terrakube will be available in the following URL:

http://terrakube-ui:3000

Username: [email protected]

Password: admin

You can also test Terrakube using the Open Telemetry example setup to see how the components are working internally and check some internal logs

Terrakube allows one or multiple agents to run jobs. You can have as many agents as you want for a single organization.

To use this feature, you could deploy a single executor component using the following values:

The above values assume that we have deployed Terrakube using the domain "minikube.net" inside a namespace called "terrakube".

Now that we have our values.yaml, we can use the following Helm command:

Now we have a single executor component ready to accept jobs. We could also change the number of replicas to have multiple replicas acting as a pool of agents:

Terrakube will support terraform cli custom builds or custom cli mirrors, the only requirement is to expose an endpoint with the following structure:

Example Endpoint:

https://eov1ys4sxa1bfy9.m.pipedream.net/

{

"name":"terraform",

"versions":{

"1.3.9":{

"builds":[

{

"arch":"amd64",

"filename":"terraform_1.3.9_linux_amd64.zip",

"name":"terraform",

"os":"linux",

"url":"https://releases.hashicorp.com/terraform/1.3.9/terraform_1.3.9_linux_amd64.zip",

"version":"1.3.9"

}

],

"name":"terraform",

"shasums":"terraform_1.3.9_SHA256SUMS",

"shasums_signature":"terraform_1.3.9_SHA256SUMS.sig",

"shasums_signatures":[

"terraform_1.3.9_SHA256SUMS.72D7468F.sig",

"terraform_1.3.9_SHA256SUMS.sig"

],

"version":"1.3.9"

}

}

}

This is useful when network restrictions prevent your private Kubernetes cluster from fetching the release information directly.
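Before pointing Terrakube at a custom releases endpoint, it can help to check that the JSON it serves has the structure shown above. The following is a minimal sketch, not Terrakube code: the helper name `linux_amd64_builds` is hypothetical, and filtering on linux/amd64 is an assumption based on the platform of the default images.

```python
import json


def linux_amd64_builds(index_json: str) -> dict:
    """Map each version to the URL of its linux/amd64 build, if it has one."""
    index = json.loads(index_json)
    urls = {}
    for version, release in index.get("versions", {}).items():
        for build in release.get("builds", []):
            if build.get("os") == "linux" and build.get("arch") == "amd64":
                urls[version] = build["url"]
    return urls


# Trimmed copy of the example endpoint response shown in this document.
SAMPLE = """
{
  "name": "terraform",
  "versions": {
    "1.3.9": {
      "builds": [
        {
          "arch": "amd64",
          "filename": "terraform_1.3.9_linux_amd64.zip",
          "name": "terraform",
          "os": "linux",
          "url": "https://releases.hashicorp.com/terraform/1.3.9/terraform_1.3.9_linux_amd64.zip",
          "version": "1.3.9"
        }
      ],
      "name": "terraform",
      "version": "1.3.9"
    }
  }
}
"""

if __name__ == "__main__":
    for version, url in linux_amd64_builds(SAMPLE).items():
        print(version, url)
```

An empty result for a version you expect to use would suggest the endpoint is missing a `builds` entry for that platform.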

To support custom Terraform CLI releases when using the Helm chart, use the following:

The default ingress configuration for Terrakube can be customized using a terrakube.yaml like the following:

dex:

config:

issuer: http://terrakube-api.minikube.net/dex ## CHANGE THIS DOMAIN

#.....

#PUT ALL THE REST OF THE DEX CONFIG AND UPDATE THE URL WITH THE NEW DOMAIN

#.....

## Ingress properties

ingress:

useTls: false

ui:

enabled: true

domain: "terrakube-ui.minikube.net" ## CHANGE THIS DOMAIN

path: "/(.*)"

pathType: "Prefix"

annotations:

kubernetes.io/ingress.class: nginx

nginx.ingress.kubernetes.io/use-regex: "true"

api:

enabled: true

domain: "terrakube-api.minikube.net" ## CHANGE THIS DOMAIN

path: "/(.*)"

pathType: "Prefix"

annotations:

kubernetes.io/ingress.class: nginx

nginx.ingress.kubernetes.io/use-regex: "true"

nginx.ingress.kubernetes.io/configuration-snippet: "proxy_set_header Authorization $http_authorization;"

registry:

enabled: true

domain: "terrakube-reg.minikube.net" ## Change to your customer domain

path: "/(.*)"

pathType: "Prefix"

annotations:

kubernetes.io/ingress.class: nginx

nginx.ingress.kubernetes.io/use-regex: "true"

nginx.ingress.kubernetes.io/configuration-snippet: "proxy_set_header Authorization $http_authorization;"

dex:

enabled: true

path: "/dex/(.*)"

pathType: "Prefix"

annotations:

kubernetes.io/ingress.class: nginx

nginx.ingress.kubernetes.io/use-regex: "true"

nginx.ingress.kubernetes.io/configuration-snippet: "proxy_set_header Authorization $http_authorization;"

The above example uses a simple nginx ingress.

Now you can install terrakube using the command

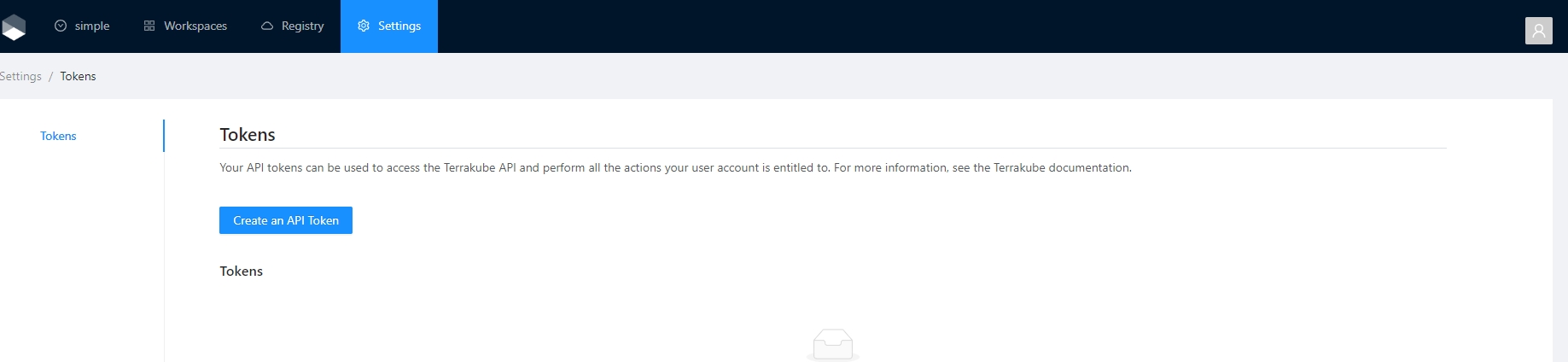

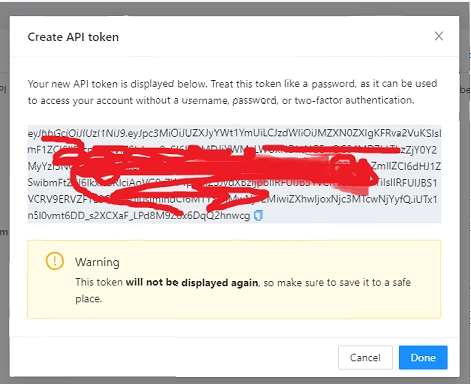

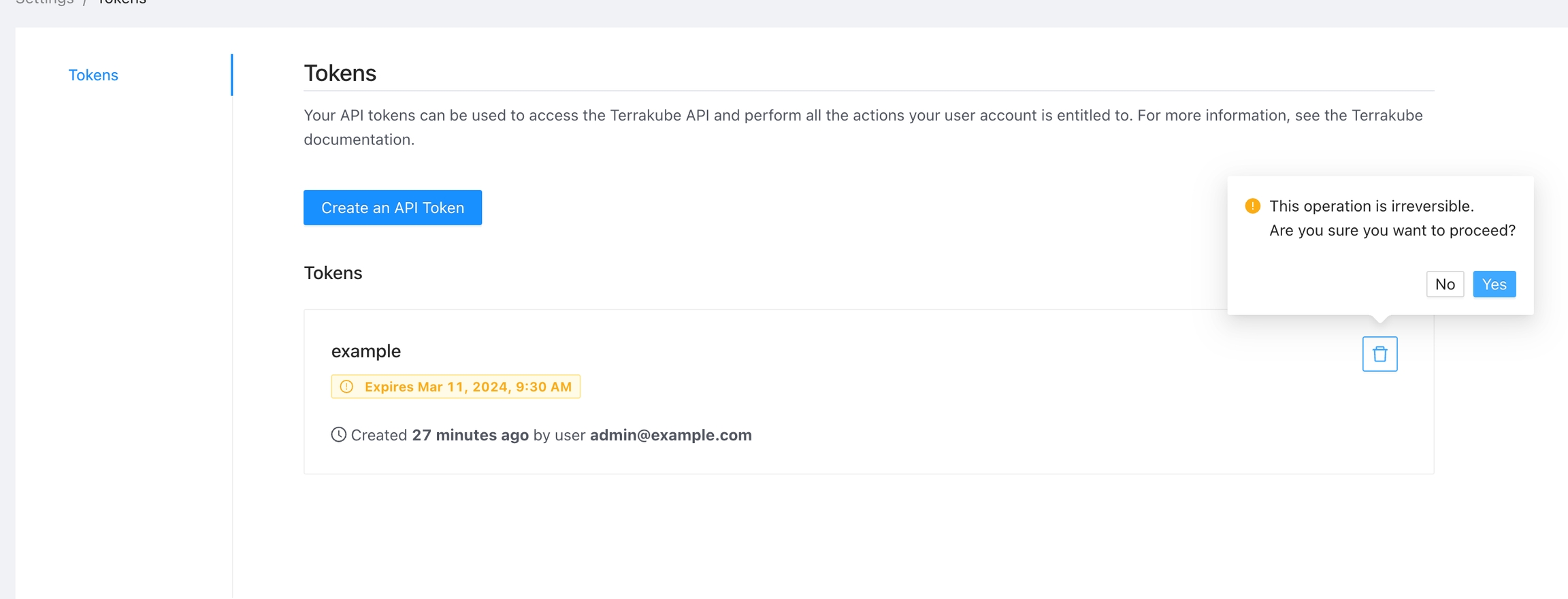

Terrakube has two kinds of API tokens: user and team. Each token type has a different access level and creation process, which are explained below.

API tokens are only shown once when you create them, and then they are hidden. You have to make a new token if you lose the old one.

API tokens may belong directly to a user. User tokens inherit permissions from the user they are associated with.

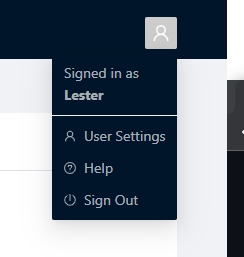

User API tokens can be generated inside the User Settings.

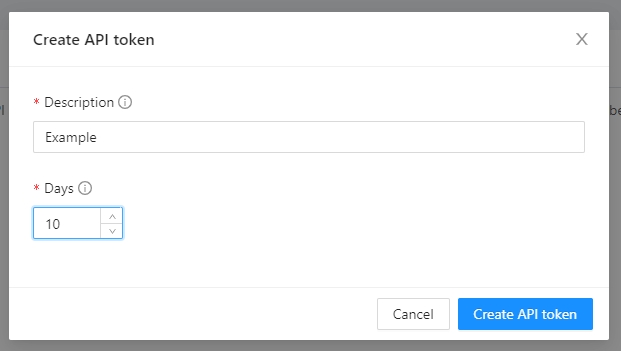

Click the Generate an API token button

Add a short description for the token and set its duration

The new token will be shown, and you can copy it to start calling the Terrakube API using Postman or some other tool.

To delete an API token, navigate to the list of tokens, click the Delete button next to the token you wish to remove, and confirm the deletion.

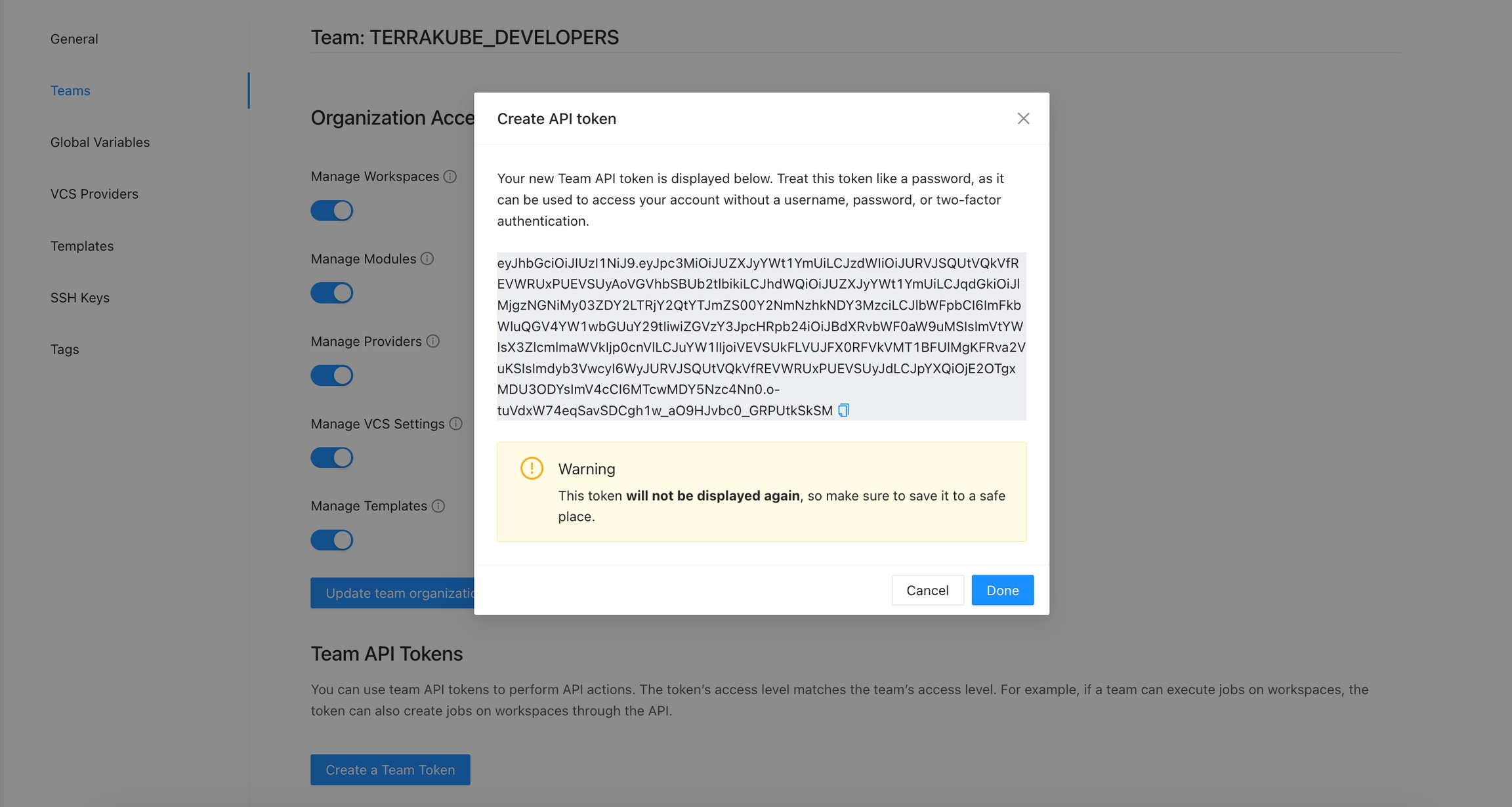

API tokens may belong to a specific team. Team API tokens allow access to Terrakube without being tied to any specific user.

A team token can only be generated if you are a member of the specified team.

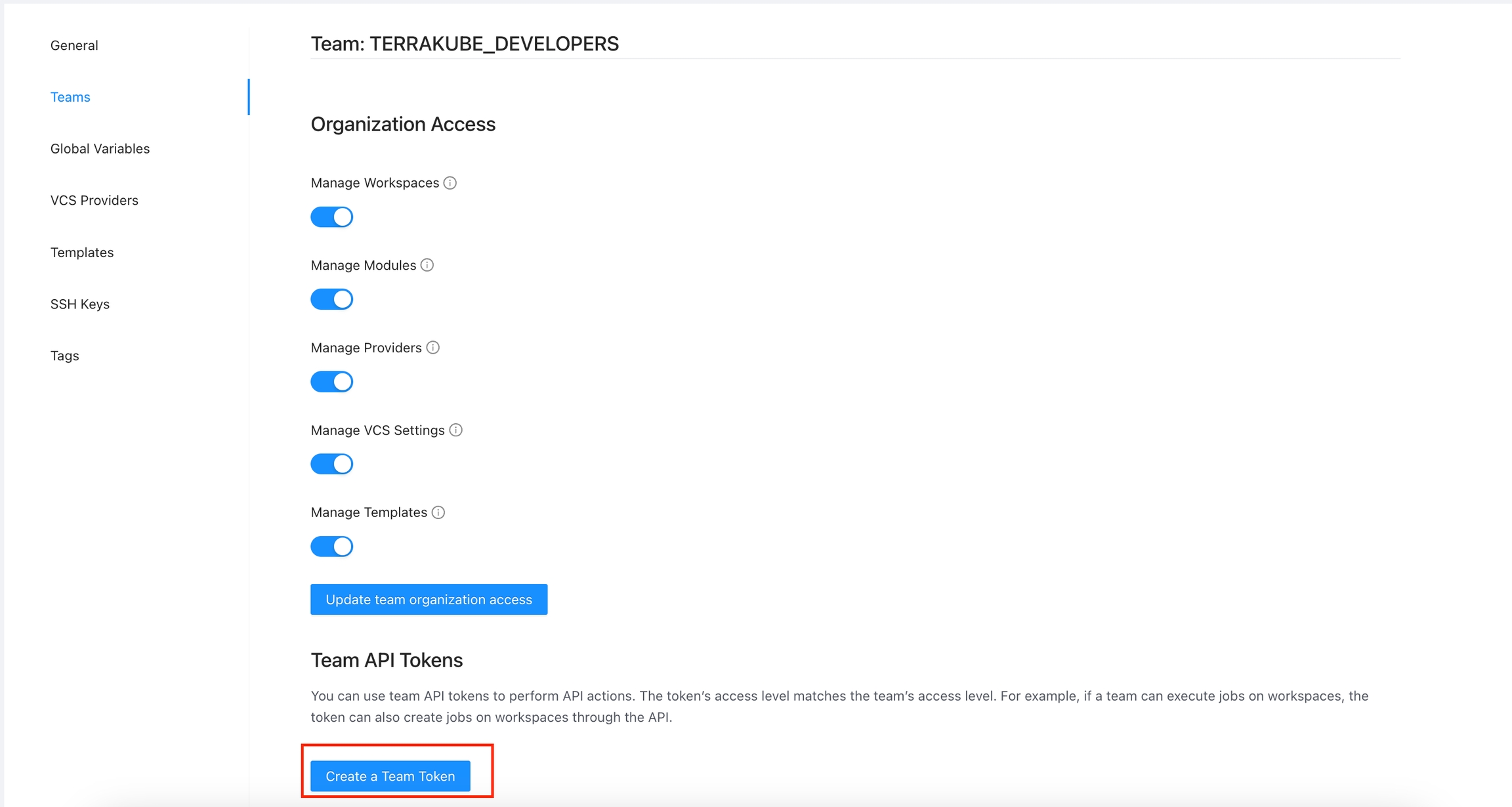

To manage the API token for a team, go to Settings > Teams > Edit button on the desired team.

Click the Create a Team Token button in the Team API Tokens section.

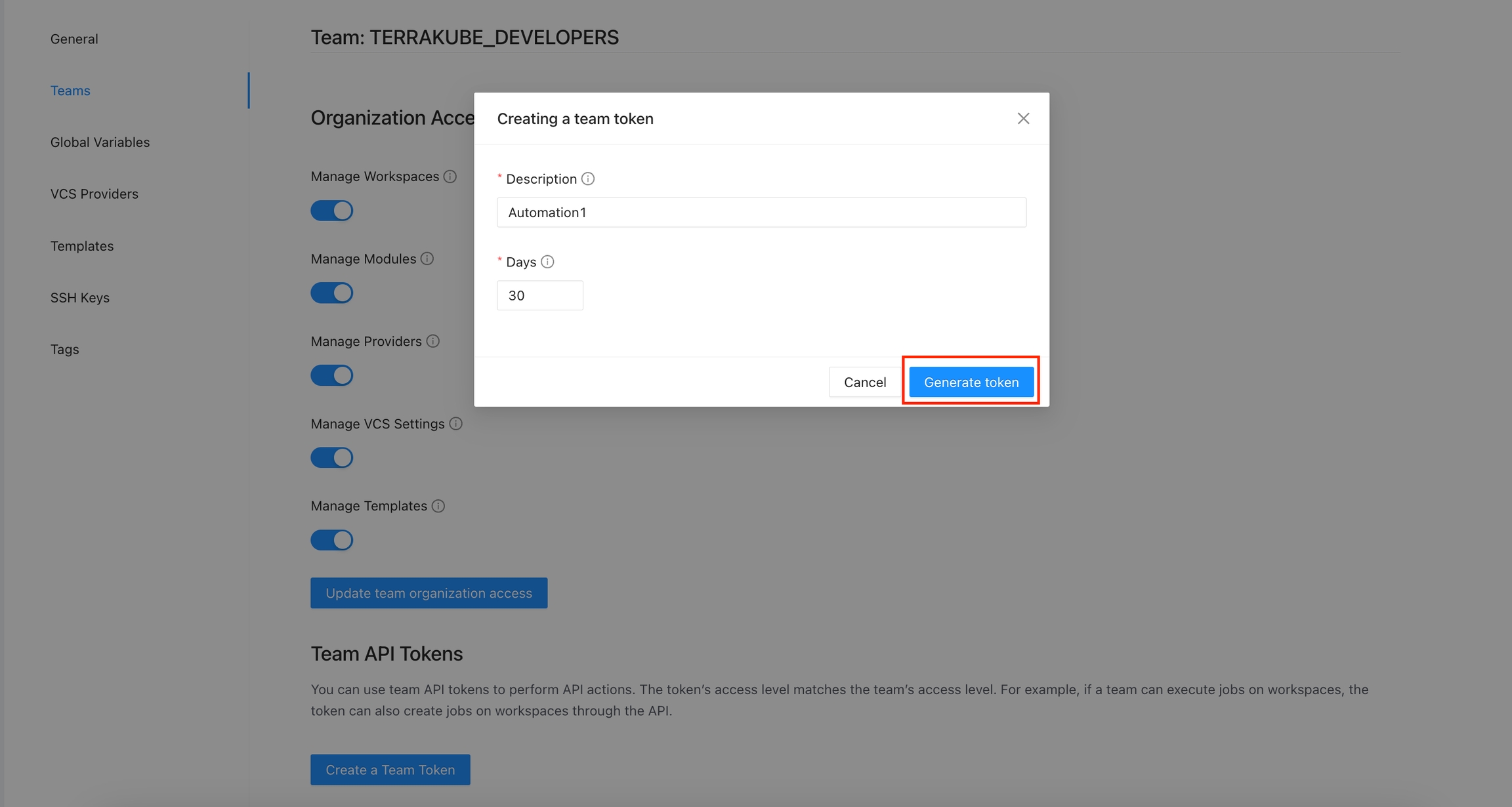

Add the token description and duration and click the Generate token button

The new token will be shown, and you can copy it to start calling the Terrakube API using Postman or some other tool.

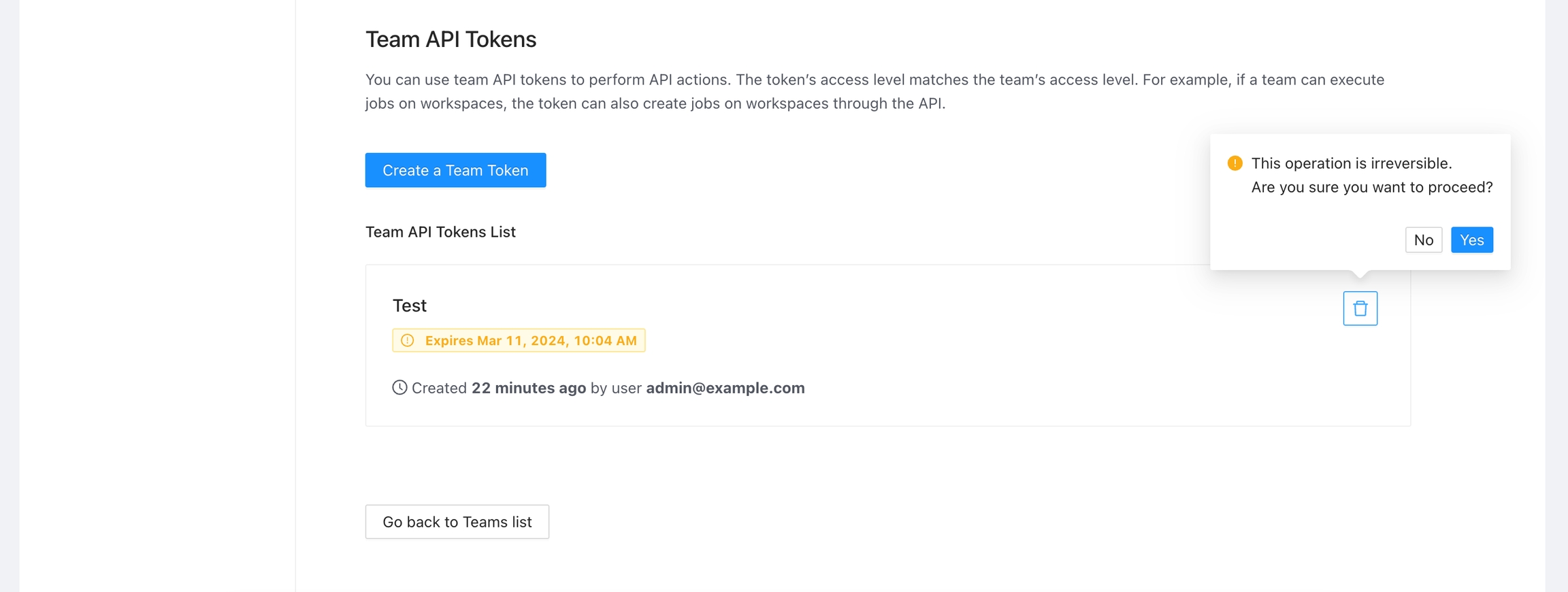

To delete an API token, navigate to the list of tokens, click the Delete button next to the token you wish to remove, and confirm the deletion.

To use a PostgreSQL with your Terrakube deployment create a terrakube.yaml file with the following content:

## Terrakube API properties

api:

defaultDatabase: false

loadSampleData: false

properties:

databaseType: "POSTGRESQL"

databaseHostname: "server_name.postgres.database.com"

databaseName: "database_name"

databaseUser: "database_user"

databasePassword: "database_password"

databaseSslMode: "disable"

databasePort: "5432"

Now you can install terrakube using the command.

PostgreSQL SSL mode can be configured by adding the databaseSslMode parameter. The default value is "disable", and it accepts the following values: disable, allow, prefer, require, verify-ca, verify-full. This feature is supported from Terrakube 2.15.0. Reference:

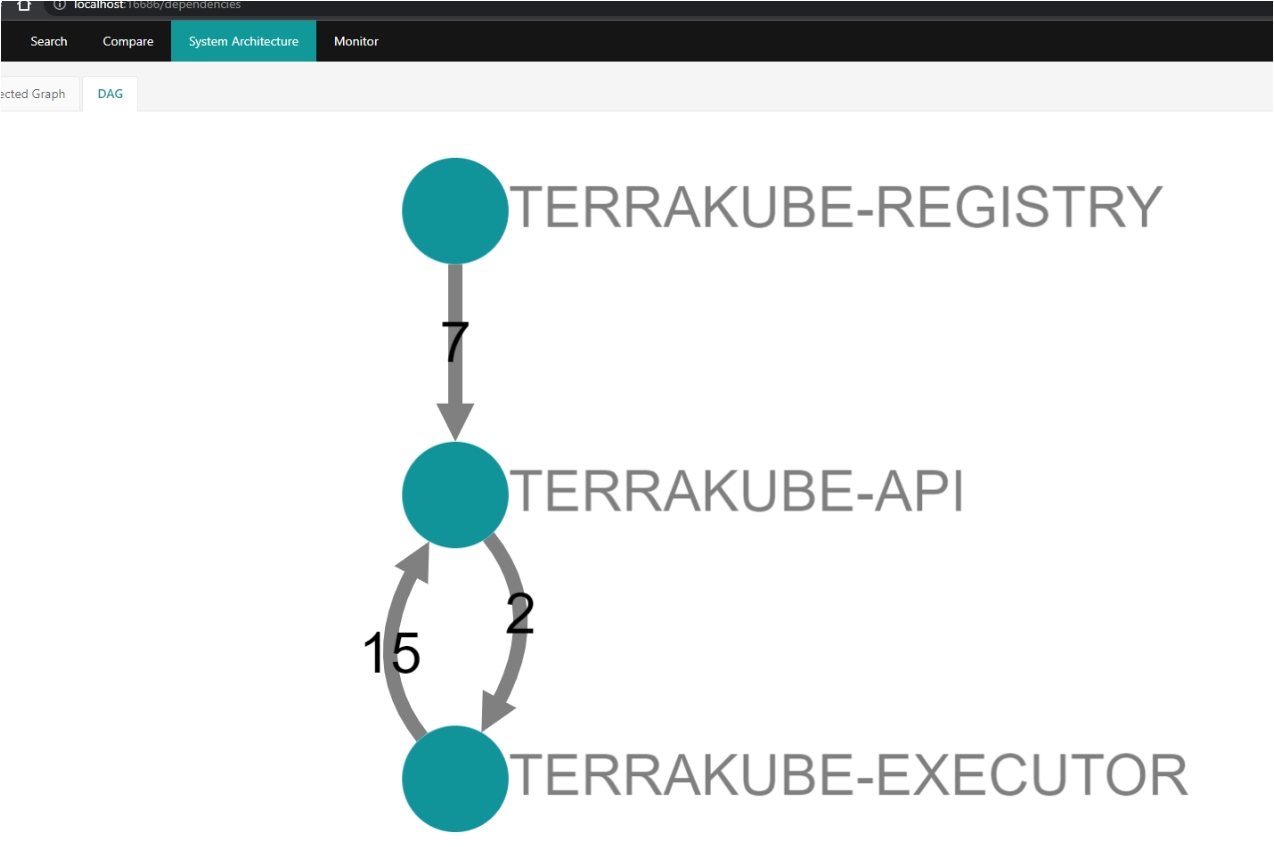

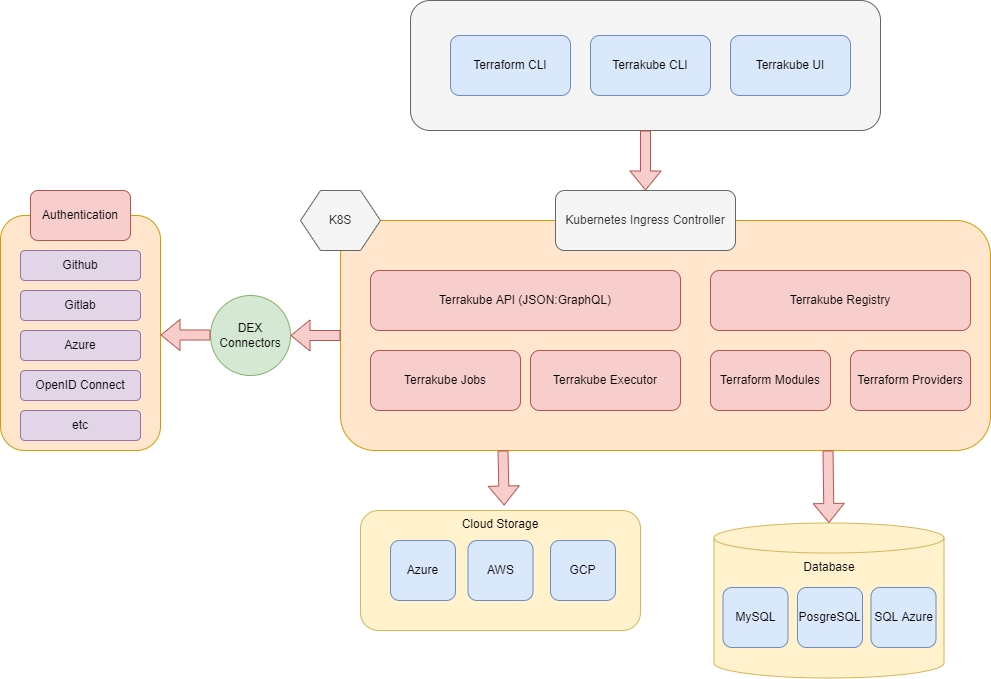

This is the high level architecture of the platform

Component descriptions:

Terrakube API:

Expose a JSON:API or GraphQL API providing endpoints to handle:

flow:

- type: "terraformPlanDestroy"

name: "Terraform Plan Destroy from Terraform CLI"

step: 100

- type: "approval"

name: "Approve Plan from Terraform CLI"

step: 150

team: "TERRAFORM_CLI"

- type: "terraformApply"

name: "Terraform Apply from Terraform CLI"

step: 200

127.0.0.1 terrakube-api

127.0.0.1 terrakube-ui

127.0.0.1 terrakube-executor

127.0.0.1 terrakube-dex

127.0.0.1 terrakube-registry

git clone https://github.com/AzBuilder/terrakube.git

cd terrakube/docker-compose

docker-compose up -d

# Executor should be enabled but we need to customize the apiServiceUrl

executor:

enabled: true

apiServiceUrl: "http://terrakube-api-service.terrakube:8080" ## The API is in another namespace called "terrakube"

# We need to disable the default openLdap but we need to provide the internal secret

# so the executor can authenticate with the API and the Registry

security:

useOpenLDAP: false

internalSecret: "AxxPdgpCi72f8WhMXCTGhtfMRp6AuBfj"

# We need to disable dex in this deployment

dex:

enabled: false

# We need to disable the default MINIO storage and set some custom values

# in this example we will be deploying as if using an external MINIO

# (other backend storage could be used too)

storage:

defaultStorage: false

minio:

accessKey: "admin"

secretKey: "superadmin"

bucketName: "terrakube"

endpoint: "http://terrakube-minio.terrakube:9000" ## MINIO is in another namespace called "terrakube"

# We need to disable API, the default redis and default postgresql database

# But we need to provide some properties like the redis connection

api:

enabled: false

defaultRedis: false

defaultDatabase: false

properties:

redisHostname: "terrakube-redis-master.terrakube" ## REDIS is in another namespace called "terrakube"

redisPassword: "7p9iWVeRV4S944"

# We need to disable registry deployment

registry:

enabled: false

# We need to disable ui deployment

ui:

enabled: false

# We need to disable the ingress configuration

# but we need to specify the api and registry URL

ingress:

useTls: false

includeTlsHosts: false

ui:

enabled: false

api:

enabled: false

domain: "terrakube-api.minikube.net"

registry:

enabled: false

domain: "terrakube-reg.minikube.net"

dex:

enabled: false

In progress

api:

version: "2.12.0"

terraformReleasesUrl: "https://eov1ys4sxa1bfy9.m.pipedream.net/"

executor:

version: "2.12.0"

helm install --values terrakube.yaml terrakube terrakube-repo/terrakube -n terrakube

docker pull azbuilder/api-server:2.17.0-alpaquita

docker pull azbuilder/open-registry:2.17.0-alpaquita

docker pull azbuilder/executor:2.17.0-alpaquita

module "consul" {

source = "git@github.com:hashicorp/example.git"

}

Organizations.

Workspace API with the following support:

Terraform state history

Terraform output history

Terraform Variables (public/secrets)

Environment Variables (public/secrets)

Cron like support to schedule the jobs execution programmatically

Job.

Custom terraform flows based on Terrakube Configuration Language

Modules

Providers

Teams

Template

Terrakube Jobs:

Automatic process that checks for pending job operations in any workspace and triggers a custom logic flow based on Terrakube Configuration Language

Terrakube Executor:

Service that executes the Terrakube job operations written in Terrakube Configuration Language and handles the Terraform state and outputs using cloud storage providers like Azure Storage Account

Terrakube Open Registry:

This component exposes an open source private repository protected by Dex that you can use to manage your company's private Terraform modules or providers.

Cloud Storage:

Cloud storage to handle terraform state, outputs and terraform modules used by terraform CLI

RDBMS:

The platform can be used with any database supported by the Liquibase project.

Security:

All authentication and authorization is handled using Dex.

Terrakube CLI:

Go-based CLI that can communicate with the Terrakube API and execute operations for organizations, workspaces, jobs, templates, modules or providers

Terrakube UI:

React based frontend to handle all Terrakube Operations.

helm install --debug --values ./your-values.yaml terrakube terrakube-repo/terrakube -n self-hosted-executor

Persistent context is helpful when you need to save information from a job execution. For example, it can be used to save the infracost output or the thread id when using the Slack extension. It can also save any JSON information generated inside Terrakube jobs.

In order to save the information the terrakube API exposes the following endpoint

POST {{terrakubeApi}}/context/v1/{{jobId}}

{

"slackThreadId": "12345667",

"infracost": {}

}

Job context can only be updated when the status of the Job is running.
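The request above could be assembled as in the following sketch, which only builds the request object and does not send it. The helper name `build_context_request`, the example host and the use of a bearer token in the Authorization header are assumptions for illustration; only the `/context/v1/{jobId}` path and the JSON body come from this document.

```python
import json
import urllib.request


def build_context_request(api_url: str, job_id: int, token: str, context: dict) -> urllib.request.Request:
    """Build a POST request that saves `context` for the given job."""
    url = f"{api_url.rstrip('/')}/context/v1/{job_id}"
    body = json.dumps(context).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            # Assumption: the API accepts a bearer token here.
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
    )


if __name__ == "__main__":
    request = build_context_request(
        "https://terrakube-api.example.com",  # hypothetical API URL
        42,                                   # hypothetical job id
        "MY_API_TOKEN",
        {"slackThreadId": "12345667", "infracost": {}},
    )
    print(request.get_method(), request.full_url)
    # To actually send it: urllib.request.urlopen(request)
```

Because the context can only be updated while the job is running, a call like this would normally be made from inside a job step.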

To get the context you can use the Terrakube API

The persistent context can be used through the extension from the Terrakube extension repository. It supports saving a JSON file or saving a new property inside the context JSON.

This is an example of a Terrakube template using the persistent job context to save the infracost information.

You can use persistent context to customize the Job UI, see for more details.

This feature is supported from version 2.22.0

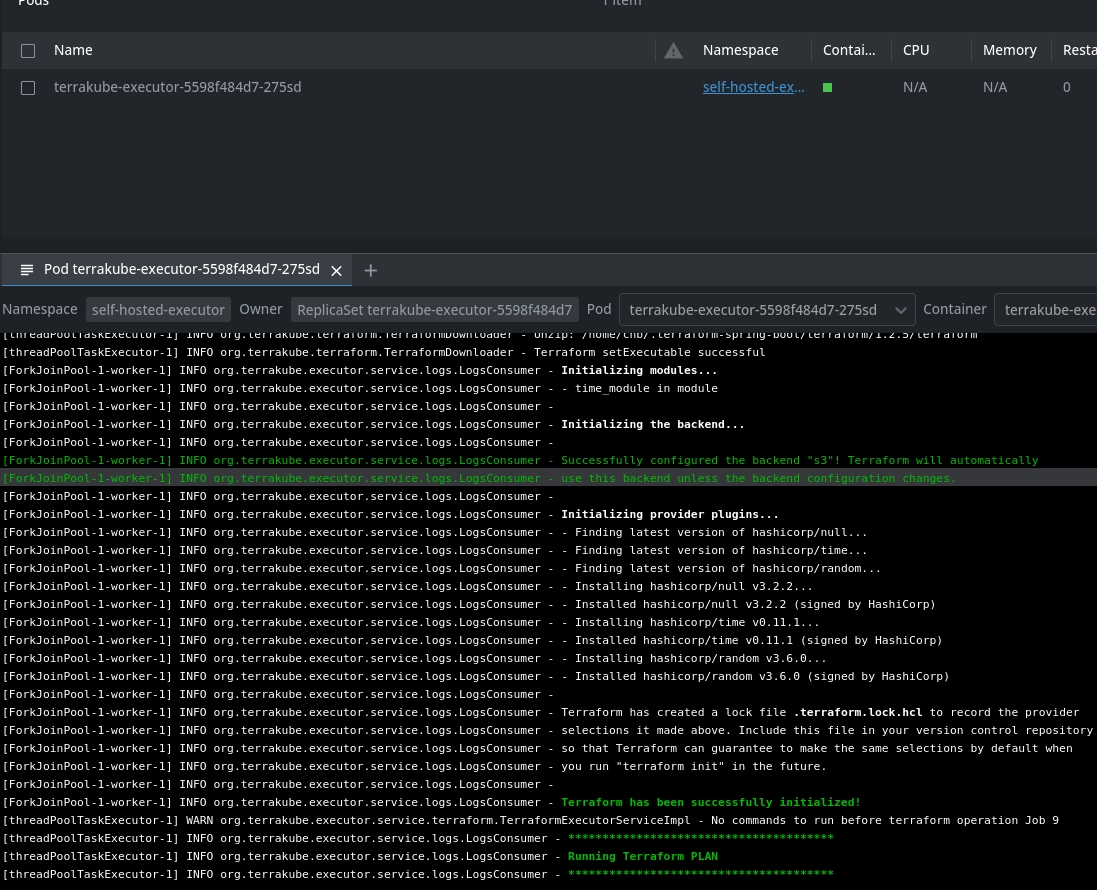

The following explains how to run the executor component in "ephemeral" mode.

These environment variables can be used to customize the API component:

ExecutorEphemeralNamespace (Default value: "terrakube")

ExecutorEphemeralImage (Default value: "azbuilder/executor:2.22.0")

ExecutorEphemeralSecret (Default value: "terrakube-executor-secrets")

The above settings control where the job is created and executed, and mount the secrets required by the executor component.

Internally, the Executor component uses the following to run in "ephemeral" mode:

EphemeralFlagBatch (Default value: "false")

EphemeralJobData, this contains all the data that the executor need to run.

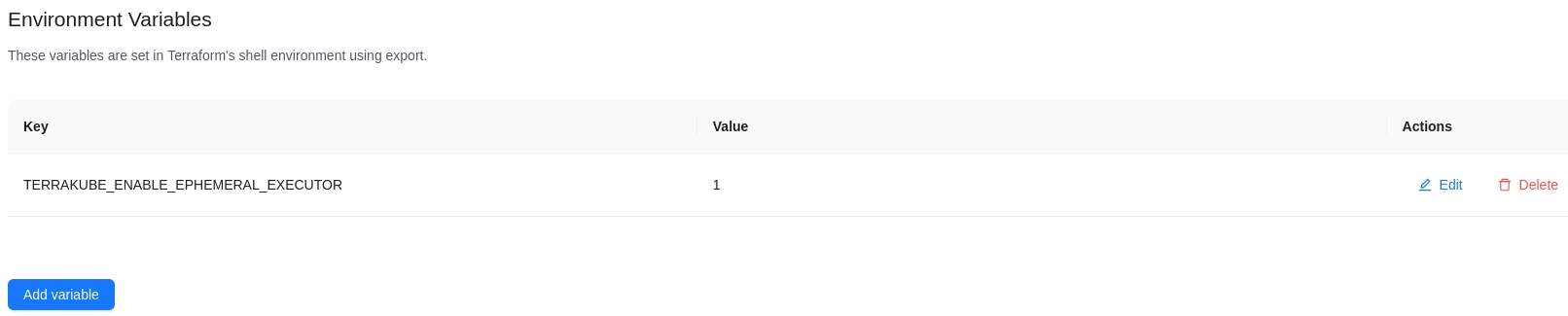

To use Ephemeral executors we need to create the following configuration:

Once the above configuration is created we can deploy the Terrakube API like the following example:

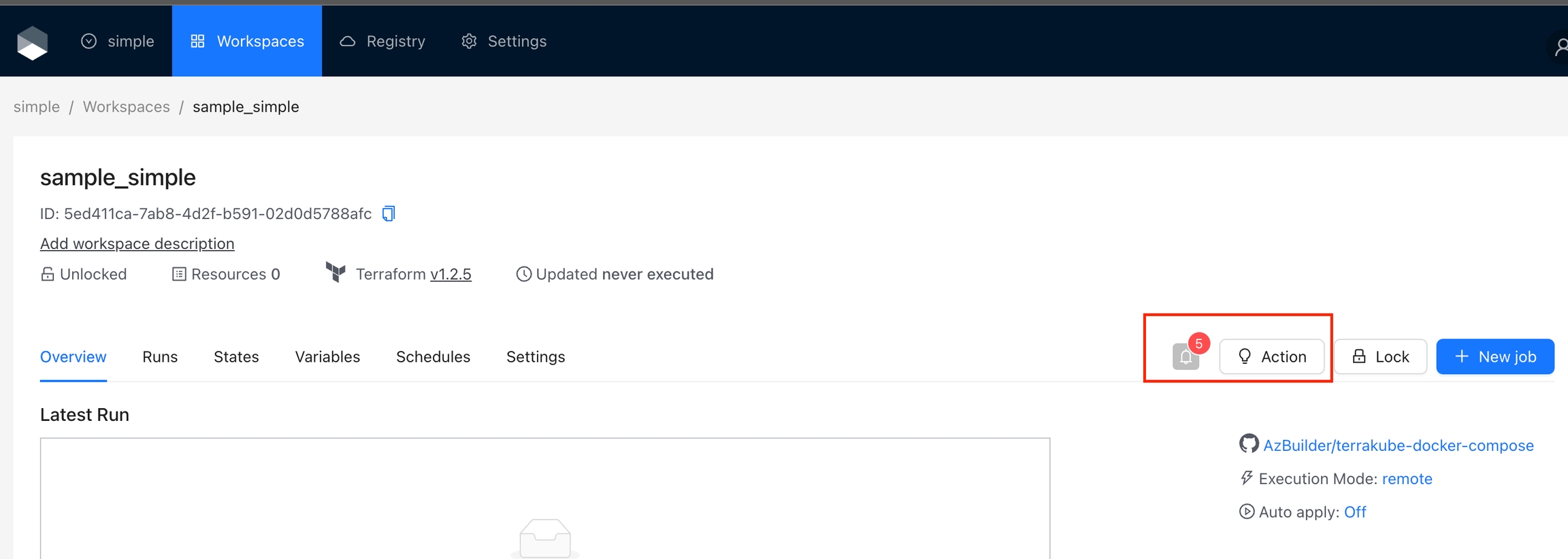

Add the environment variable TERRAKUBE_ENABLE_EPHEMERAL_EXECUTOR=1 like the image below

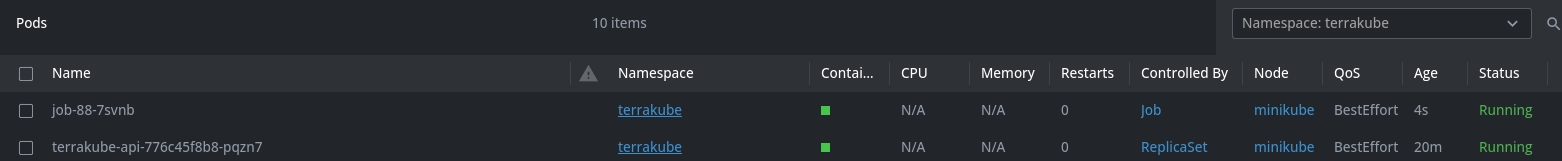

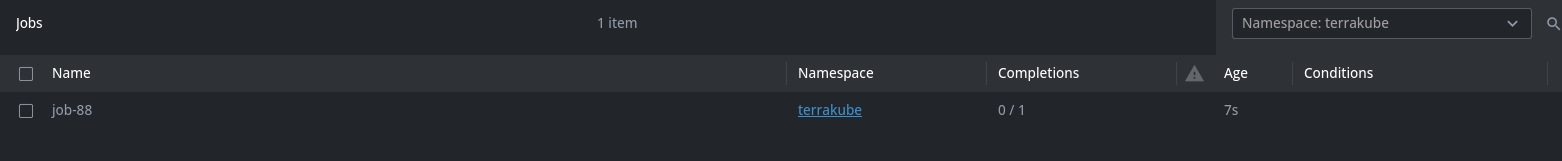

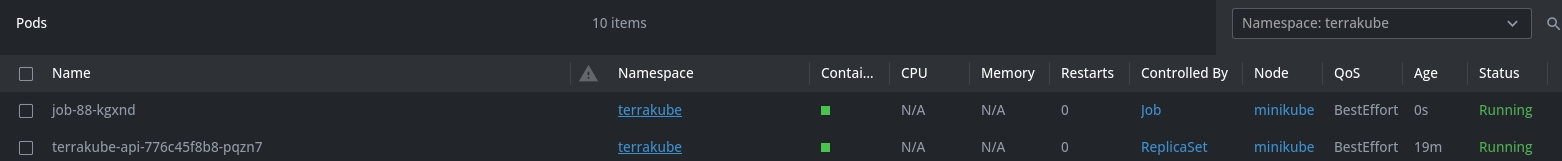

Now, when the job is running, Terrakube internally creates a Kubernetes job and executes each step of the job in an "ephemeral executor".

Internal Kubernetes Job Example:

Plan Running in a pod:

Apply Running in a different pod:

If required you can specify the node selector configuration where the pod will be created using something like the following:

The above will be the equivalent to use the Kubernetes YAML like:

Adding node selector configuration is available from version 2.23.0

A working Keycloak server with a configured realm.

For configuring Terrakube with Keycloak using Dex the following steps are involved:

Keycloak client creation and configuration for Terrakube.

Configure Terrakube so it works with Keycloak.

Testing the configuration.

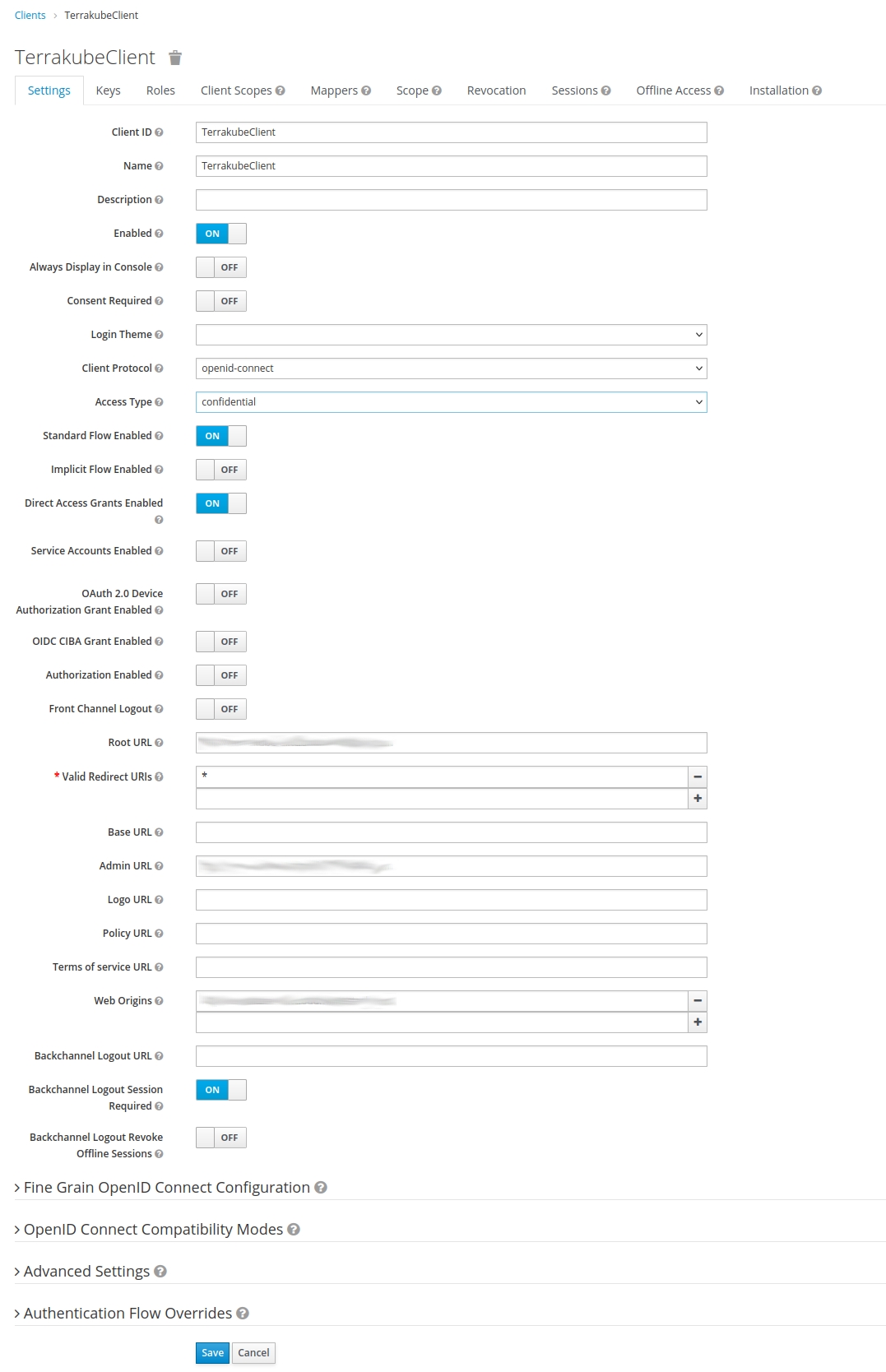

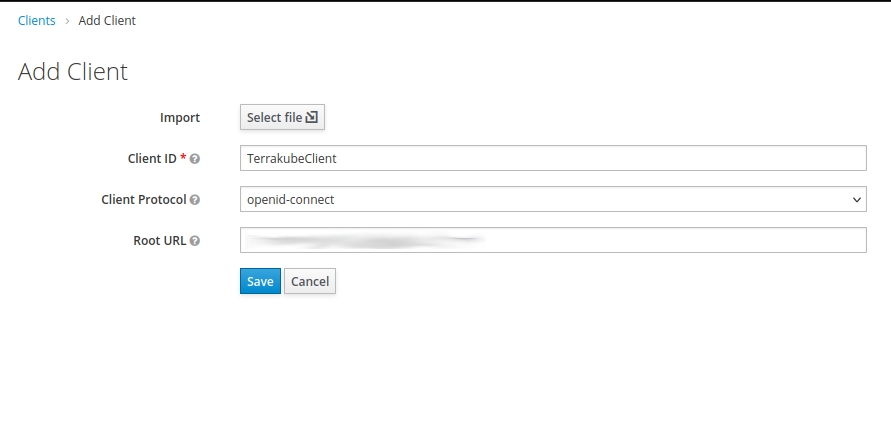

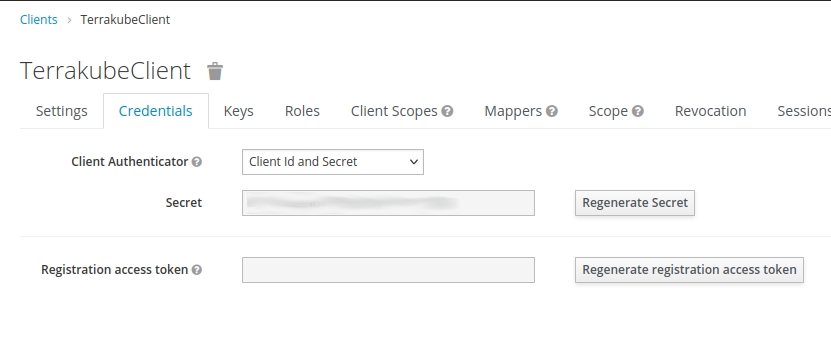

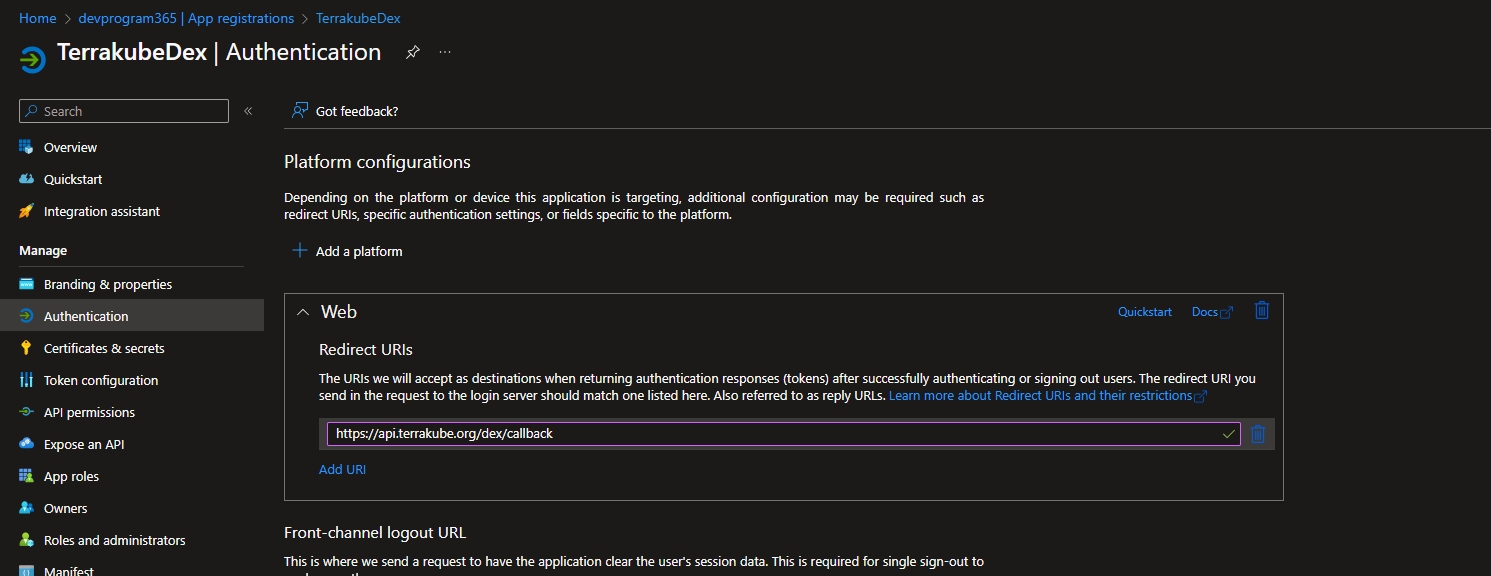

Log in to Keycloak with admin credentials and select Configure > Clients > Create. Define the ID as TerrakubeClient and select openid-connect as its protocol. For Root URL use Terrakube's GUI URL. Then click Save.

A form for finishing the Terrakube client configuration will be displayed. These are the fields that must be filled in:

Name: in this example it has the value of TerrakubeClient.

Client Protocol: it must be set to openid-connect.

Access Type: set it to confidential.

Then click Save.

Notice that, since we set Access Type to confidential, we have an extra tab titled Credentials. Click the Credentials tab and copy the Secret value. It will be used later when we configure the Terrakube's connector.

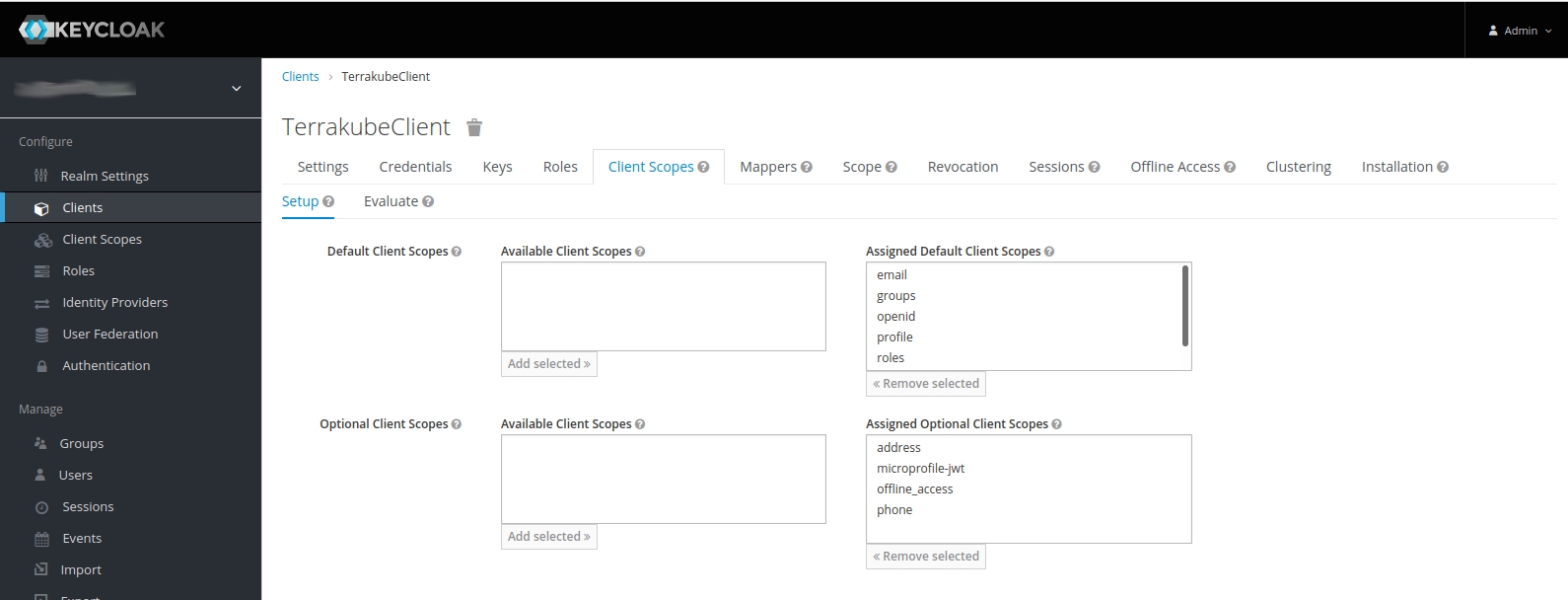

Depending on your configuration, Terrakube might expect different client scopes, such as openid, profile, email, groups, etc. You can see if they are assigned to TerrakubeClient by clicking on the Client Scopes tab (in TerrakubeClient).

If they are not assigned, you can assign them by selecting the scopes and clicking on the Add selected button.

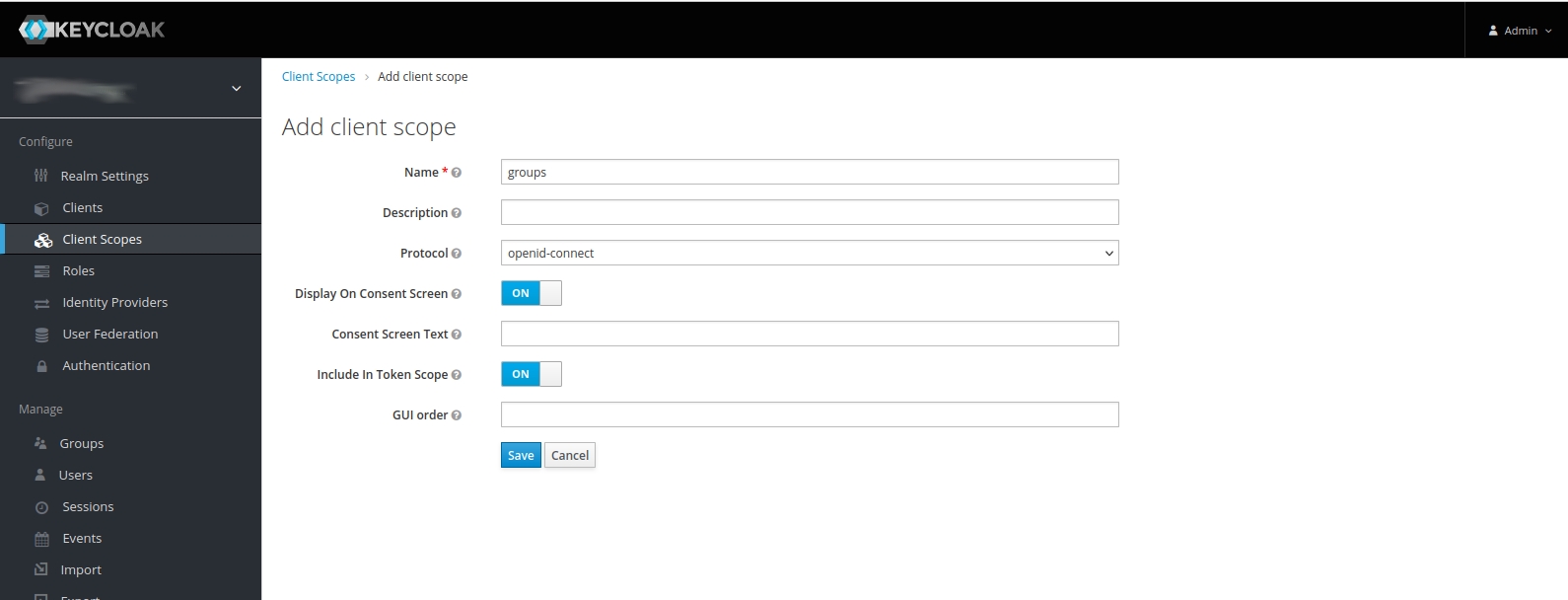

If some scope does not exist, you must create it before assigning it to the client. You do this by clicking on Client Scopes, then click on the Create button. This will lead you to a form where you can create the new scope. Then, you can assign it to the client.

We have to configure a Dex connector to use with Keycloak. Add a new connector in Dex's configuration, so it looks like this:

This is the simplest configuration that we can use. Here are some notes about these fields:

type: must be oidc (OpenID Connect).

name: this is the string shown in the connectors list in Terrakube GUI.

issuer: it refers to the Keycloak server. It has the form [http|https]://<KEYCLOAK_SERVER>/auth/realms/<REALM_NAME>
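The issuer form described above can be expressed as a small helper. This is only a sketch: `keycloak_issuer` is a hypothetical name, and the host and realm in the usage are placeholders, not values from a real deployment.

```python
def keycloak_issuer(server: str, realm: str, https: bool = True) -> str:
    """Build an issuer URL of the form [http|https]://<KEYCLOAK_SERVER>/auth/realms/<REALM_NAME>."""
    scheme = "https" if https else "http"
    return f"{scheme}://{server}/auth/realms/{realm}"


if __name__ == "__main__":
    # Placeholder host and realm for illustration only.
    print(keycloak_issuer("keycloak.example.com", "terrakube"))
```

The resulting string is what would go in the connector's issuer field; it has to match the realm that holds the TerrakubeClient client.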

{% hint style="info" %} If your users do not have a name set (First Name field in Keycloak), you must tell oidc which attribute to use as the user's name. You can do this giving the userNameKey:

{% endhint %}

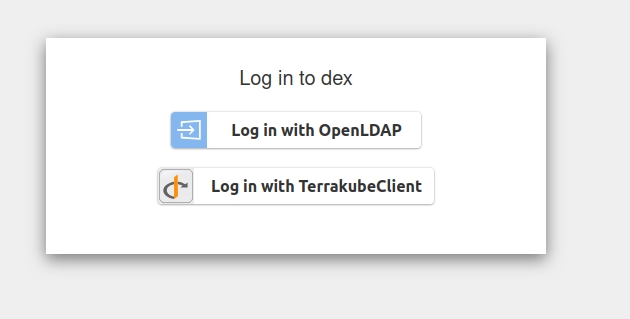

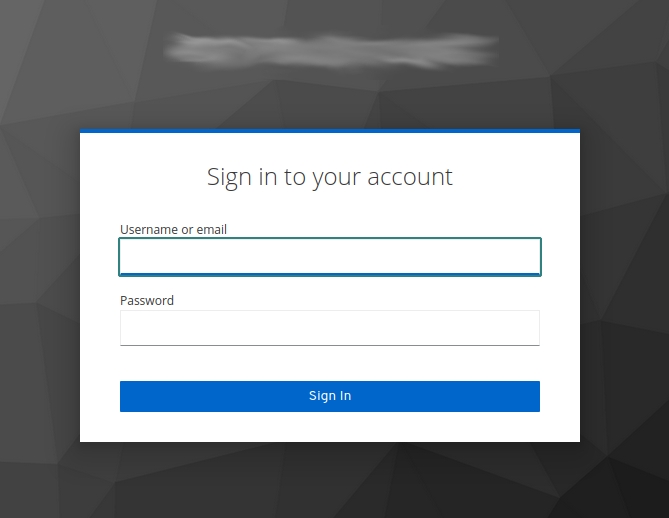

When we click on Terrakube's login button we are given the choice to select the connector we want to use:

Click on Log in with TerrakubeClient. You will be redirected to a login form in Keycloak:

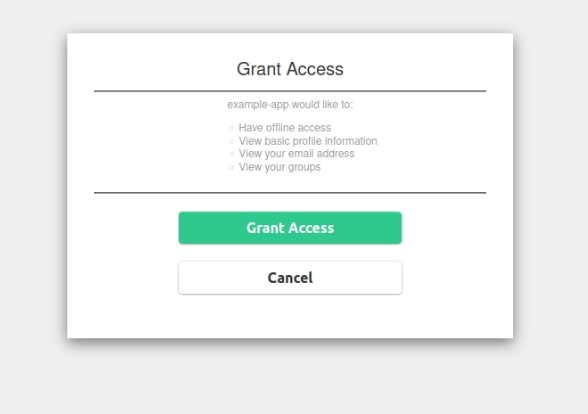

After login, you are redirected back to Terrakube and a dialog asking you to grant access is shown

Click on Grant Access. That is the last step. If everything went right, now you should be logged in Terrakube.

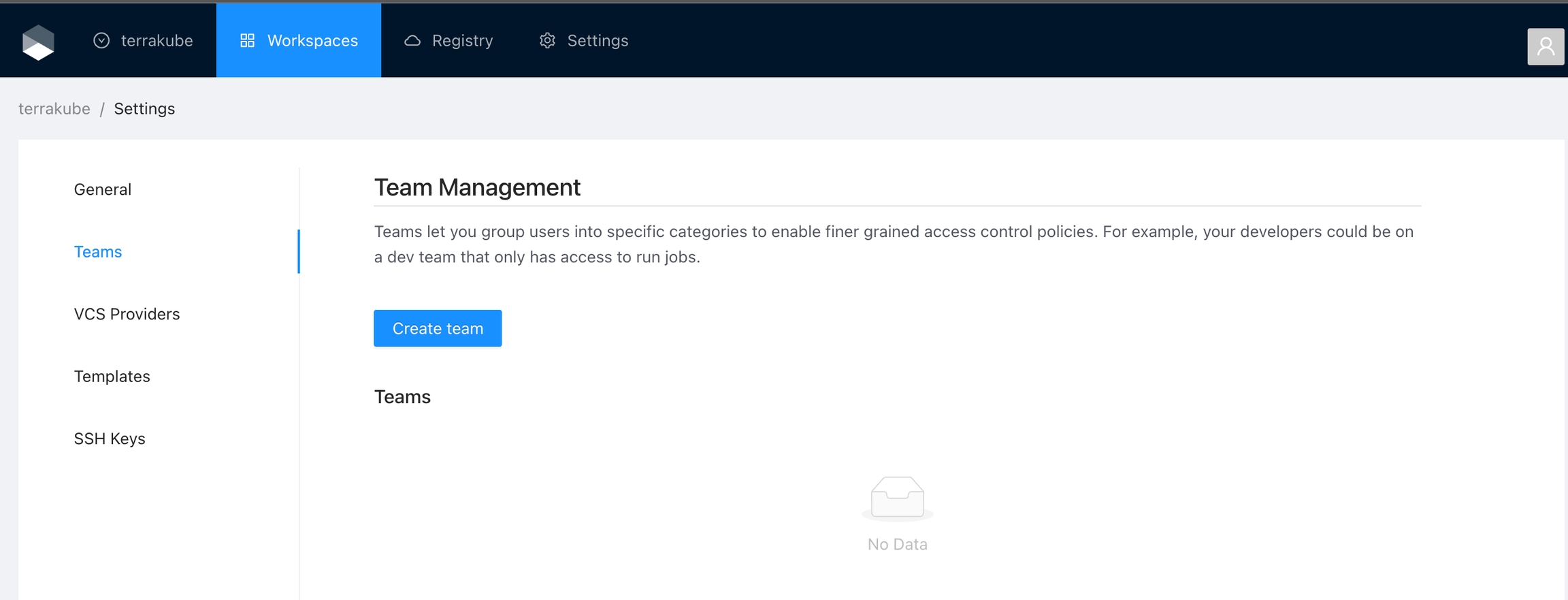

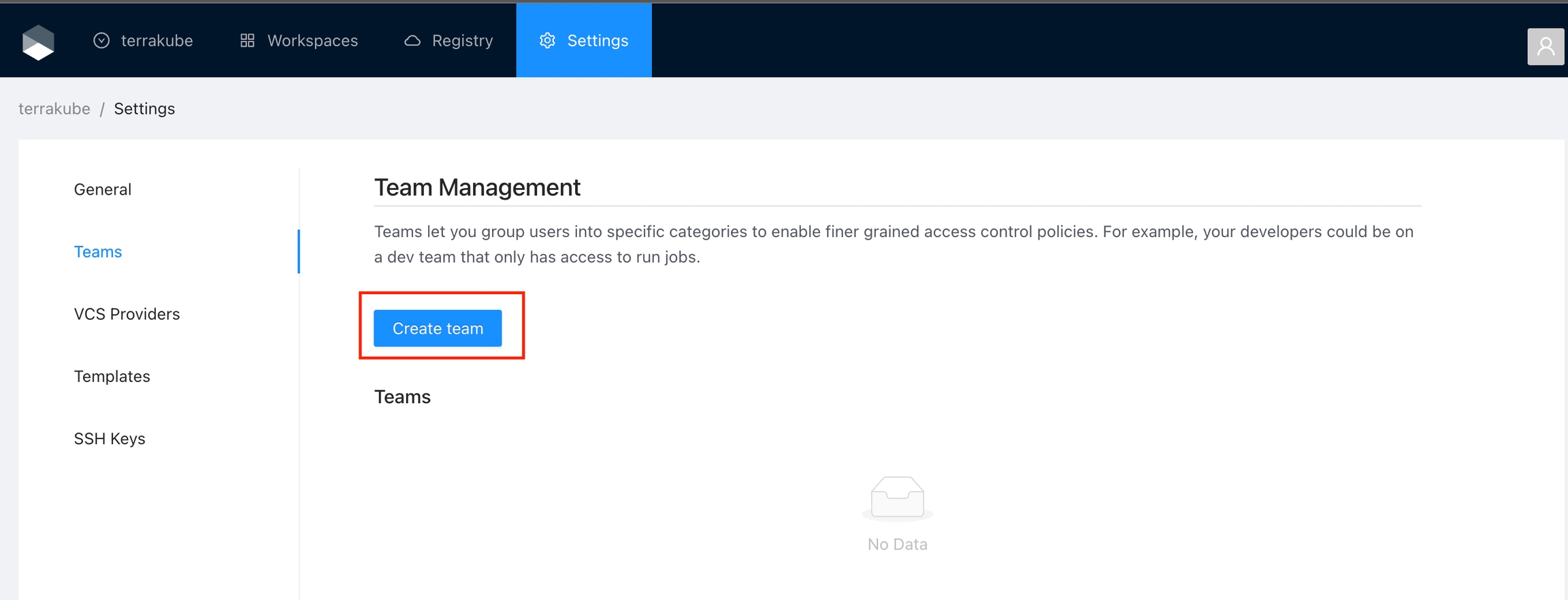

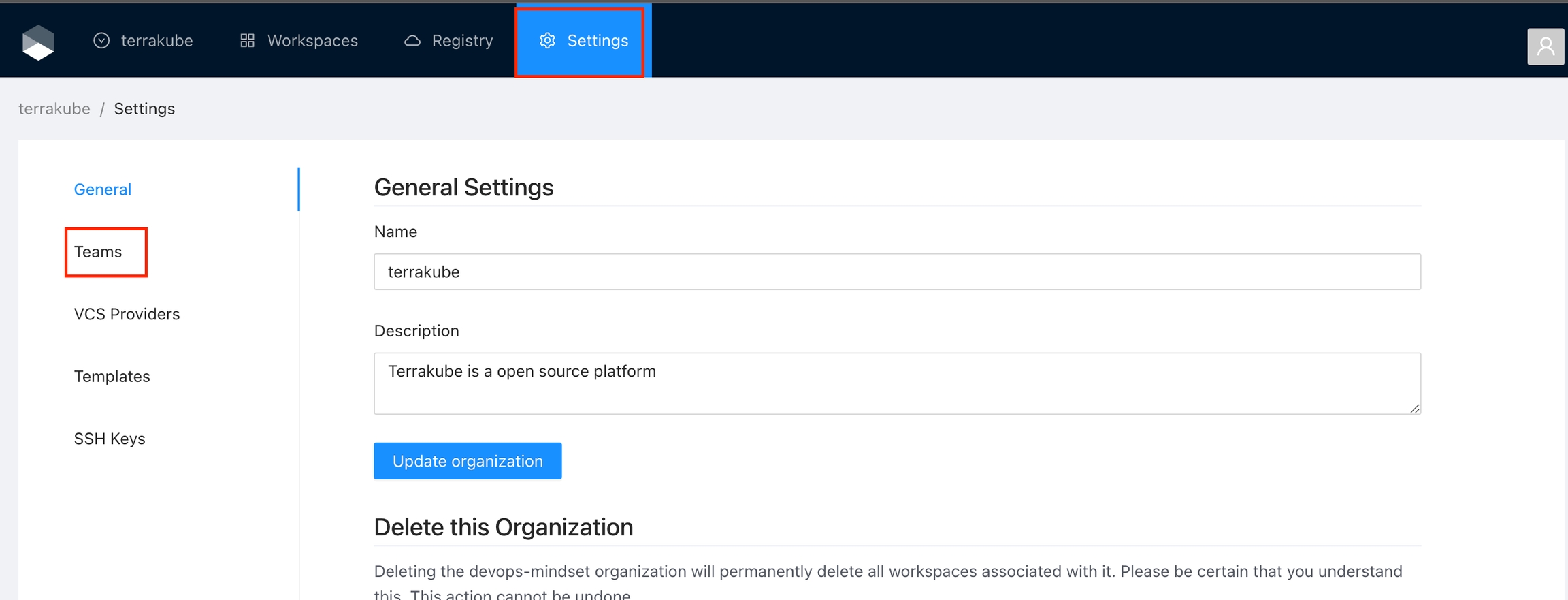

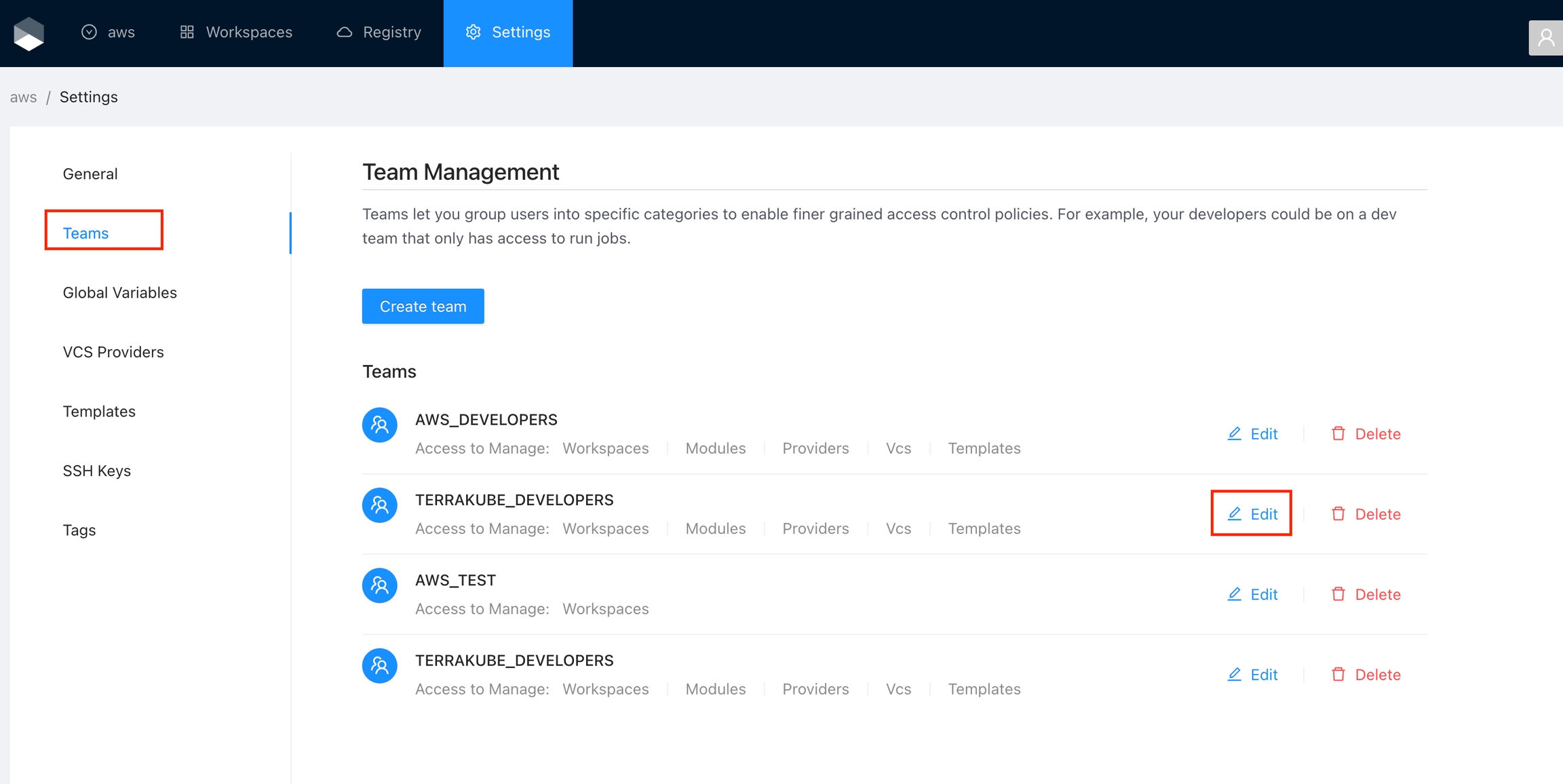

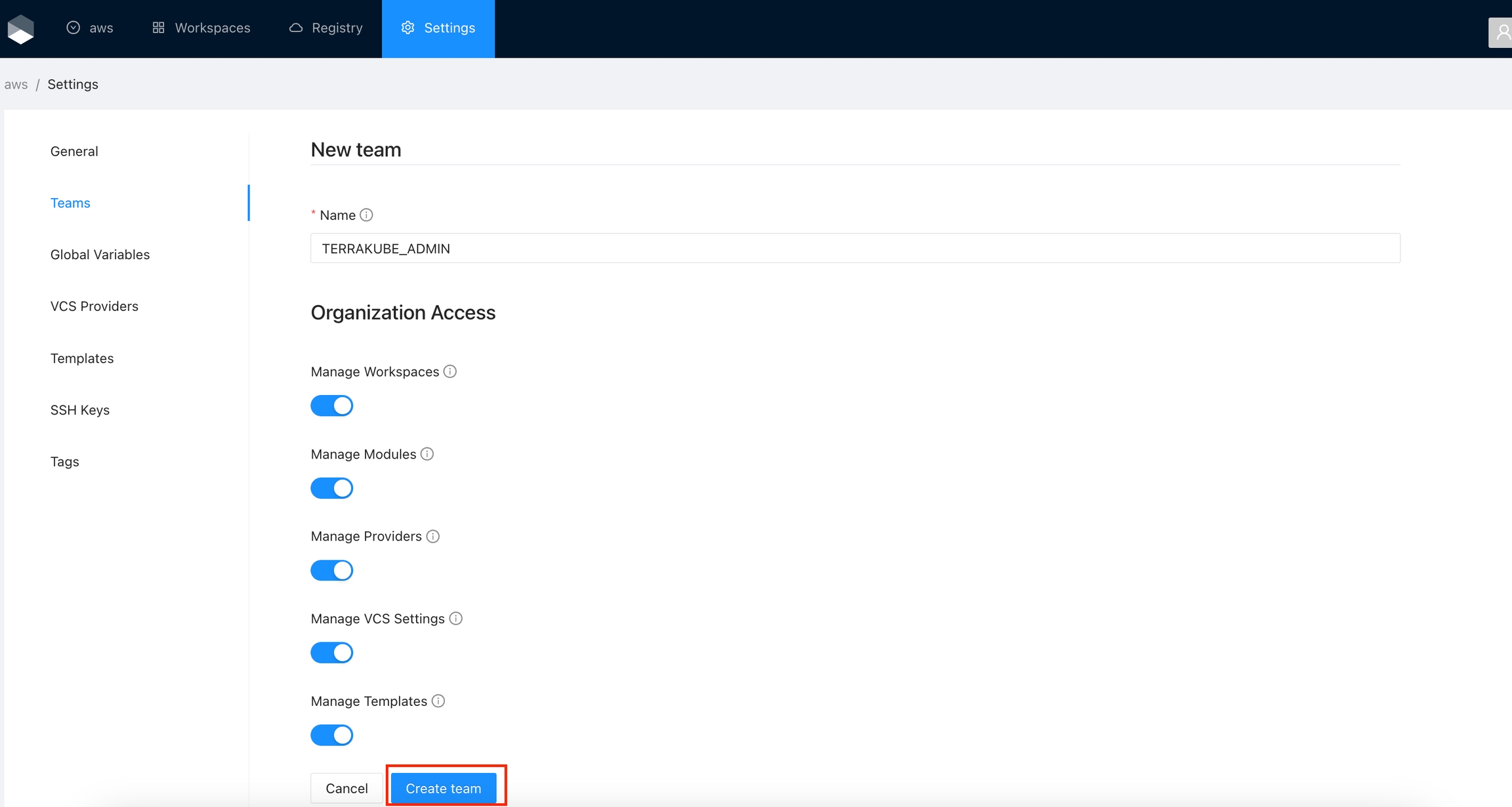

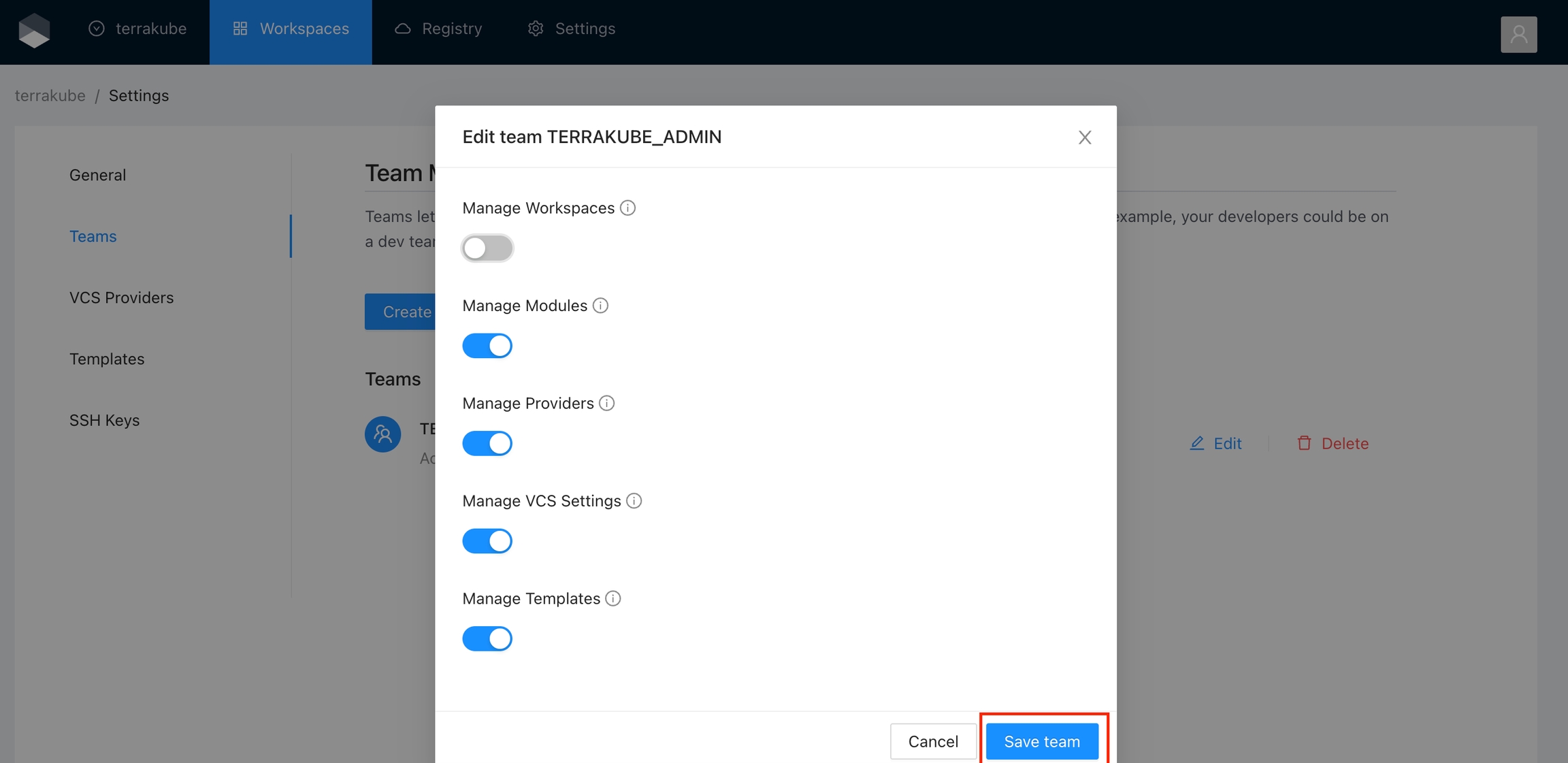

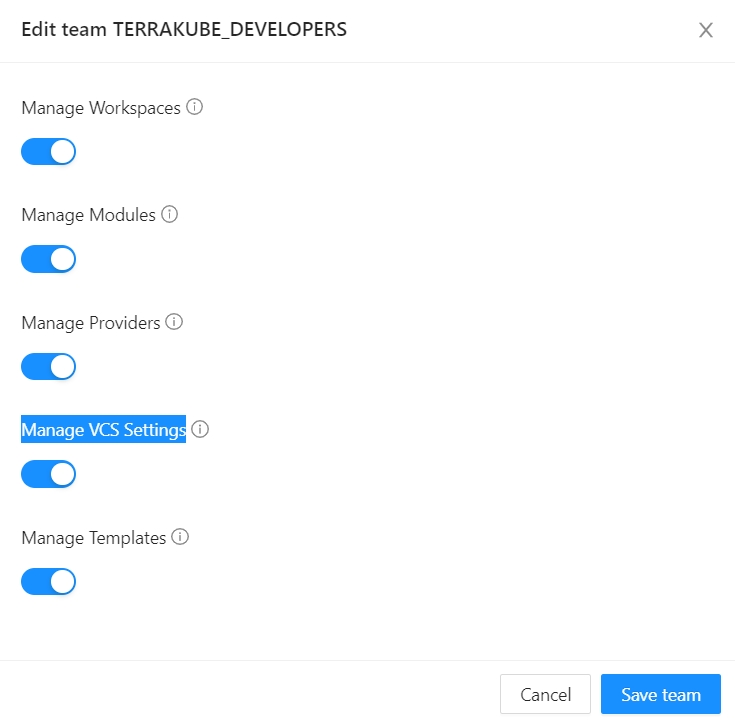

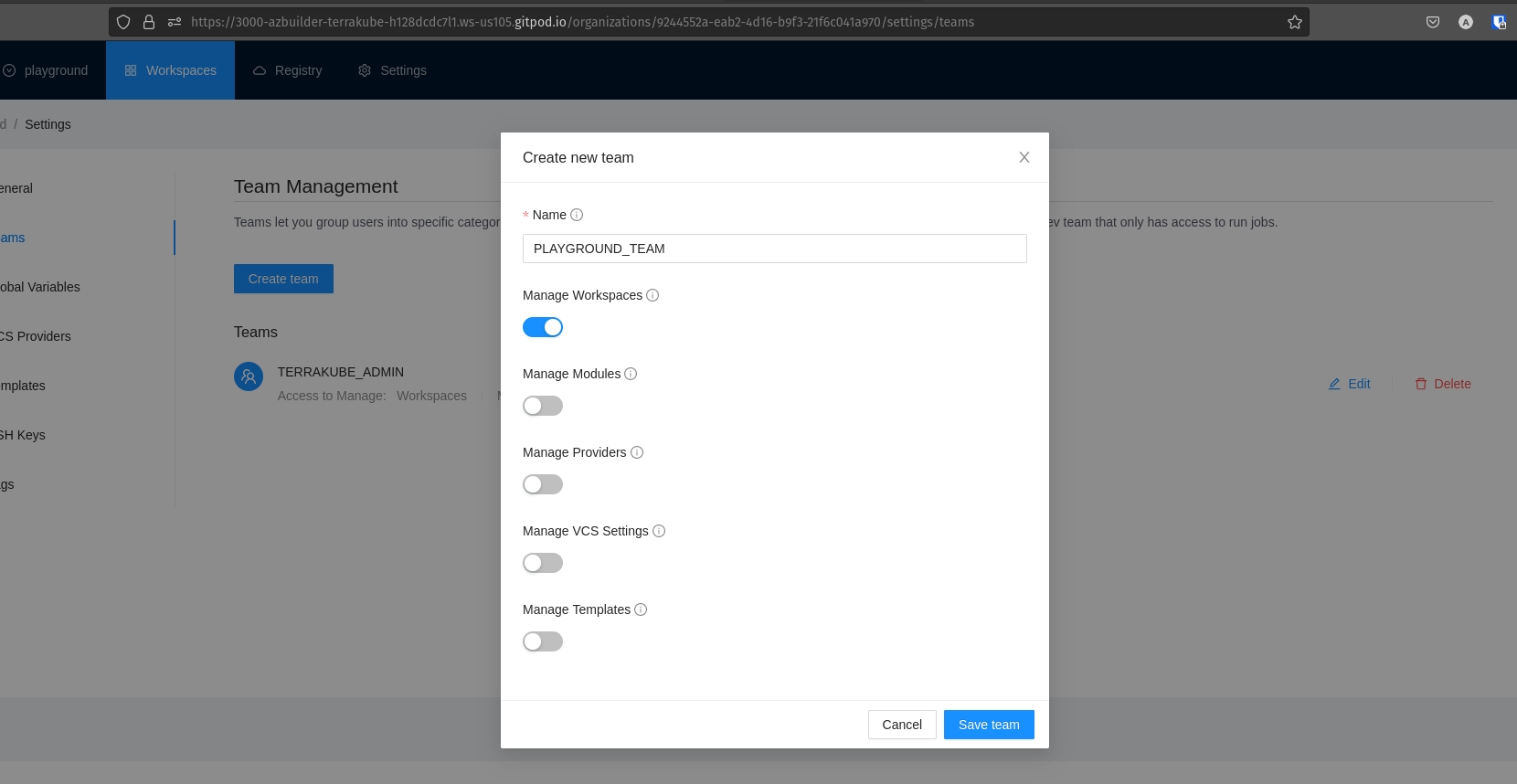

In Terrakube you can define user permissions inside your organization using teams.

Once you are in the desired organization, click the Settings button and then in the left menu select the Teams option.

Click the Create team button

In the popup, provide the team name and the permissions assigned to the team. Use the below table as reference:

Finally click the Create team button and the team will be created

Now all the users inside the team will be able to manage the specific resources within the organization based on the permissions you granted.

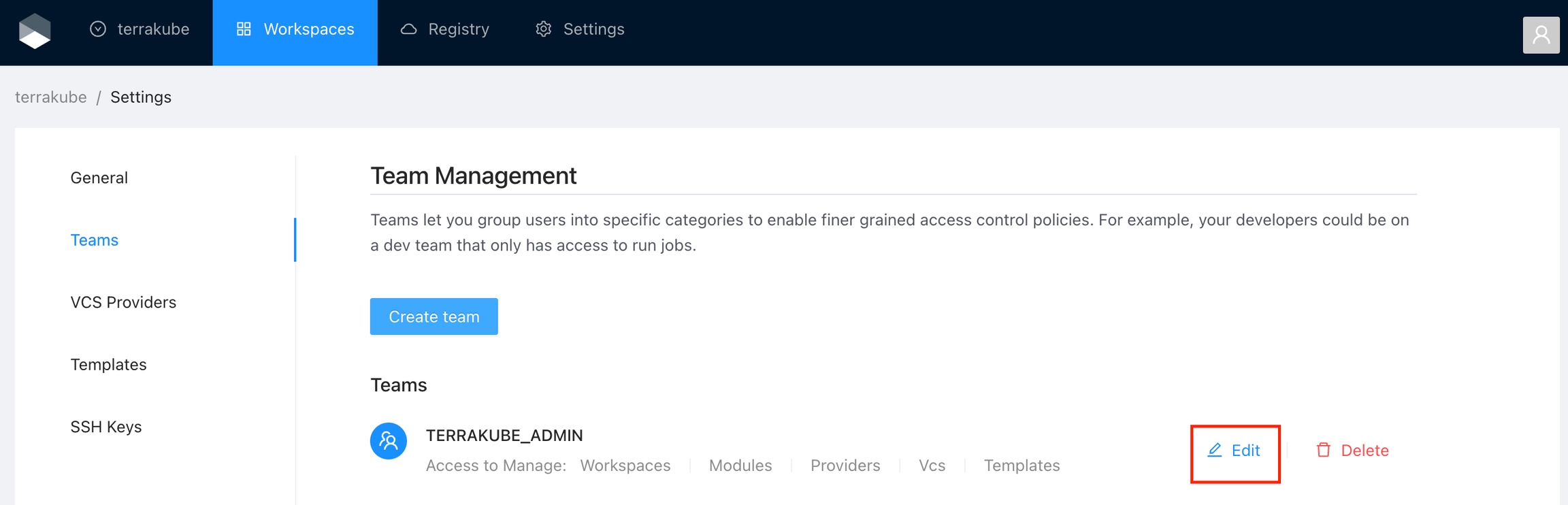

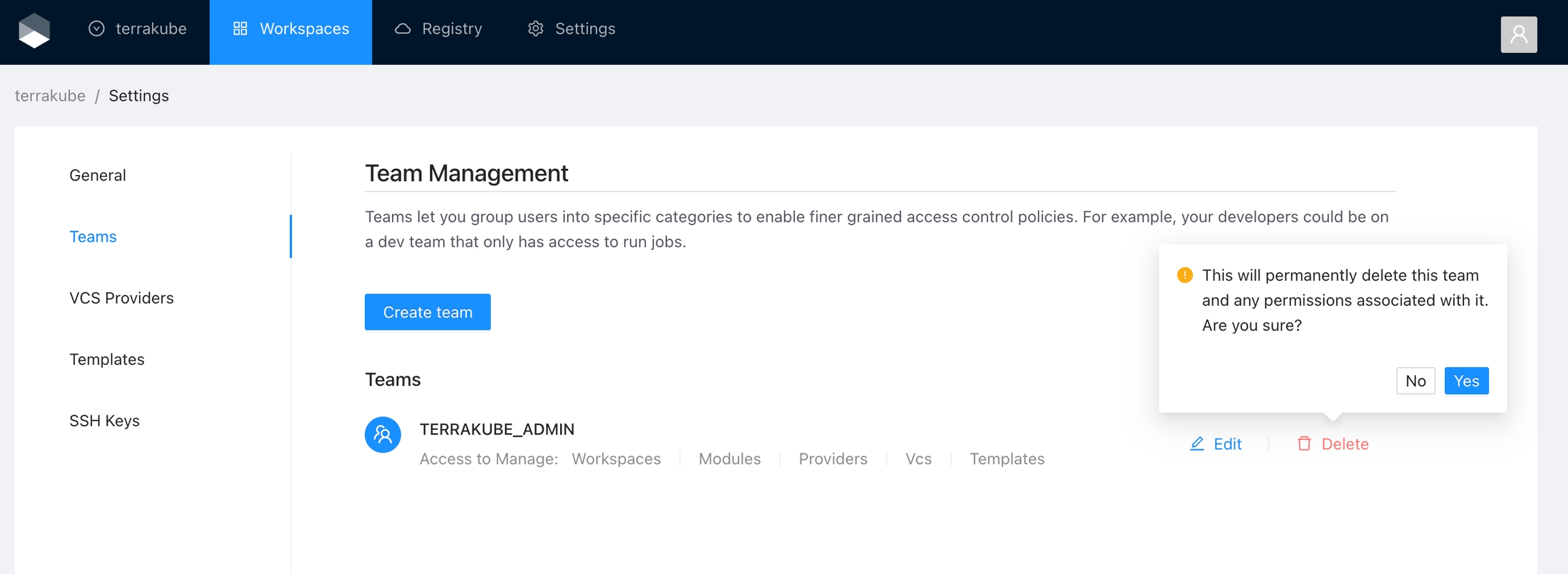

Click the Edit button next to the team you want to edit

Change the permissions you need and click the Save team button

Click the Delete button next to the team you want to delete, and then click the Yes button to confirm the deletion. Please note that the deletion is irreversible.

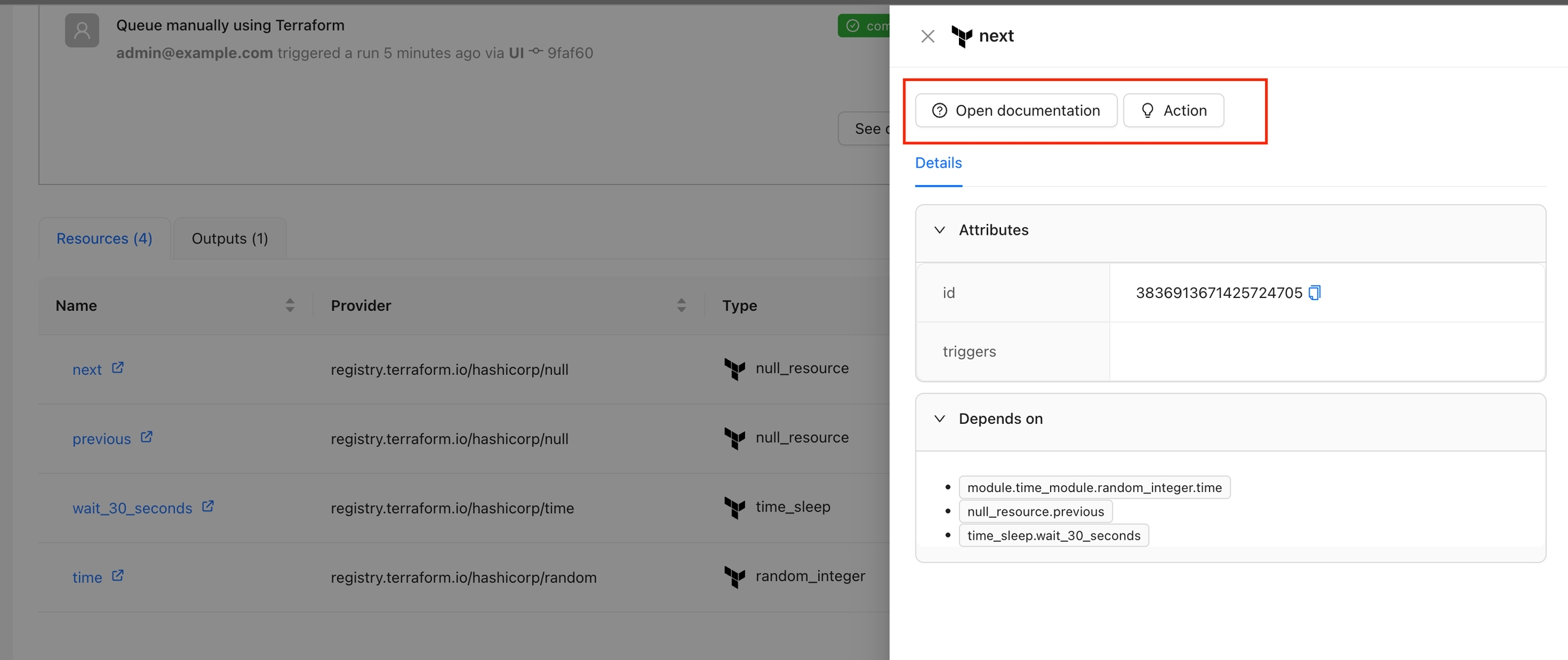

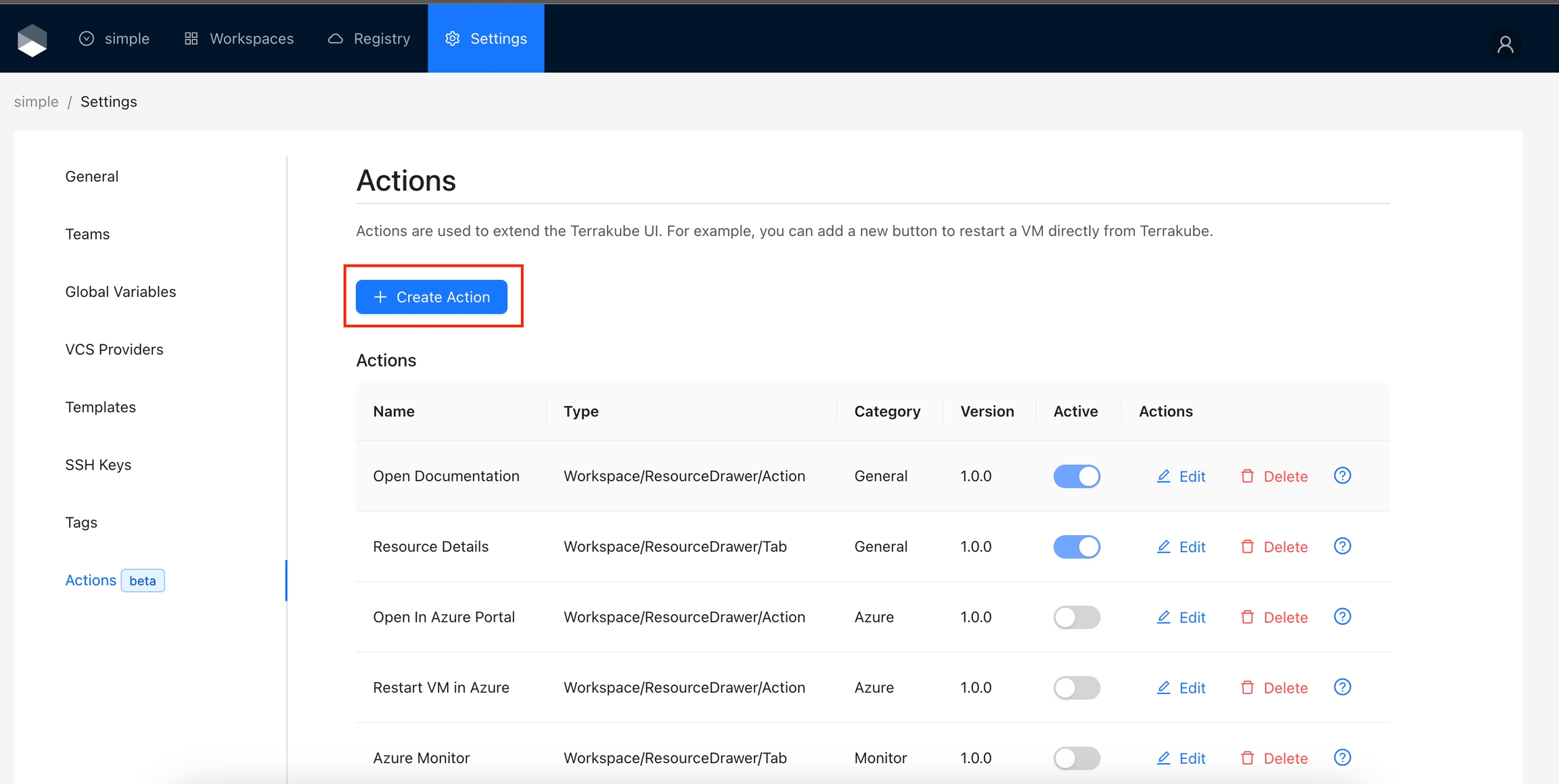

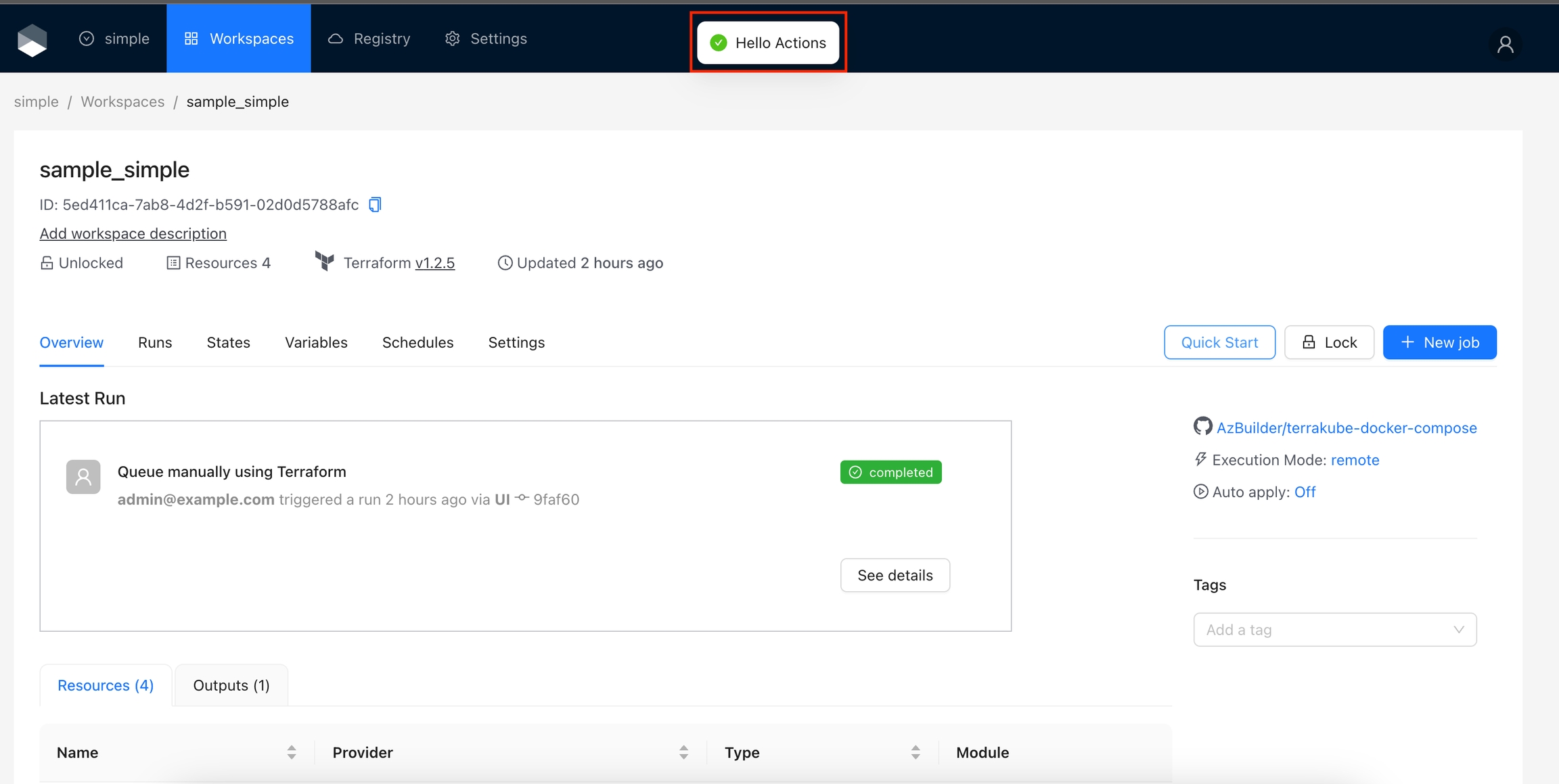

Action Types define where an action will appear in Terrakube. Here is the list of supported types:

The action will appear on the Workspace page, next to the Lock button. Actions are not limited to buttons, but this section is particularly suitable for adding various kinds of buttons. Use this type for actions that involve interaction with the Workspace.

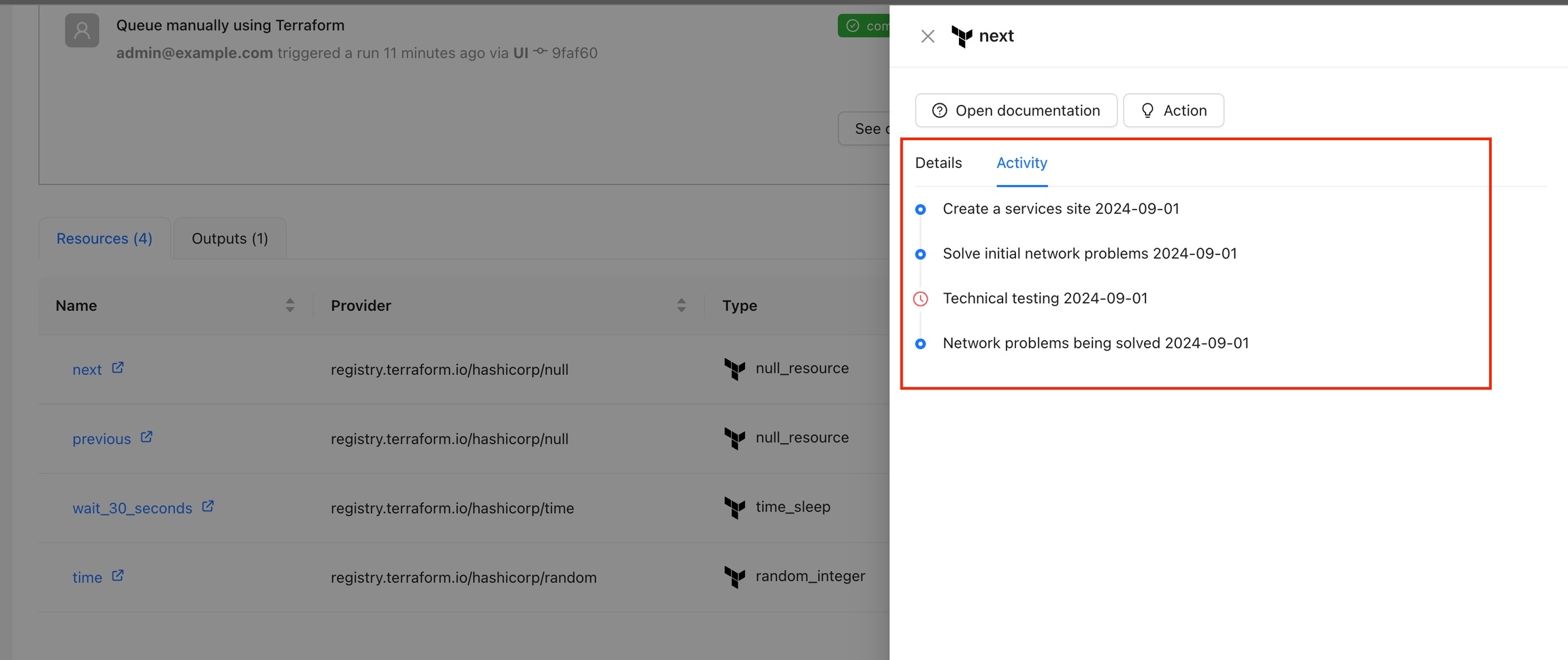

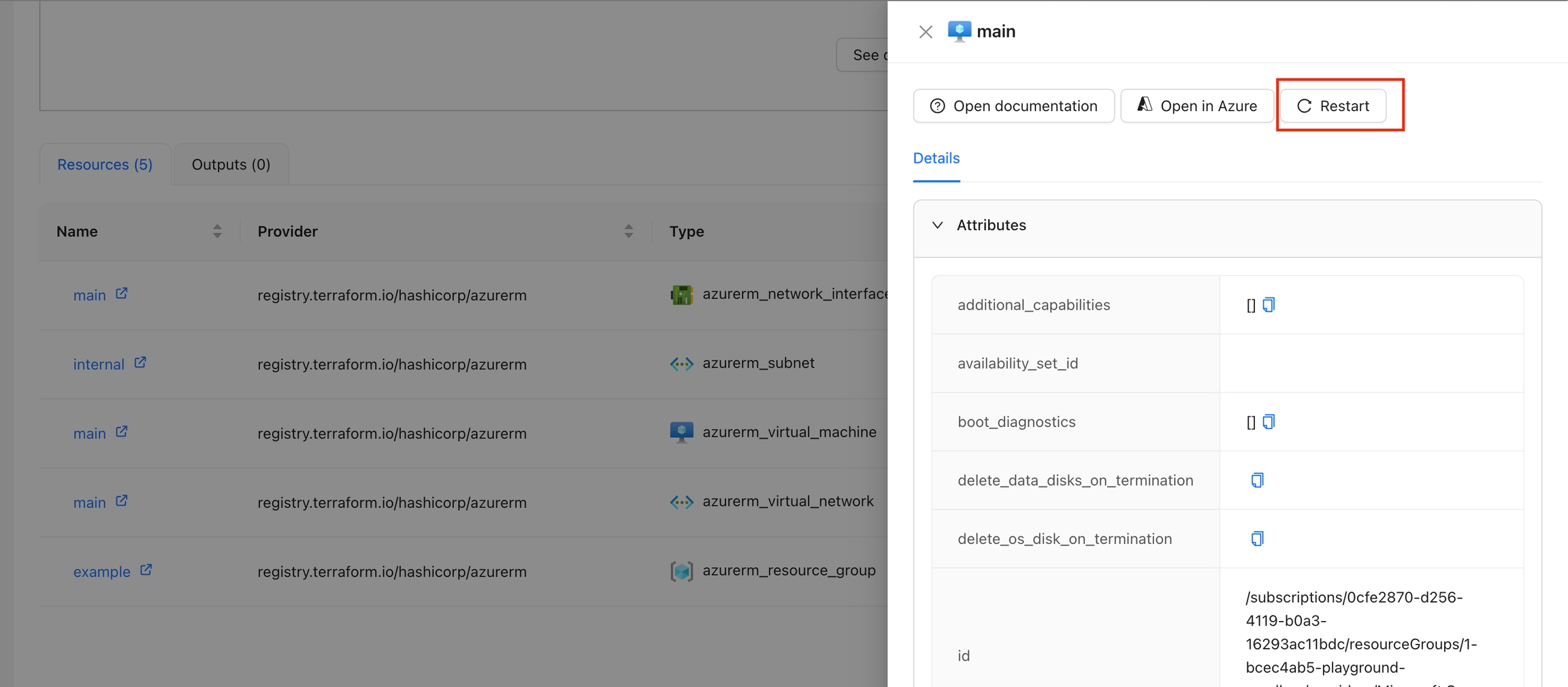

The action will appear for each resource when you click on a resource using the Overview Workspace page or the Visual State Diagram. Use this when an action applies to specific resources, such as restarting a VM or any actions that involve interaction with the resource.

The action will appear for each resource when you click on a resource using the Overview Workspace page or the Visual State Diagram. Use this when you want to display additional data for the resource, such as activity history, costs, metrics, and more.

For this type, the Label property will be used as the name of the Tab pane.

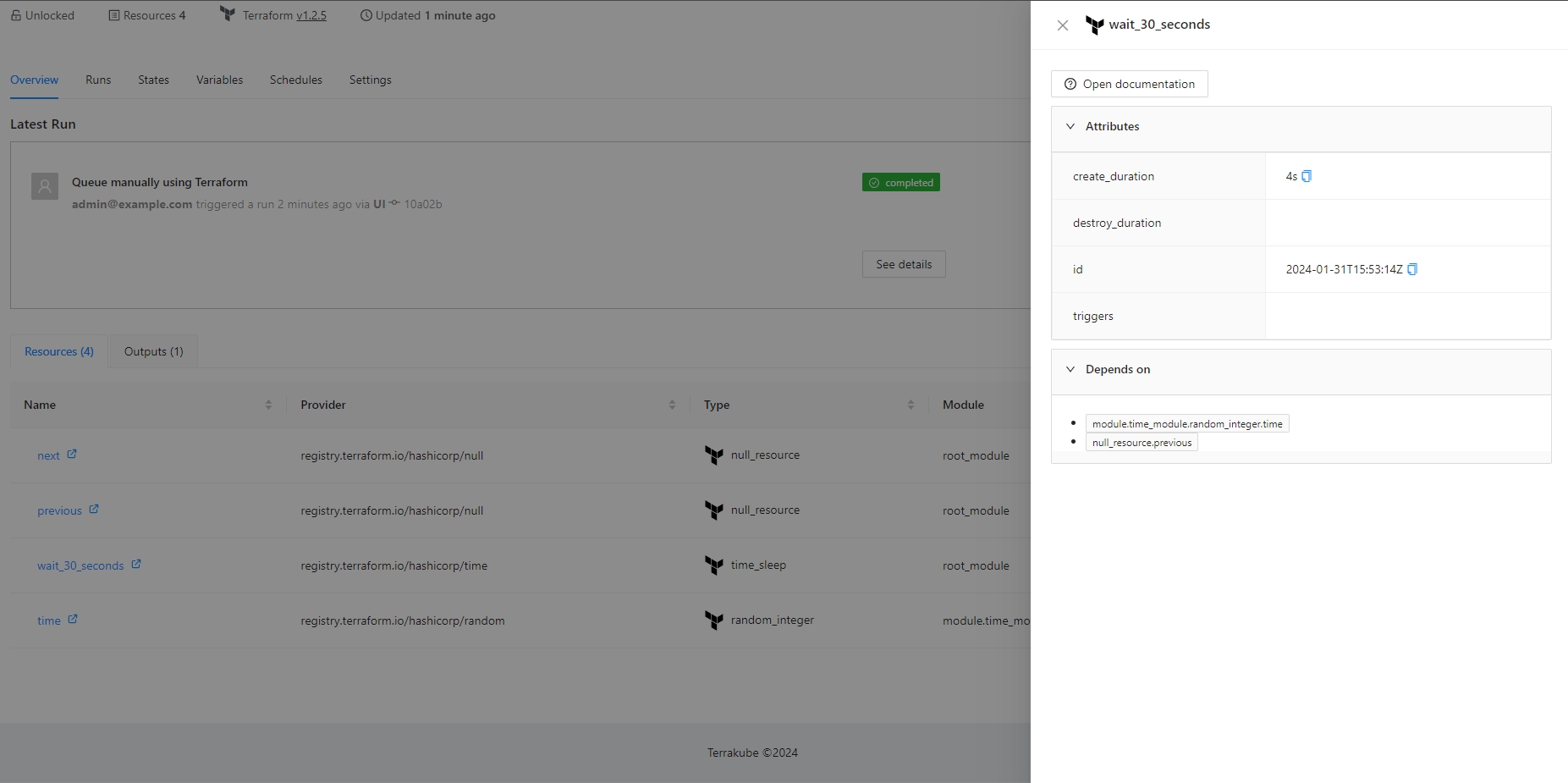

The Resource Details action is designed to show detailed information about a specific resource within the workspace. By using the context of the current state, this action provides a detailed view about the properties and dependencies in a tab within the resource drawer.

By default, this Action is enabled and will appear for all the resources. If you want to display this action only for certain resources, please check display criteria.

Navigate to the Workspace Overview or the Visual State and click a resource name.

In the Resource Drawer, you will see a new tab Details with the resource attributes and dependencies.

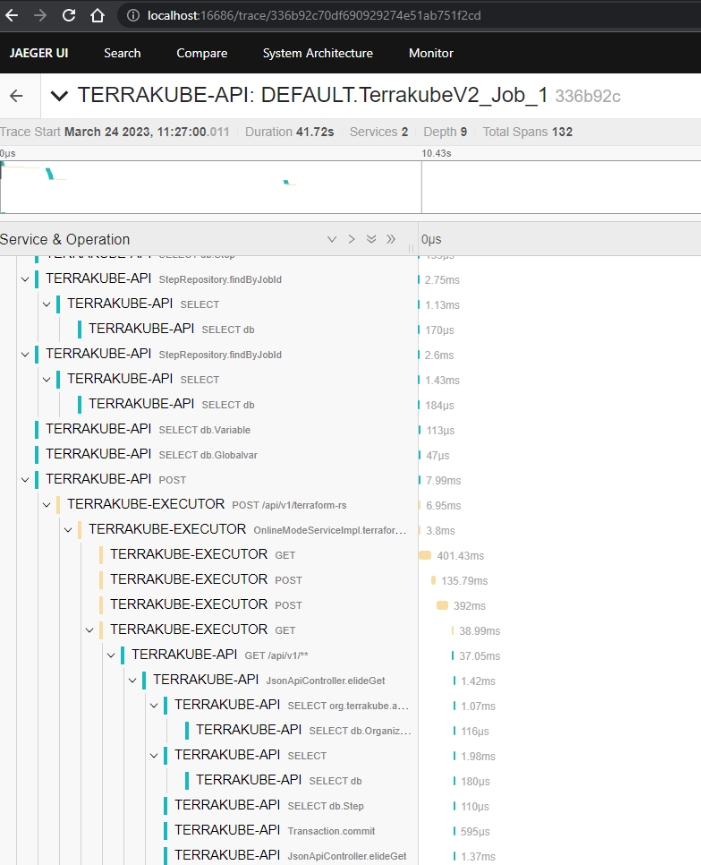

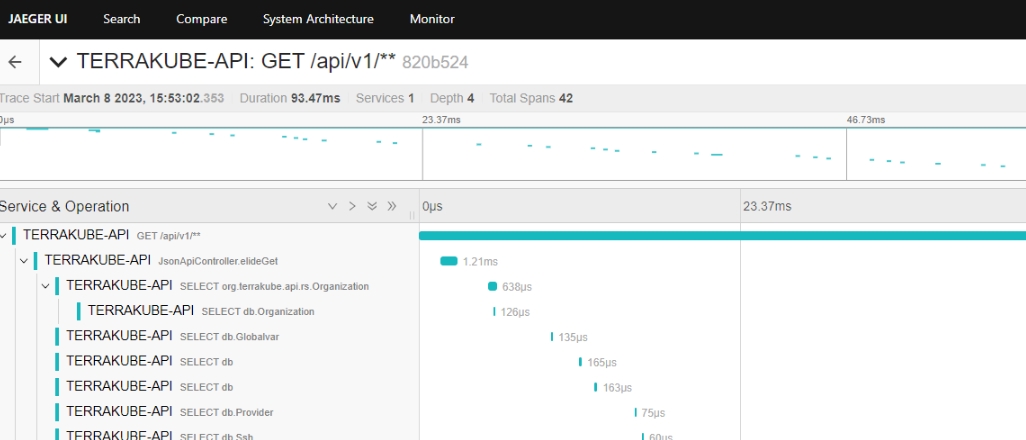

Terrakube components support OpenTelemetry by default to enable effective observability.

To enable telemetry inside the Terrakube components please add the following environment variable:

The Terrakube API, Registry and Executor support this setup starting with version 2.12.0.

UI support will be added in the future.

Once the OpenTelemetry agent is enabled, we can use other environment variables to set up monitoring for our application. For example, to enable Jaeger we could add the following additional environment variables:

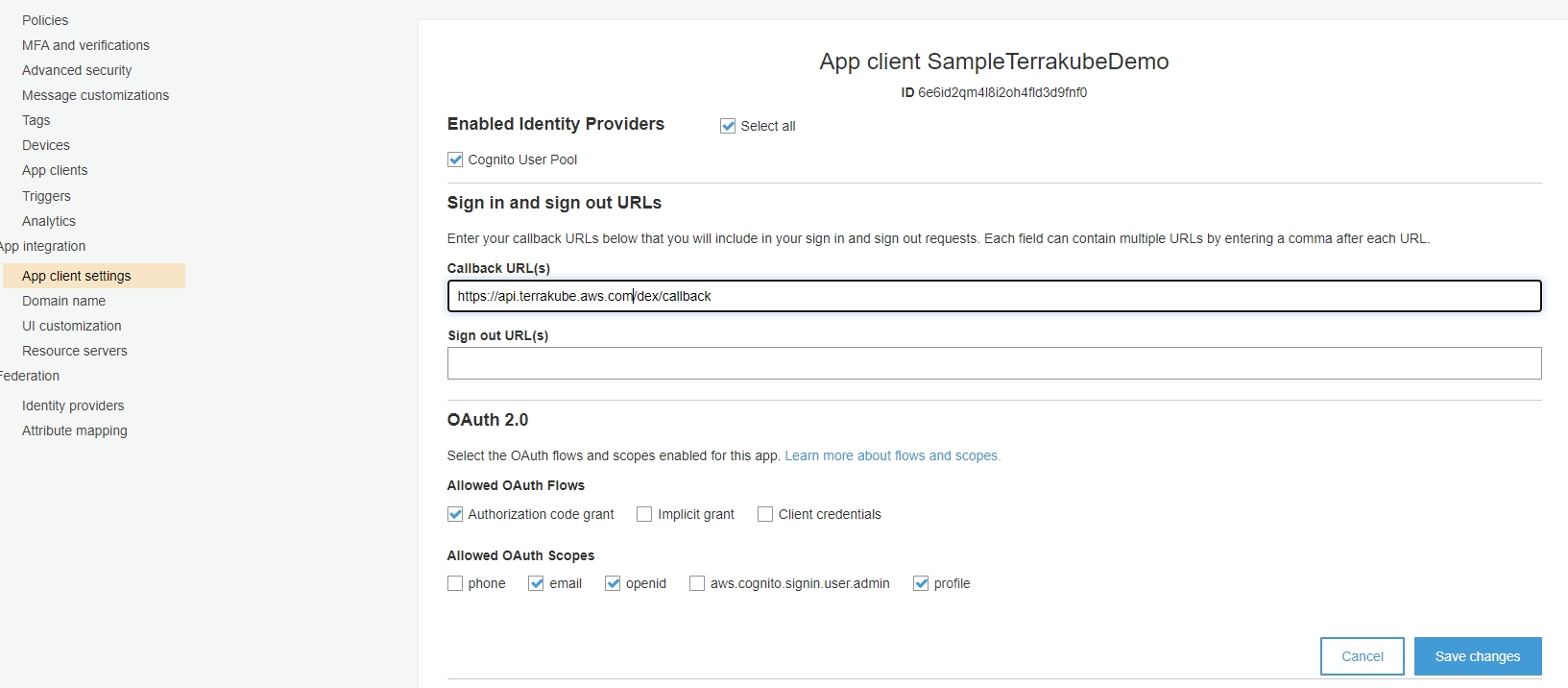

To authenticate users, Terrakube implements Dex, so you can authenticate using different providers such as the following:

Azure Active Directory

Google Cloud Identity

Amazon Cognito

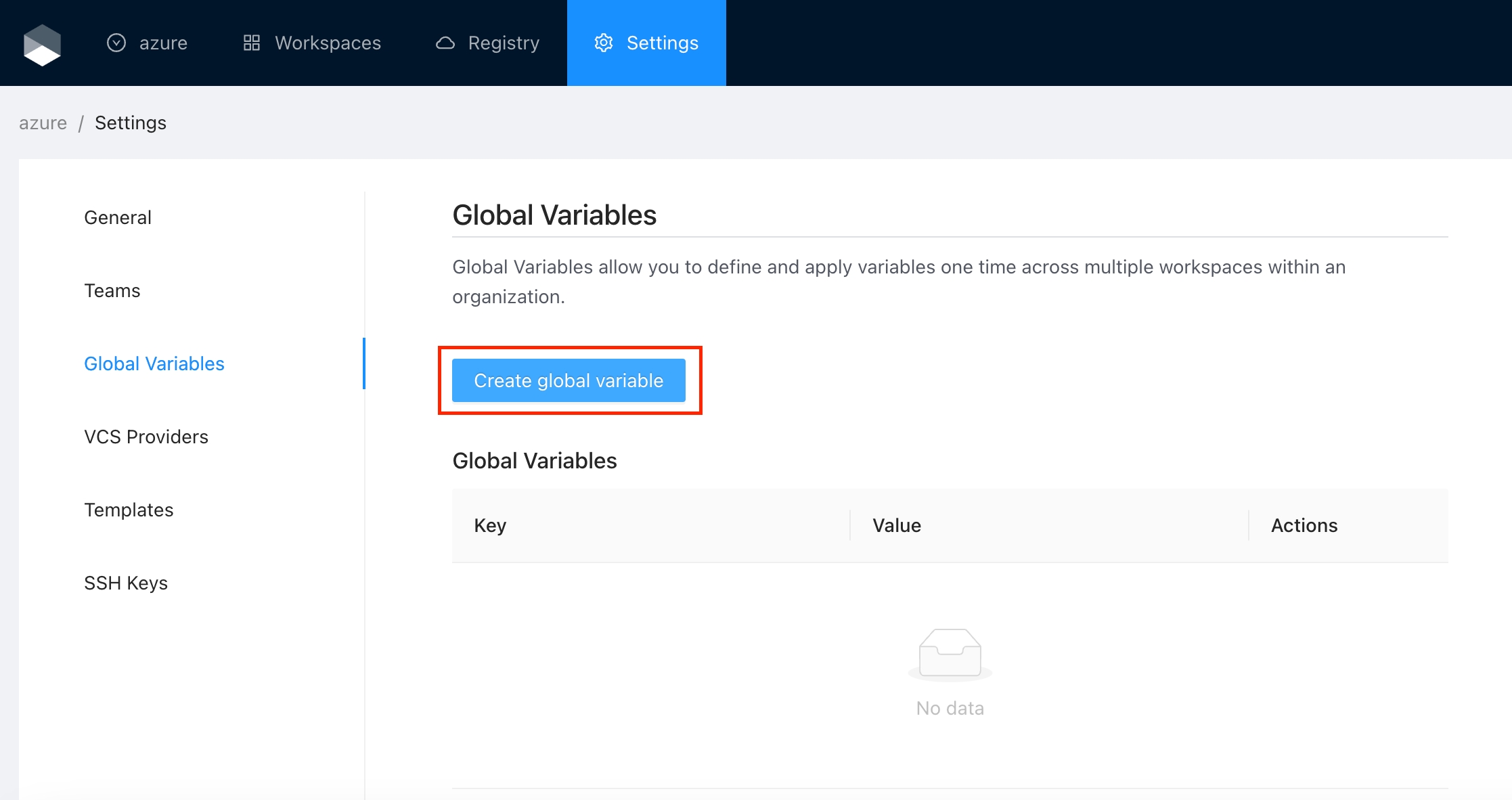

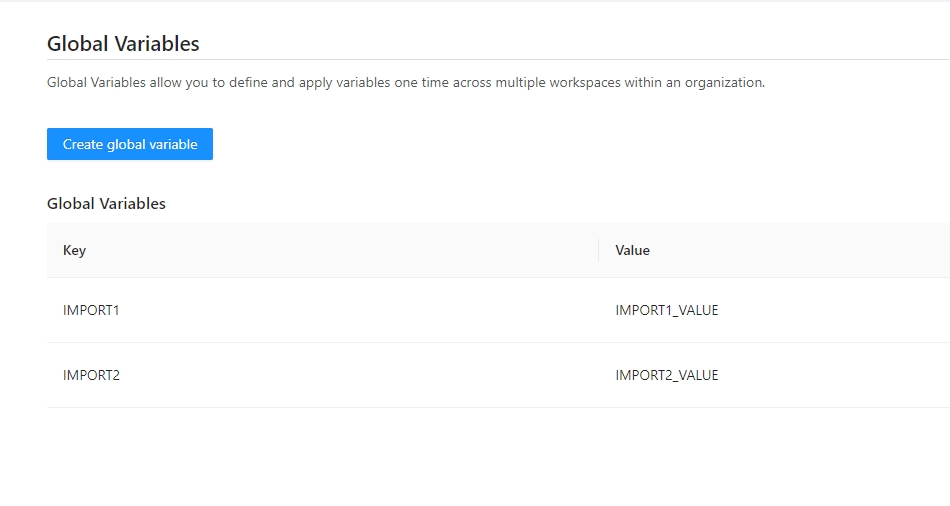

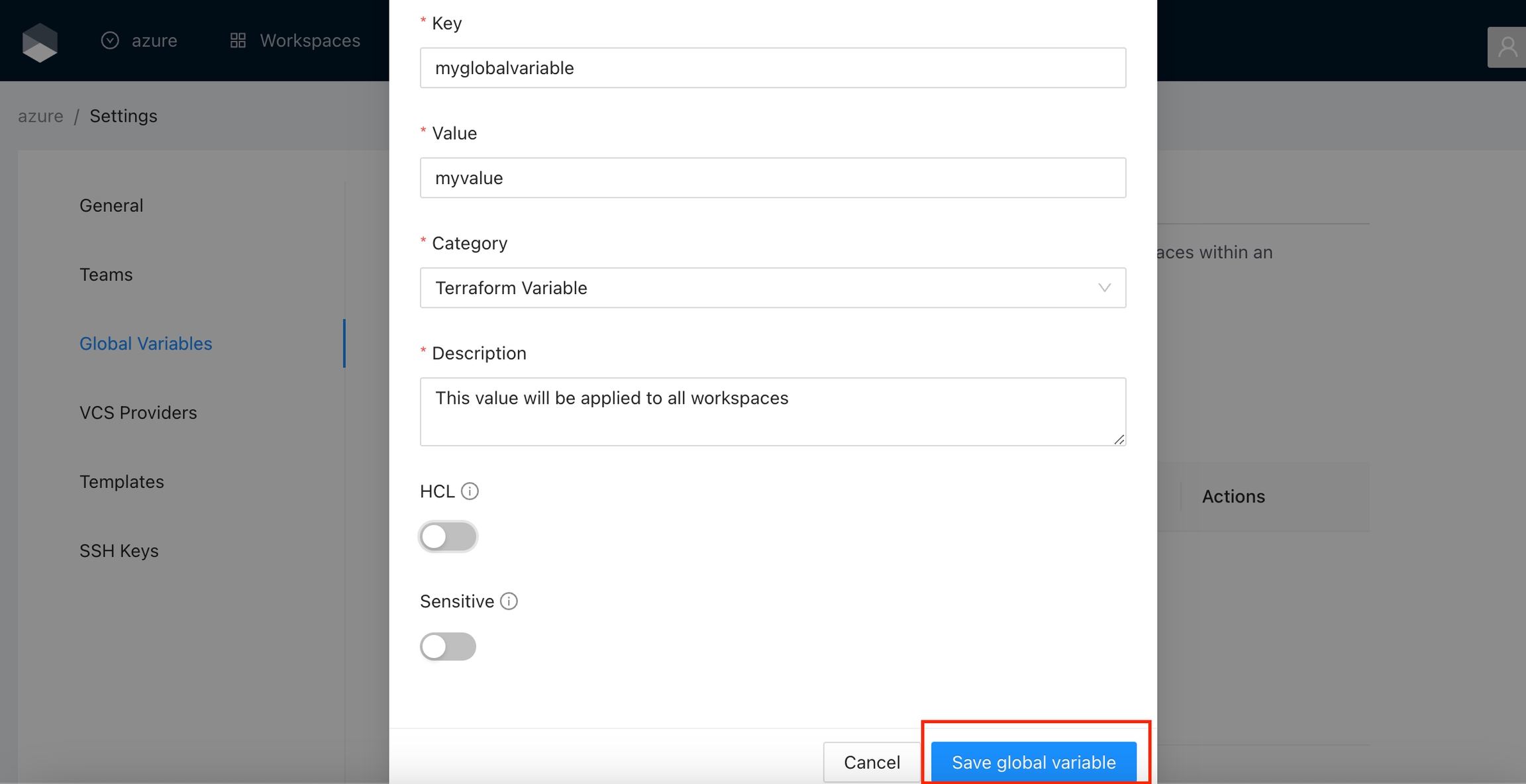

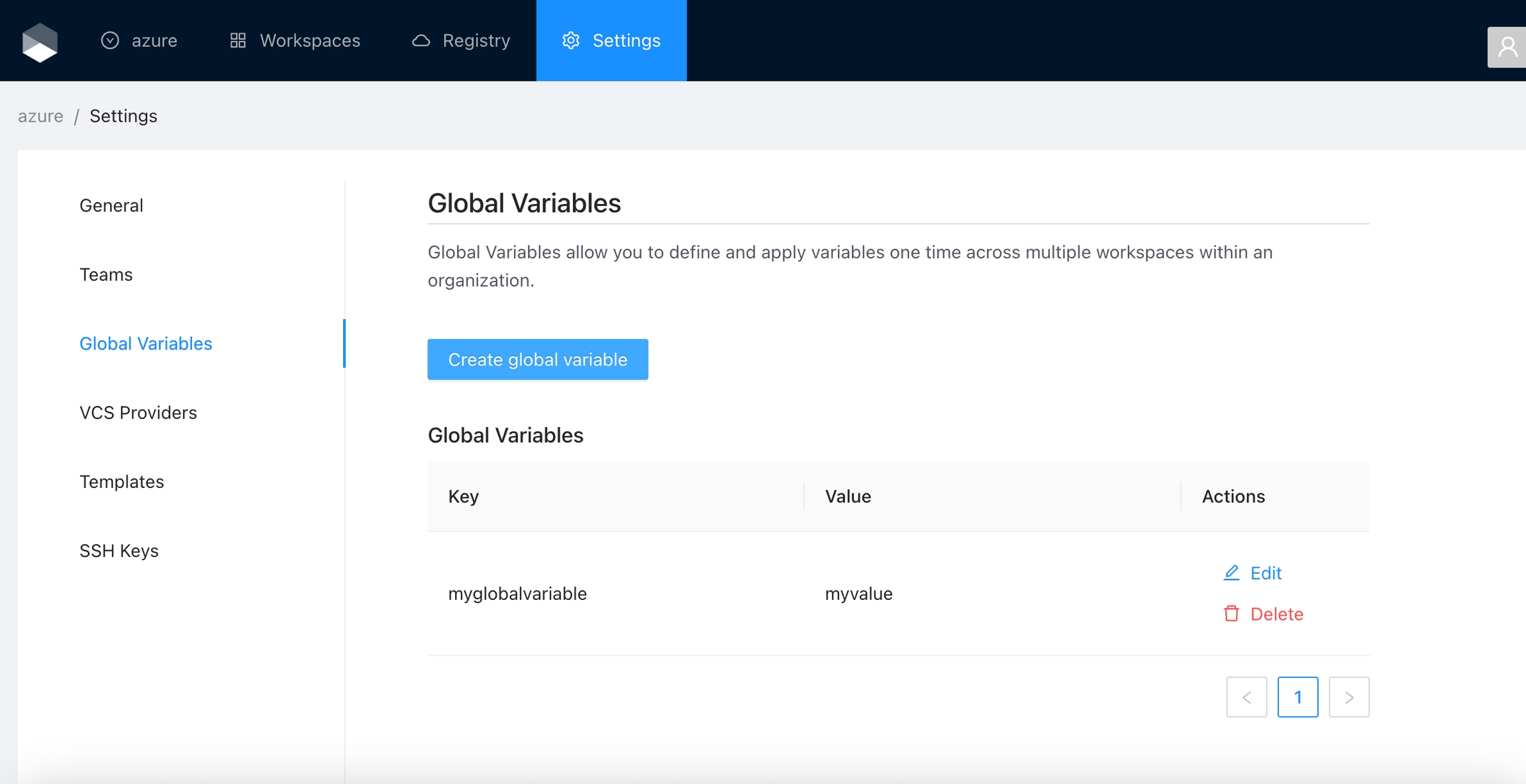

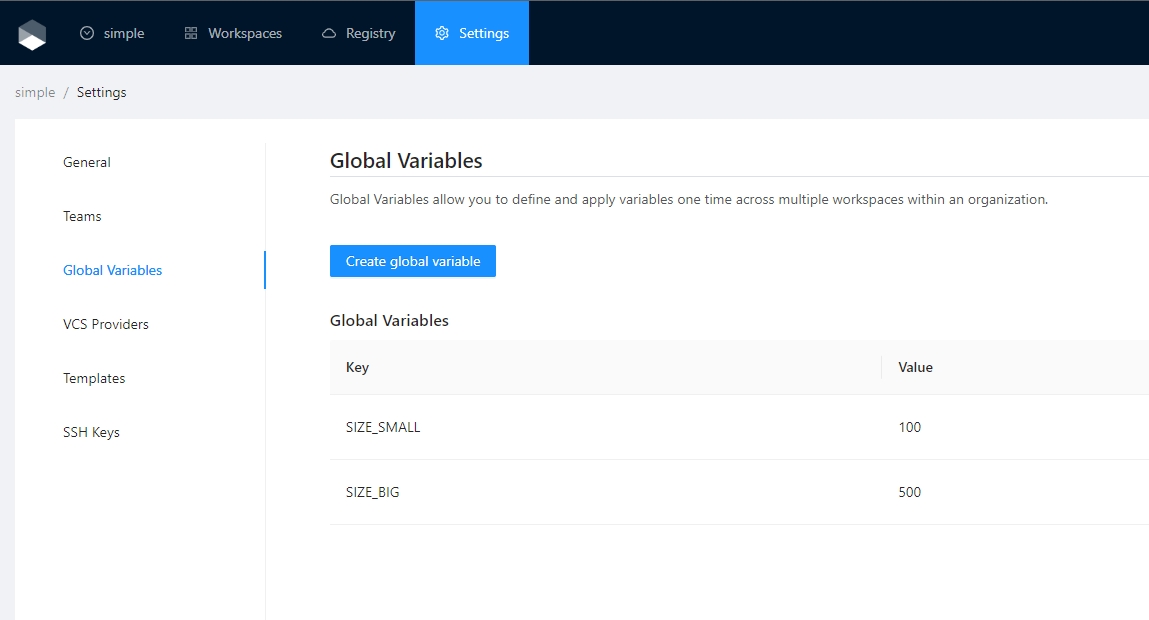

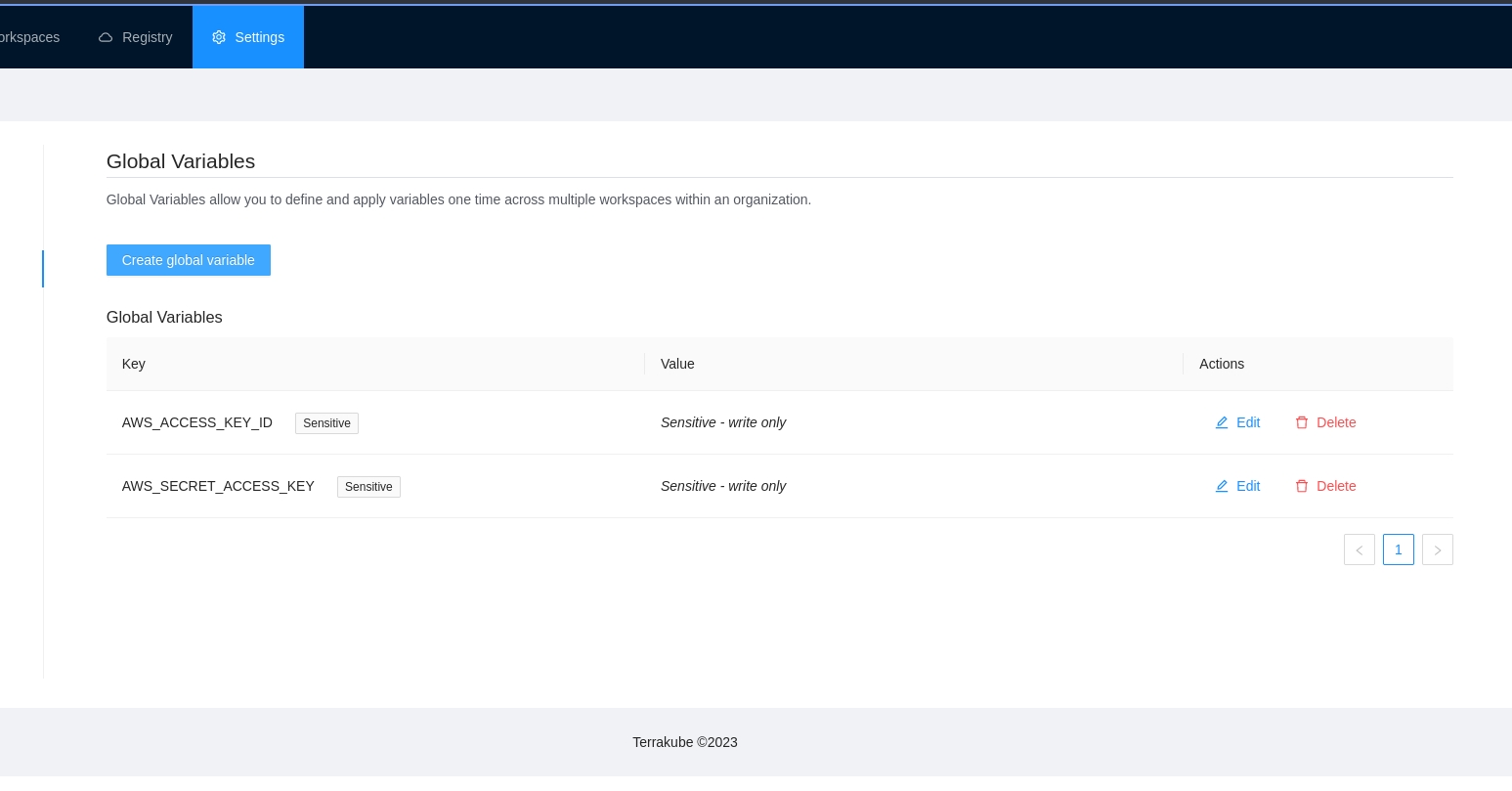

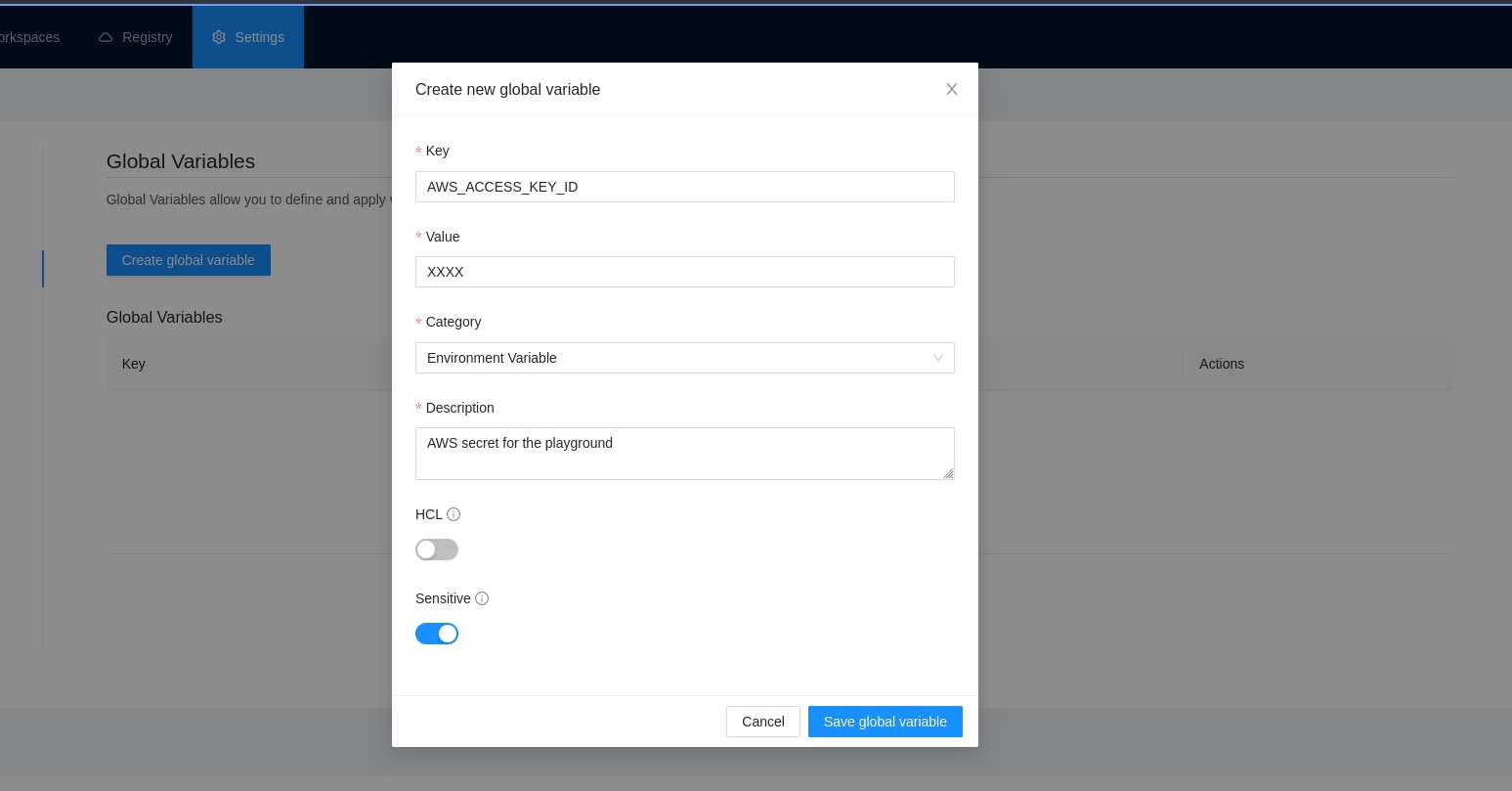

Global Variables allow you to define and apply variables one time across all workspaces within an organization. For example, you could define a global variable of provider credentials and automatically apply it to all workspaces.

Workspace variables have priority over global variables if the same name is used.
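The precedence rule can be pictured as a simple merge. The sketch below is only an illustration under stated assumptions: `resolveVariables` is a hypothetical helper, not part of Terrakube, and variables are modeled as plain name/value objects.

```javascript
// Hypothetical illustration of the precedence rule; not Terrakube's implementation.
// Assumes each variable set is a plain object of name -> value.
function resolveVariables(globalVars, workspaceVars) {
  // Later spreads win, so a workspace variable replaces a global one with the same name.
  return { ...globalVars, ...workspaceVars };
}

const effective = resolveVariables(
  { ARM_TENANT_ID: "global-tenant", REGION: "eastus" },
  { REGION: "westeurope" }
);
console.log(effective.REGION); // the workspace value is used
```

Global variables that are not redefined in the workspace, such as `ARM_TENANT_ID` in this example, pass through unchanged.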

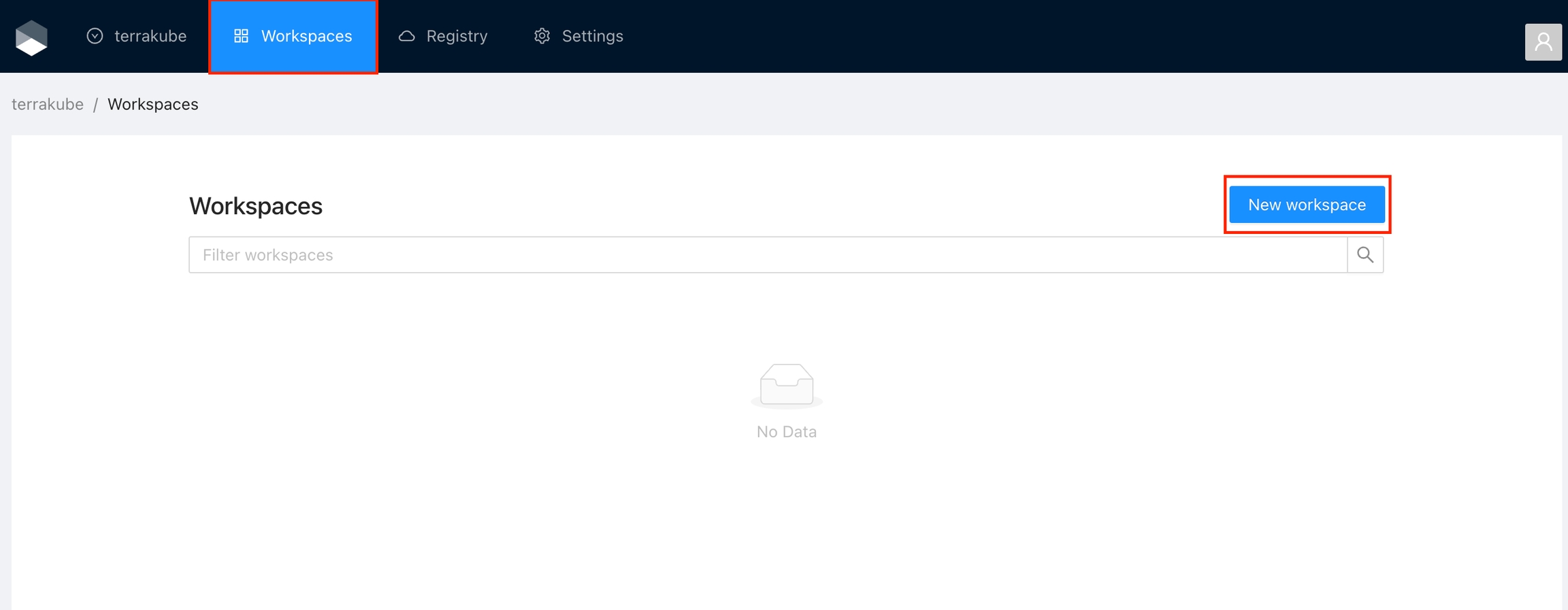

When working with Terraform at the enterprise level, you need to organize your infrastructure in different collections. Terrakube manages infrastructure collections with workspaces. A workspace contains everything Terraform needs to manage a given collection of infrastructure, so you can easily organize all your resources based on your requirements.

For example, you can create one workspace for the dev environment and a different workspace for production. Or you can separate your workspaces based on your resource types, so you can create one workspace for all your SQL databases and another workspace for all your VMs.

You can create unlimited workspaces inside each Terrakube Organization. In this section:

Terrakube supports sharing the Terraform state between workspaces using the following data block.

For example it can be used in the following way:

Terrakube allows you to customize the UI for each step inside your templates using standard HTML. So you can render any kind of content extracted from your Job execution in the Terrakube UI.

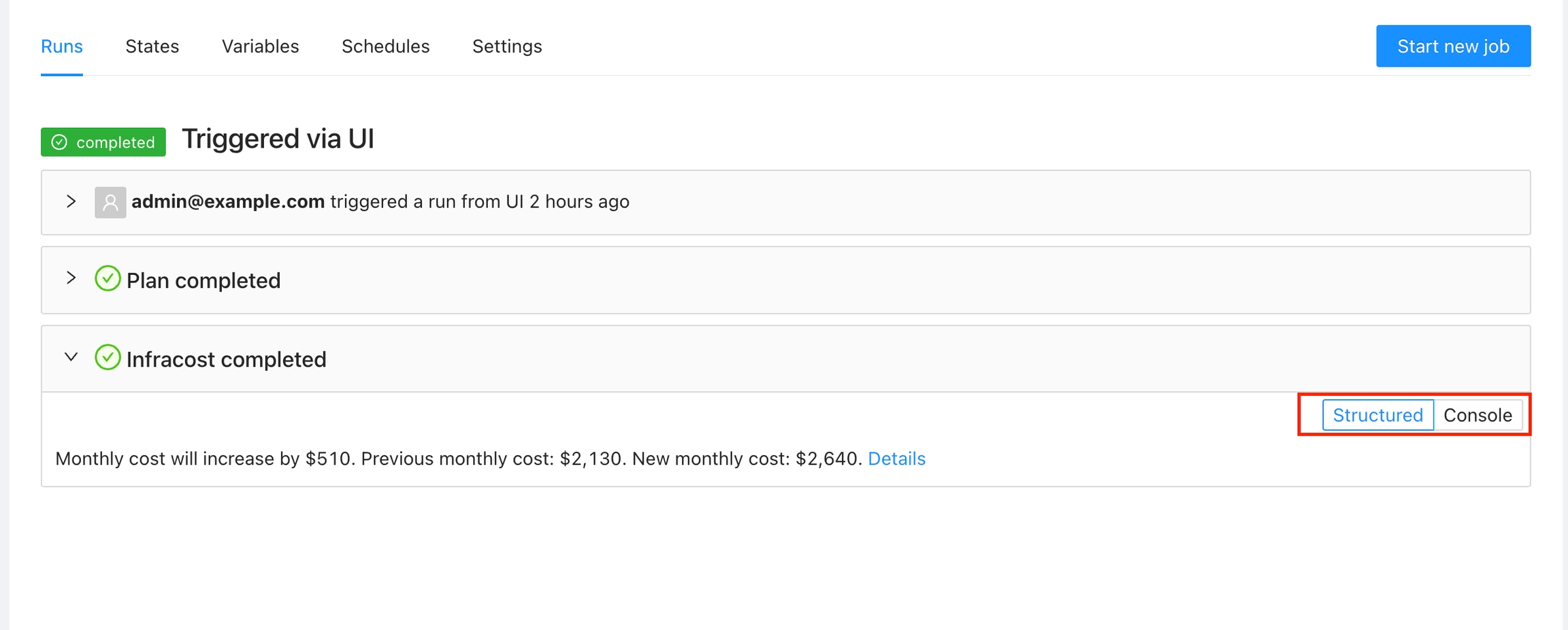

For example you can present the costs using Infracost in a friendly way:

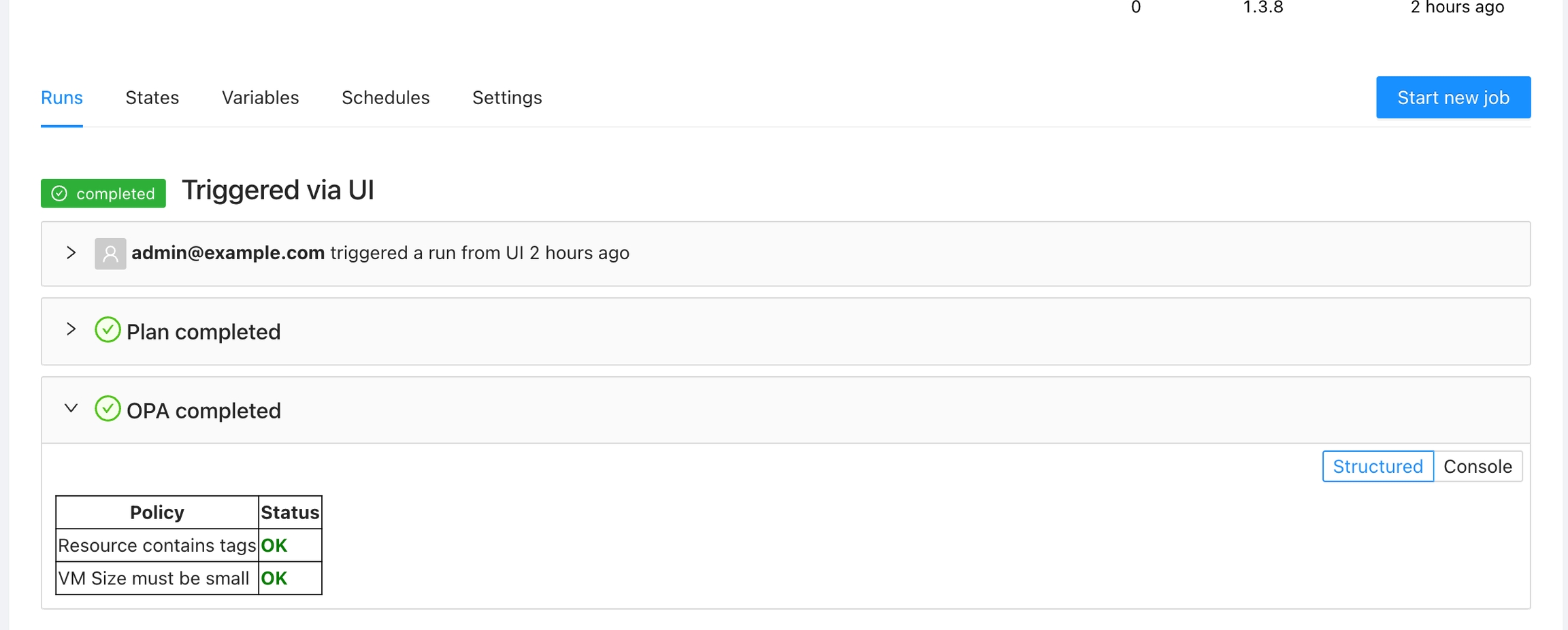

Or present a table with the OPA policies

In order to use UI templates you will need to save the HTML for each template step using the Context extension. Terrakube expects the UI templates in the following format.

In the above example the 100 property in the JSON refers to the step number inside your template. In order to save this value from the template you can use the Context extension. For example:

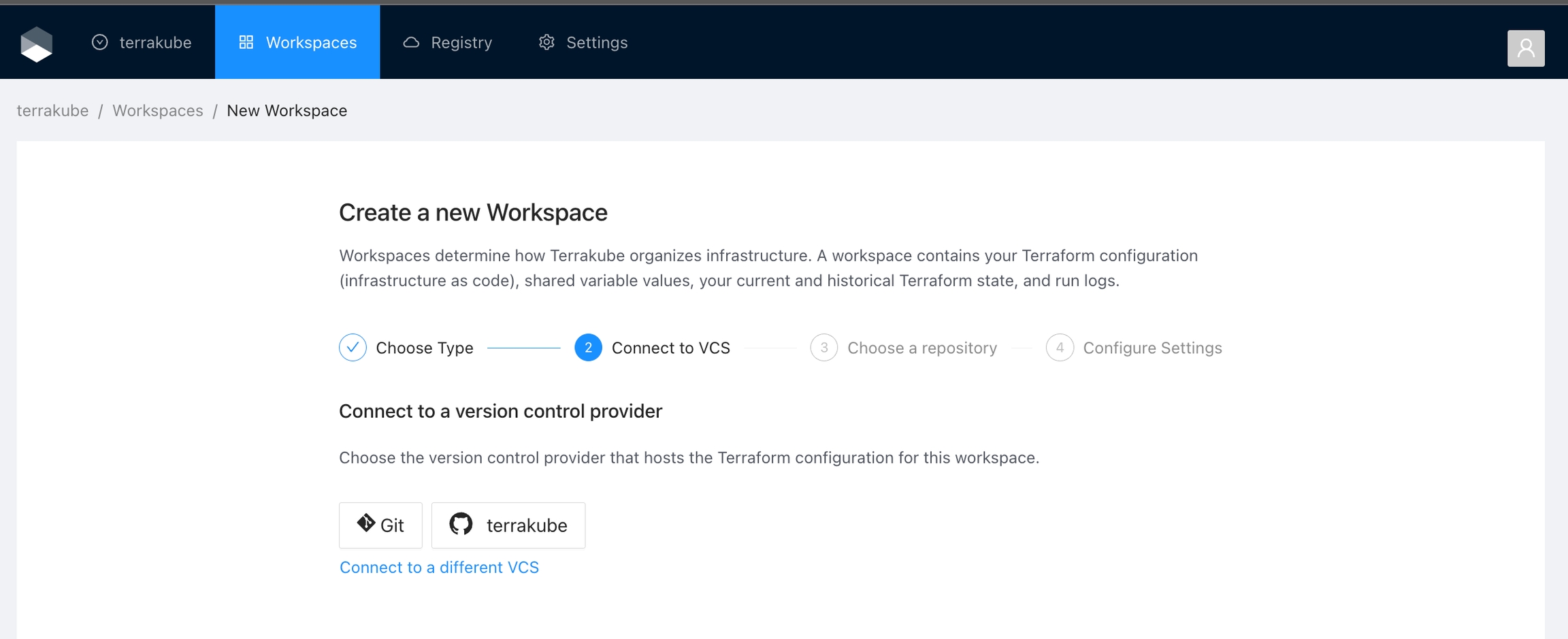
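To make the expected shape concrete, here is a minimal sketch that builds the JSON string stored in the `terrakubeUI` property. `buildUiTemplate` is a hypothetical helper written for this illustration; only the resulting shape (step number mapped to HTML) comes from the format described above.

```javascript
// Hypothetical helper for illustration; only the resulting JSON shape comes from the docs.
function buildUiTemplate(htmlByStep) {
  const template = {};
  for (const [step, html] of Object.entries(htmlByStep)) {
    // Keys are the step numbers used in the template flow, e.g. 100 or 200.
    if (!/^\d+$/.test(step)) {
      throw new Error(`Step key must be numeric, got "${step}"`);
    }
    template[step] = html;
  }
  // The value saved in the job context is a JSON string.
  return JSON.stringify(template);
}

console.log(buildUiTemplate({ 100: "<span>Simple Text</span>" }));
```

In a real template the resulting string would be passed to the Context extension's `saveProperty("terrakubeUI", ...)` call from a Groovy step.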

Terrakube empowers collaboration between different teams in your organization. To achieve this, you can integrate Terrakube with your version control system (VCS) provider. Although Terrakube can be used with public Git repositories or with private Git repositories using SSH, connecting to your Terraform code through a VCS provider is the preferred method. This allows for a more streamlined and secure workflow, as well as easier management of access control and permissions.

Terrakube supports the following VCS providers:

Terrakube uses webhooks to monitor new commits. This feature is not available for SSH or Azure DevOps.

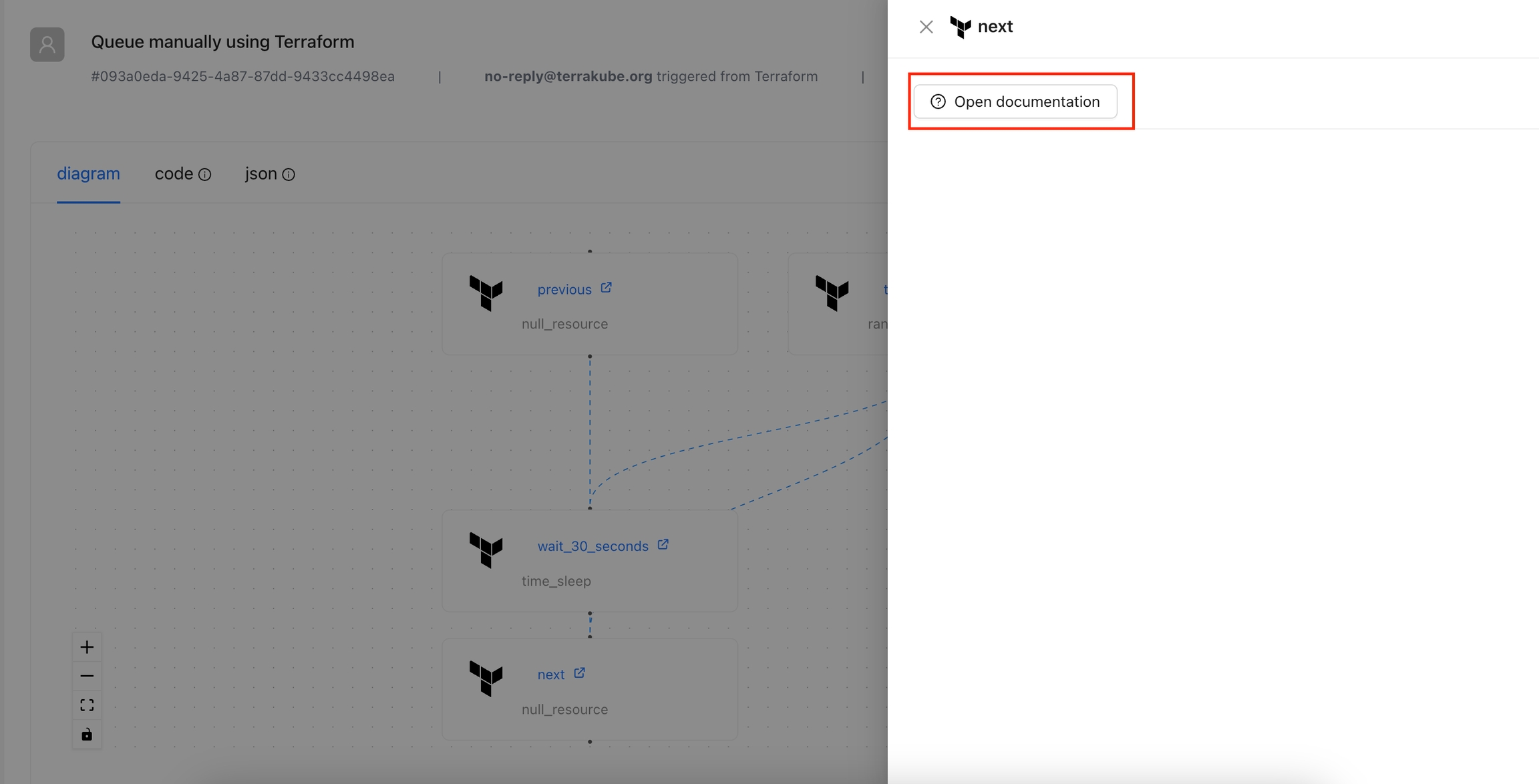

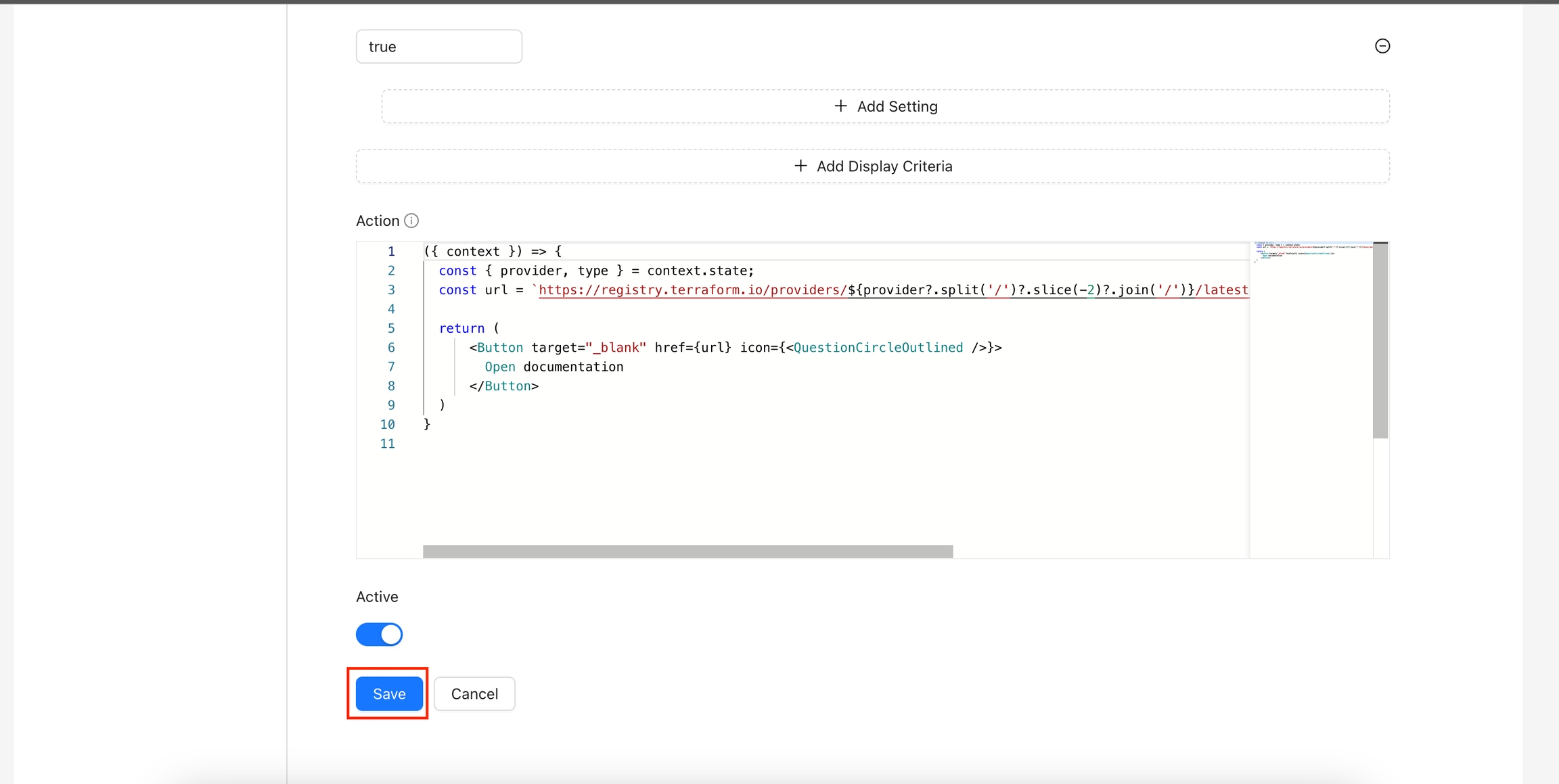

The Open Documentation action is designed to provide quick access to the Terraform registry documentation for a specific provider and resource type. By using the context of the current state, this action constructs the appropriate URL and presents it as a clickable button.

In this section:

The CLI is not compatible with Terrakube 2.X. It needs to be updated, and there is an open issue for this.

terrakube cli is Terrakube on the command line. It brings organizations, workspaces and other Terrakube concepts to the terminal.

In this section:

git clone https://github.com/AzBuilder/terrakube.git

cd terrakube/telemetry-compose

docker-compose up -d

When new commits are added to a branch, Terrakube workspaces based on that branch will automatically initiate a Terraform job. Terrakube will use the "Plan and apply" template by default, but you can specify a different Template during the Workspace creation.

When you specify a directory in the Workspace, Terrakube will run the job only if a file changes in that directory.
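The directory rule can be sketched as a path-prefix check. This is an assumption-laden illustration, not Terrakube's code: `shouldTriggerJob` is a hypothetical function, the list of changed files is assumed to contain repository-relative paths, and the real matching rules may differ.

```javascript
// Hypothetical sketch; Terrakube's real matching rules may differ.
// changedFiles: repository-relative paths from a commit (assumed input shape).
// workspaceDir: the directory configured in the workspace, e.g. "/networking".
function shouldTriggerJob(changedFiles, workspaceDir) {
  // Normalize "/networking/" and "networking" to the same prefix.
  const dir = workspaceDir.replace(/^\/+|\/+$/g, "");
  // An empty directory means the repository root, so any changed file counts.
  if (dir === "") return changedFiles.length > 0;
  // Match only files inside the directory, not siblings sharing the same prefix.
  return changedFiles.some((file) => file.startsWith(dir + "/"));
}

console.log(shouldTriggerJob(["networking/main.tf"], "/networking"));
console.log(shouldTriggerJob(["compute/main.tf"], "/networking"));
```

Appending `/` before comparing avoids treating a sibling folder such as `networking-old` as part of `networking`.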

GET {{terrakubeApi}}/context/v1/{{jobId}}

import Context

new Context("$terrakubeApi", "$terrakubeToken", "$jobId", "$workingDirectory").saveFile("infracost", "infracost.json")

new Context("$terrakubeApi", "$terrakubeToken", "$jobId", "$workingDirectory").saveProperty("slackId", "1234567890")

data "terraform_remote_state" "remote_creation_time" {

backend = "remote"

config = {

organization = "simple"

hostname = "8080-azbuilder-terrakube-vg8s9w8fhaj.ws-us102.gitpod.io"

workspaces = {

name = "simple_tag1"

}

}

}

data "terraform_remote_state" "remote_creation_time" {

backend = "remote"

config = {

organization = "simple"

hostname = "8080-azbuilder-terrakube-vg8s9w8fhaj.ws-us102.gitpod.io"

workspaces = {

name = "simple_tag1"

}

}

}

resource "null_resource" "previous" {}

resource "time_sleep" "wait_30_seconds" {

depends_on = [null_resource.previous]

create_duration = data.terraform_remote_state.remote_creation_time.outputs.creation_time

}

resource "null_resource" "next" {

depends_on = [time_sleep.wait_30_seconds]

}

GitHub Authentication

GitLab Authentication

OIDC

LDAP

Keycloak

etc.

The Terrakube helm chart uses Dex as a dependency, so you can quickly implement it using any existing Dex configuration.

Make sure to update the Dex configuration when deploying Terrakube in a real Kubernetes environment. By default it uses a very basic OpenLDAP with some sample data. To disable it, update security.useOpenLDAP in your terrakube.yaml

To customize the DEX setup just create a simple terrakube.yaml and update the configuration like the following example:

Dex configuration examples can be found here.

flow:

- type: "terraformPlan"

step: 100

commands:

- runtime: "GROOVY"

priority: 100

after: true

script: |

import Infracost

String credentials = "version: \"0.1\"\n" +

"api_key: $INFRACOST_KEY \n" +

"pricing_api_endpoint: https://pricing.api.infracost.io"

new Infracost().loadTool(

"$workingDirectory",

"$bashToolsDirectory",

"0.10.12",

credentials)

"Infracost Download Completed..."

- runtime: "BASH"

priority: 200

after: true

script: |

terraform show -json terraformLibrary.tfPlan > plan.json

INFRACOST_ENABLE_DASHBOARD=true infracost breakdown --path plan.json --format json --out-file infracost.json

- runtime: "GROOVY"

priority: 300

after: true

script: |

import Context

new Context("$terrakubeApi", "$terrakubeToken", "$jobId", "$workingDirectory").saveFile("infracost", "infracost.json")

"Save context completed..."

# UPDATE THE DEX CLIENT ID AND SCOPE

security:

dexClientId: "example-app"

dexClientScope: "email openid profile offline_access groups"

useOpenLDAP: false

# UPDATE THE DEX CONFIG

dex:

config:

issuer: http://terrakube-api.minikube.net/dex

storage:

type: memory

web:

http: 0.0.0.0:5556

allowedOrigins: ['*']

skipApprovalScreen: true

oauth2:

responseTypes: ["code", "token", "id_token"]

connectors:

- type: ldap

name: OpenLDAP

id: ldap

config:

# The following configurations seem to work with OpenLDAP:

#

# 1) Plain LDAP, without TLS:

host: terrakube-openldap-service:389

insecureNoSSL: true

#

# 2) LDAPS without certificate validation:

#host: localhost:636

#insecureNoSSL: false

#insecureSkipVerify: true

#

# 3) LDAPS with certificate validation:

#host: YOUR-HOSTNAME:636

#insecureNoSSL: false

#insecureSkipVerify: false

#rootCAData: 'CERT'

# ...where CERT="$( base64 -w 0 your-cert.crt )"

# This would normally be a read-only user.

bindDN: cn=admin,dc=example,dc=org

bindPW: admin

usernamePrompt: Email Address

userSearch:

baseDN: ou=People,dc=example,dc=org

filter: "(objectClass=person)"

username: mail

# "DN" (case sensitive) is a special attribute name. It indicates that

# this value should be taken from the entity's DN not an attribute on

# the entity.

idAttr: DN

emailAttr: mail

nameAttr: cn

groupSearch:

baseDN: ou=Groups,dc=example,dc=org

filter: "(objectClass=groupOfNames)"

userMatchers:

# A user is a member of a group when their DN matches

# the value of a "member" attribute on the group entity.

- userAttr: DN

groupAttr: member

# The group name should be the "cn" value.

nameAttr: cn

staticClients:

- id: example-app

redirectURIs:

- 'http://terrakube-ui.minikube.net'

- '/device/callback'

- 'http://localhost:10000/login'

- 'http://localhost:10001/login'

name: 'example-app'

public: true

Root URL: Terrakube's UI URL.

Valid Redirect URIs: set it to *

clientSecret: must be the secret in the Credentials tab in Keycloak.

redirectURI: has the form [http|https]://<TERRAKUBE_API>/dex/callback. Notice this is the Terrakube API URL and not the UI URL.

insecureEnableGroups: this is required to enable groups claims. This way groups defined in Keycloak are brought by Terrakube's Dex connector.

Allow members to create and administrate all within the organization

| Field | Description |
| --- | --- |
| Name | Must be a valid group based on the Dex connector you are using to manage users and groups. For example, if you are using Azure Active Directory, you must use a valid Active Directory group like TERRAKUBE_ADMIN; if you are using GitHub, the format should be MyGithubOrg:TERRAKUBE_ADMIN |
| Manage Workspaces | Allow members to create and administer all workspaces within the organization |
| Manage Modules | Allow members to create and administer all modules within the organization |
| Manage Providers | Allow members to create and administer all providers within the organization |
| Manage Templates | Allow members to create and administer all templates within the organization |

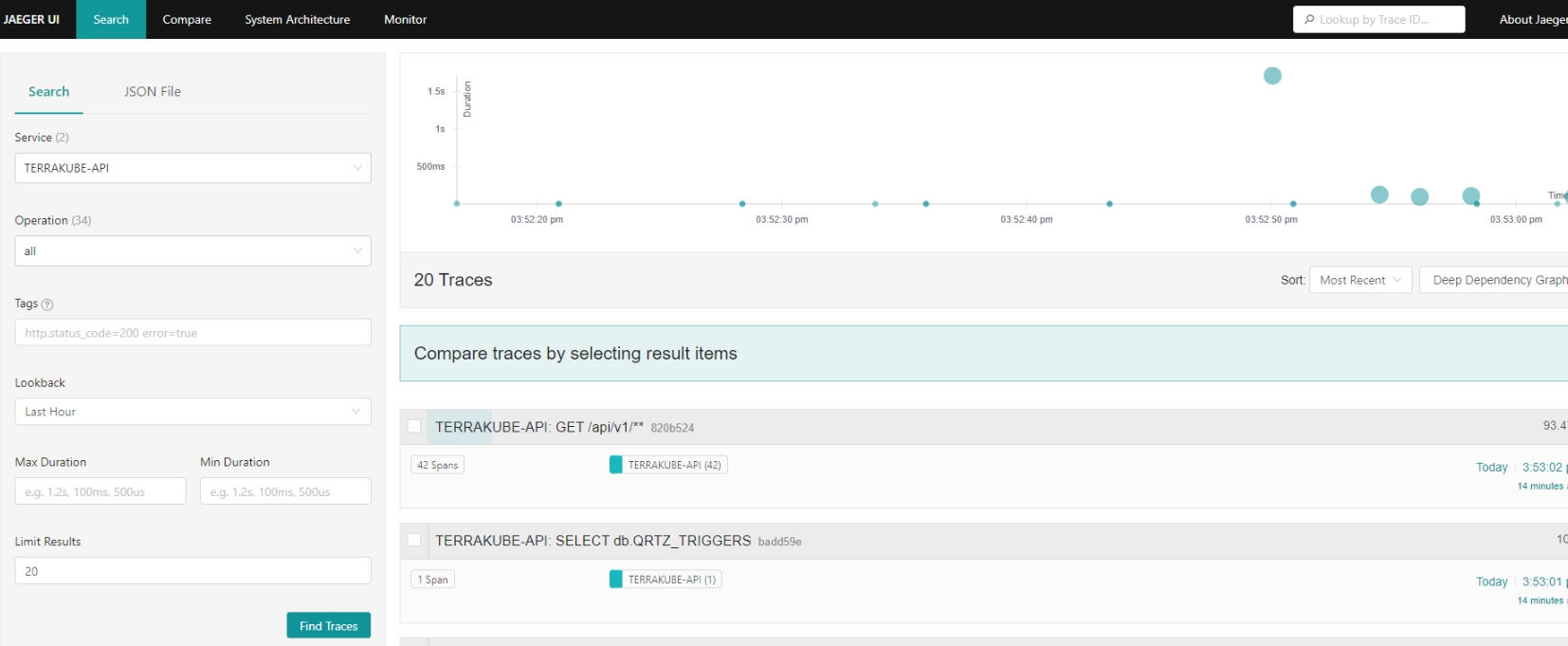

Manage

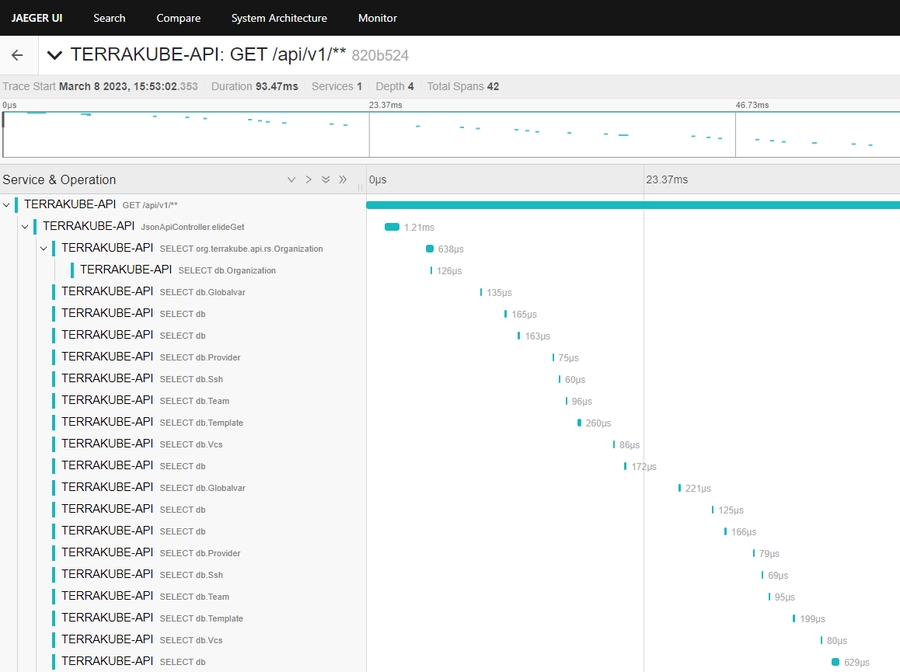

Now we can go to the Jaeger UI to see if everything is working as expected.

There are several different configuration options, for example:

The Jaeger exporter. This exporter uses gRPC for its communications protocol.

| System property | Environment variable | Description |
| --- | --- | --- |
| otel.traces.exporter=jaeger | OTEL_TRACES_EXPORTER=jaeger | Select the Jaeger exporter |
| otel.exporter.jaeger.endpoint | OTEL_EXPORTER_JAEGER_ENDPOINT | The Jaeger gRPC endpoint to connect to. Default is http://localhost:14250. |
| otel.exporter.jaeger.timeout | OTEL_EXPORTER_JAEGER_TIMEOUT | The maximum waiting time, in milliseconds, allowed to send each batch. Default is 10000. |

The Zipkin exporter. It sends JSON in Zipkin format to a specified HTTP URL.

| System property | Environment variable | Description |
| --- | --- | --- |
| otel.traces.exporter=zipkin | OTEL_TRACES_EXPORTER=zipkin | Select the Zipkin exporter |
| otel.exporter.zipkin.endpoint | OTEL_EXPORTER_ZIPKIN_ENDPOINT | The Zipkin endpoint to connect to. Default is http://localhost:9411/api/v2/spans. Currently only HTTP is supported. |

The Prometheus exporter.

| System property | Environment variable | Description |
| --- | --- | --- |
| otel.metrics.exporter=prometheus | OTEL_METRICS_EXPORTER=prometheus | Select the Prometheus exporter |
| otel.exporter.prometheus.port | OTEL_EXPORTER_PROMETHEUS_PORT | The local port used to bind the Prometheus metric server. Default is 9464. |
| otel.exporter.prometheus.host | OTEL_EXPORTER_PROMETHEUS_HOST | The local address used to bind the Prometheus metric server. Default is 0.0.0.0. |

For more information, please check the official OpenTelemetry documentation.

Open Telemetry Example

One small example to show how to use open telemetry with docker compose can be found in the following URL:

Once you are in the desired organization, click the Settings button, then in the left menu select the Global Variables option and click the Add global variable button

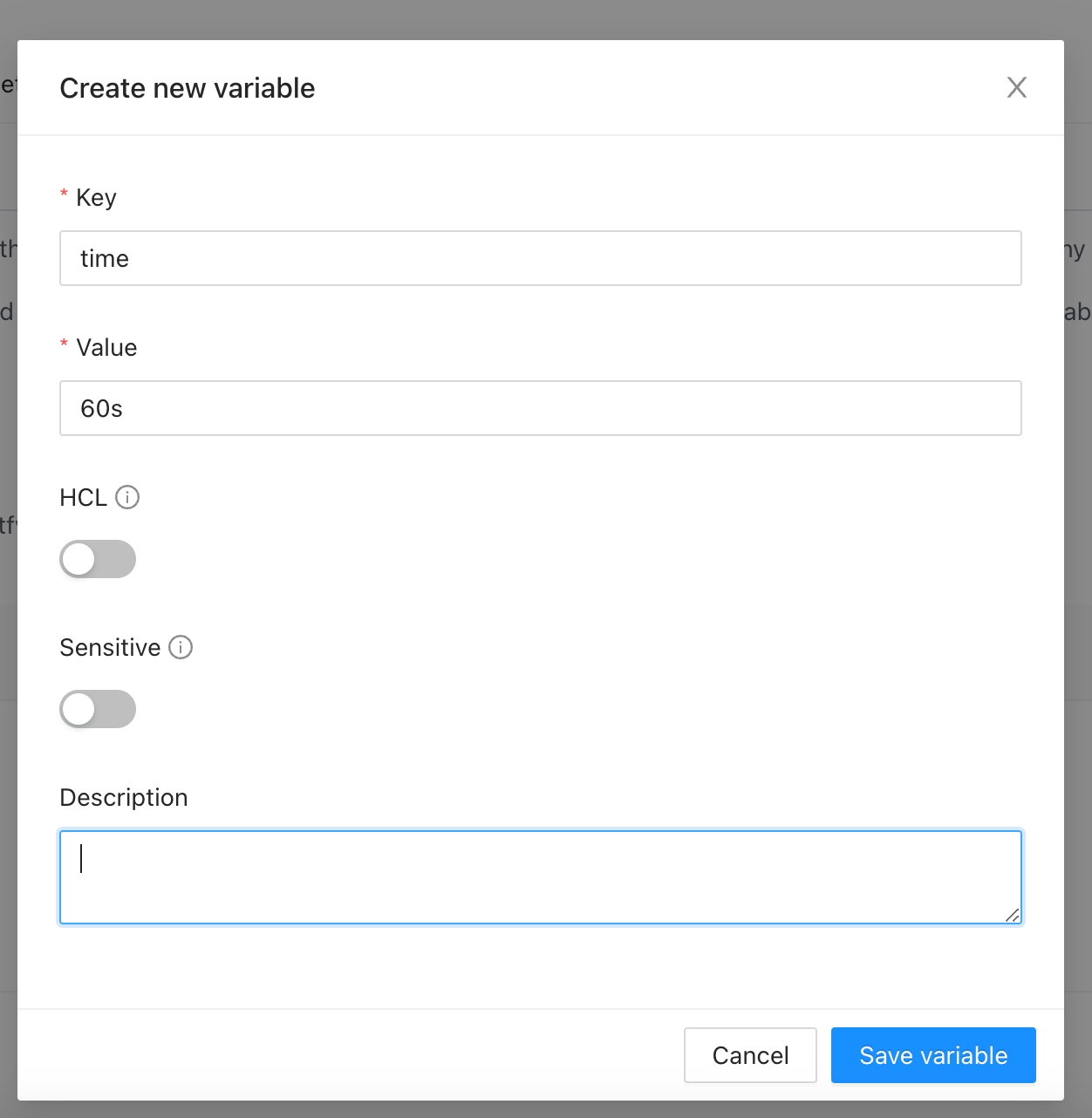

In the popup, provide the required values. Use the below table as reference:

| Field | Description |
| --- | --- |
| Key | Unique variable name |
| Value | Key value |
| Category | Category could be Terraform Variable or Environment Variable |
| Description | Free text to document the reason for this global variable |
| HCL | Parse this field as HashiCorp Configuration Language (HCL). This allows you to interpolate values at runtime. |

Finally click the Save global variable button and the variable will be created

You will see the new global variable in the list. And now the variable will be injected in all the workspaces within the organization

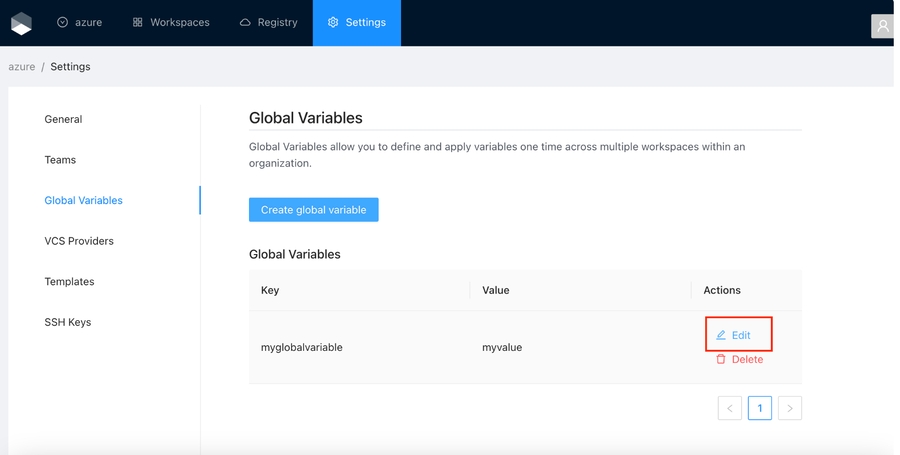

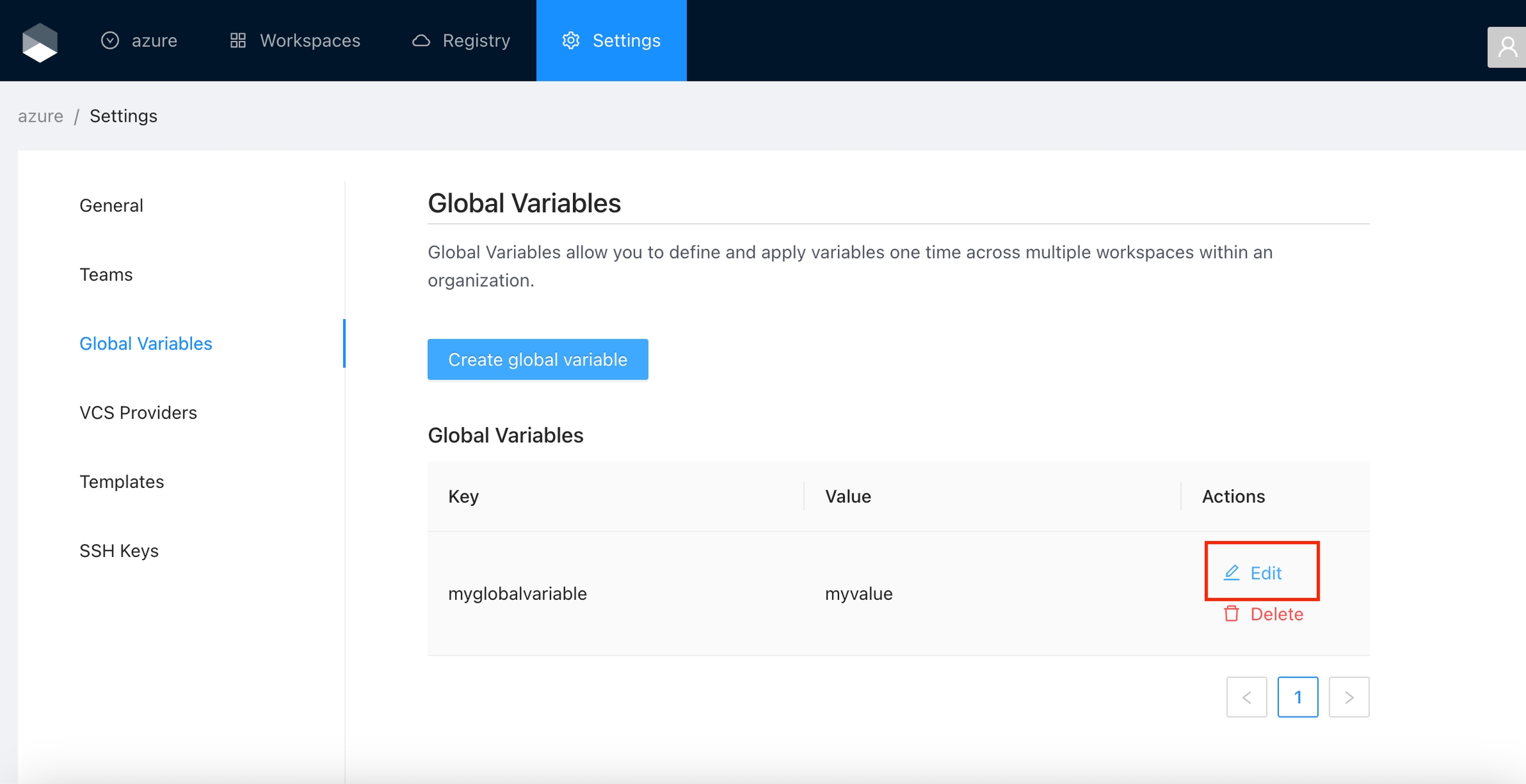

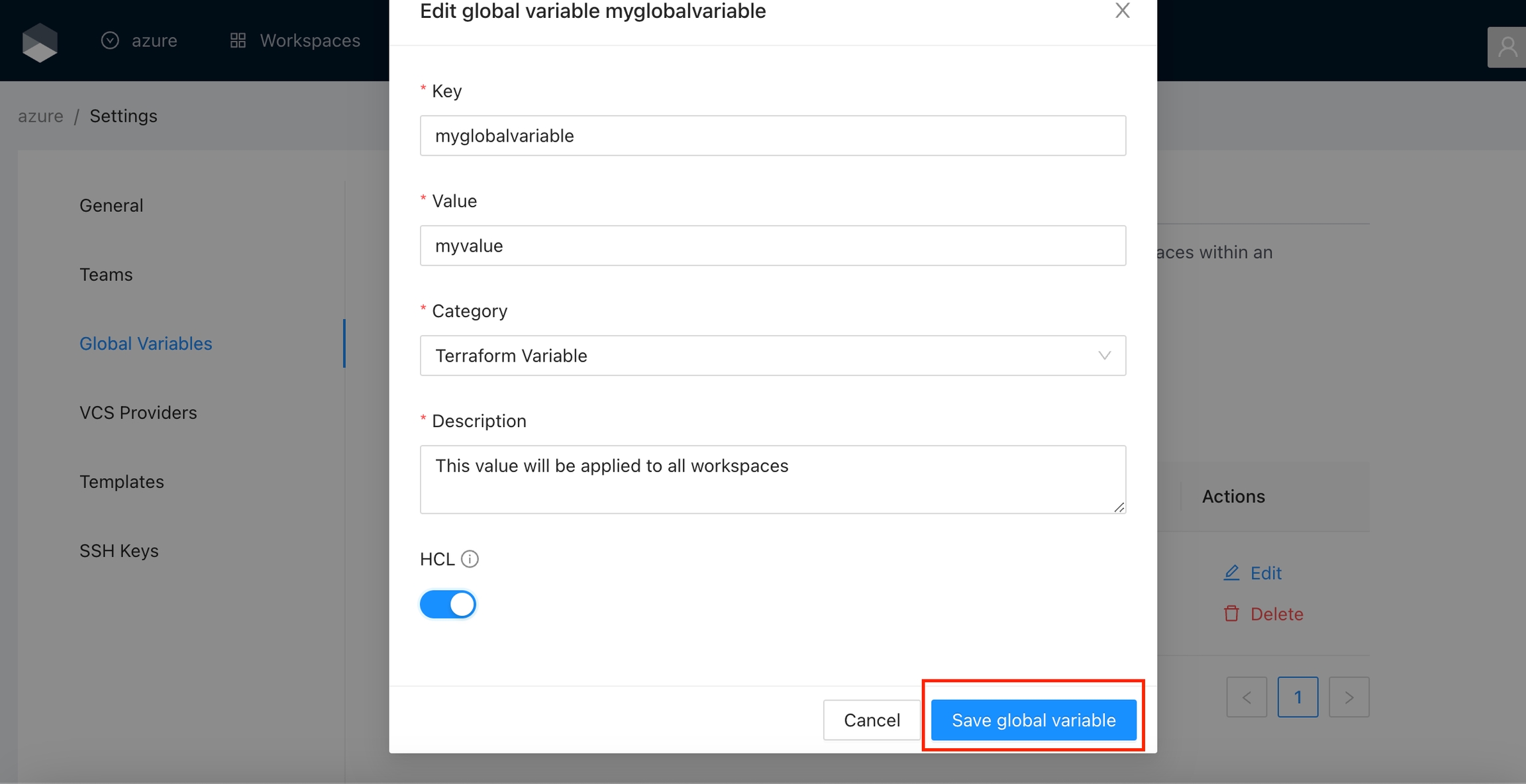

Click the Edit button next to the global variable you want to edit.

Change the fields you need and click the Save global variable button

For security, you can't change the Sensitive field. So if you want to change one global variable to sensitive you must delete the existing variable and create a new one

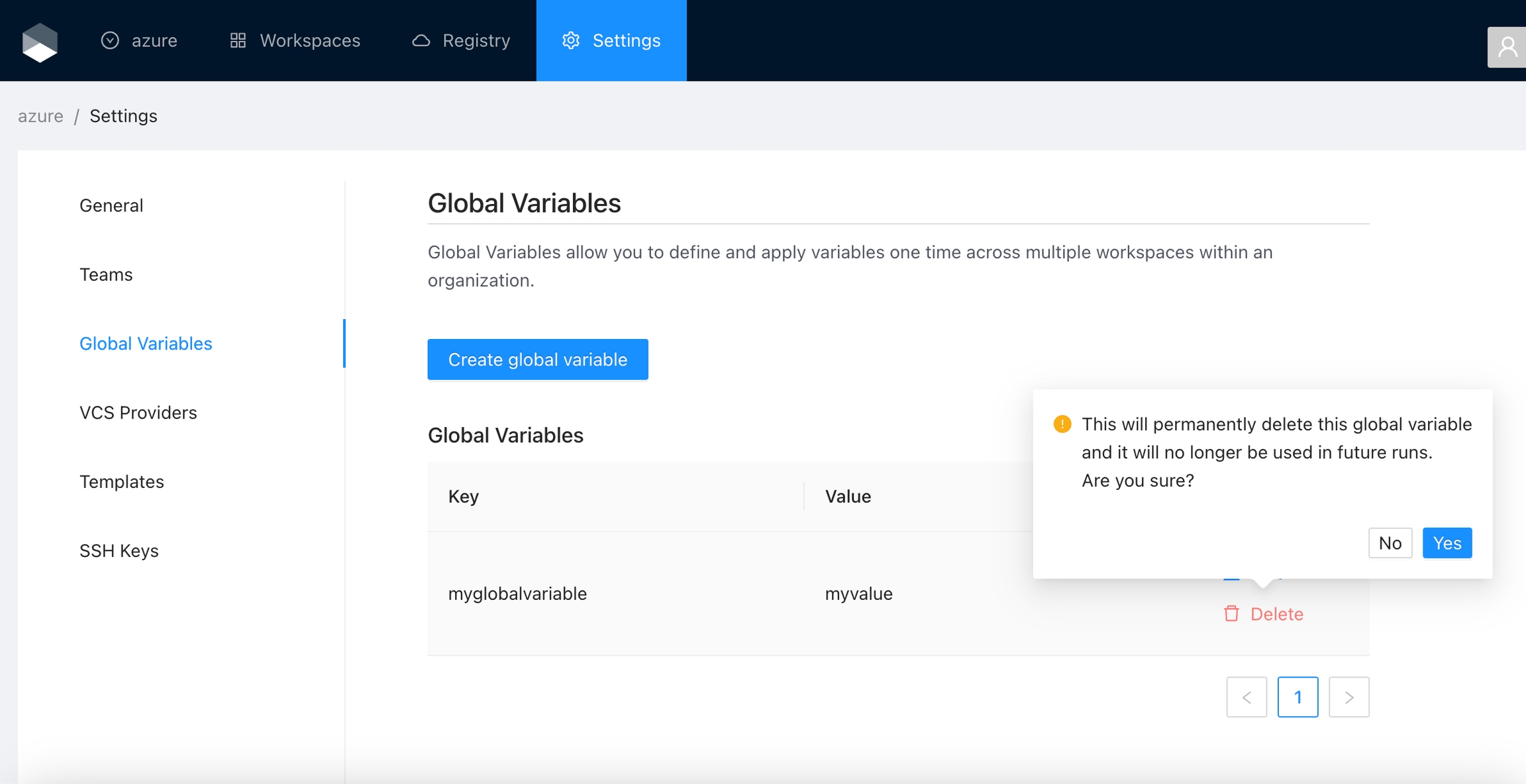

Click the Delete button next to the global variable you want to delete, and then click the Yes button to confirm the deletion. Please note that the deletion is irreversible.

Navigate to the Workspace Overview or the Visual State and click a resource name.

In the Resource Drawer, click the Open documentation button

You will be able to see the documentation for that resource in the Terraform registry

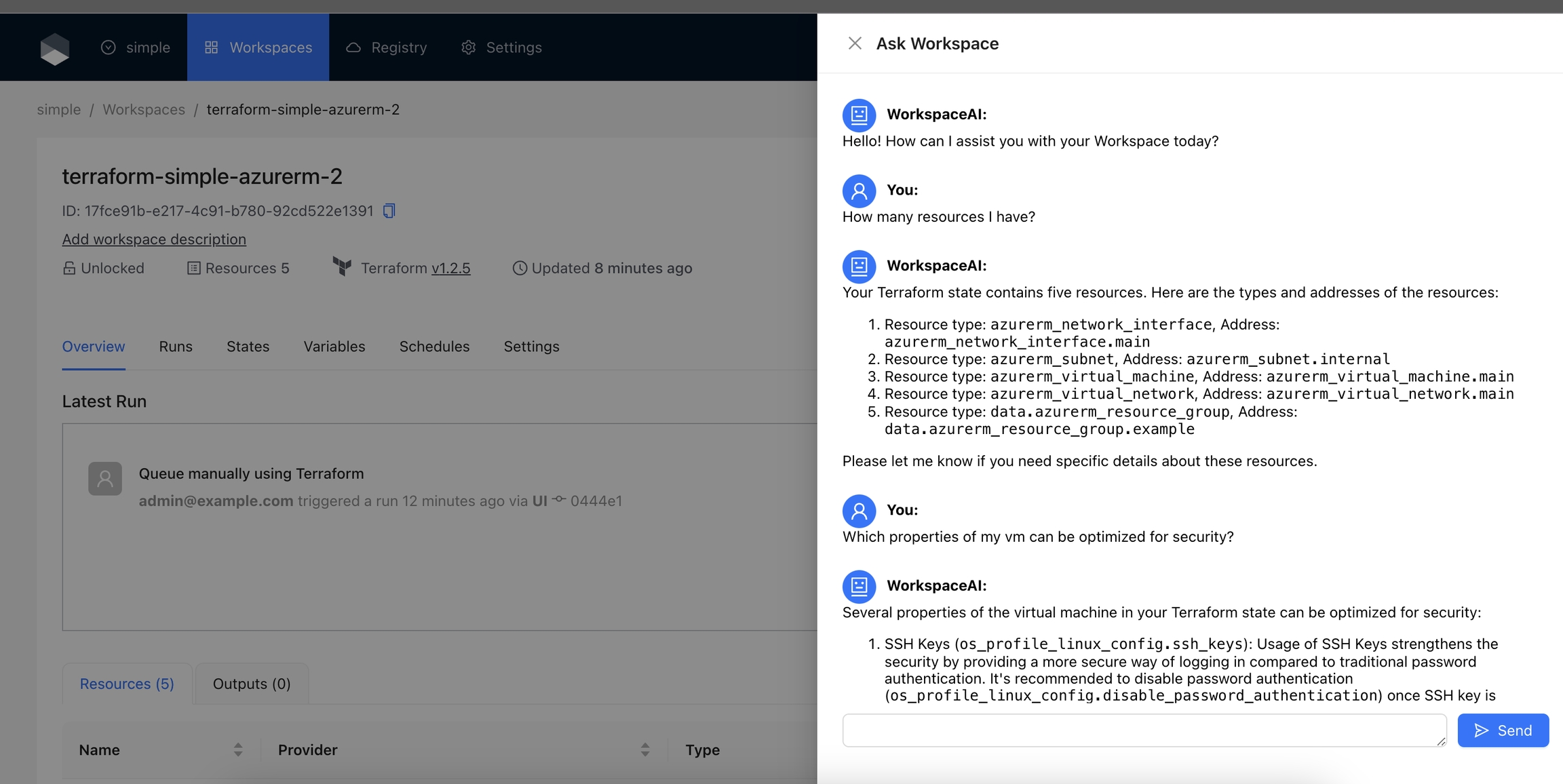

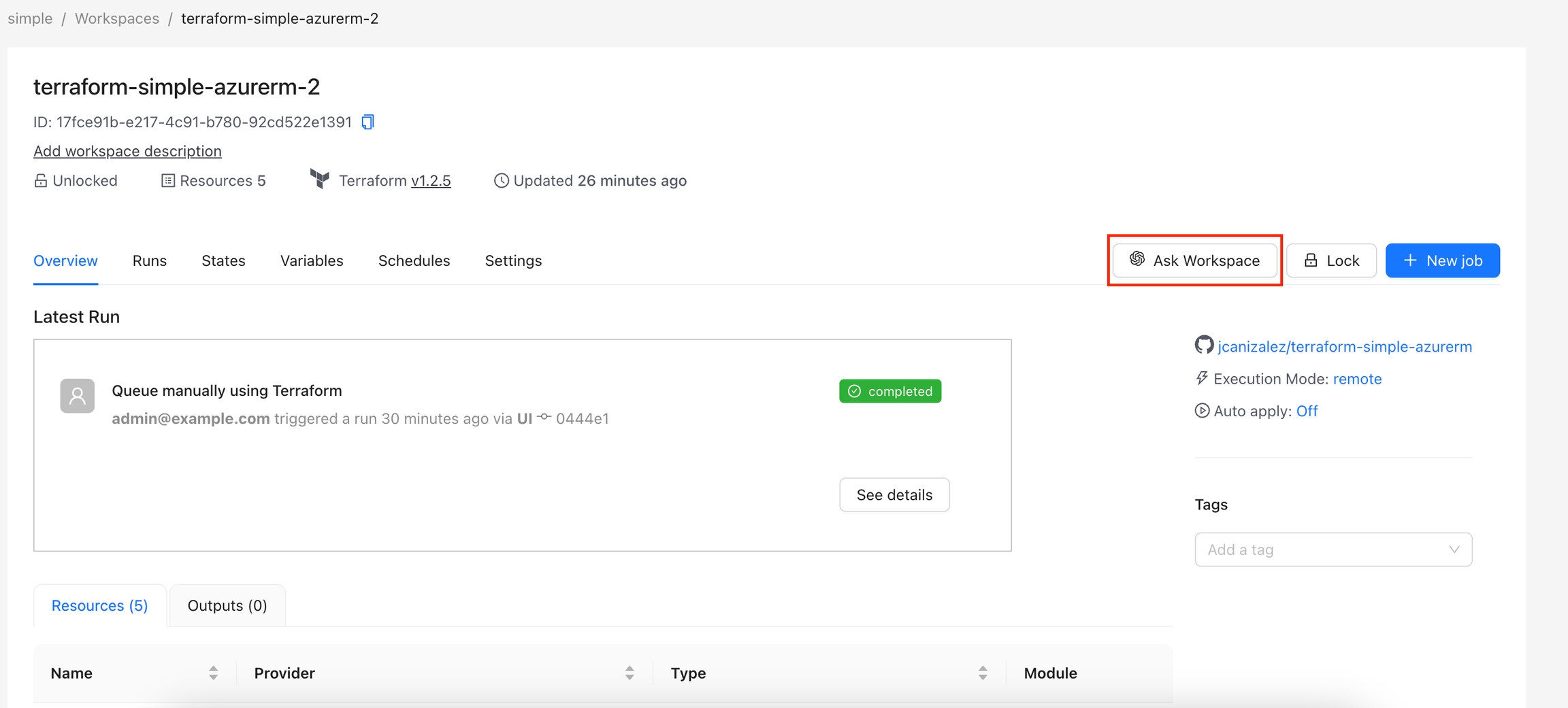

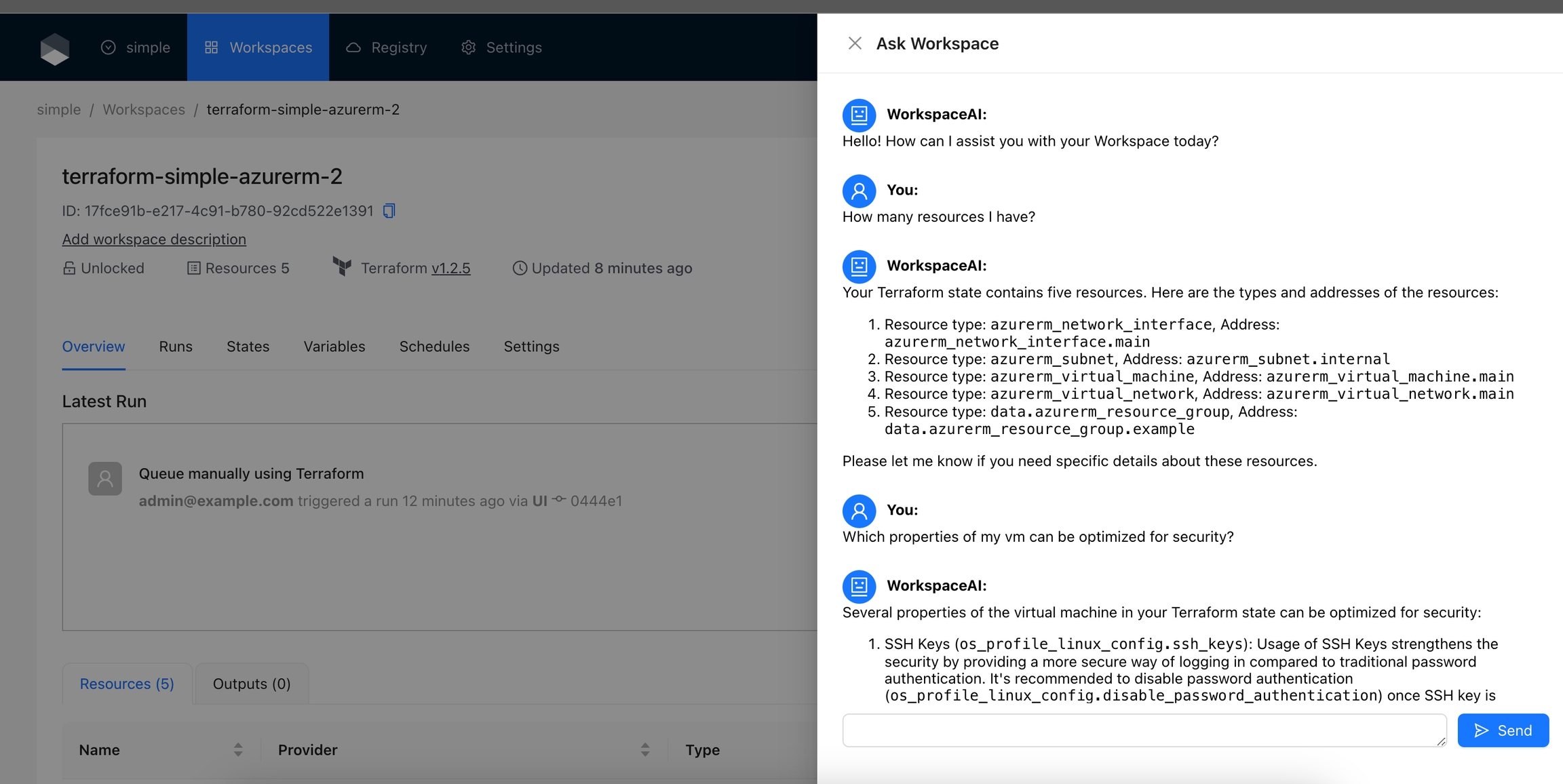

The Ask Workspace action allows users to interact with OpenAI's GPT-4o model to ask questions and get assistance related to their Terraform Workspace. This action provides a chat interface where users can ask questions about their Workspace's Terraform state and receive helpful responses from the AI.

By default, this Action is disabled and when enabled will appear for all resources. If you want to display this action only for certain resources, please check display criteria.

This action requires the following variables as Workspace Variables or Global Variables in the Workspace Organization:

OPENAI_API_KEY: The API key to authenticate requests to the OpenAI API. Please check this guide on how to get an OpenAI API key

Navigate to the Workspace and click on the Ask Workspace button.

Enter your questions and click the Send button or press the Enter key.

terrakubeUI :{

"100" : "<span>Some Content</span>"

}

flow:

- type: "terraformPlan"

step: 100

name: "Plan"

commands:

- runtime: "GROOVY"

priority: 300

after: true

script: |

import Context

def uiTemplate = '{"100":"<span>Simple Text</span>"}'

new Context("$terrakubeApi", "$terrakubeToken", "$jobId", "$workingDirectory").saveProperty("terrakubeUI", uiTemplate)

"Save context completed..."

- type: "terraformApply"

step: 200

name: "Apply"

The following will install Terrakube using "HTTP". A few features, like the Terraform registry and the Terraform remote state, won't be available because they require "HTTPS". To install with HTTPS support locally with minikube, check this

Terrakube can be installed in minikube as a sandbox environment to test, to use it please follow this:

If you find the following message "Snippet directives are disabled by the Ingress administrator", please update the ingress-nginx-controller configMap in namespace ingress-nginx, adding the following:

The environment has some users, groups and sample data so you can test it quickly.

Visit http://terrakube-ui.minikube.net and login using [email protected] with password admin

You can login using the following user and passwords

The sample user and groups information can be updated in the kubernetes secret:

terrakube-openldap-secrets

Minikube will use a very simple OpenLDAP. Make sure to change this when using a real Kubernetes environment by setting the option security.useOpenLDAP=false in your helm deployment.

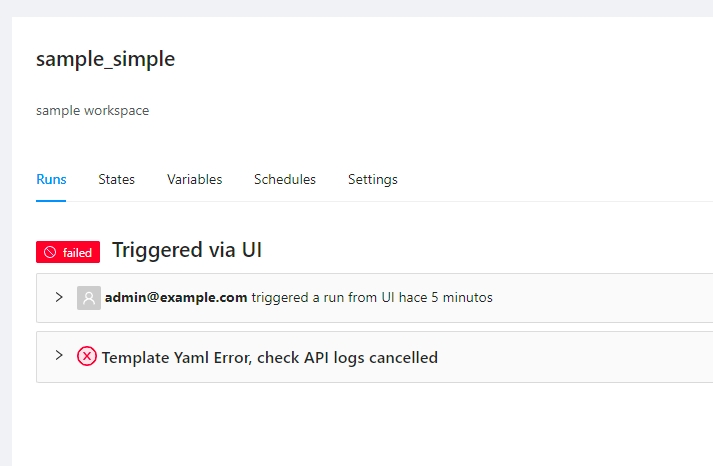

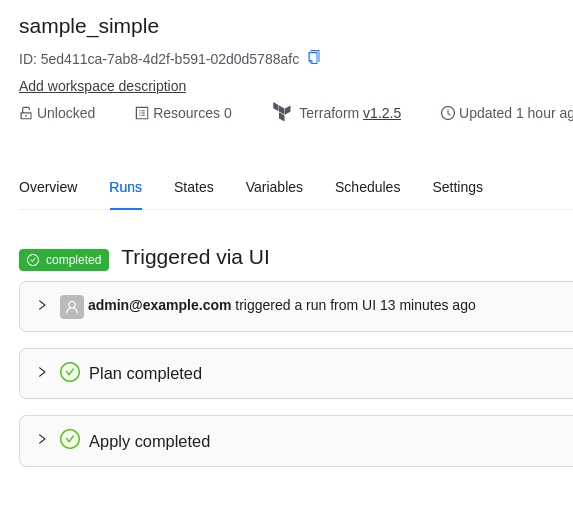

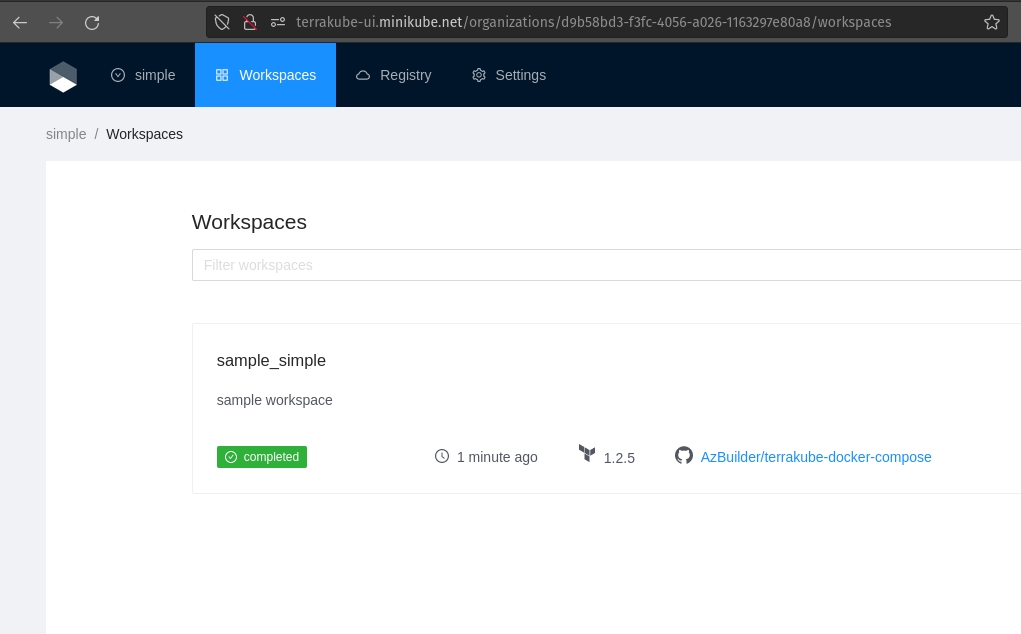

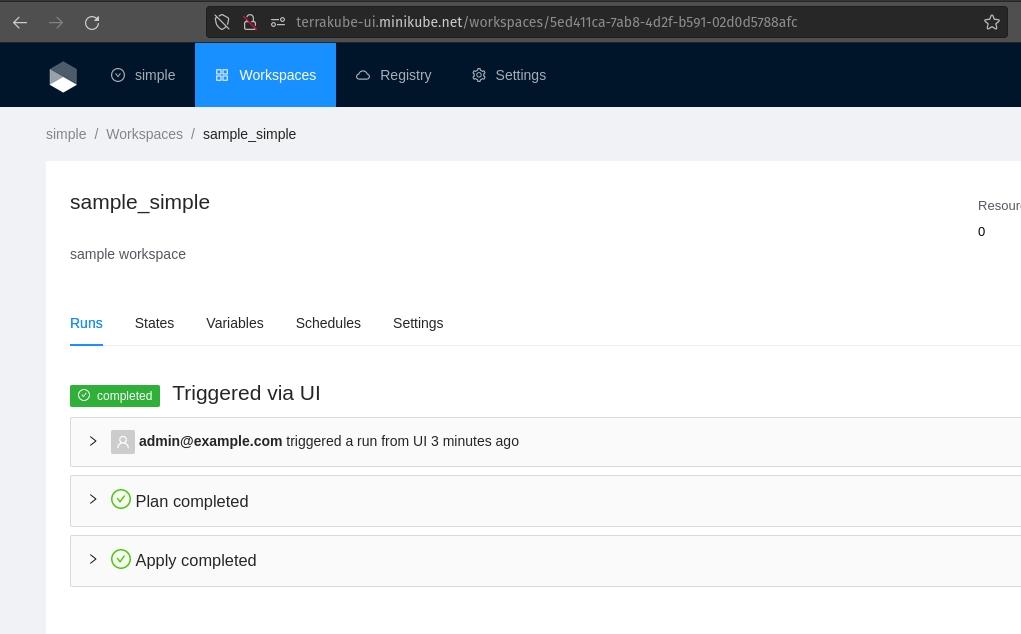

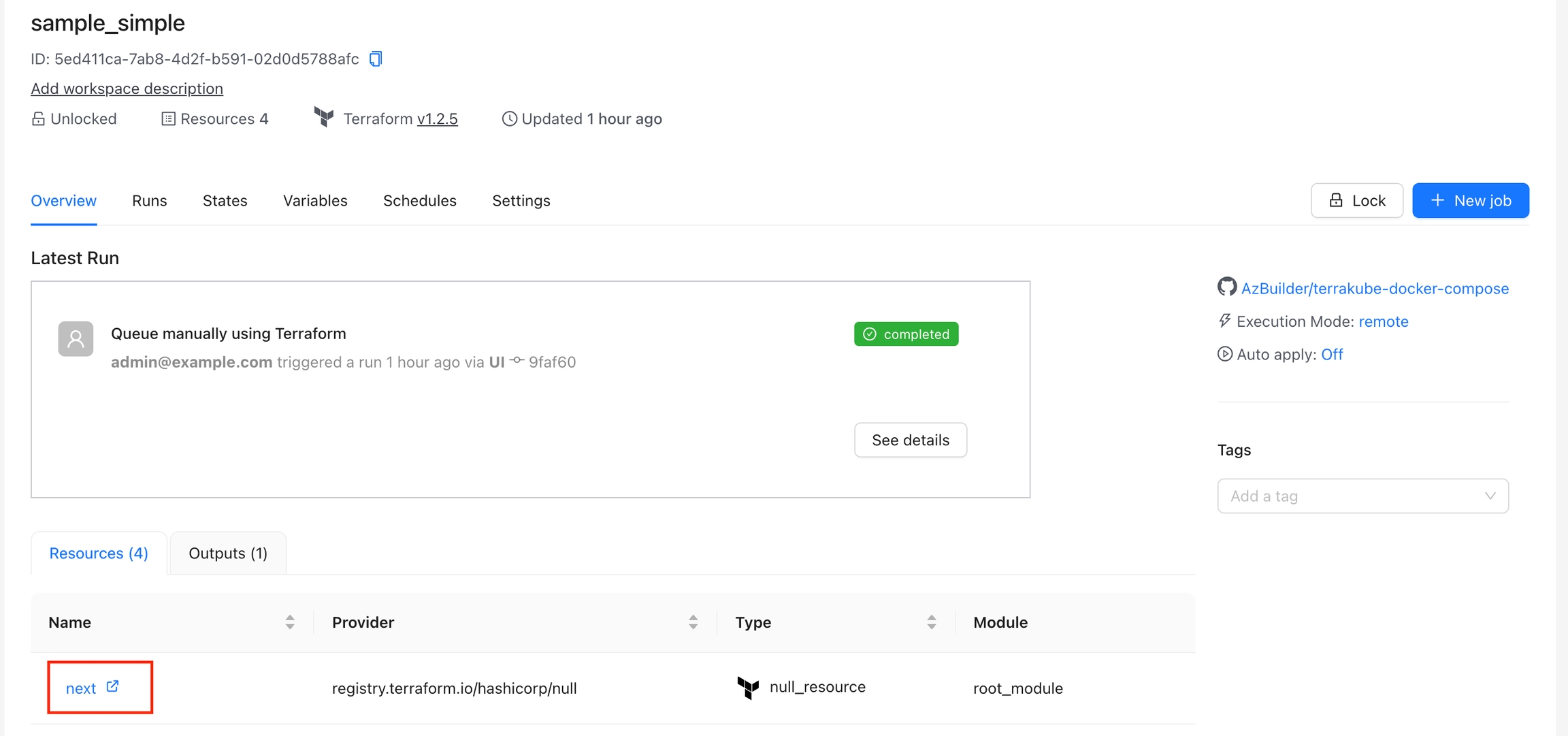

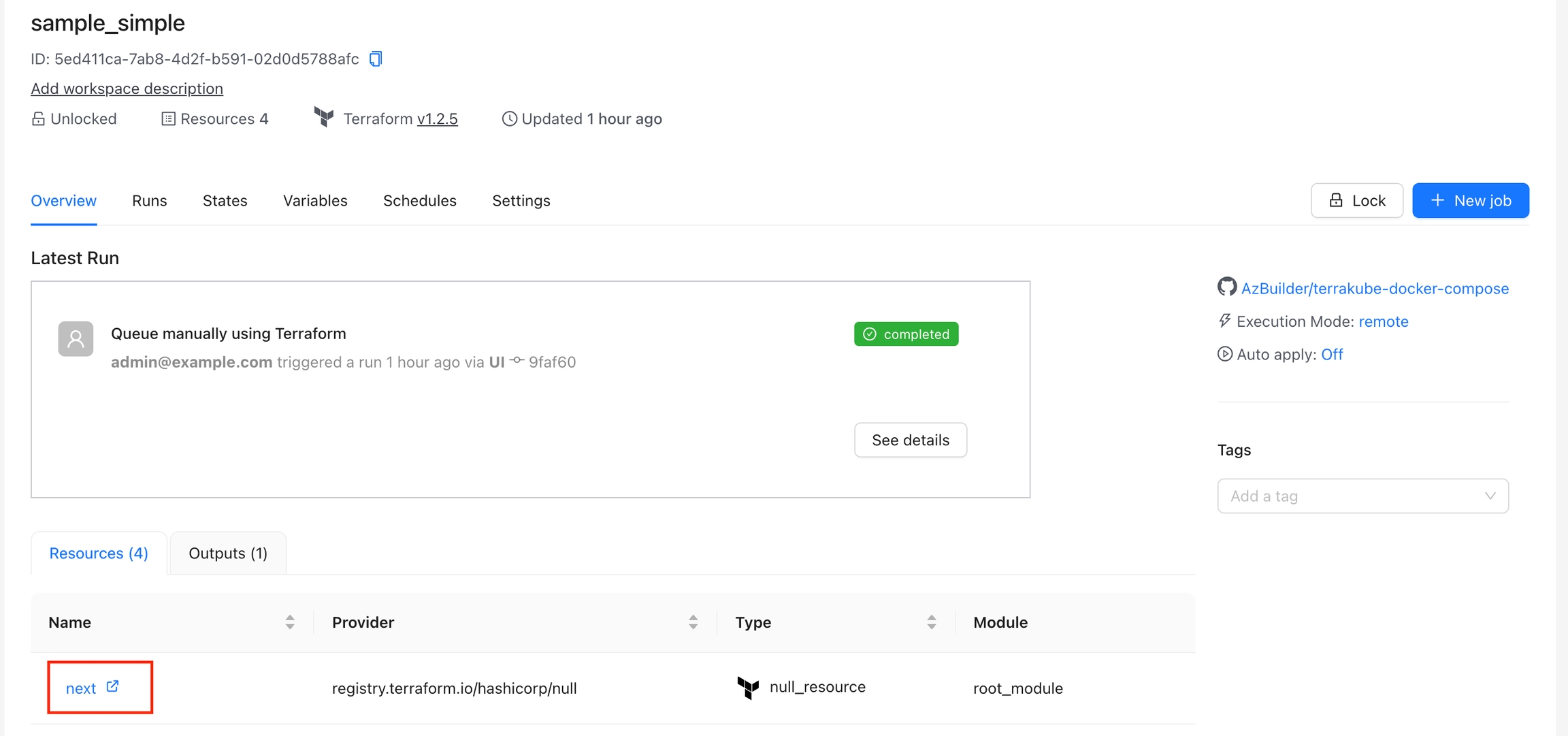

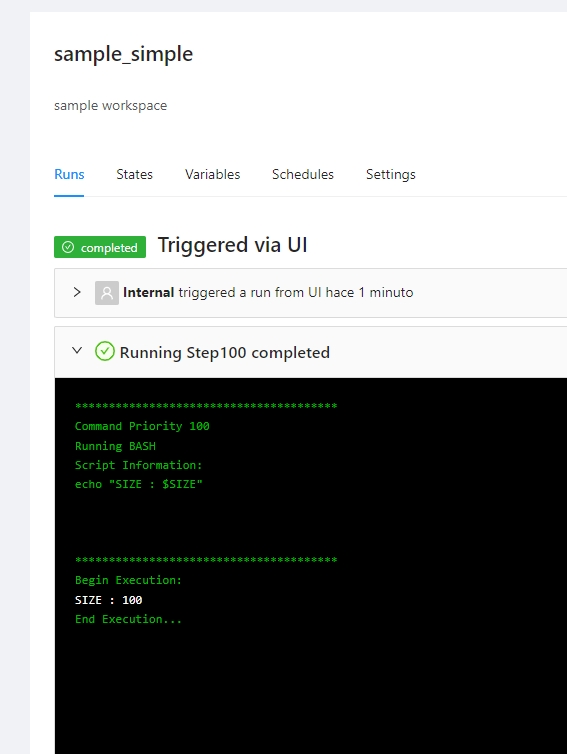

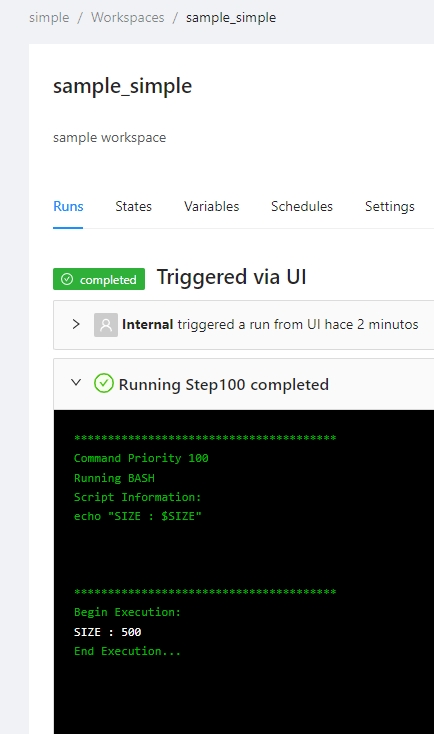

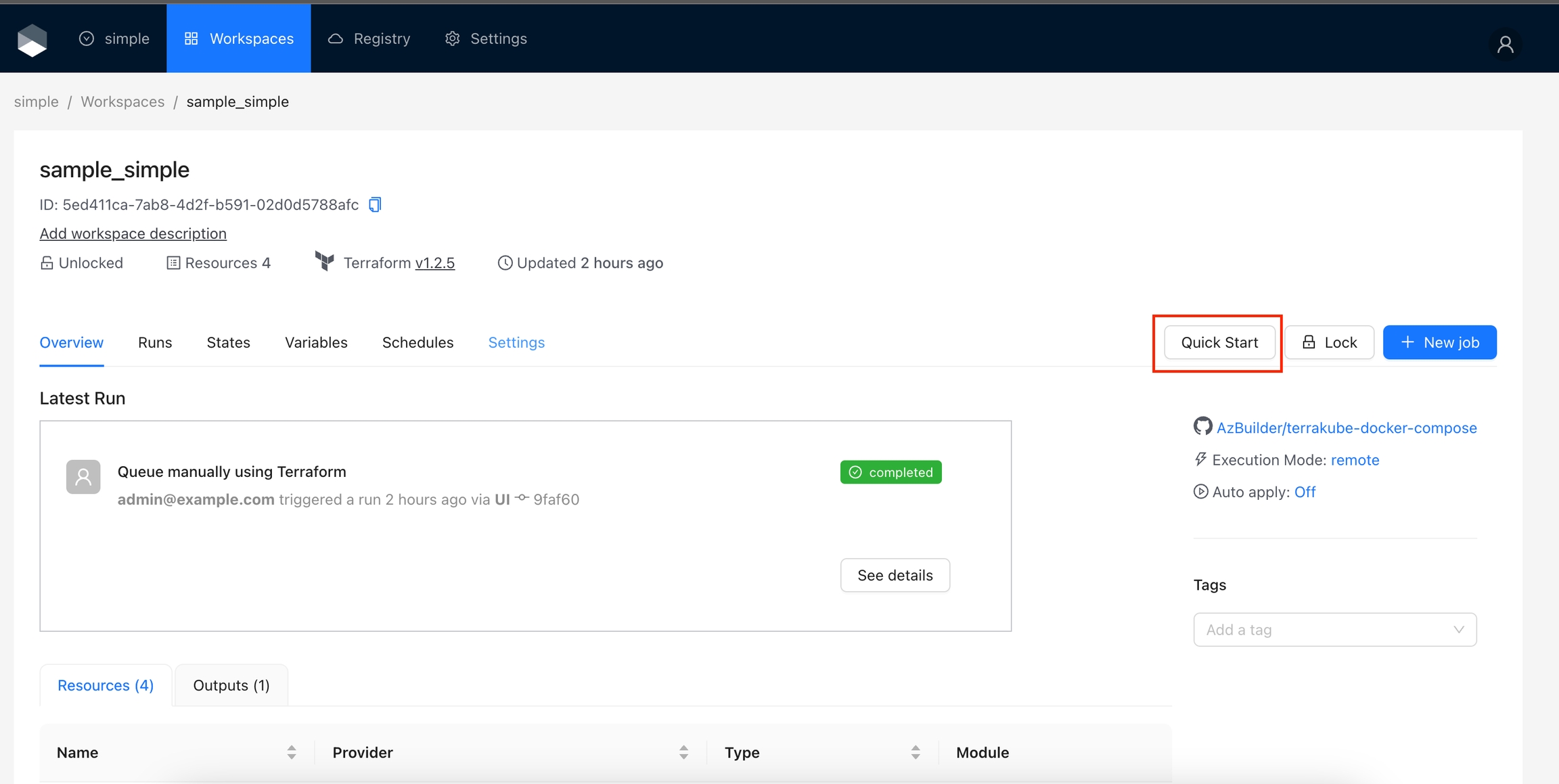

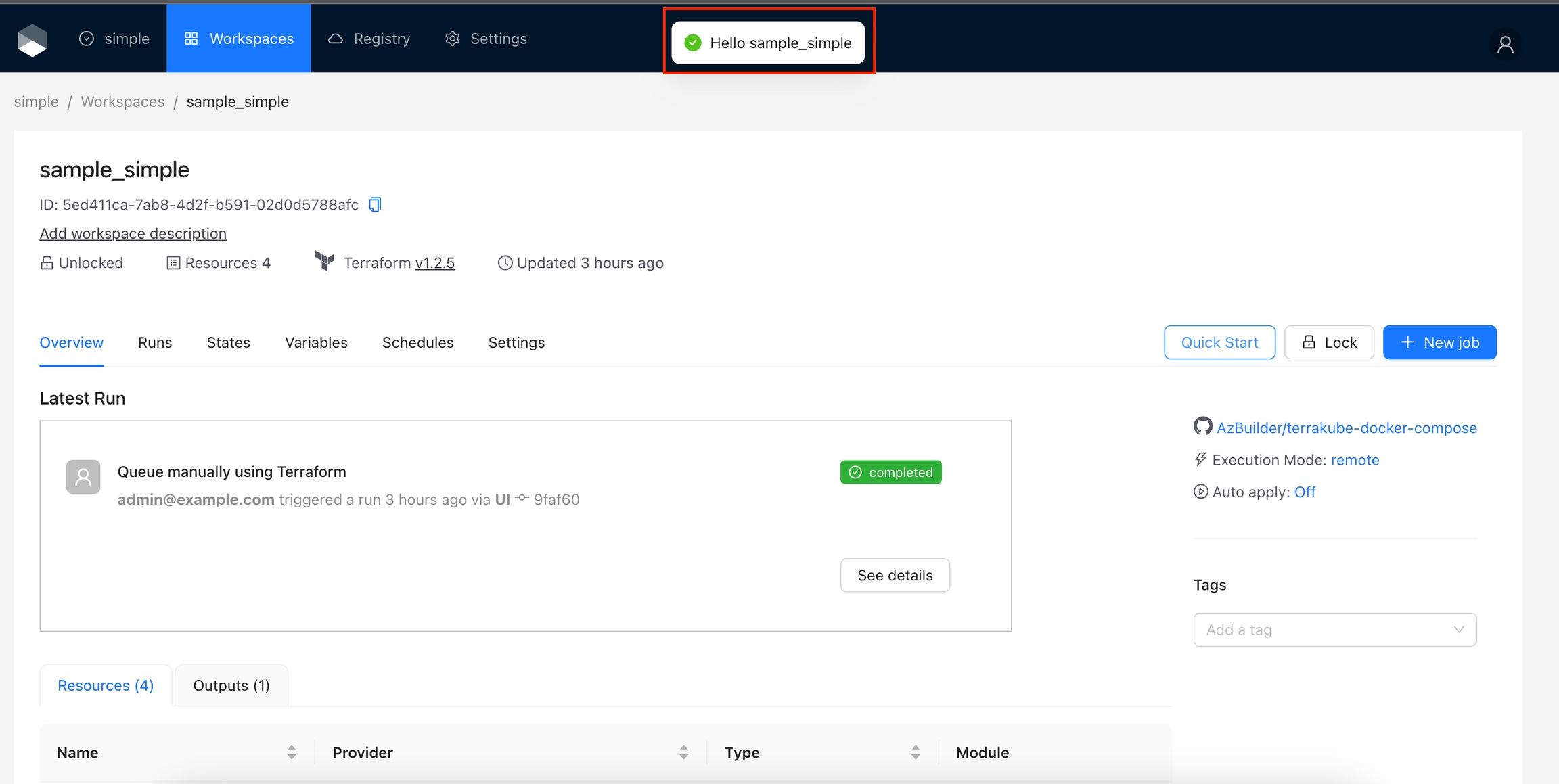

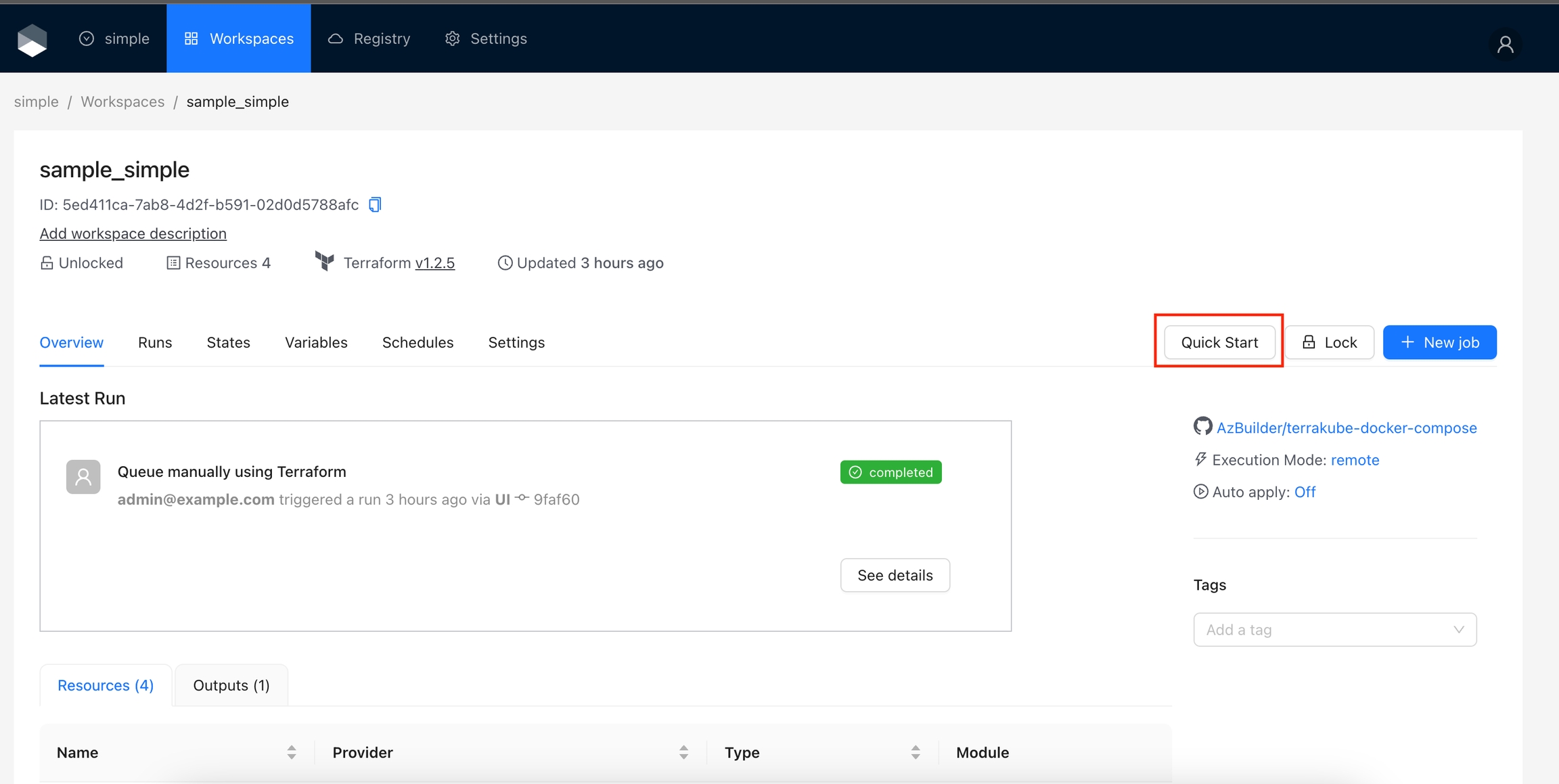

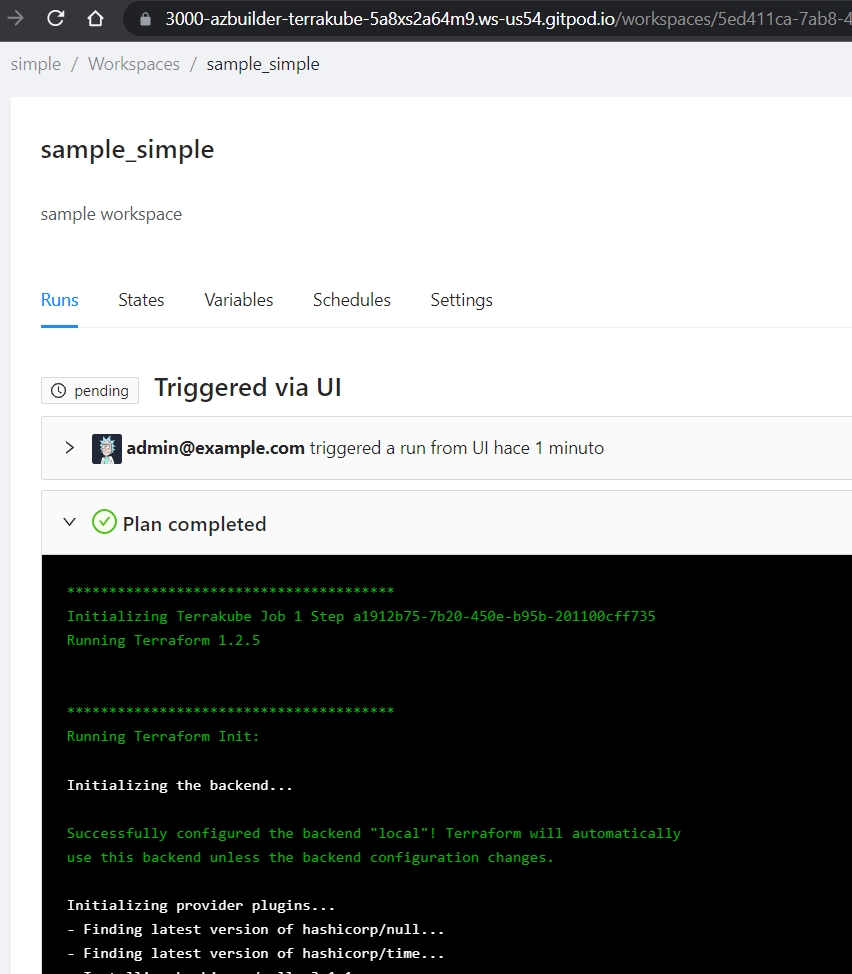

Select the "simple" organization and the "sample_simple" workspace and run a job.

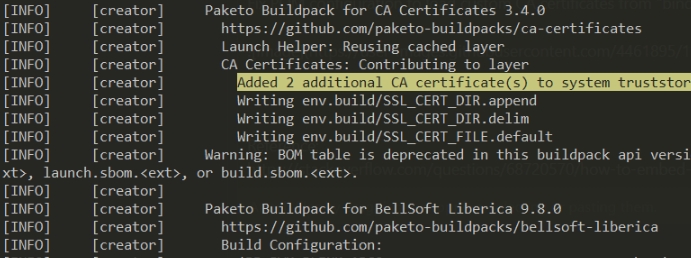

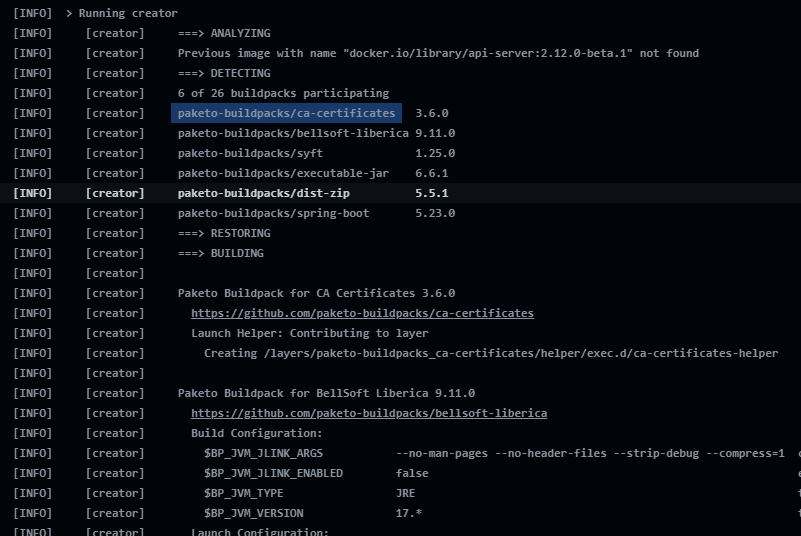

Terrakube components (api, registry and executor) use buildpacks to create the docker images

When using buildpack to add a custom CA certificate at runtime you need to do the following:

Provide the following environment variable to the container:

Inside the path there is a folder called "ca-certificates"

We need to mount some information to that path

Inside this folder we should put our custom PEM CA certs and one additional file called type

The content of the file type is just the text "ca-certificates"

Finally, your helm terrakube.yaml should look something like this, because we are mounting our CA certs and the file called type in the following path: "/mnt/platform/bindings/ca-certificates"

When mounting the volume with the CA secrets, don't forget to add the key "type"; the content of the file is already defined inside the helm chart

Checking the Terrakube component, two additional CA certs are added inside the system truststore

Additional information about buildpacks can be found in this link:

Terrakube allows adding the certs when building the application. To use this option, use the following:

The certs will be added at runtime as the following image.

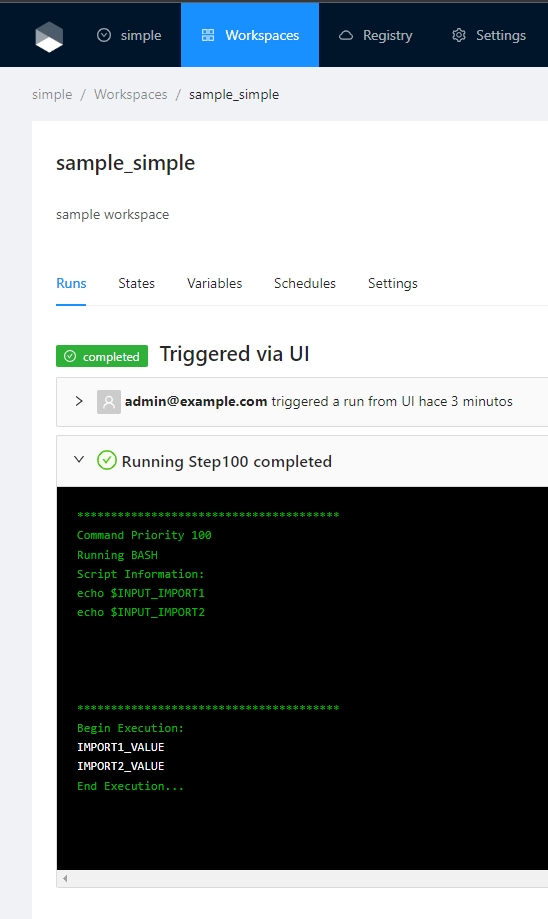

In some special cases it is necessary to filter the global variables used inside the job execution. If "inputsEnv" or "inputsTerraform" is not defined, all global variables will be imported to the job context.

$IMPORT1 and $IMPORT2 will be added to the job context as env variable INPUT_IMPORT1 and INPUT_IMPORT2
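The filtering behaviour can be sketched as follows. This is a hypothetical model rather than Terrakube's implementation: `buildJobEnv` and its data shapes are assumptions, with `inputsEnv` represented as a map from the target name to a `$NAME` reference to a global variable.

```javascript
// Hypothetical sketch of the filtering rule; names and data shapes are assumptions.
function buildJobEnv(globalVars, inputsEnv) {
  // Without an inputsEnv mapping, every global variable is imported.
  if (inputsEnv === undefined) return { ...globalVars };
  const env = {};
  for (const [target, ref] of Object.entries(inputsEnv)) {
    const source = ref.replace(/^\$/, ""); // "$IMPORT1" -> "IMPORT1"
    // Only the referenced global variables reach the job, under the target name.
    if (source in globalVars) env[target] = globalVars[source];
  }
  return env;
}

const globals = { IMPORT1: "one", IMPORT2: "two", OTHER: "not imported" };
console.log(buildJobEnv(globals, {
  INPUT_IMPORT1: "$IMPORT1",
  INPUT_IMPORT2: "$IMPORT2",
}));
```

With the mapping in place, `OTHER` never reaches the job; calling `buildJobEnv(globals)` without a mapping returns all three variables.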

workspace env variables > importComands env variables > Flow env variables

workspace terraform variables > importComands terraform variables > Flow terraform variables

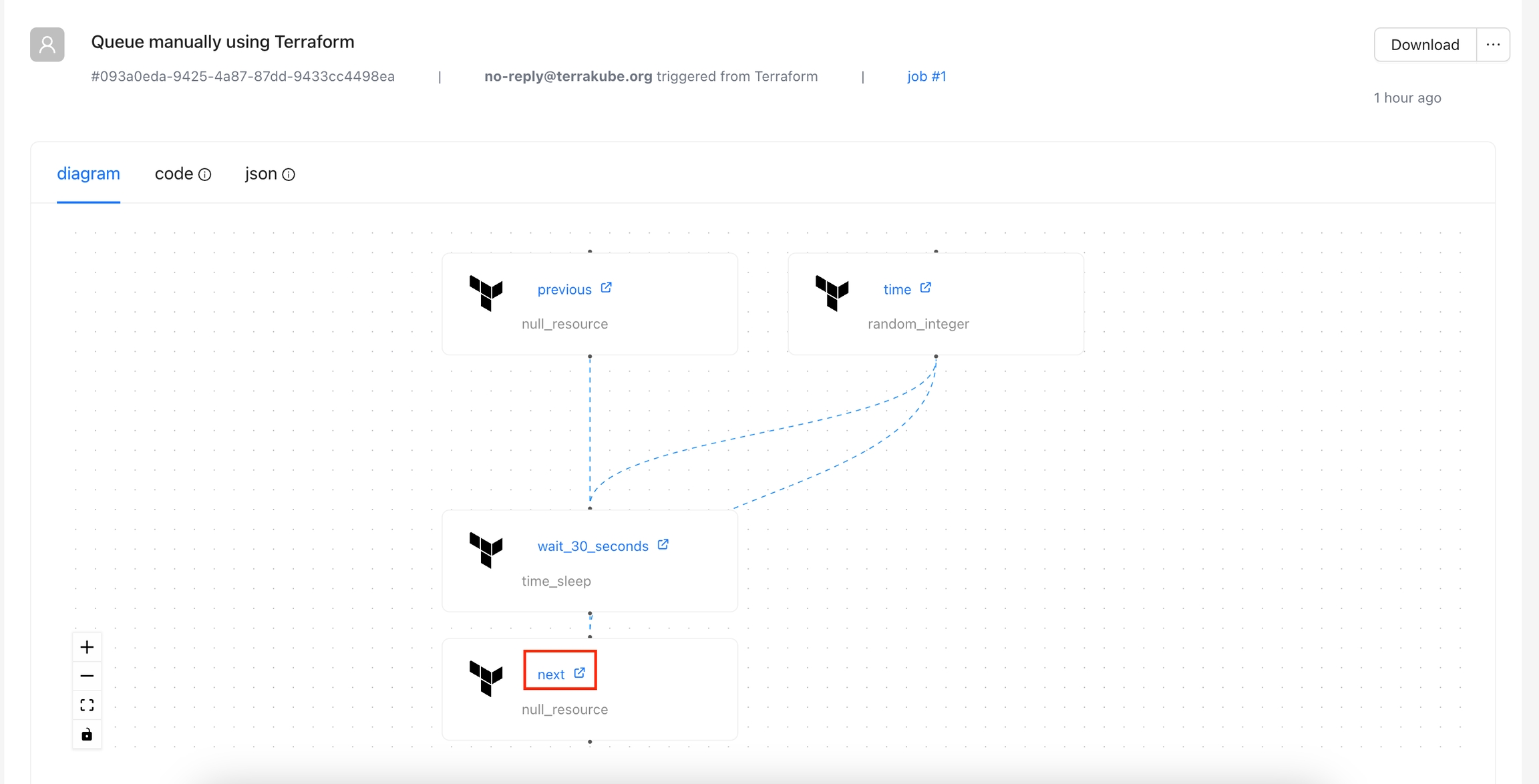

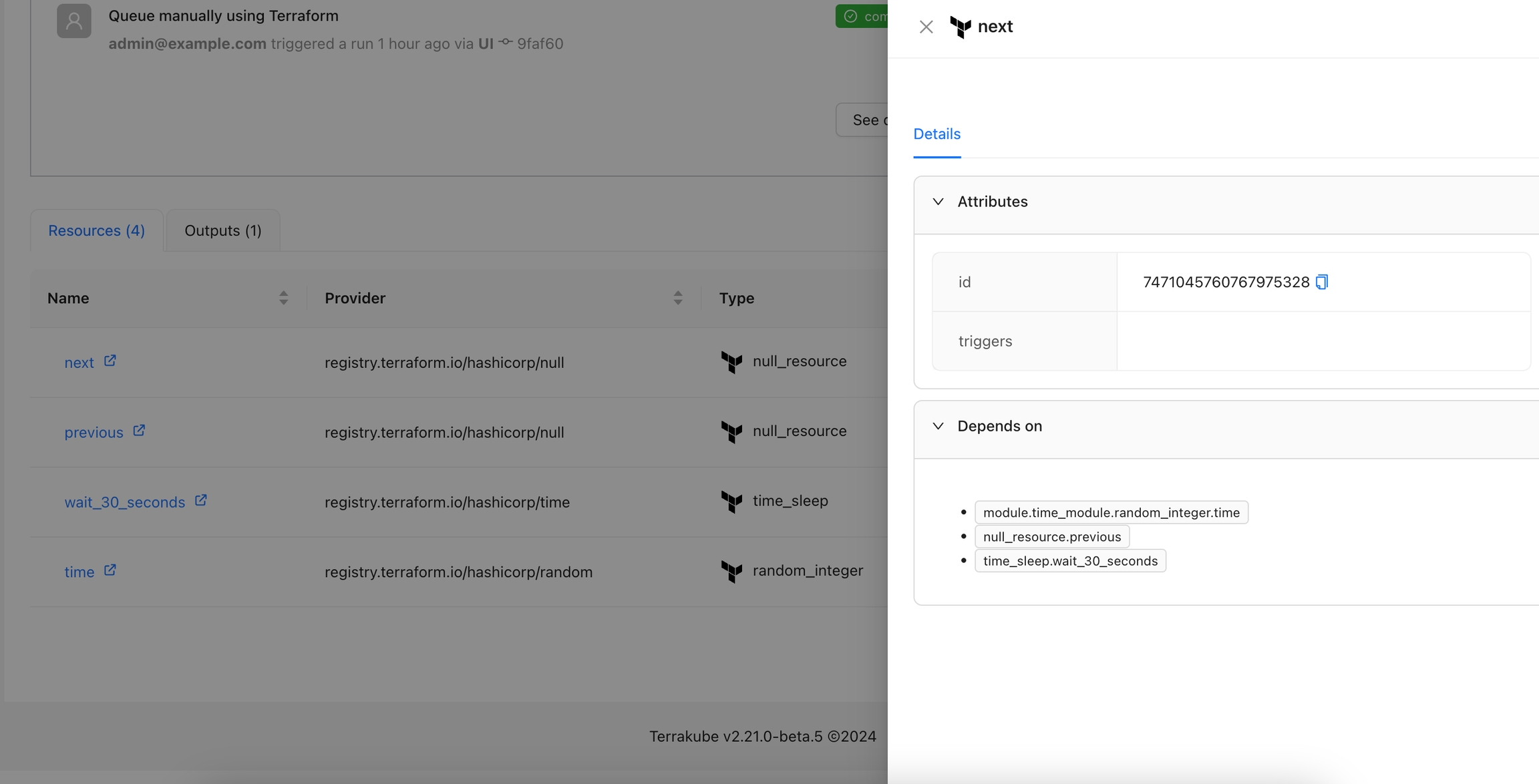

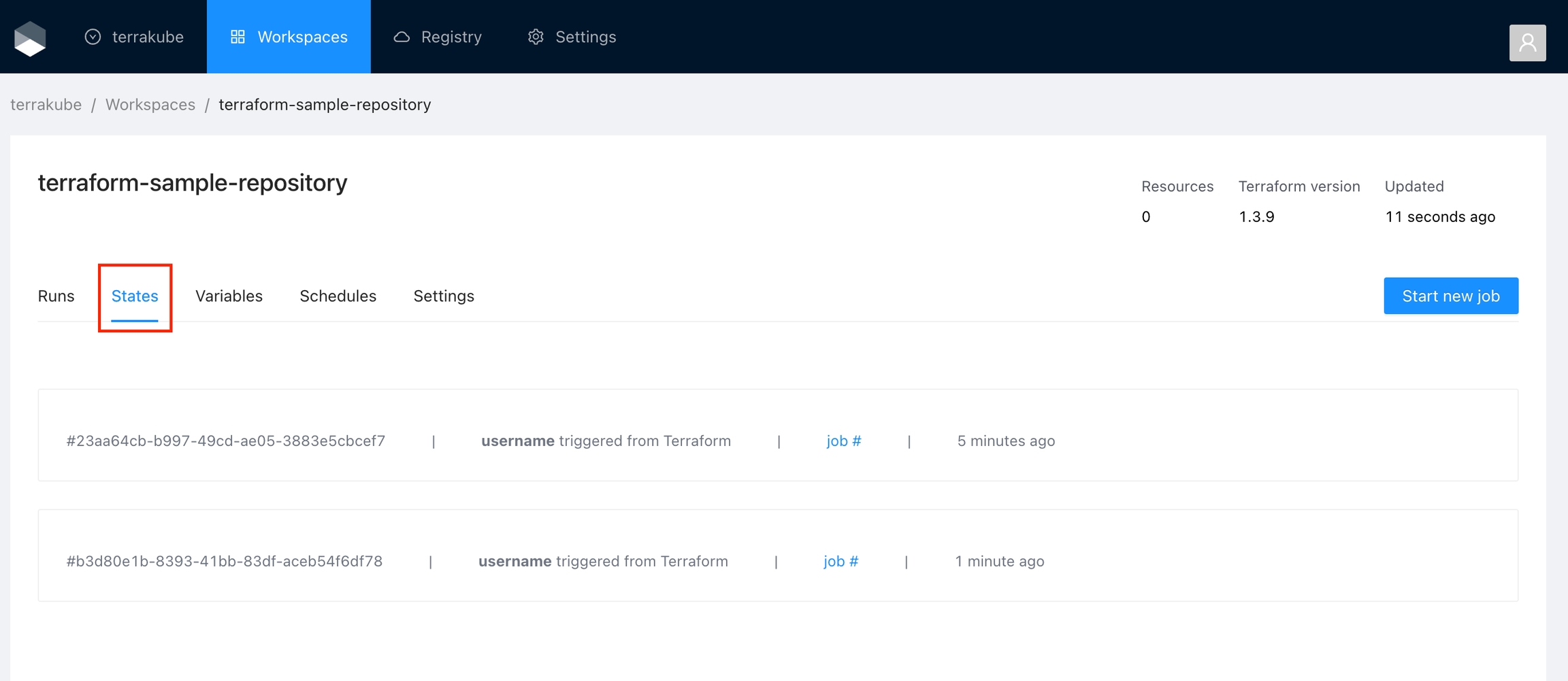

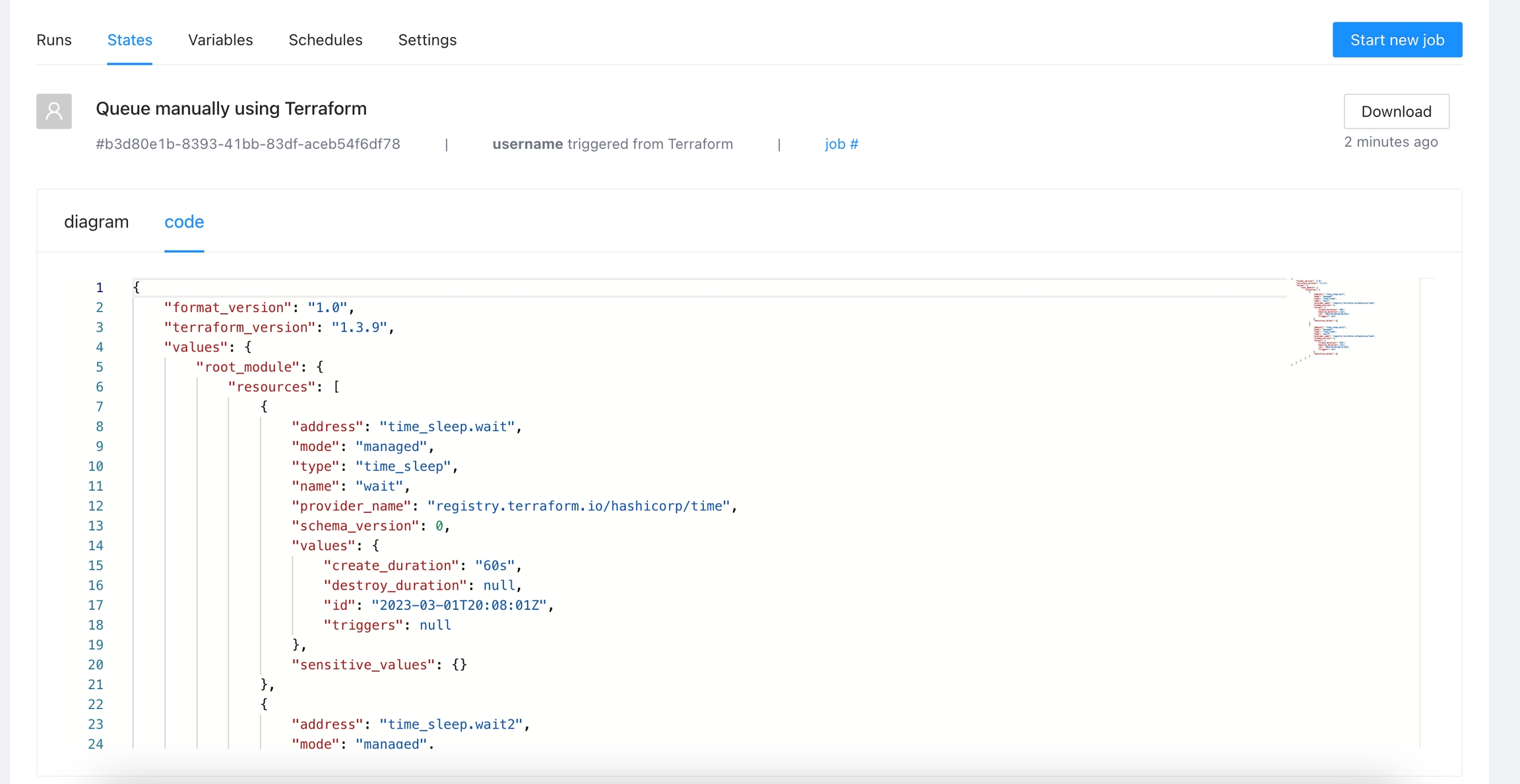

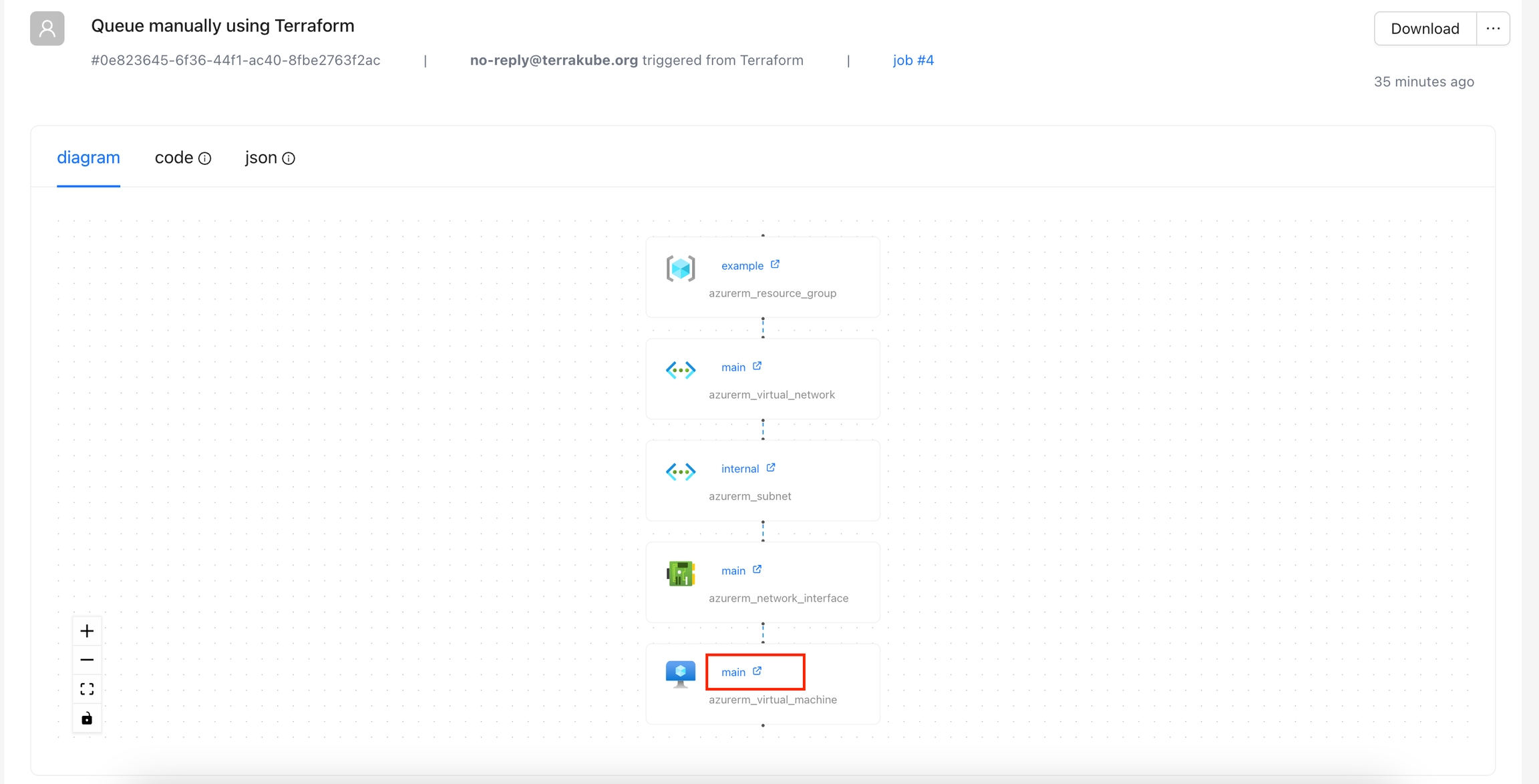

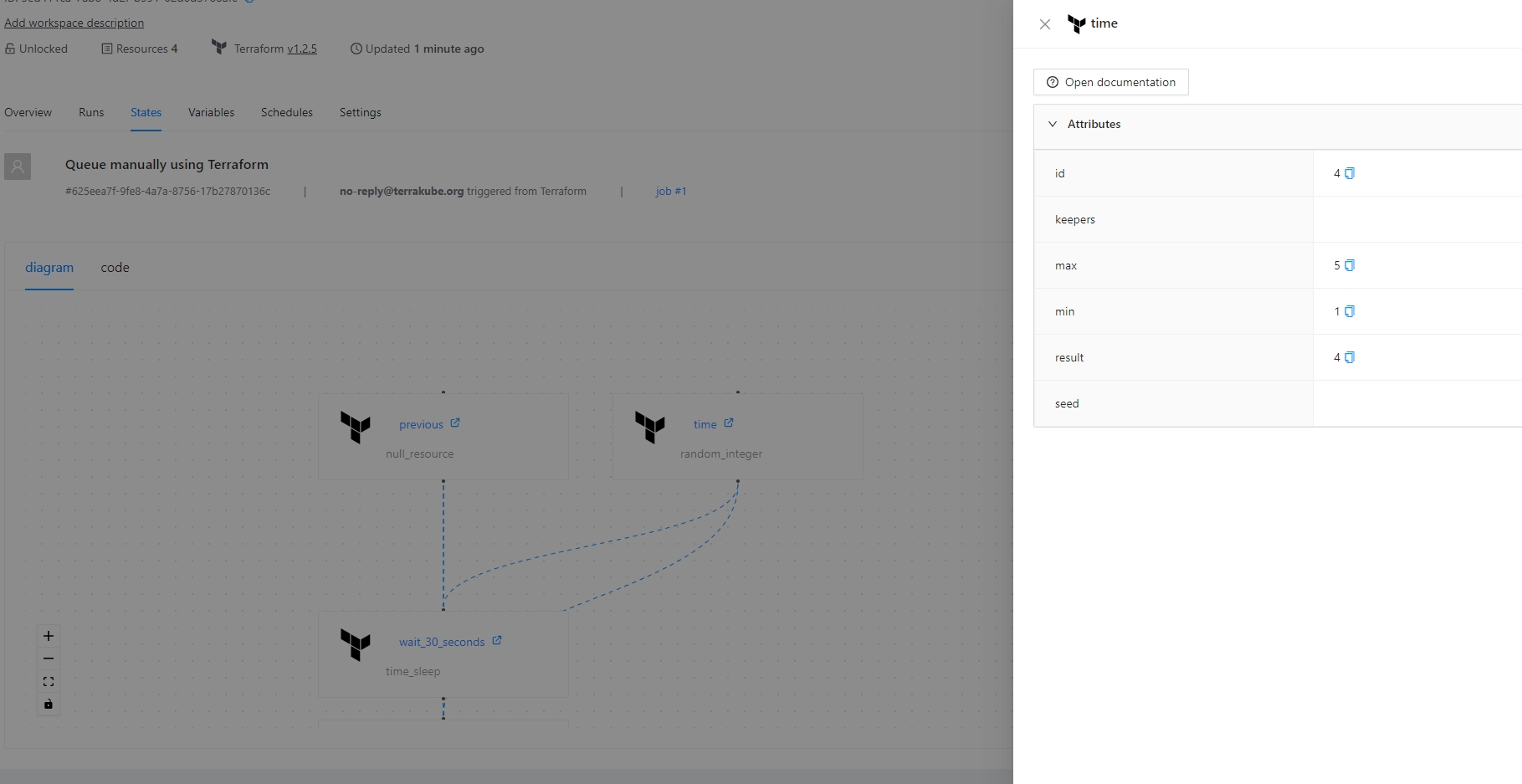

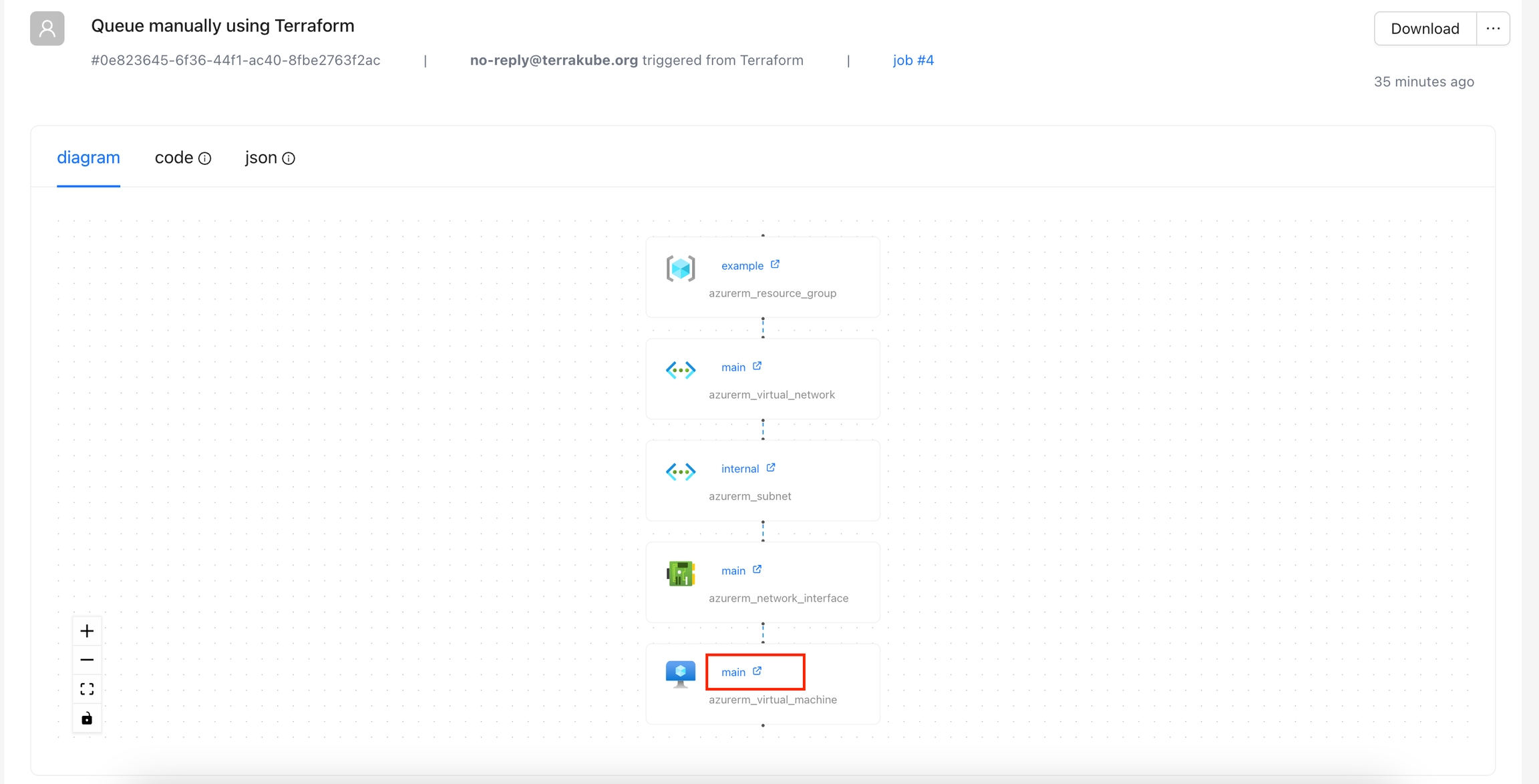

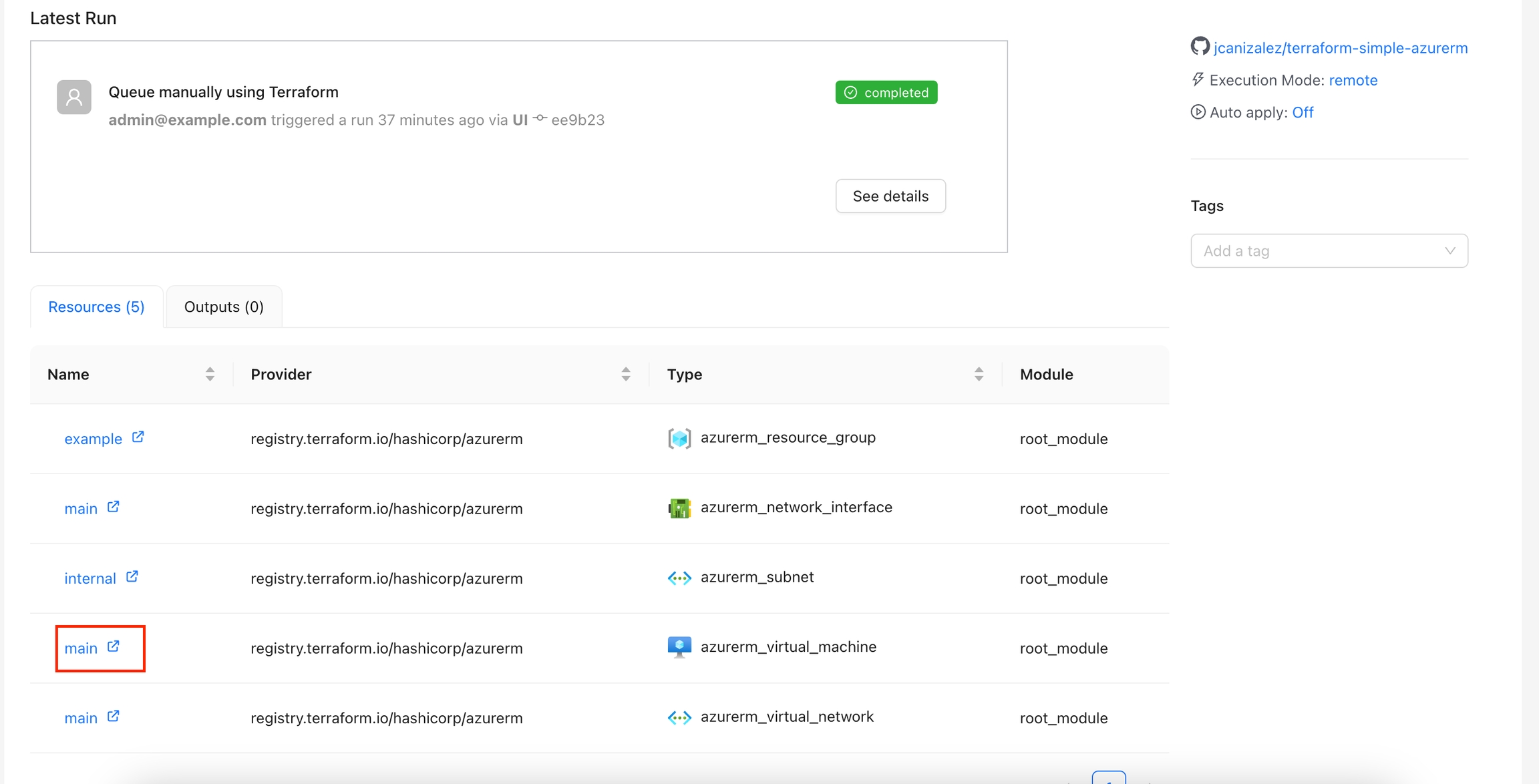

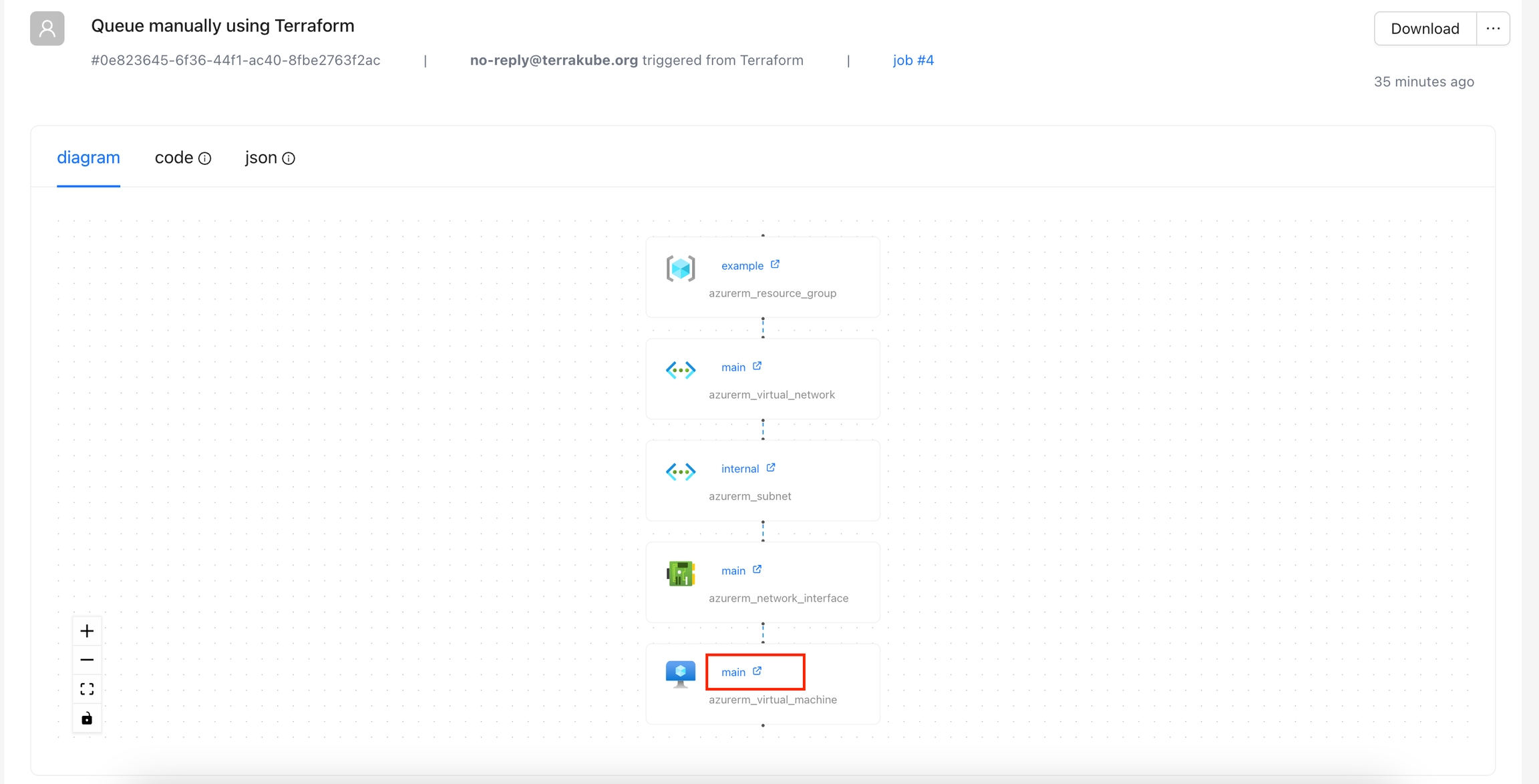

Each Terrakube workspace has its own separate state data, used for jobs within that workspace. In Terrakube you can see the Terraform state in the standard JSON format provided by the Terraform CLI, or you can see a Visual State, a diagram created by Terrakube to represent the resources inside the JSON state.

Go to the workspace and click the States tab and then click in the specific state you want to see.

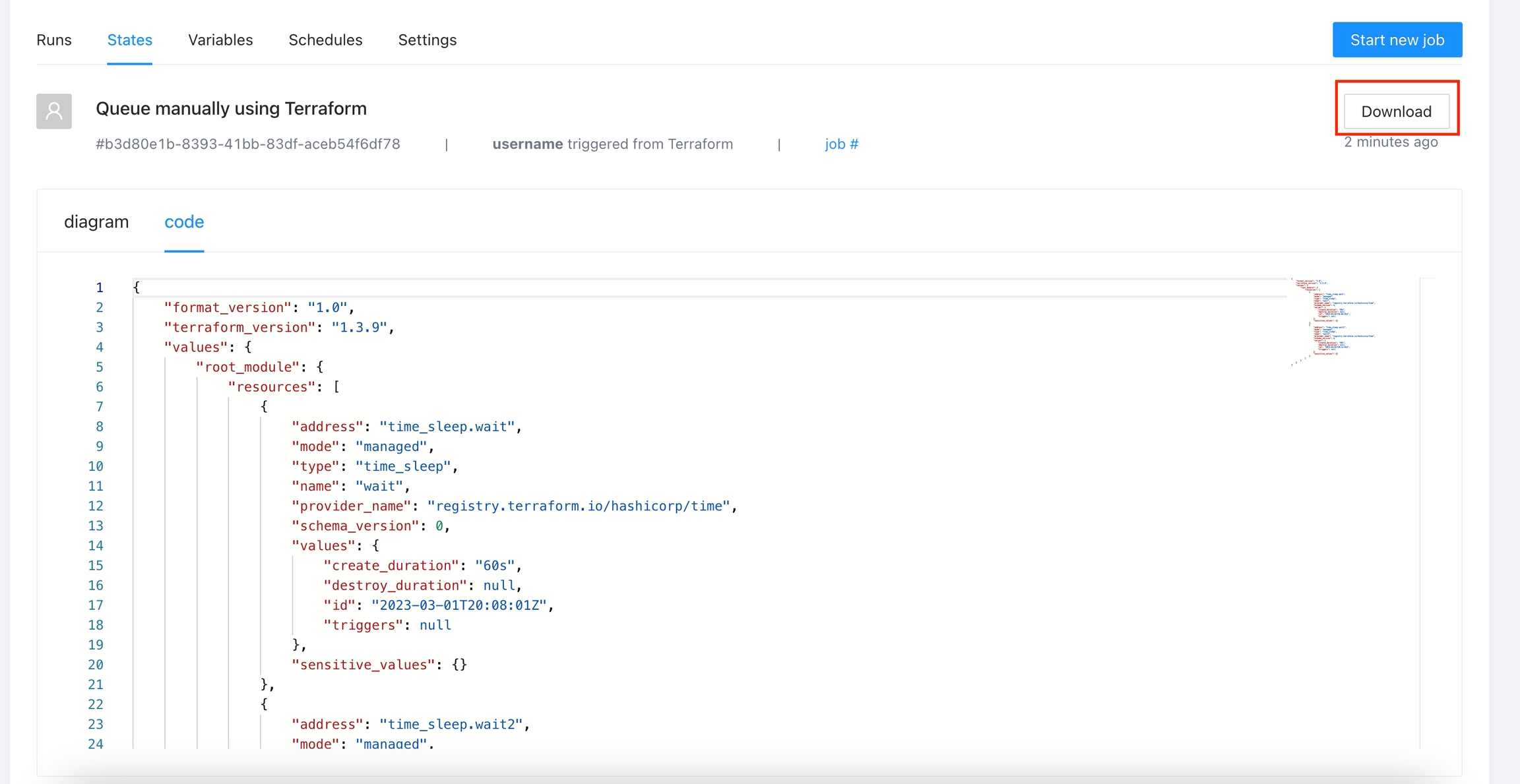

You will see the state in JSON format

You can click the Download button to get a copy of the JSON state file

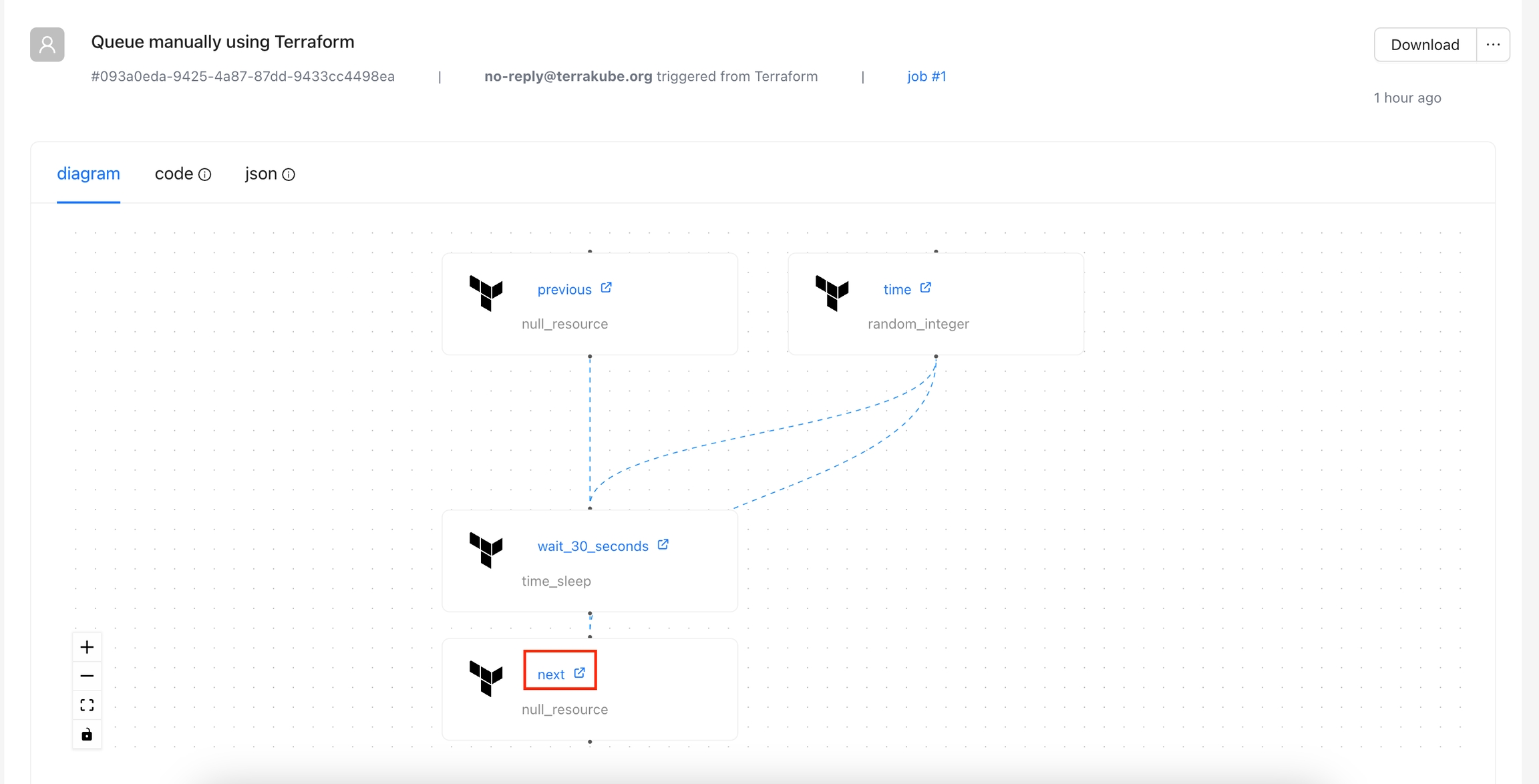

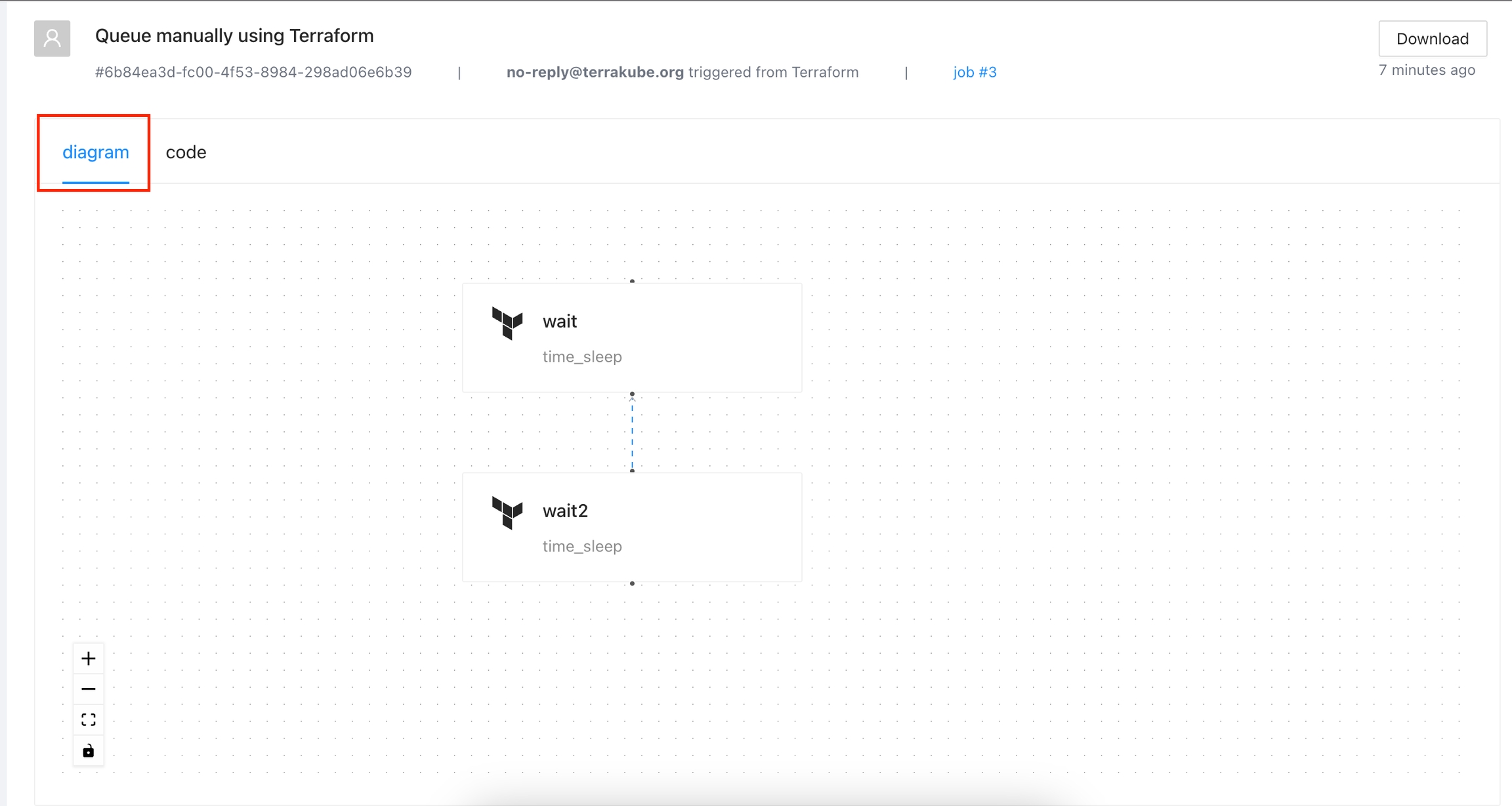

Inside the states page, click the diagram tab and you will see the visual state for your terraform state.

The visual state will also show some information if you click each element like the following:

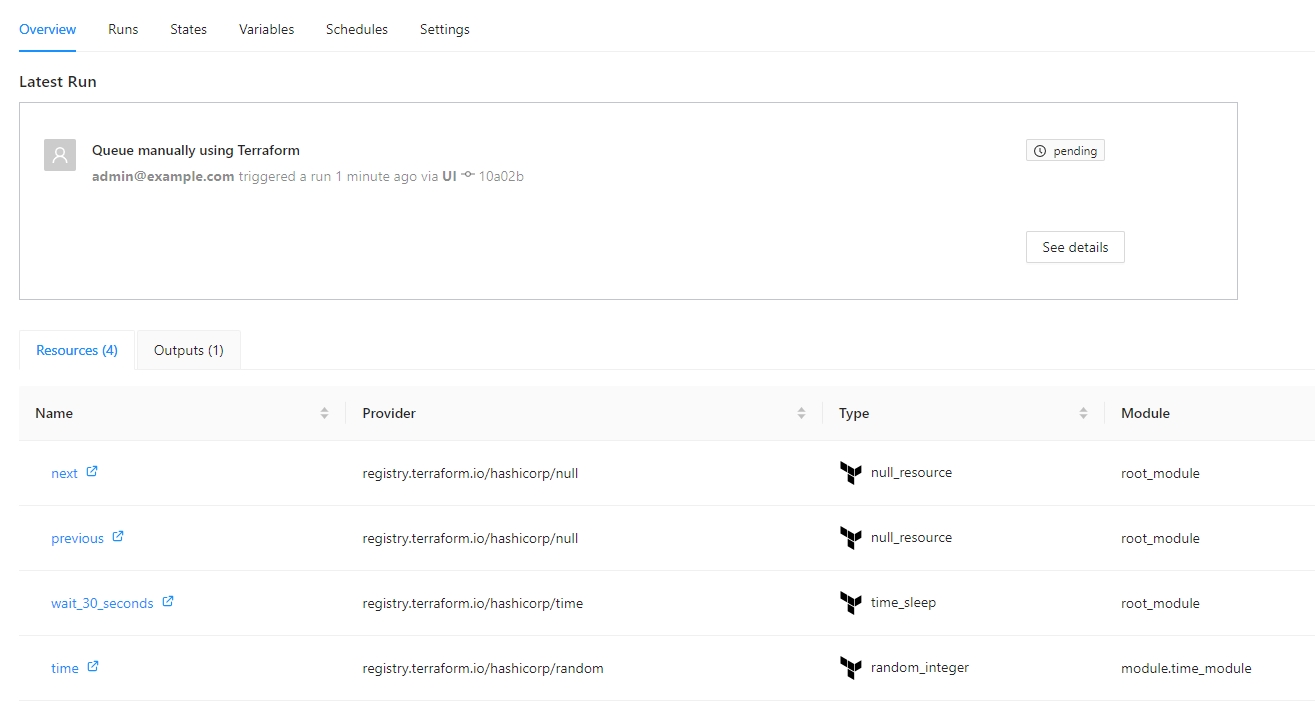

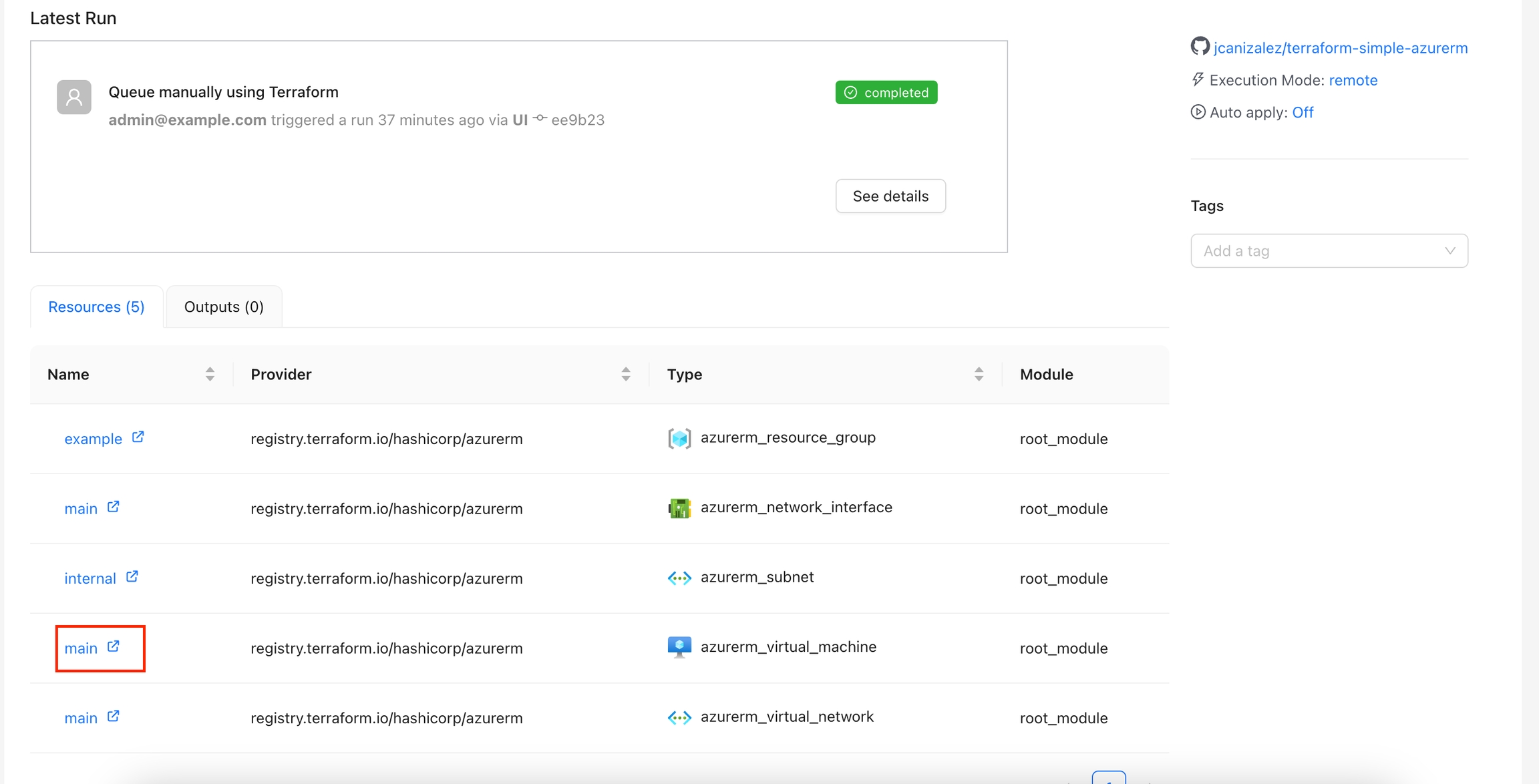

All the resources can be shown in the overview page inside the workspace:

If you click the resources you can check the information about each resource like the following

The dynamic provider credential setup in GCP can be done with the Terraform code available in the following link:

https://github.com/AzBuilder/terrakube/tree/main/dynamic-credential-setup/gcp

The code will also create a sample workspace with all the required environment variables that can be used to test the functionality using the CLI driven workflow.

Make sure to mount your public and private key to the API container as explained

Validate the following terrakube api endpoints are working:

Set terraform variables using: "variables.auto.tfvars"

Run Terraform apply to create all the federated credential setup in GCP and a sample workspace in terrakube for testing

To test the following terraform code can be used:

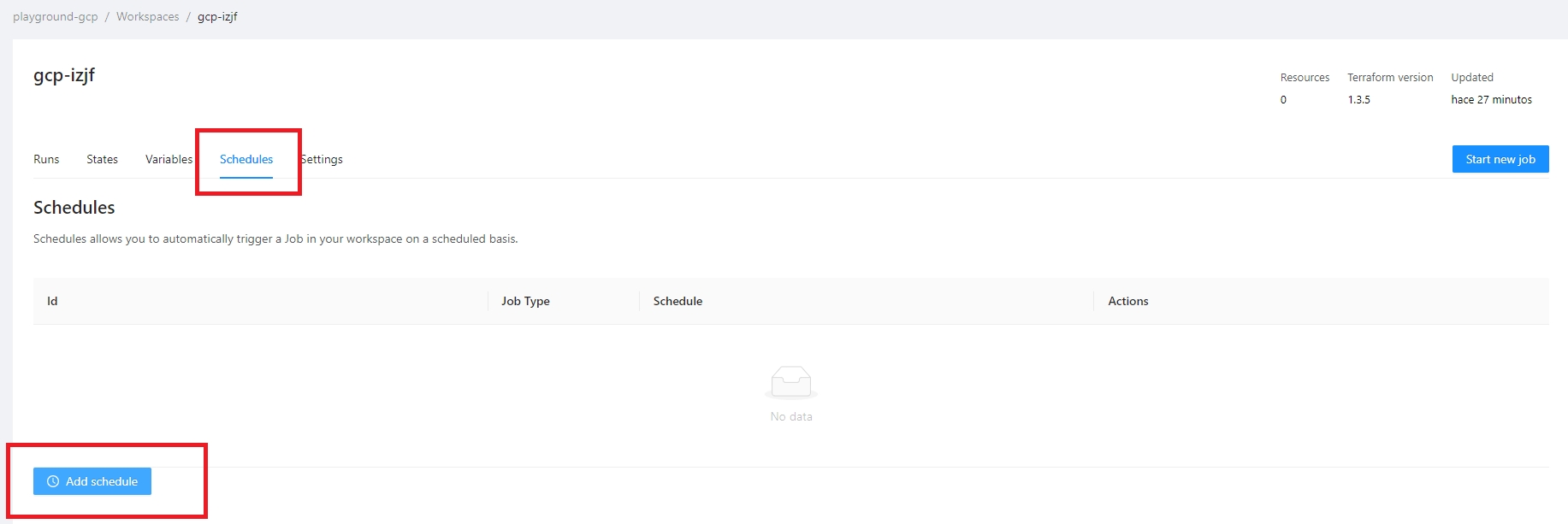

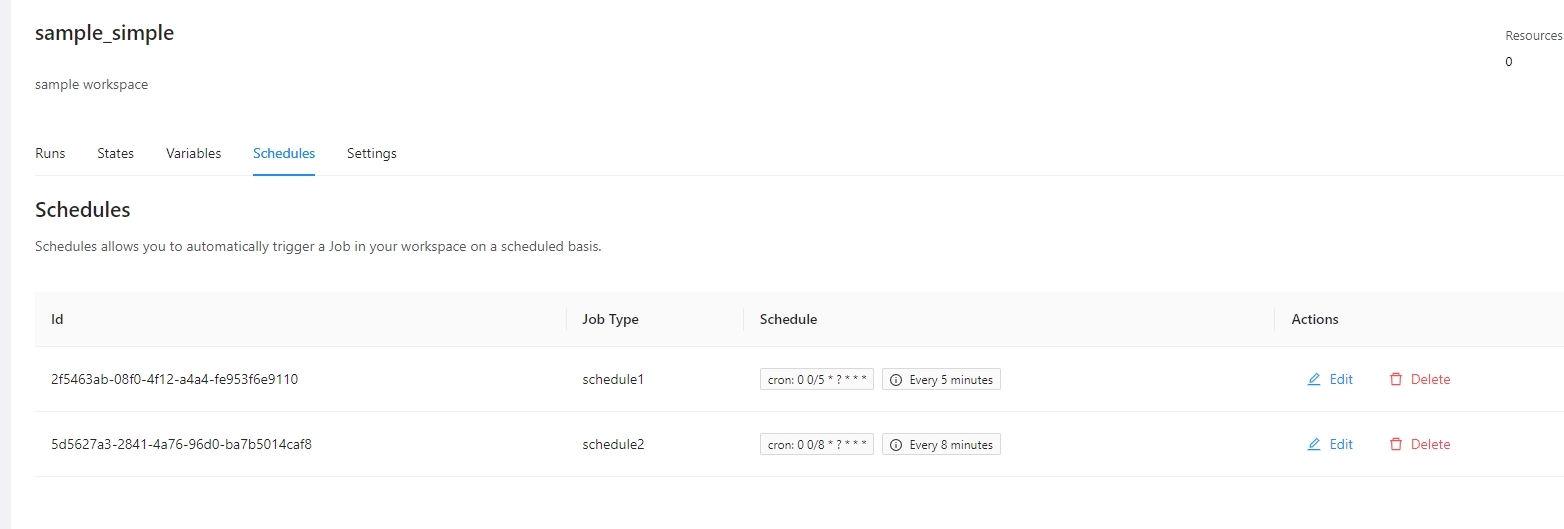

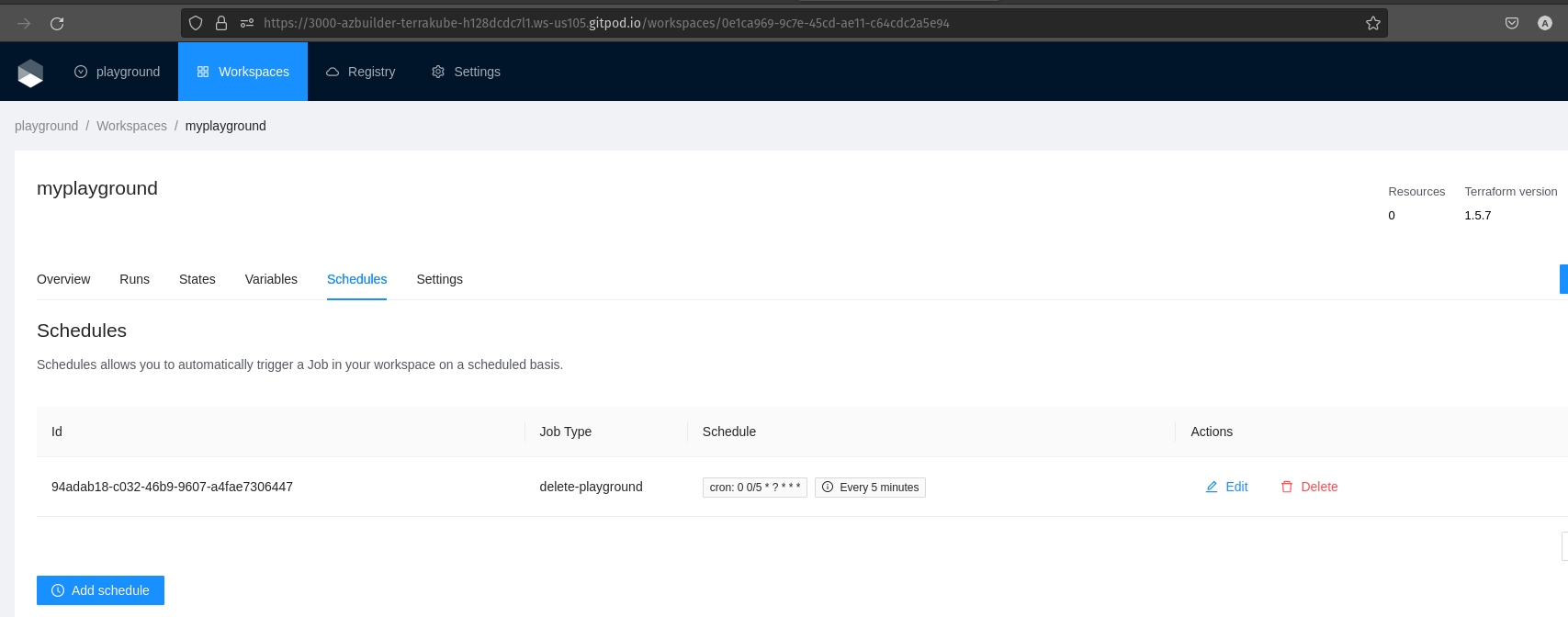

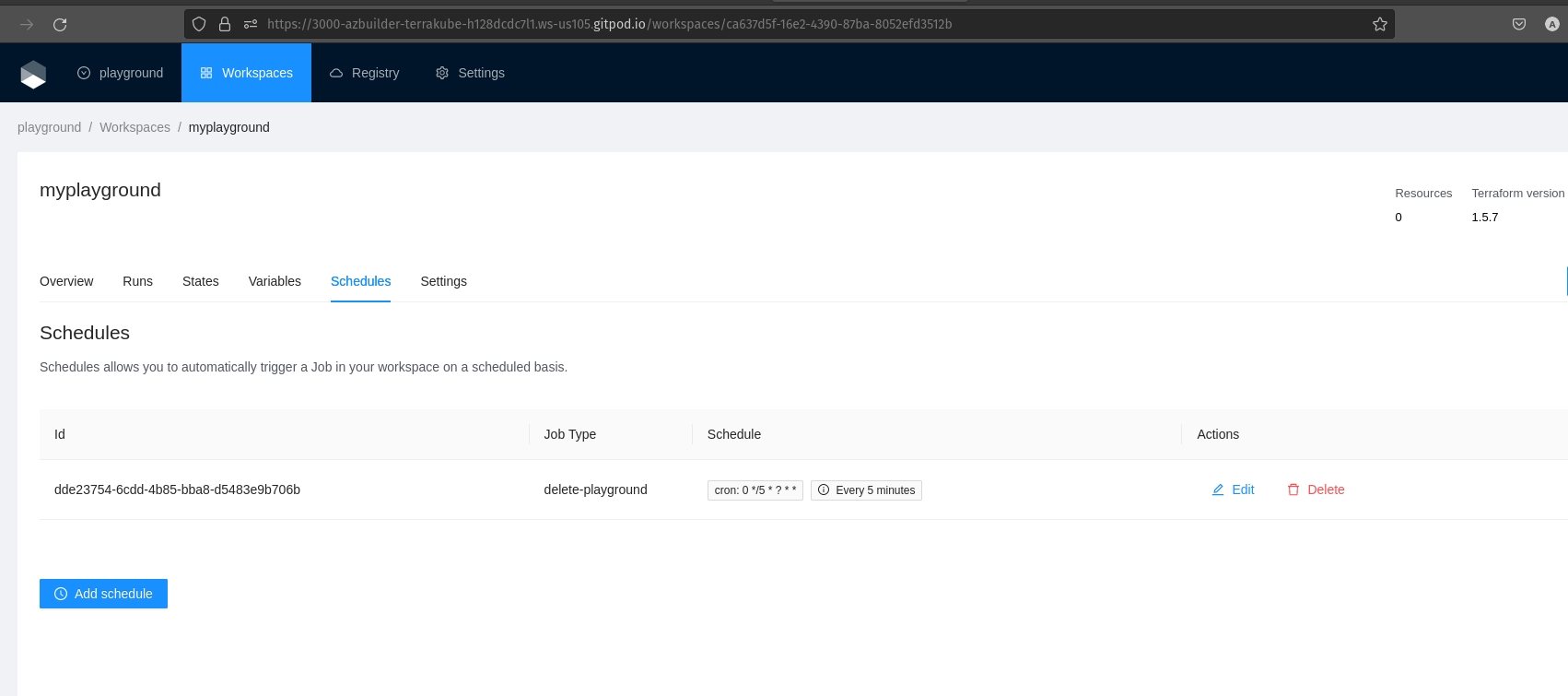

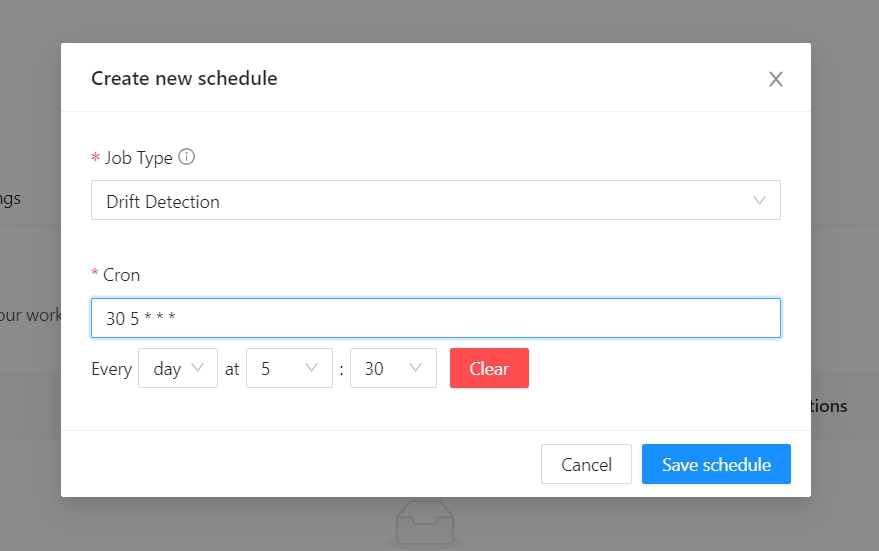

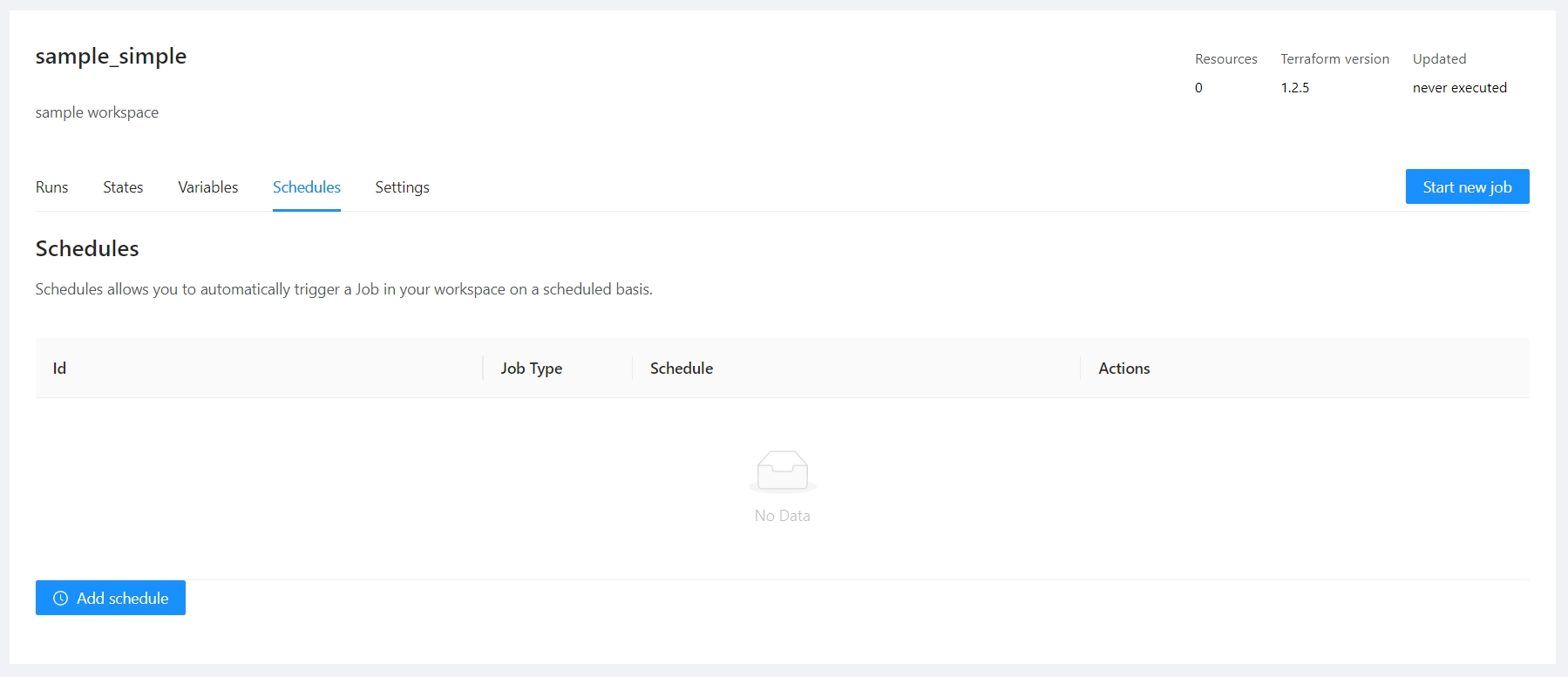

Terrakube allows you to schedule a job inside a workspace, so that a specific template is executed at a specific time.

To create a workspace schedule click the "schedule" tab and click "Add schedule" like the following image.

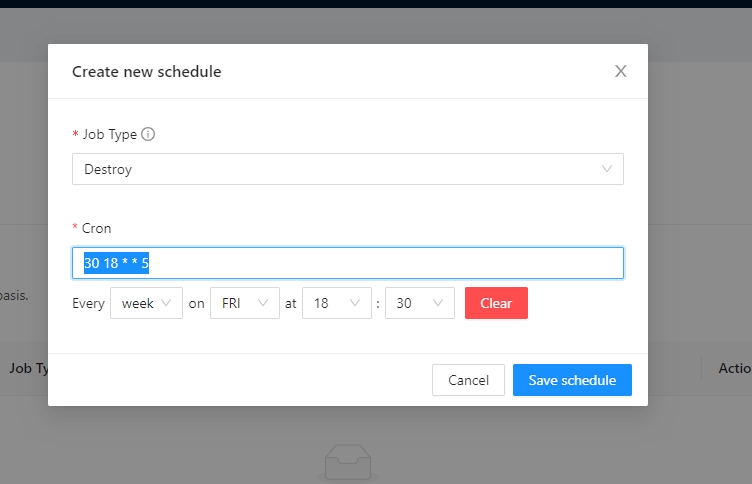

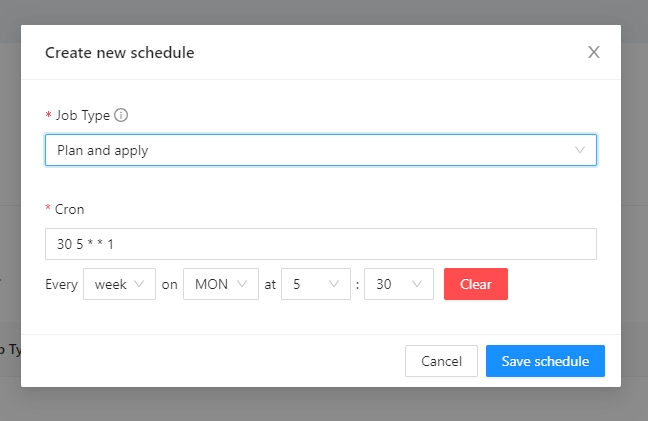

This is useful, for example, when you want to destroy your infrastructure at the end of every week to save money with your cloud provider and recreate it every Monday morning.

Example.

Let's destroy the workspace every Friday at 6 pm

Let's create the workspace every Monday at 5:30 am

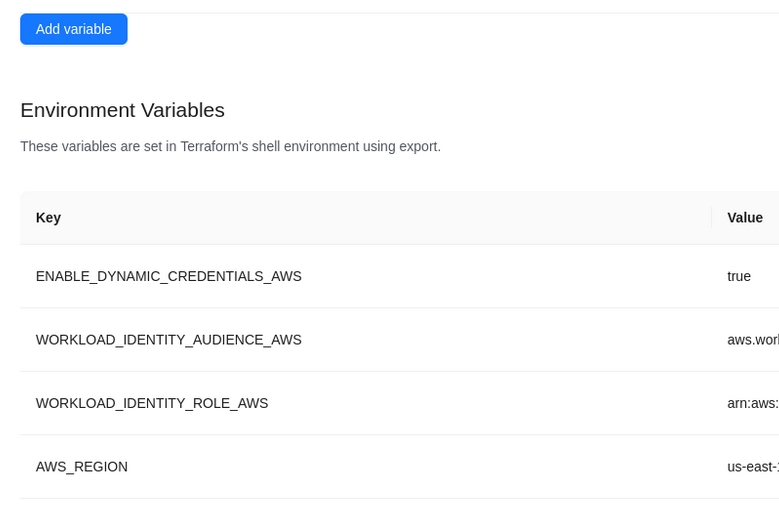

The dynamic provider credential setup in AWS can be done with the Terraform code available in the following link:

https://github.com/AzBuilder/terrakube/tree/main/dynamic-credential-setup/aws

The code will also create a sample workspace with all the required environment variables that can be used to test the functionality using the CLI driven workflow.

Make sure to mount your public and private key to the API container as explained

Validate the following terrakube api endpoints are working:

Set terraform variables using: "variables.auto.tfvars"

Run Terraform apply to create all the federated credential setup in AWS and a sample workspace in terrakube for testing

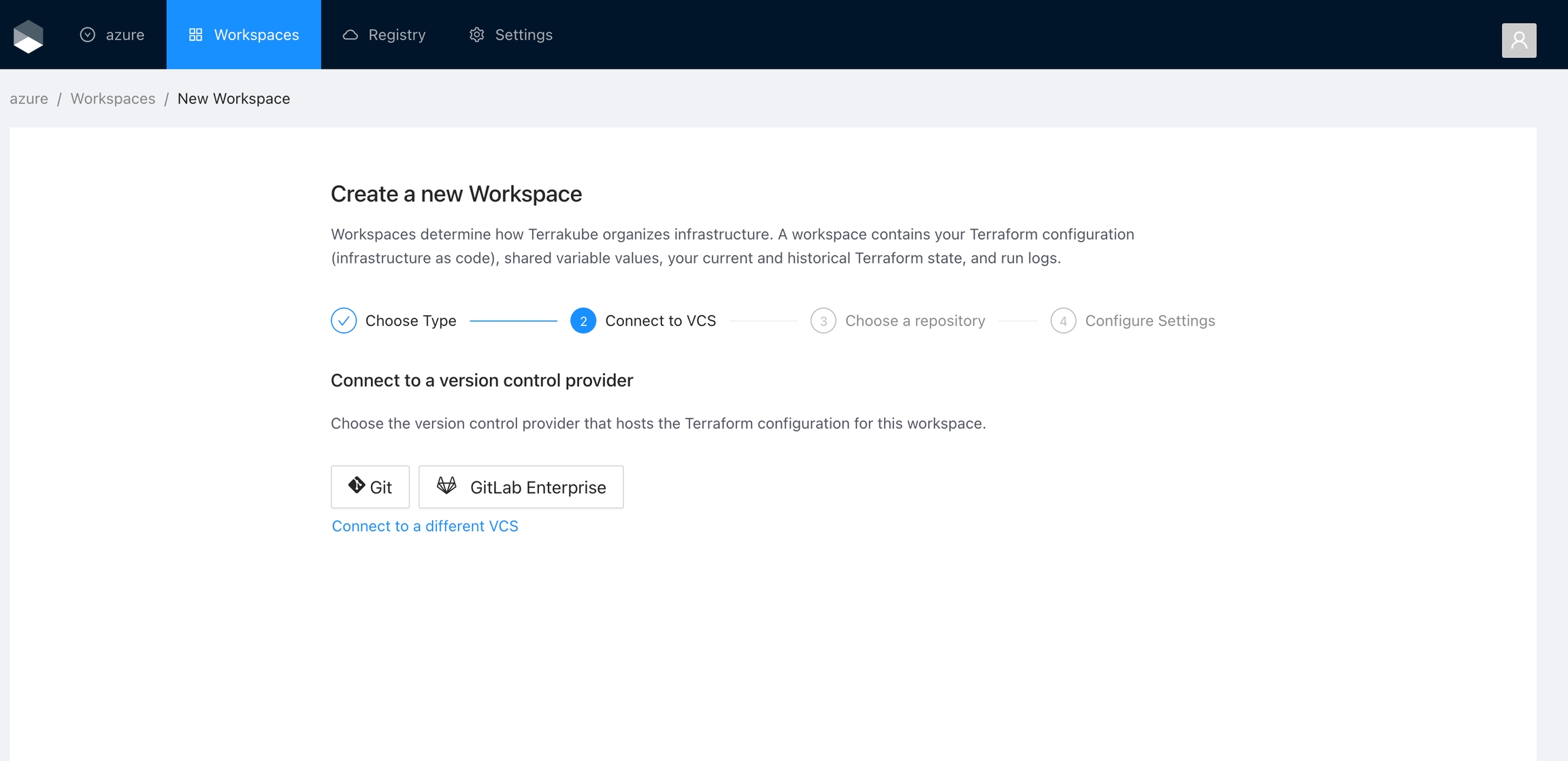

To test the following terraform code can be used:

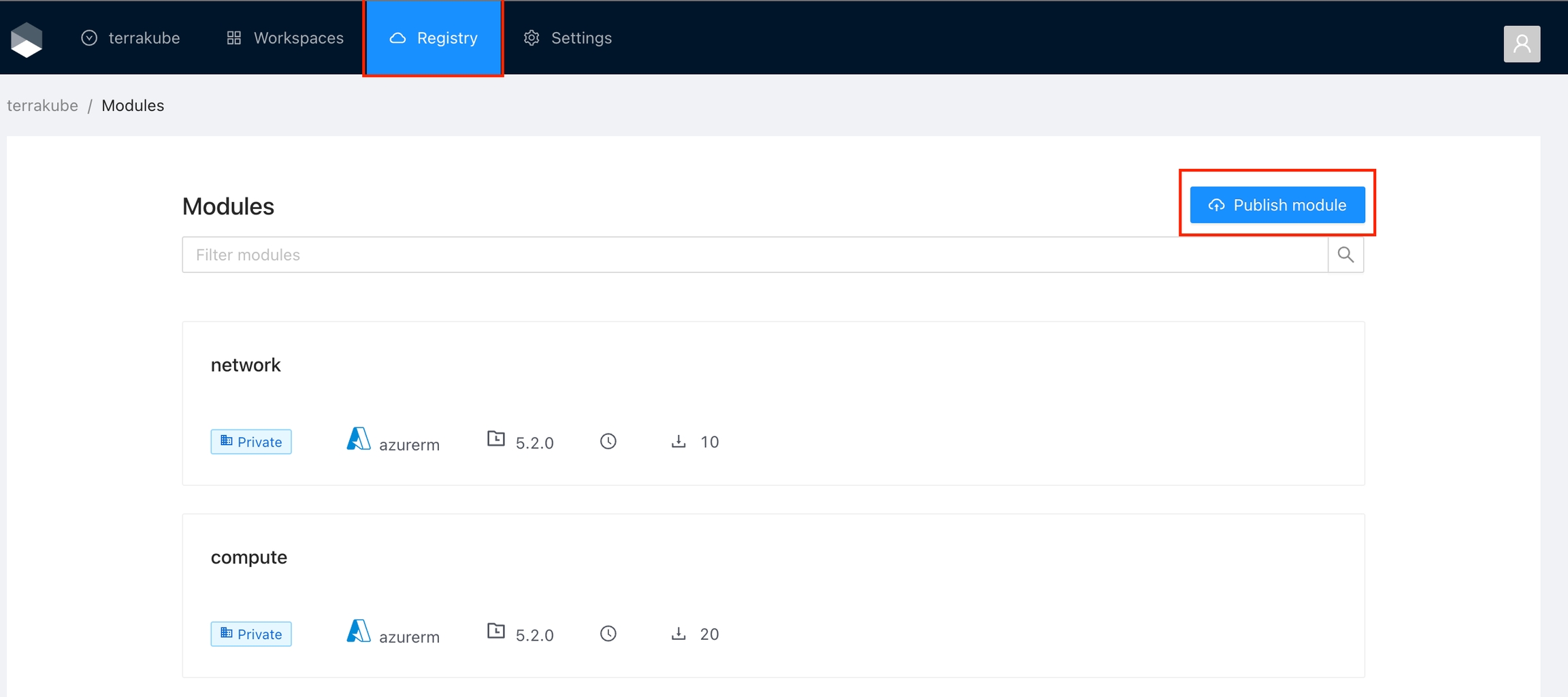

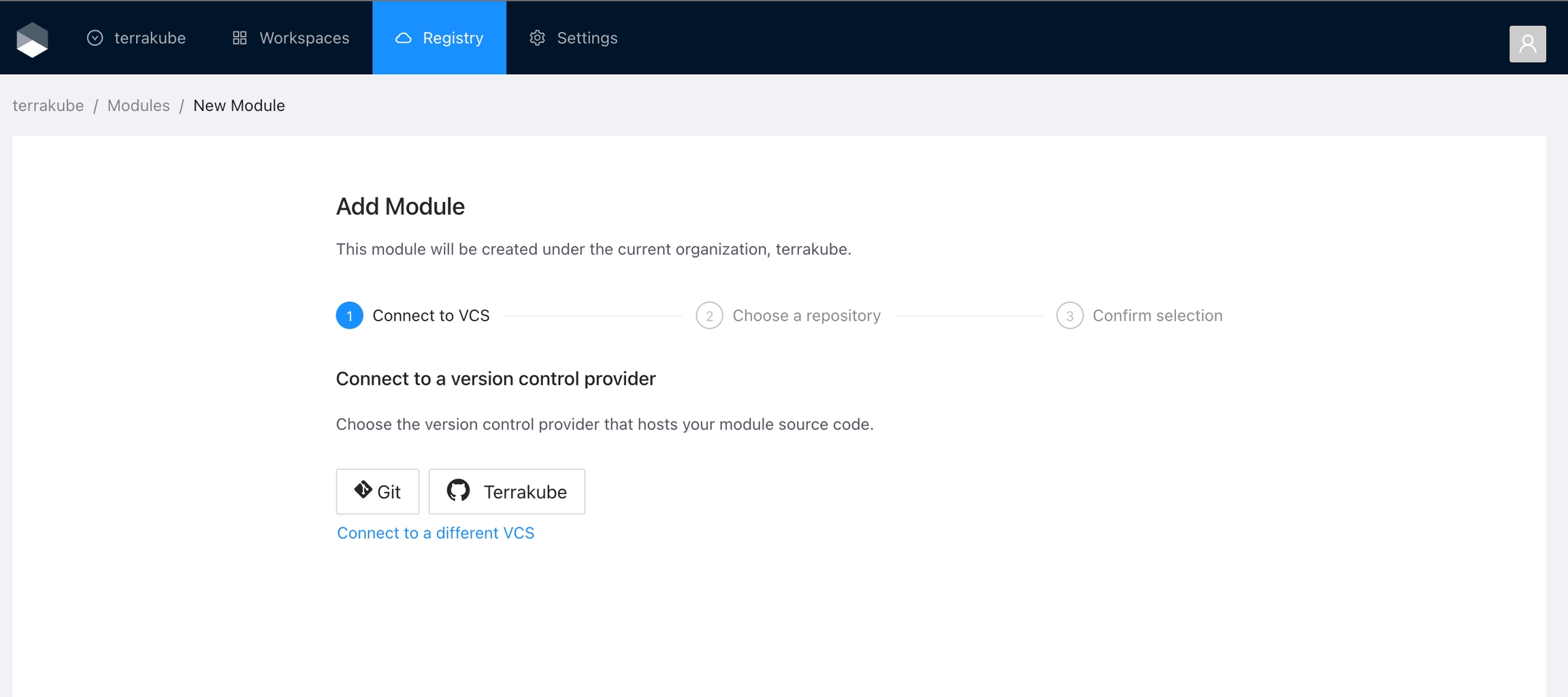

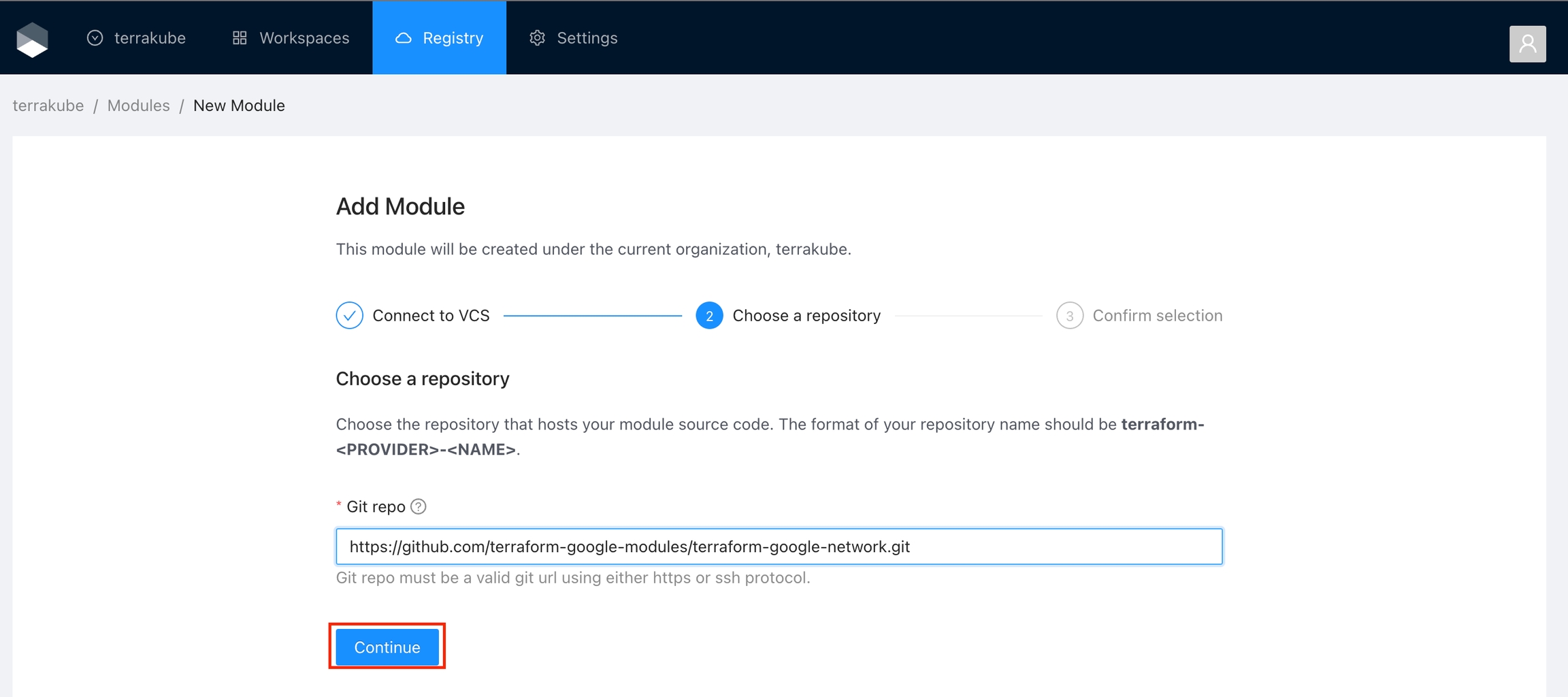

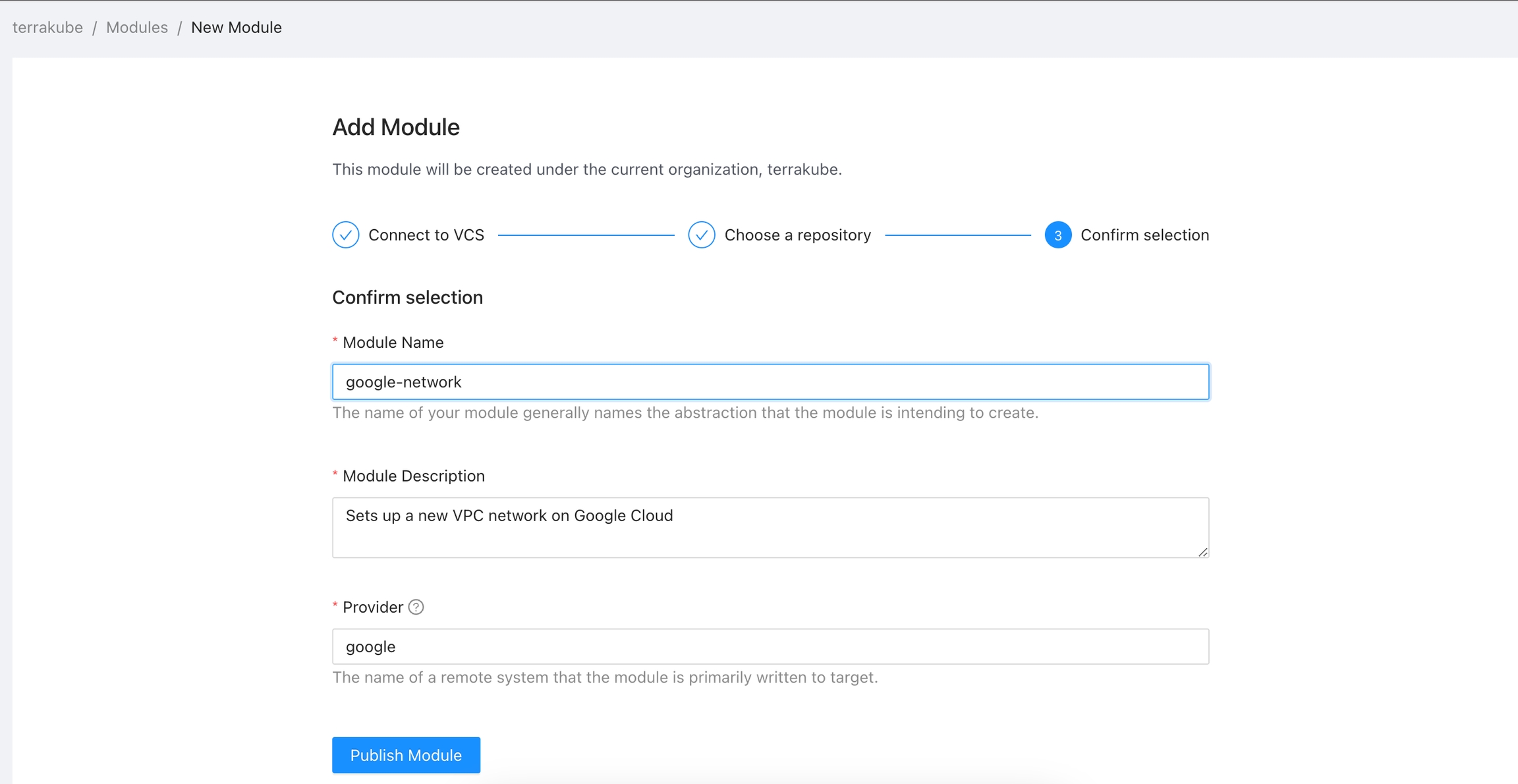

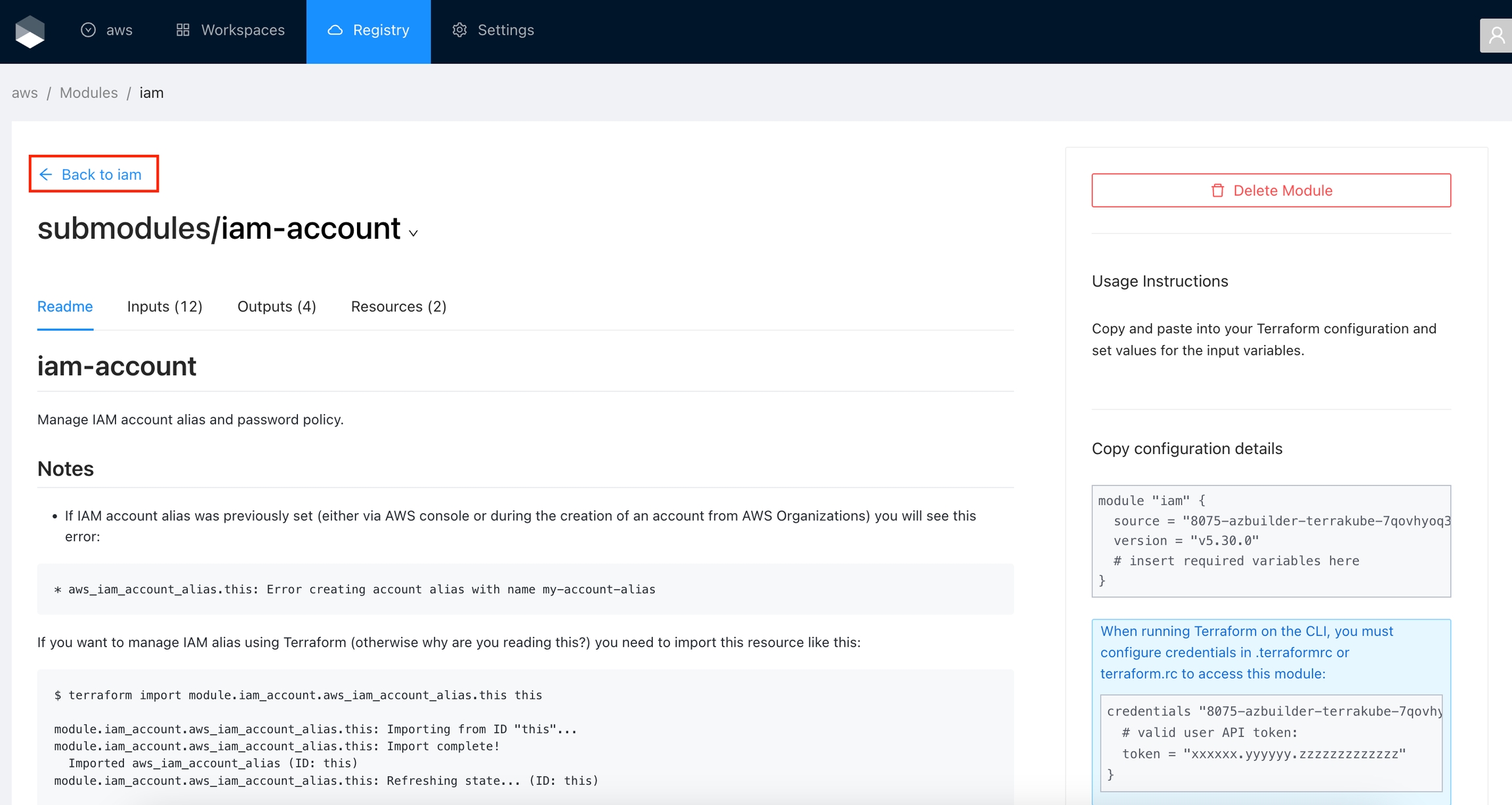

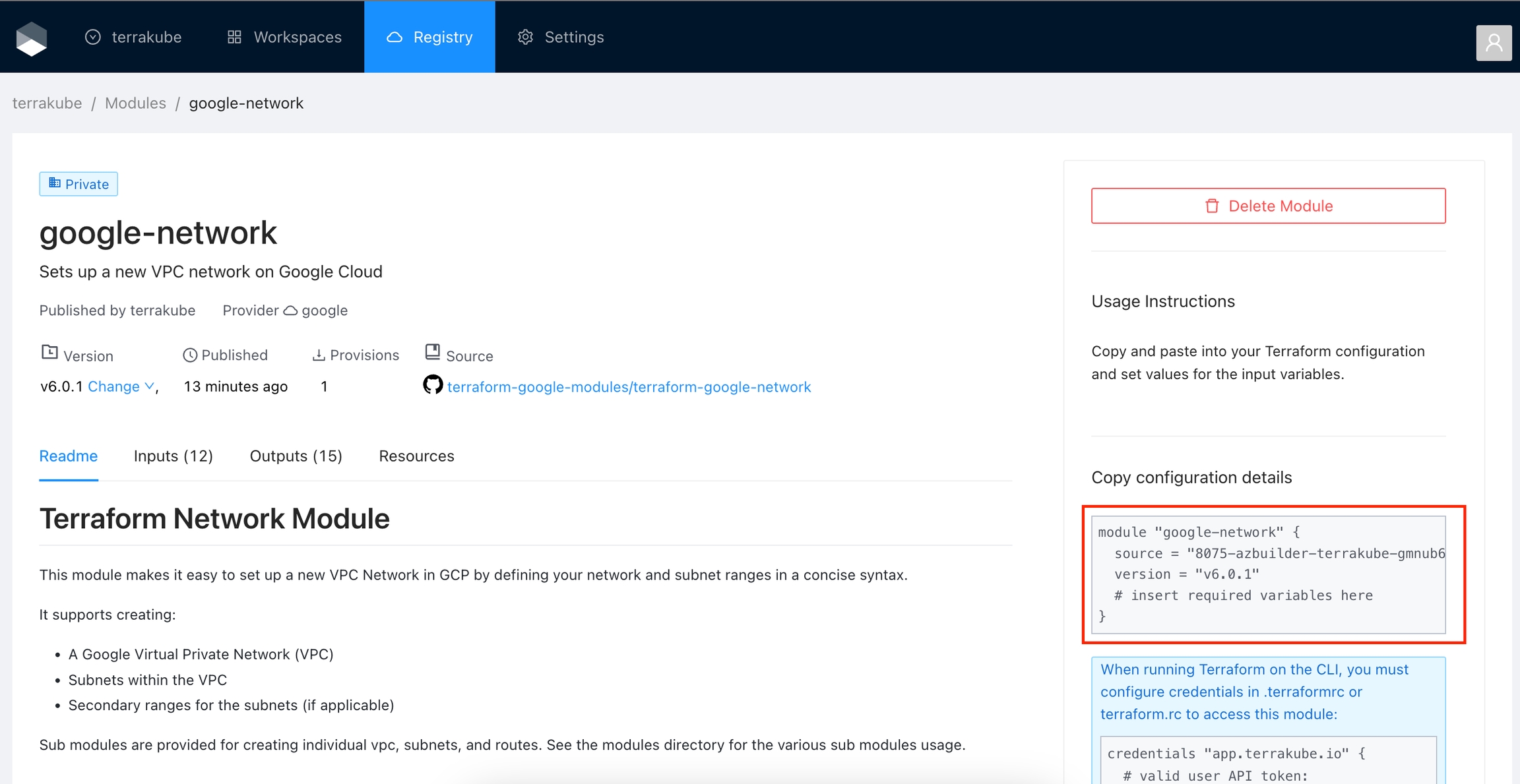

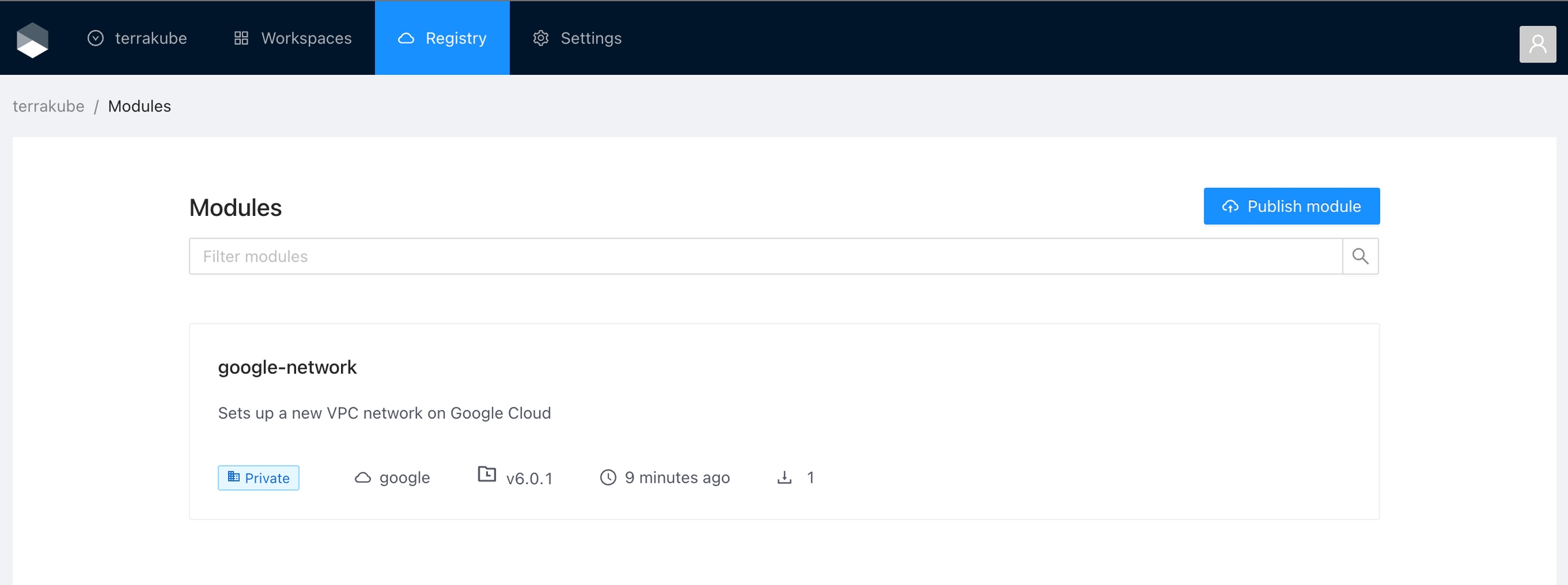

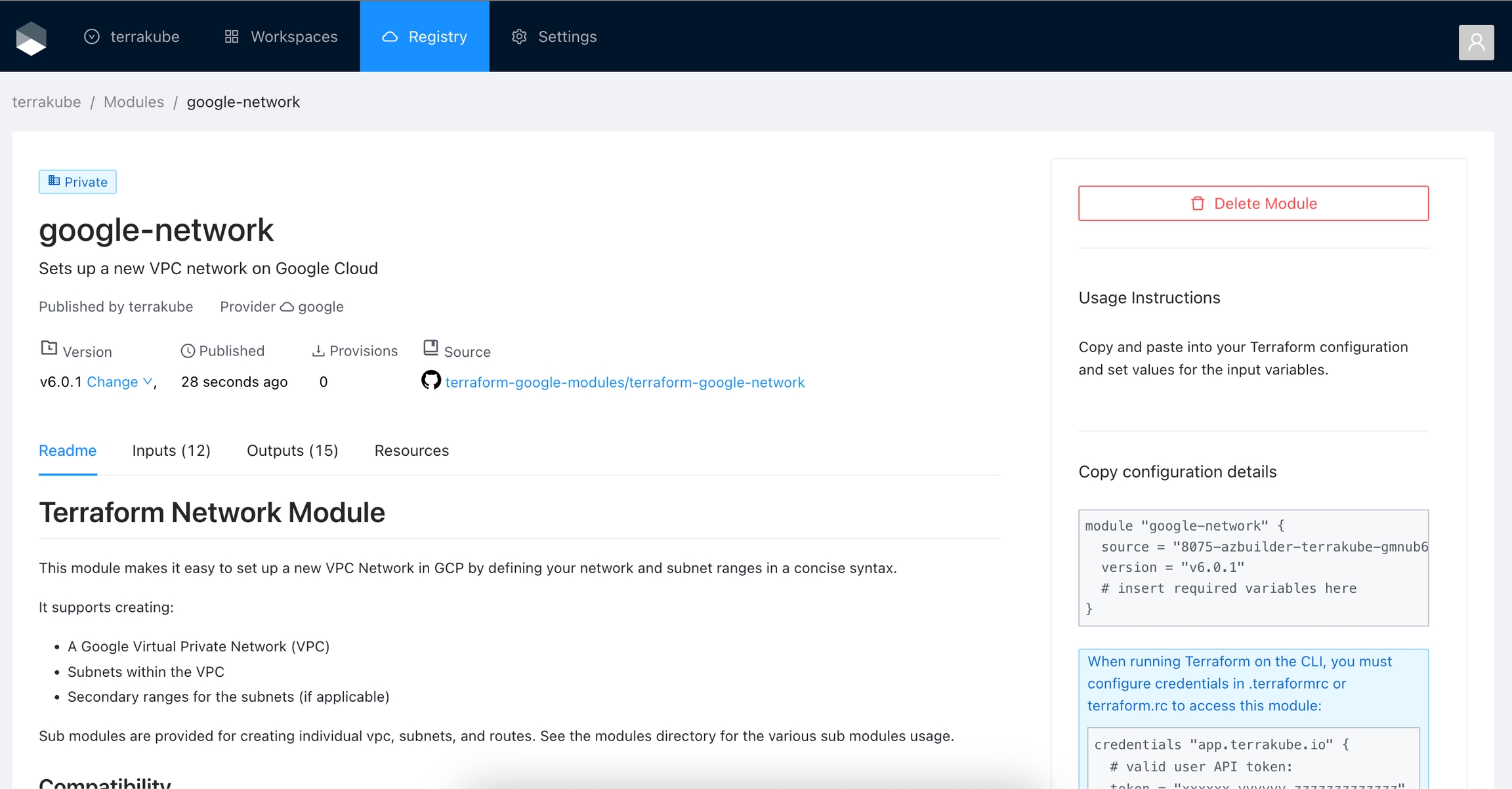

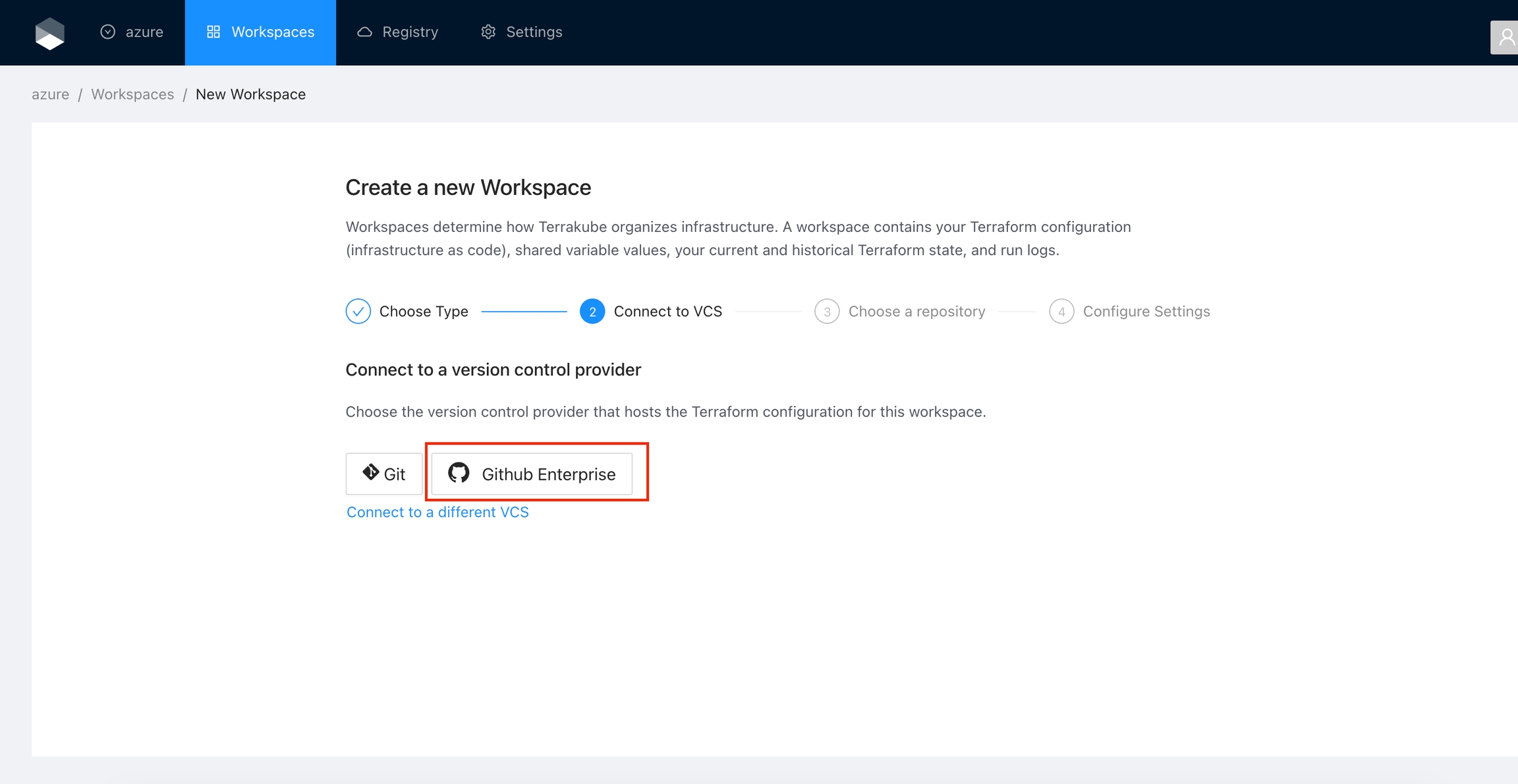

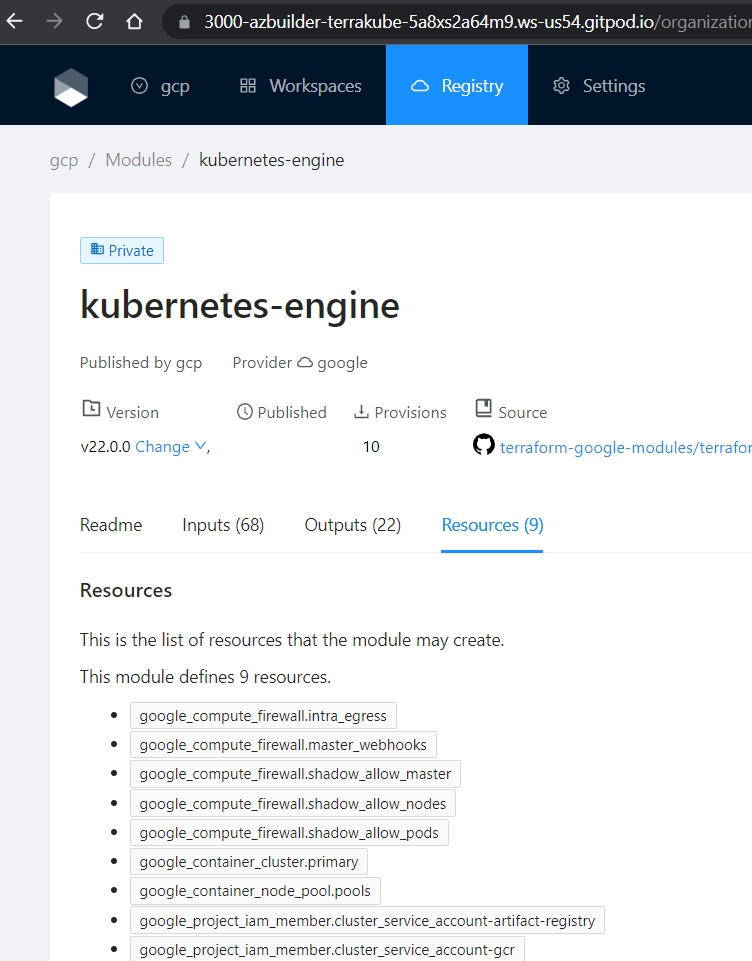

Click Registry in the main menu and then click the Publish module button

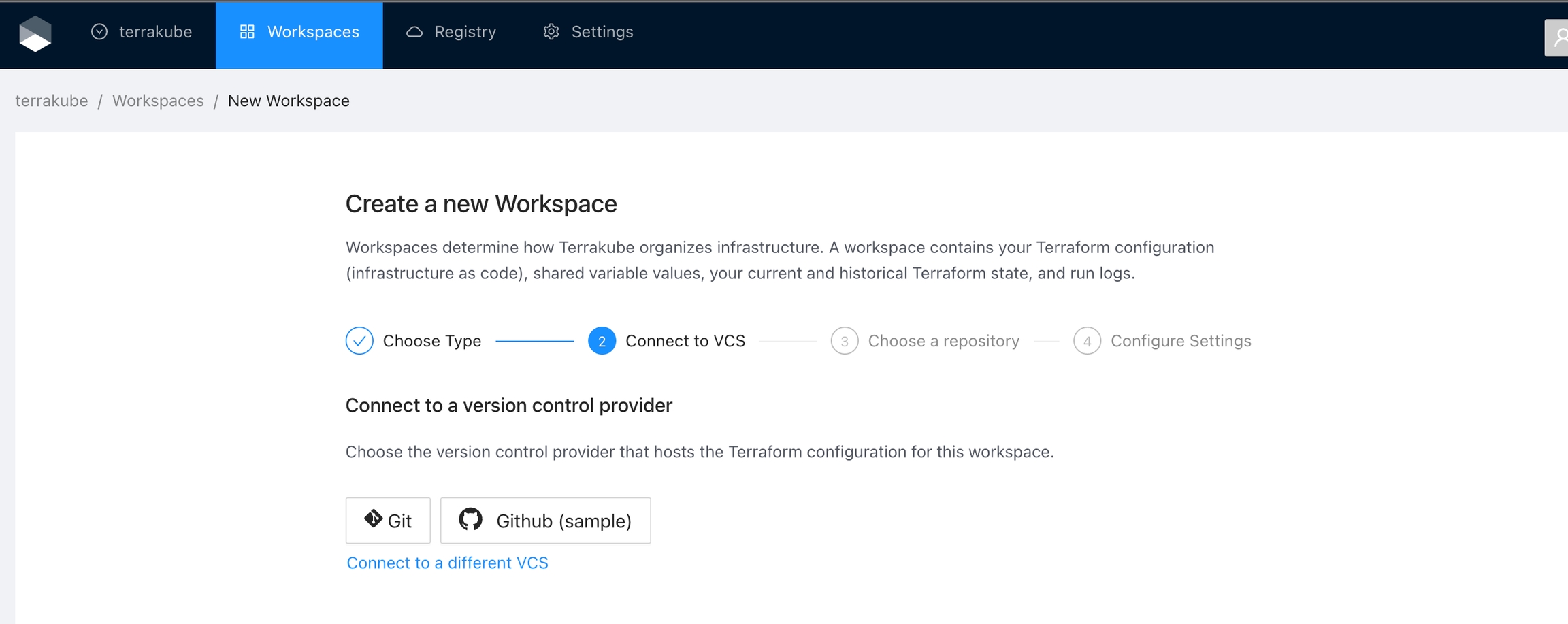

Select an existing version control provider or click Connect to a different VCS to configure a new one. See VCS Providers for more details.

Provide the git repository URL and click the Continue button.

In the next screen, configure the required fields and click the Publish Module button.

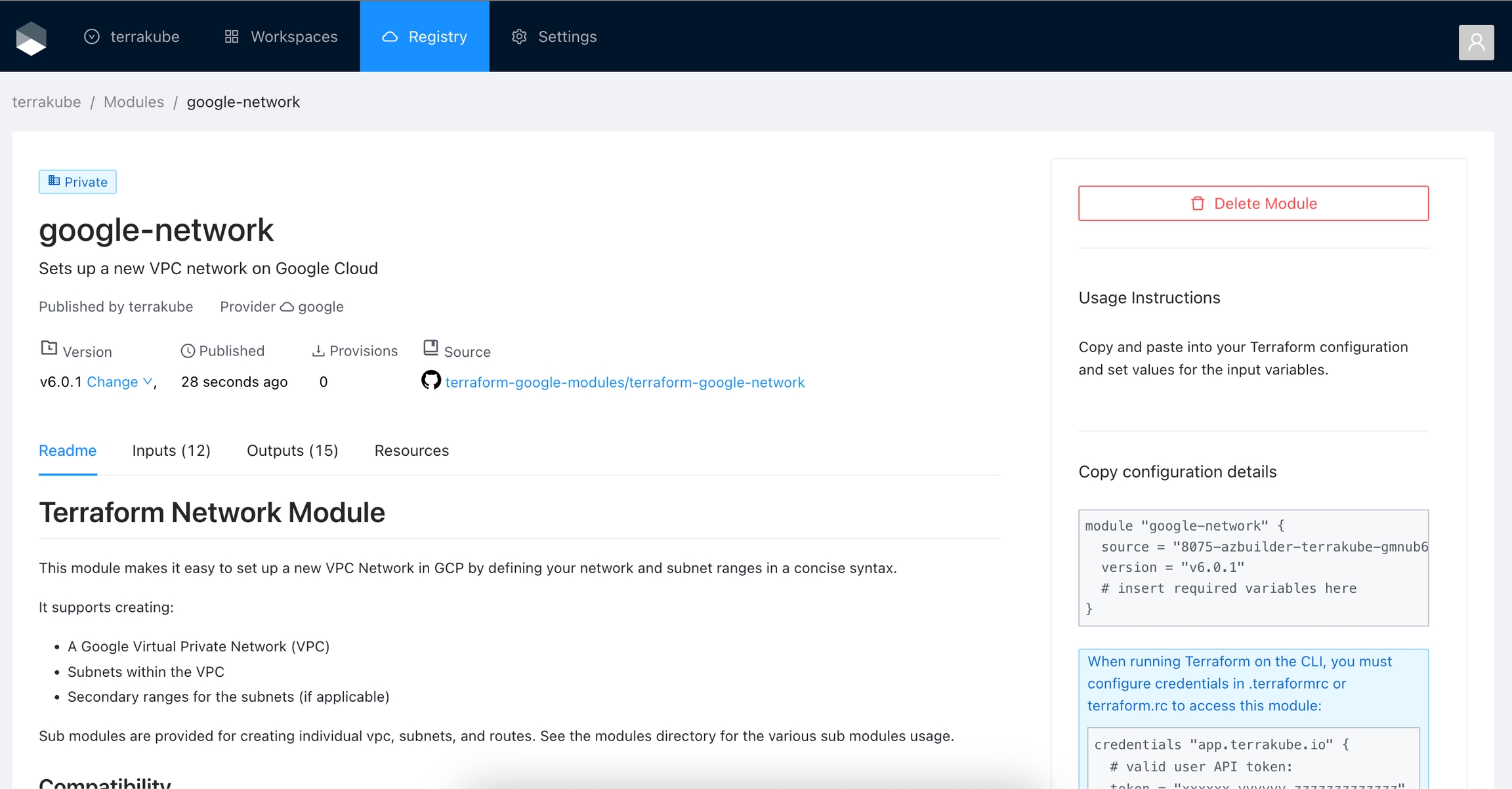

The module will be published inside the specified organization. On the details page, you can view available versions, read documentation, and copy a usage example.

To release a new version of a module, create a new release tag in its VCS repository. The registry automatically imports the new version.

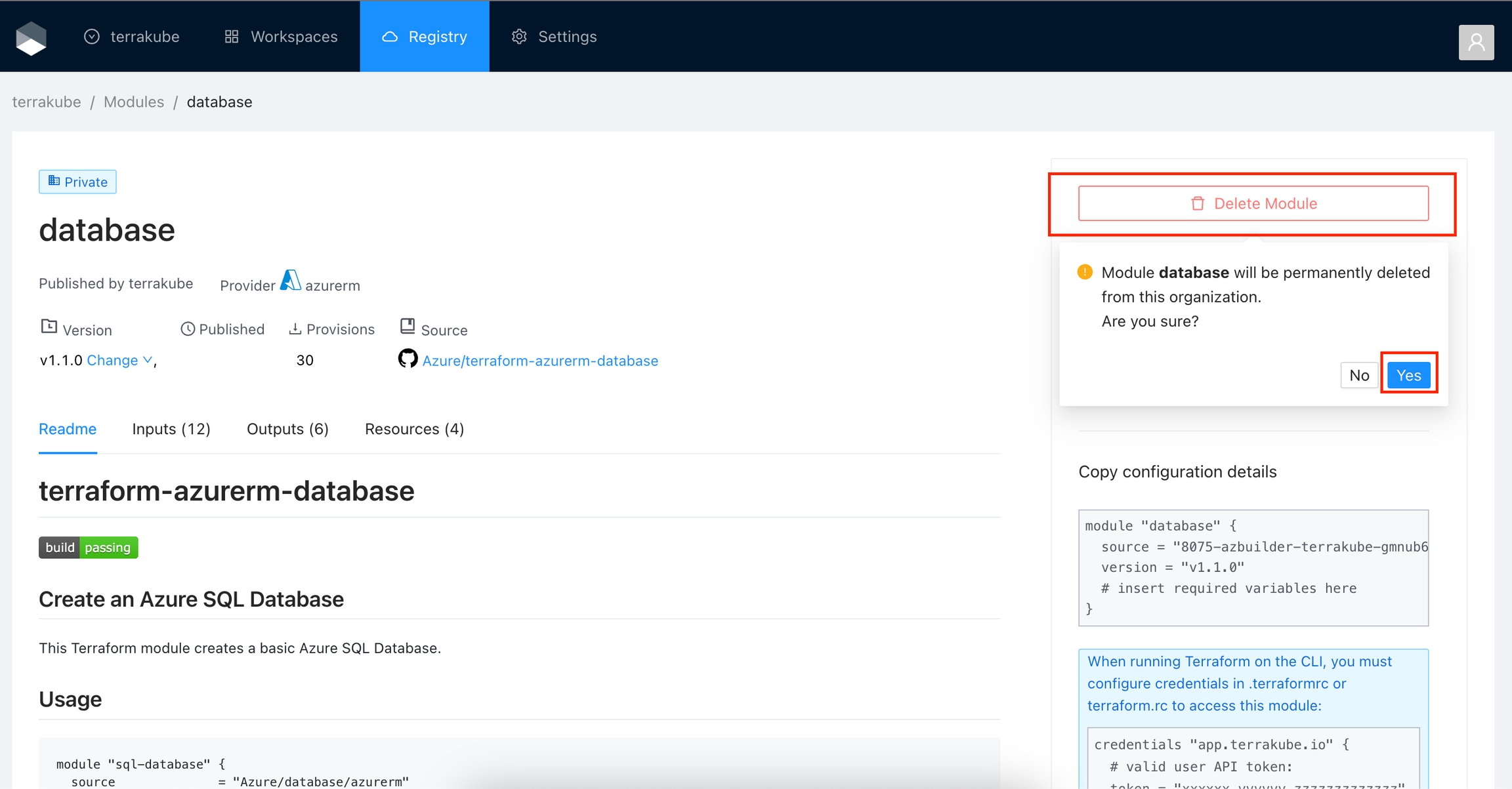

In the Module details page click the Delete Module button and then click the Yes button to confirm

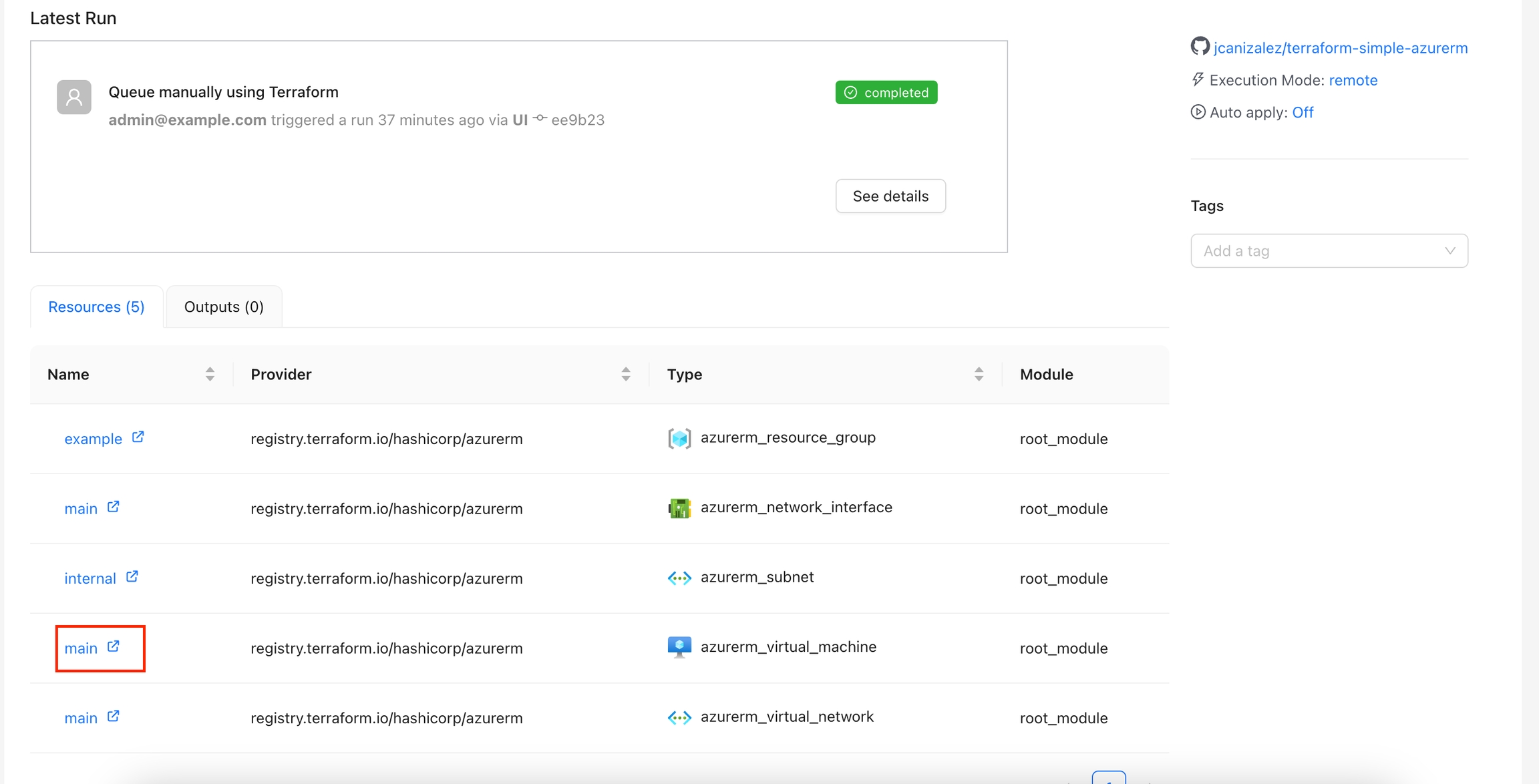

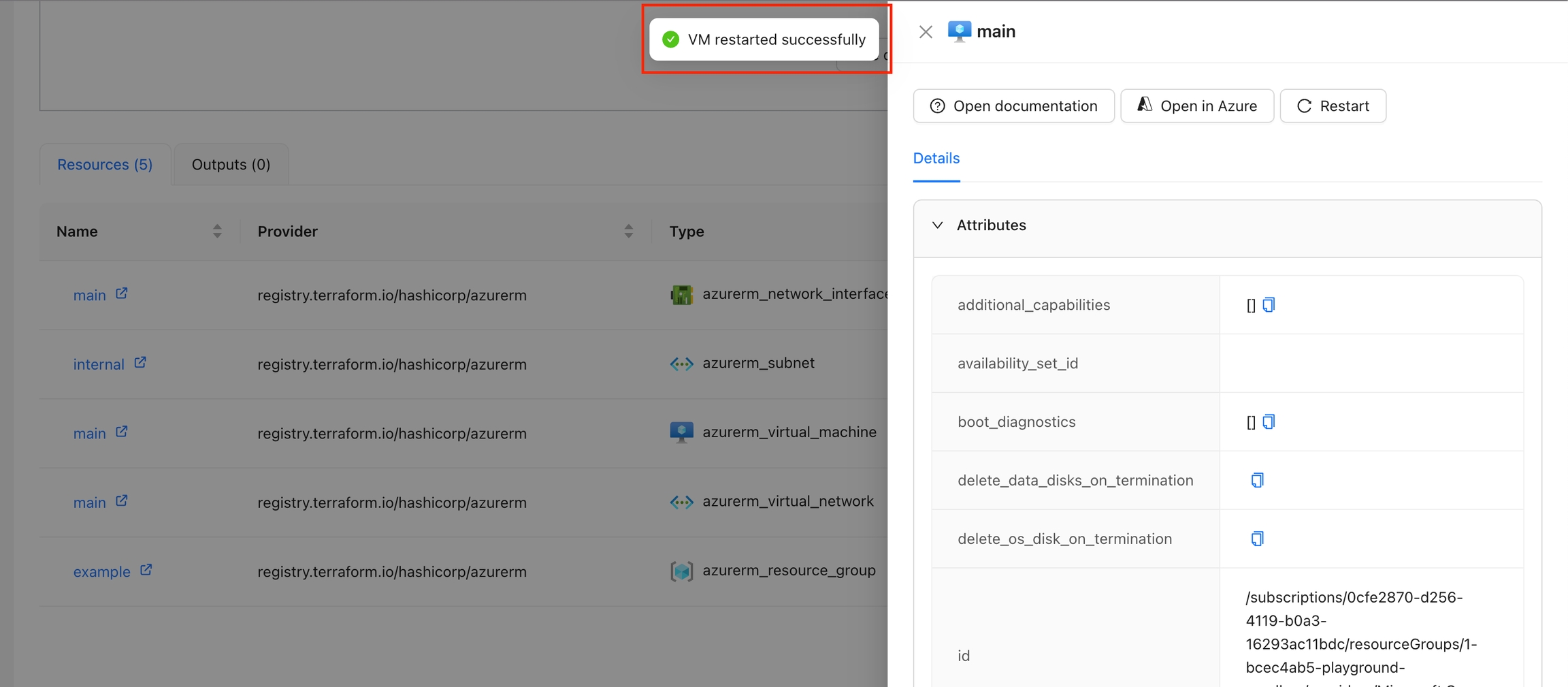

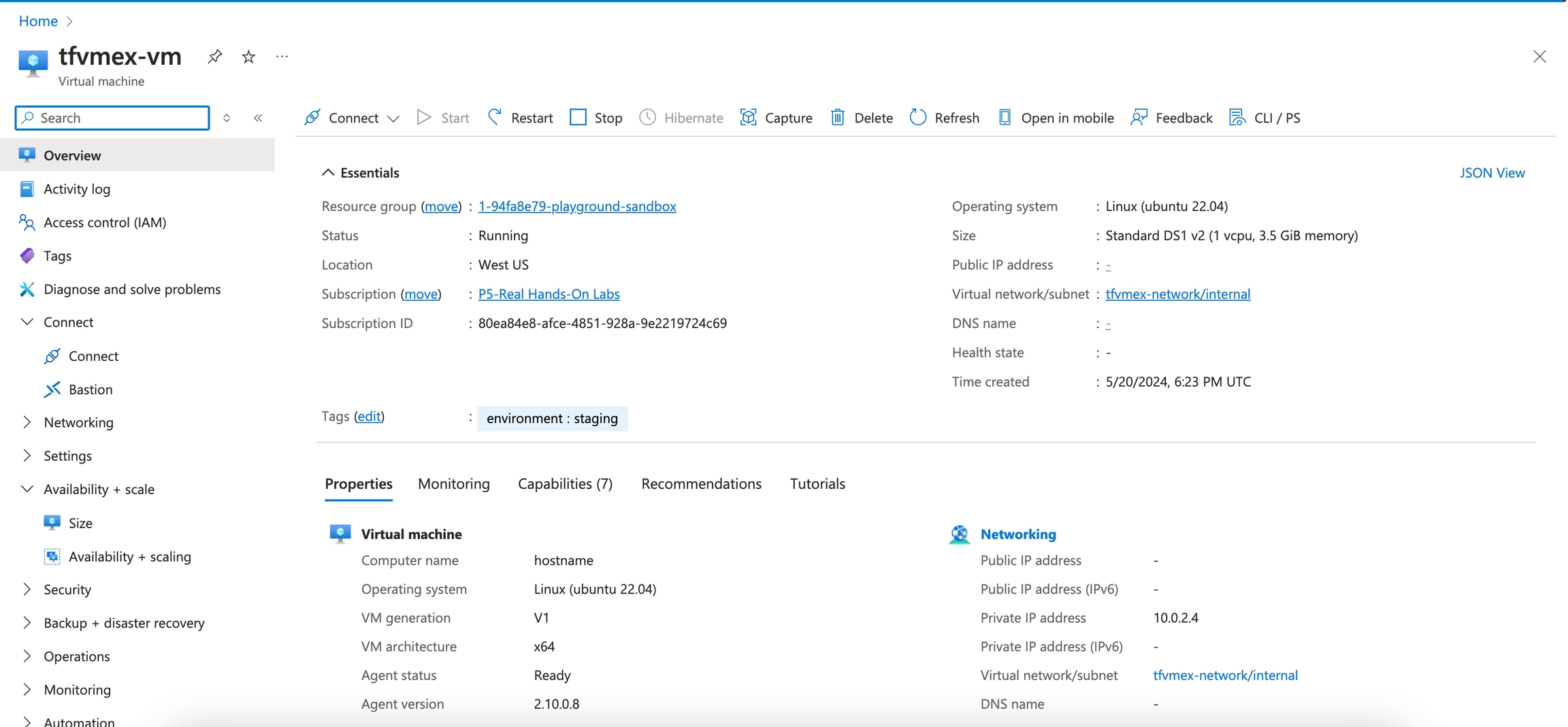

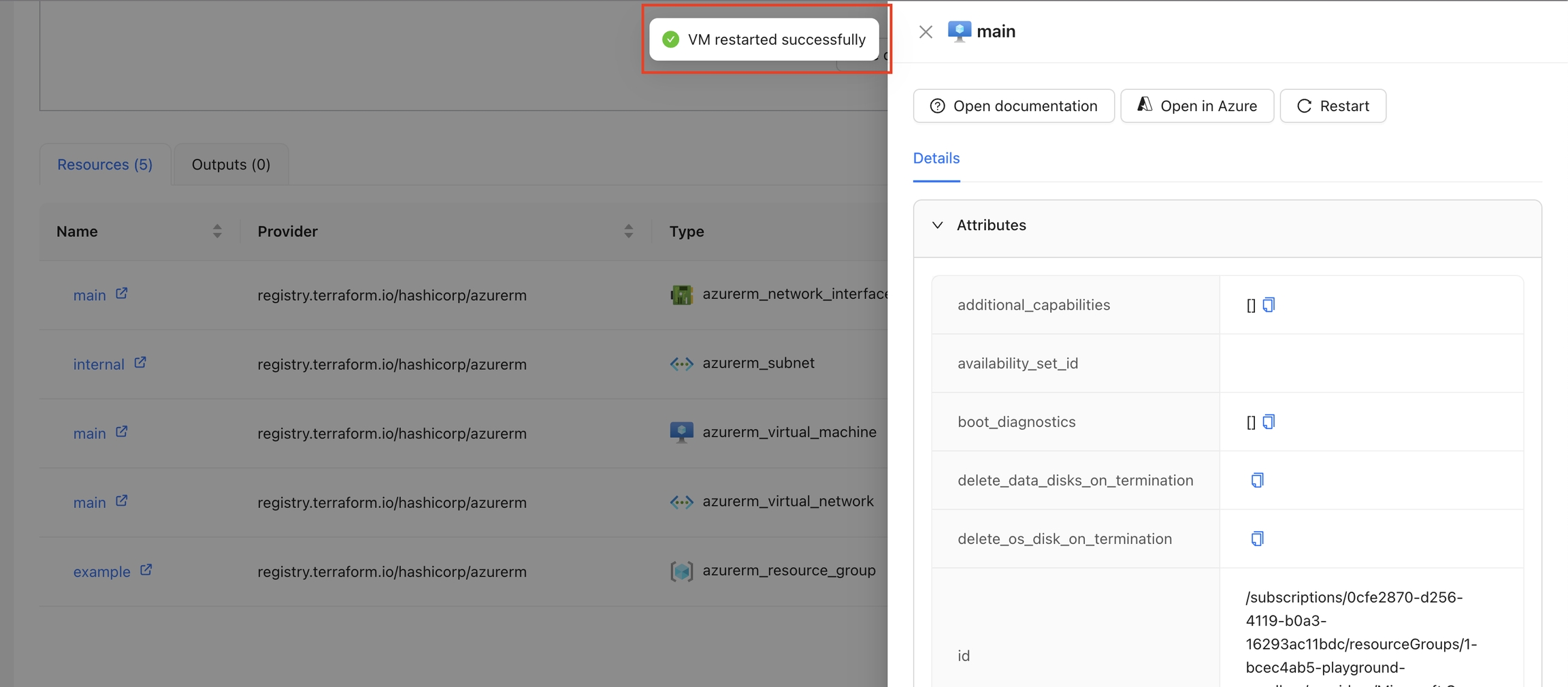

The Restart VM action is designed to restart a specific virtual machine in Azure. By using the context of the current state, this action fetches an Azure access token and issues a restart command to the specified VM. The action ensures that the VM is restarted successfully and provides feedback on the process.

By default, this Action is disabled and when enabled will appear for all resources that have resource type azurerm_virtual_machine. If you want to display this action only for certain resources, please check display criteria.

This action requires the following variables as Workspace Variables or Global Variables in the Workspace Organization:

ARM_CLIENT_ID: The Azure Client ID used for authentication.

ARM_CLIENT_SECRET: The Azure Client Secret used for authentication.

ARM_TENANT_ID: The Azure Tenant ID associated with your subscription.

Navigate to the Workspace Overview or the Visual State and click on a resource name.

In the Resource Drawer, click the "Restart" button.

The VM will be restarted, and a success or error message will be displayed.

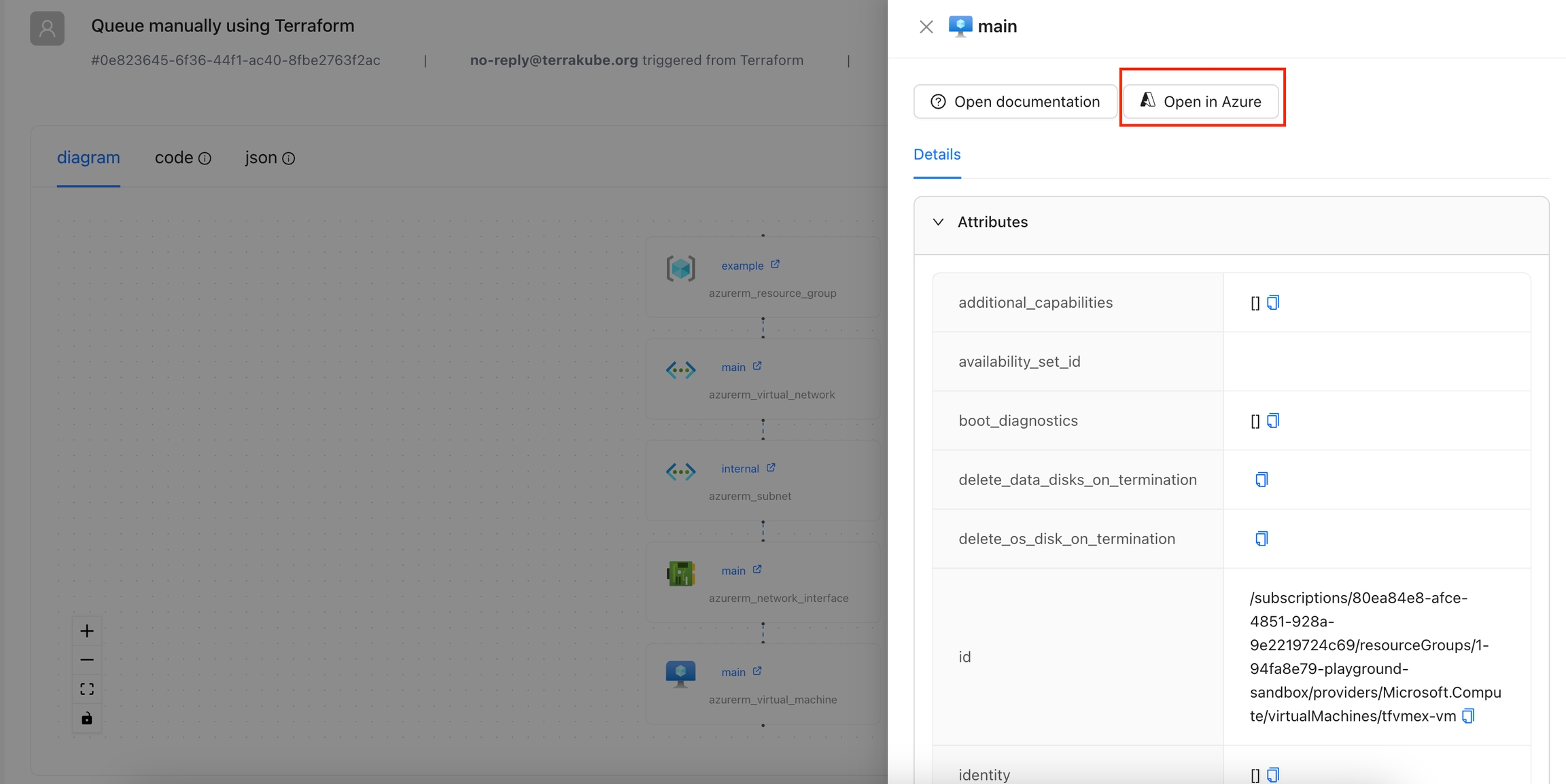

The Open In Azure Portal action is designed to provide quick access to the Azure portal for a specific resource. By using the context of the current state, this action constructs the appropriate URL and presents it as a clickable button.

By default, this Action is disabled and when enabled will appear for all the azurerm resources. If you want to display this action only for certain resources, please check display criteria.

Navigate to the Workspace Overview or the Visual State and click a resource name.

In the Resource Drawer, click the Open in Azure button.

You will be able to see the Azure portal for that resource.

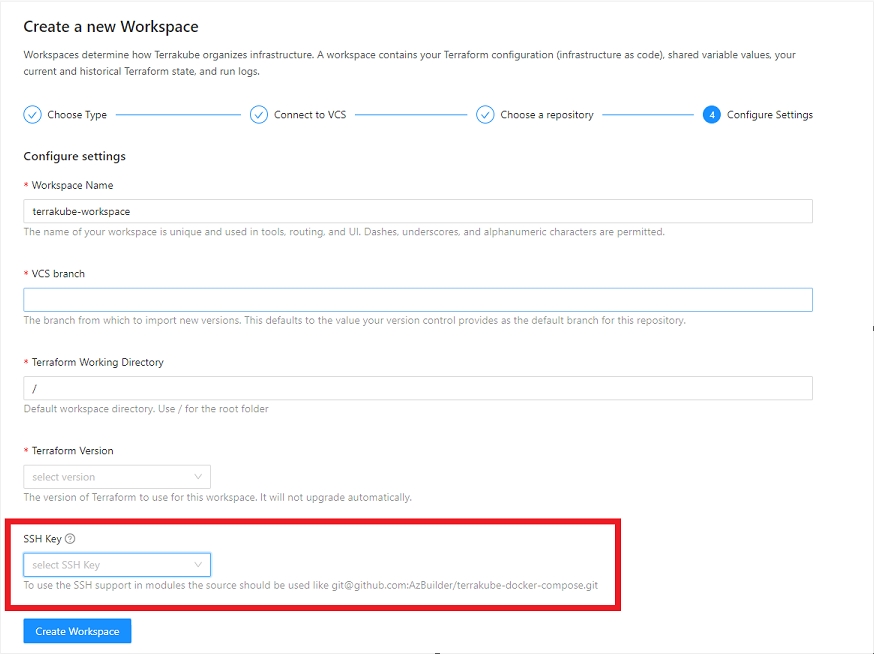

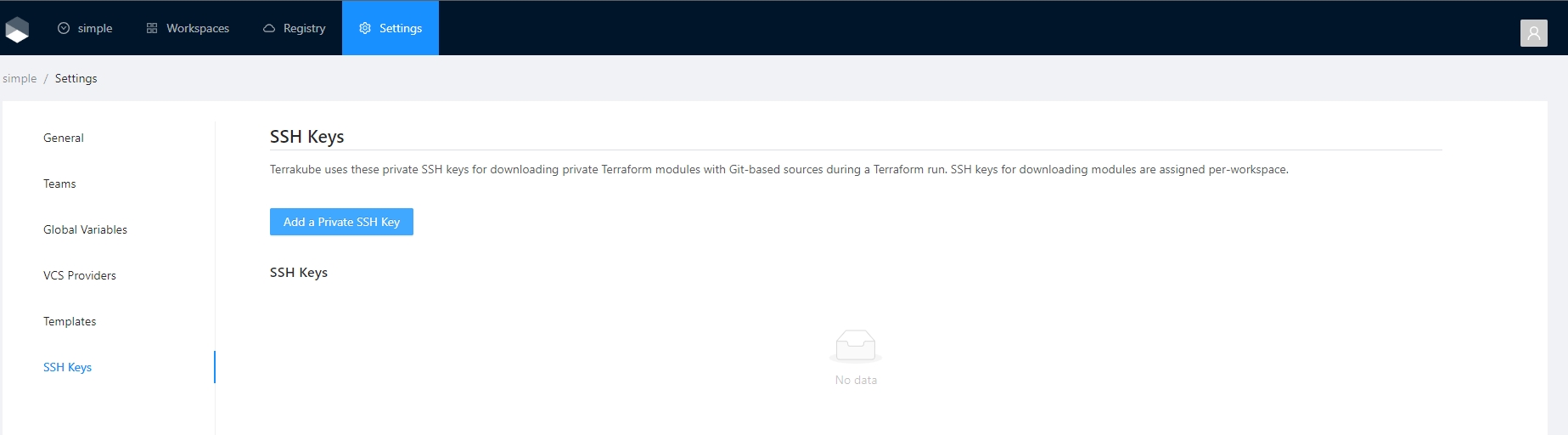

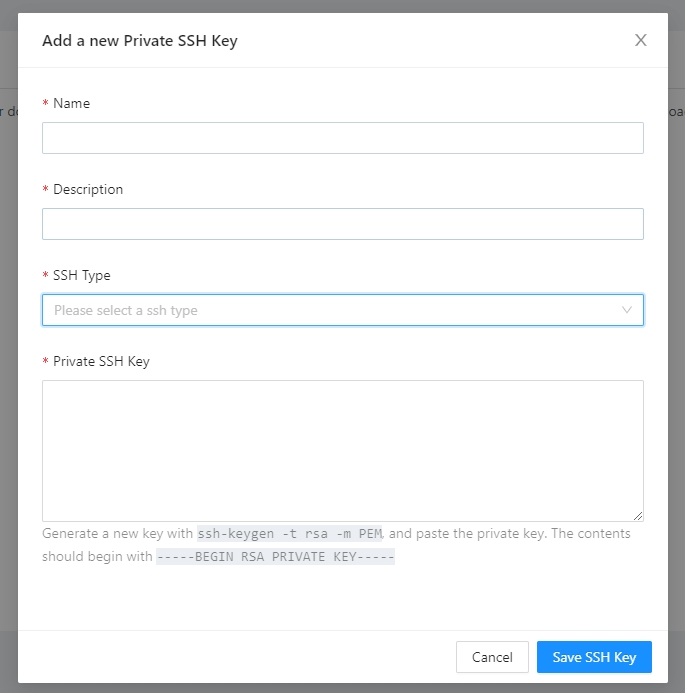

Terrakube can use SSH keys to authenticate to your private repositories. The SSH keys can be uploaded inside the settings menu.

To handle SSH keys inside Terrakube your team should have "Manage VCS settings" access

Terrakube supports RSA and ED25519 keys

SSH keys can be used to authenticate to private repositories where you can store modules or workspaces

Once SSH keys are added inside your organization you can use them like the following example:

Display Criteria offer a flexible way to determine when an action will appear. While you can add logic inside your component to show or hide the action, using display criteria provides additional advantages:

Optimize Rendering: Save on the loading cost of each component.

Multiple Criteria: Add multiple display criteria based on your requirements.

Conditional Settings: Define settings to be used when the criteria are met.

The Action Proxy allows you to call other APIs without needing to add the Terrakube frontend to the CORS settings, which is particularly useful if you don't have access to configure CORS. Additionally, it can be used to inject Workspace variables and Global variables into your requests.

The proxy supports POST, GET, PUT, and DELETE methods. You can access it using the axiosInstance variable, which contains an object.

To invoke the proxy, use the following URL format: ${context.apiUrl}/proxy/v1

Terraform provides a public registry, where you can share and reuse your terraform modules or providers, but sometimes you need to define a Terraform module that can be accessed only within your organization.

Terrakube provides a private registry that works similarly to the public Terraform Registry and helps you share Terraform modules and providers privately across your organization. You can use any of the main VCS providers to maintain your Terraform module code.

In this section:

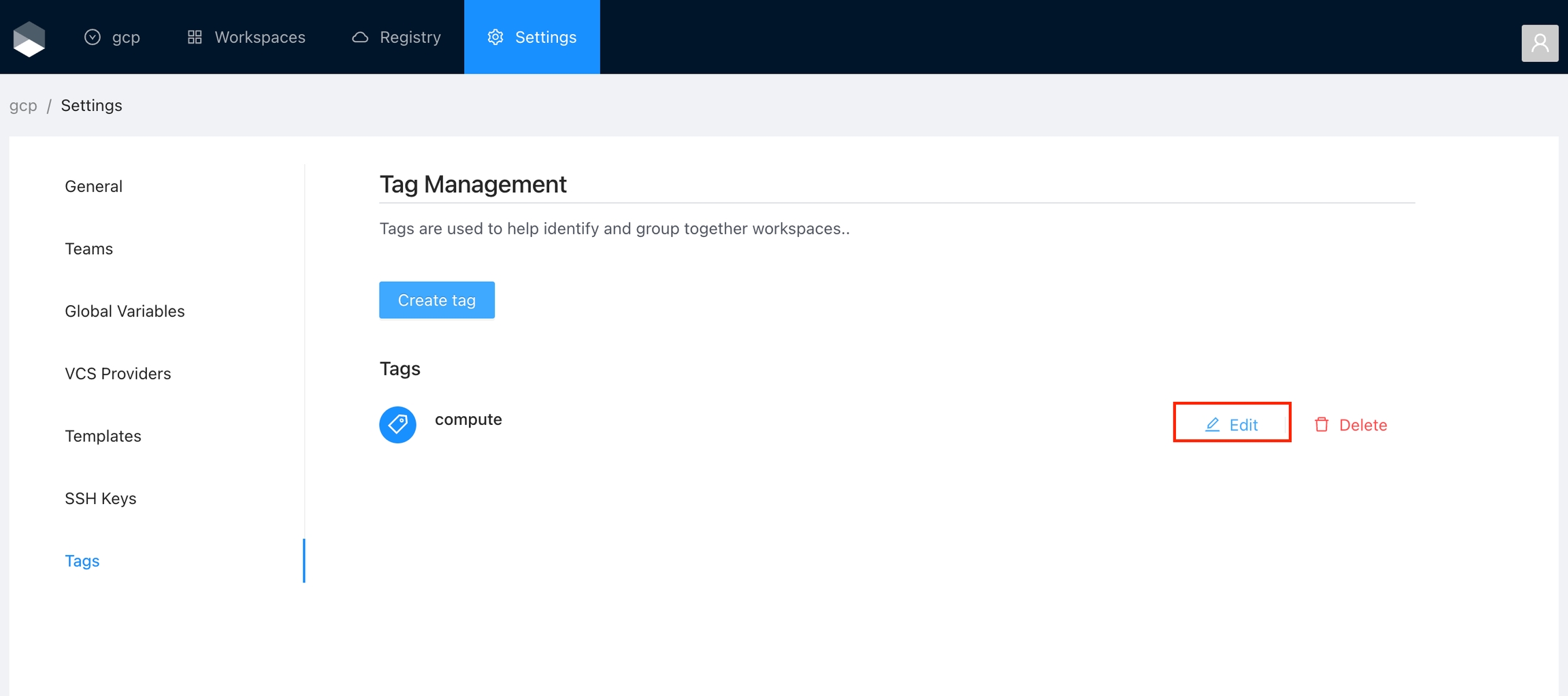

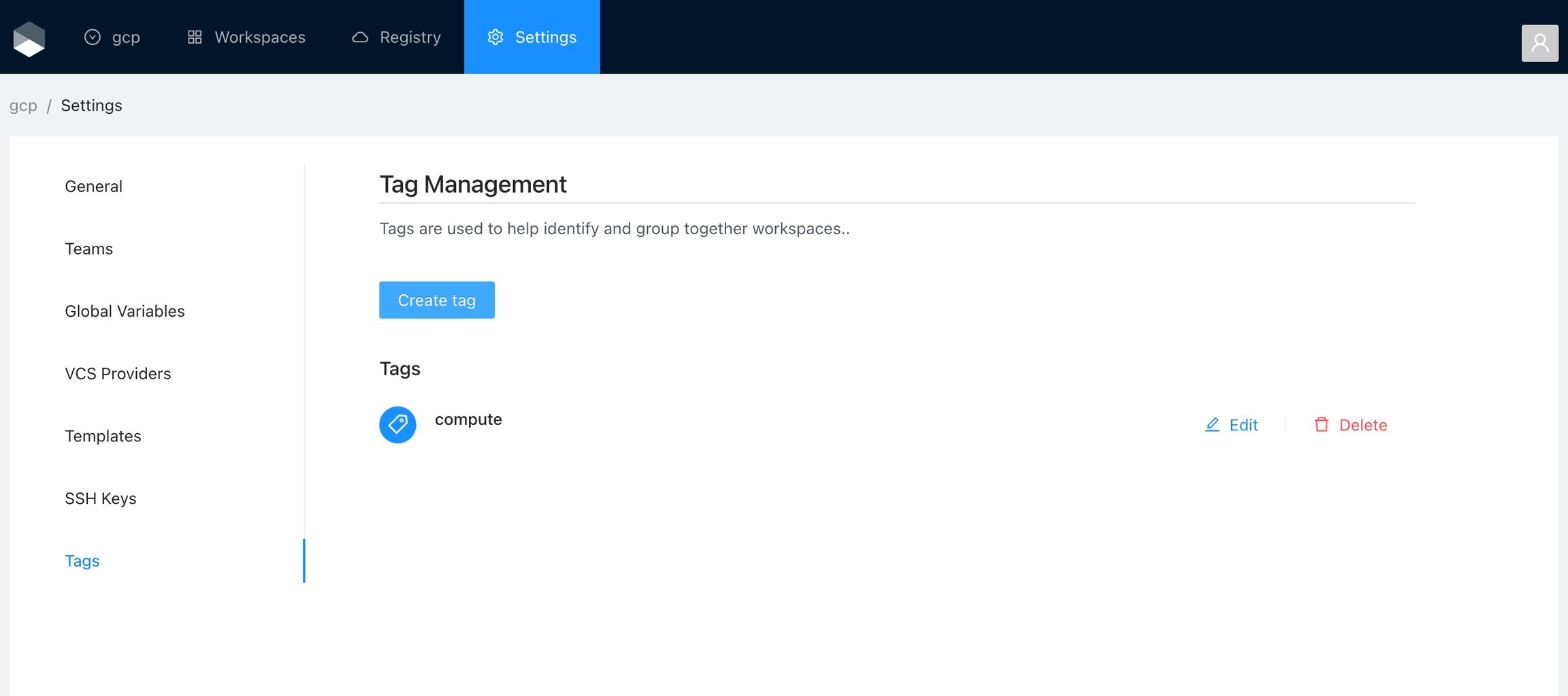

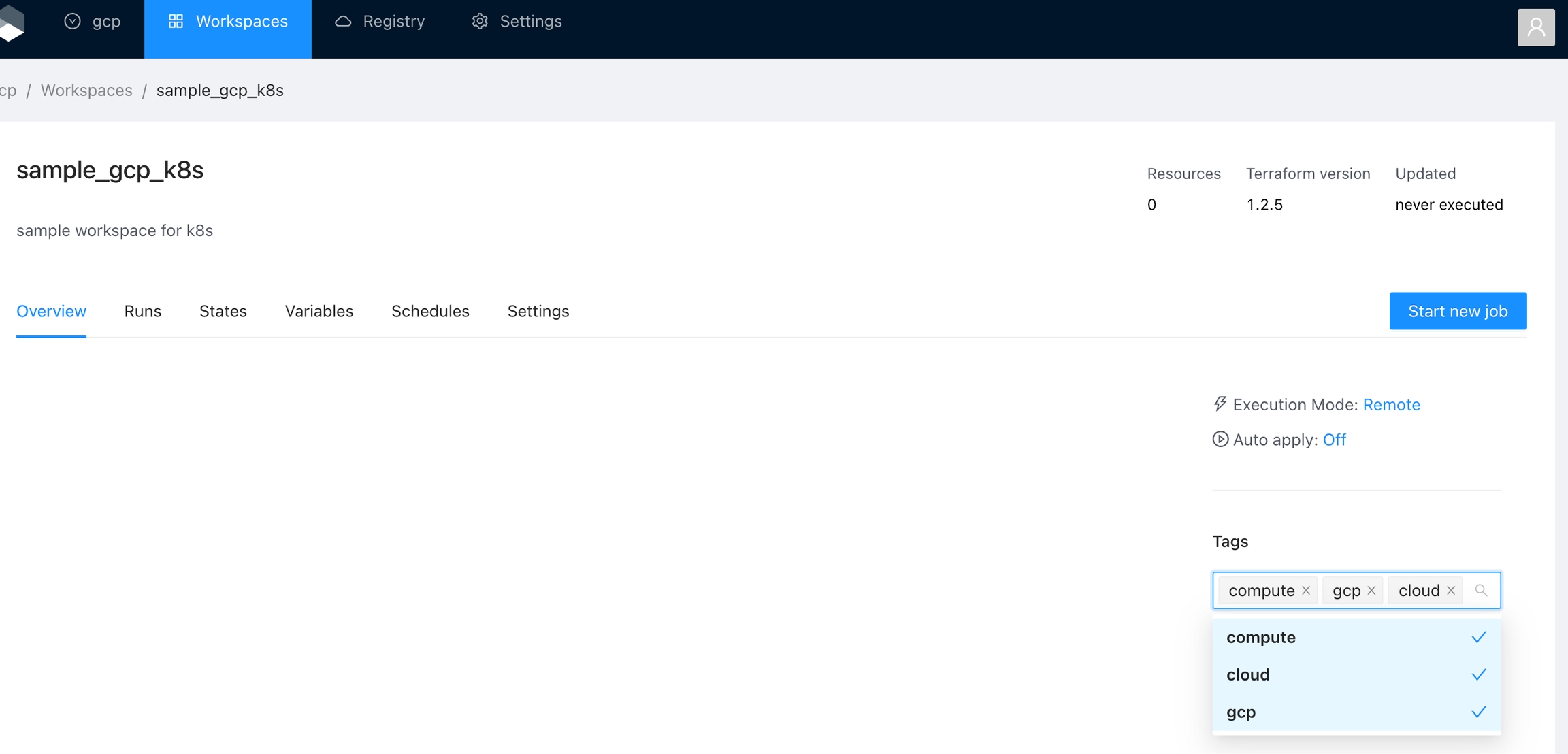

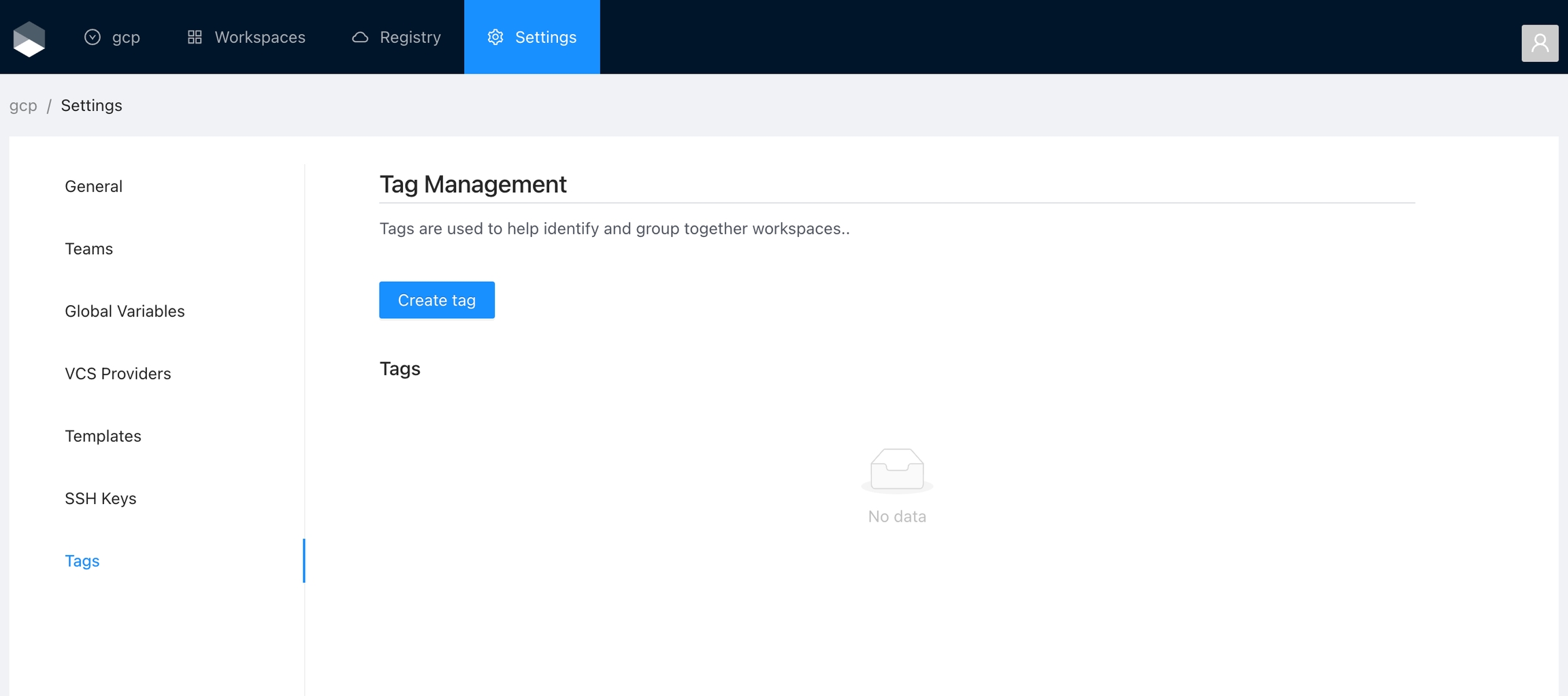

You can use tags to categorize, sort, and even filter workspaces based on the tags.

You can manage tags for the organization in the Settings panel. This section lets you create and remove tags. Alternatively, you can add existing tags or create new ones in the .

Once you are in the desired organization, click the Settings button, then in the left menu select the Tags option and click the Add global variable button

The following actions are added by default in Terrakube. However, not all actions are enabled by default. Please refer to the documentation below if you are interested in activating these actions.

Open Documentation: Adds a button to quickly navigate to the Terraform registry documentation for a specific resource.

connectors:

- type: oidc

id: TerrakubeClient

name: TerrakubeClient

config:

issuer: "[http|https]://<KEYCLOAK_SERVER>/auth/realms/<MY_REALM>"

clientID: "TerrakubeClient"

clientSecret: "<TerrakubeClient's secret>"

redirectURI: "[http|https]://<TERRAKUBE-API URL>/dex/callback"

insecureEnableGroups: true

config:

.....

userNameKey: email

OTEL_JAVAAGENT_ENABLE=true

OTEL_TRACES_EXPORTER=jaeger

OTEL_EXPORTER_JAEGER_ENDPOINT=http://jaeger-all-in-one:14250

OTEL_SERVICE_NAME=TERRAKUBE-API

apiVersion: v1

kind: ServiceAccount

metadata:

name: terrakube-api-service-account

namespace: terrakube

apiVersion: rbac.authorization.k8s.io/v1

kind: Role

metadata:

namespace: terrakube

name: terrakube-api-role

rules:

- apiGroups: ["batch"]

resources: ["jobs"]

verbs: ["get", "list", "watch", "create", "update", "patch", "delete"]

apiVersion: rbac.authorization.k8s.io/v1

kind: RoleBinding

metadata:

name: terrakube-api-role-binding

namespace: terrakube

subjects:

- kind: ServiceAccount

name: terrakube-api-service-account

namespace: terrakube

roleRef:

kind: Role

name: terrakube-api-role

apiGroup: rbac.authorization.k8s.io

## API properties

api:

image: "azbuilder/api-server"

version: "2.22.0"

serviceAccountName: "terrakube-api-service-account"

env:

- name: ExecutorEphemeralNamespace

value: terrakube

- name: ExecutorEphemeralImage

value: azbuilder/executor:2.22.0

- name: ExecutorEphemeralSecret

value: terrakube-executor-secrets

api:

env:

- name: JAVA_TOOL_OPTIONS

value: "-Dorg.terrakube.executor.ephemeral.nodeSelector.diskType=ssd -Dorg.terrakube.executor.ephemeral.nodeSelector.nodeType=spot"

nodeSelector:

disktype: ssd

nodeType: spot

SERVICE_BINDING_ROOT: /mnt/platform/bindings

cnb@terrakube-api-678cb68d5b-ns5gt:/mnt/platform/bindings$ ls

ca-certificates

flow:

- type: "customScripts"

step: 100

inputsEnv:

INPUT_IMPORT1: $IMPORT1

INPUT_IMPORT2: $IMPORT2

commands:

- runtime: "BASH"

priority: 100

before: true

script: |

echo $INPUT_IMPORT1

echo $INPUT_IMPORT2

Open in Azure Portal: Adds a button to quickly navigate to the resource in the Azure Portal.

Restart Azure VM: Adds a button to restart the Azure VM directly from the workspace overview.

Azure Monitor: Adds a panel to visualize the metrics for the resource.

Open AI: Adds a button to ask questions or get recommendations using OpenAI based on the workspace data.

Templates can become big over time while adding steps to execute additional business logic.

Example before template import:

flow:

- type: "terraformPlan"

step: 100

commands:

- runtime: "GROOVY"

priority: 100

before: true

script: |

import TerraTag

new TerraTag().loadTool(

"$workingDirectory",

"$bashToolsDirectory",

"0.1.30")

"Terratag download completed"

- runtime: "BASH"

priority: 200

before: true

script: |

cd $workingDirectory

terratag -tags="{\"environment_id\": \"development\"}"

- type: "terraformApply"

step: 300

Terrakube supports having the template logic in an external git repository like the following:

flow:

- type: "terraformPlan"

step: 100

importComands:

repository: "https://github.com/AzBuilder/terrakube-extensions"

folder: "templates/terratag"

branch: "import-template"

- type: "terraformApply"

step: 200

Using import templates becomes simple, and we can reuse the logic across several Terrakube organizations

The sample template can be found here.

targetUrl: Contains the URL that you want to invoke.

proxyHeaders: Contains the headers that you want to send to the target URL.

workspaceId: The workspace ID that will be used for some validations from the proxy side

If you need to access sensitive keys or passwords from your API call, you can inject variables using the template notation ${{var.variable_name}}, where variable_name represents a Workspace variable or a Global variable.
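The substitution performed on the proxy side can be pictured with a small sketch. This is not Terrakube's implementation: `injectVariables` is a hypothetical function that only illustrates how a `${{var.variable_name}}` placeholder maps to a variable value, and it assumes unknown placeholders are left untouched.

```javascript
// Hypothetical sketch of placeholder substitution; the real proxy logic may differ.
function injectVariables(template, variables) {
  // Matches ${{var.NAME}} and captures NAME.
  return template.replace(/\$\{\{var\.([A-Za-z0-9_]+)\}\}/g, (match, name) =>
    // Assumption: placeholders without a matching variable are kept as written.
    Object.prototype.hasOwnProperty.call(variables, name) ? variables[name] : match
  );
}

const header = injectVariables("Bearer ${{var.OPENAI_API_KEY}}", {
  OPENAI_API_KEY: "example-key",
});
console.log(header);
```

Because the replacement happens on the server, the browser only ever sends the placeholder text, never the secret itself.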

Example Usage

In this example:

${{var.OPENAI_API_KEY}} is a placeholder for a sensitive key that will be injected by the proxy.

proxyBody contains the data to be sent in the request body.

proxyHeaders contains the headers to be sent to the target URL.

targetUrl specifies the API endpoint to be invoked.

workspaceId is used for validations on the proxy side.

By using the Action Proxy, you can easily interact with external APIs while using Terrakube's capabilities to manage and inject necessary variables securely.

Example GET request through the proxy:

    ({ context }) => {
      const fetchData = async () => {
        try {
          const response = await axiosInstance.get(`${context.apiUrl}/proxy/v1`, {
            params: {
              targetUrl: 'https://jsonplaceholder.typicode.com/posts/1/comments',
              proxyheaders: JSON.stringify({
                'Content-Type': 'application/json',
              }),
              workspaceId: context.workspace.id
            }
          });
          console.log(response.data);
        } catch (error) {
          console.error('Error fetching data:', error);
        }
      };
      // ...
    }

Example POST request that injects a sensitive variable into the headers:

    const response = await axiosInstance.post(`${context.apiUrl}/proxy/v1`, {
      targetUrl: 'https://api.openai.com/v1/chat/completions',
      proxyHeaders: JSON.stringify({
        'Content-Type': 'application/json',
        'Authorization': 'Bearer ${{var.OPENAI_API_KEY}}'
      }),
      workspaceId: context.workspace.id,
      proxyBody: JSON.stringify({
        model: 'gpt-4',
        messages: updatedMessages,
      })
    }, {
      headers: {
        'Content-Type': 'application/json',
      }
    });
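To make the `${{var.variable_name}}` notation more concrete, the following is a minimal sketch of the kind of substitution described above. The function name `resolveTemplate` and the sample key value are hypothetical. This is only a model of the documented behavior, not Terrakube's actual implementation, which runs on the proxy side.

```javascript
// Hypothetical illustration of ${{var.NAME}} substitution. This is not
// Terrakube's code, only a model of the behavior the documentation describes.
function resolveTemplate(text, variables) {
  // Match ${{var.NAME}} where NAME is made of letters, digits, or underscores.
  return text.replace(/\$\{\{var\.([A-Za-z0-9_]+)\}\}/g, (match, name) => {
    // Leave unknown placeholders untouched so missing variables are easy to spot.
    return Object.prototype.hasOwnProperty.call(variables, name)
      ? String(variables[name])
      : match;
  });
}

// Single quotes keep the placeholder literal (no JavaScript interpolation).
const headers = JSON.stringify({
  Authorization: 'Bearer ${{var.OPENAI_API_KEY}}',
});

// 'sk-example' is a made-up value standing in for a workspace or global variable.
const resolved = resolveTemplate(headers, { OPENAI_API_KEY: 'sk-example' });
console.log(resolved);
```

Because the placeholder is sent as plain text and resolved on the server, the real value never needs to be present in the action code running in the browser.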

azure: AZURE_DEVELOPERS

admin: TERRAKUBE_ADMIN, TERRAKUBE_DEVELOPERS

aws: AWS_DEVELOPERS

gcp: GCP_DEVELOPERS

TERRAKUBE_ADMIN

TERRAKUBE_DEVELOPERS

AZURE_DEVELOPERS

AWS_DEVELOPERS

GCP_DEVELOPERS

ARM_SUBSCRIPTION_ID: The Azure Subscription ID where the VM is located.

When using SSH keys, make sure to use the SSH form of your repository URL. For GitHub it looks like git@github.com:AzBuilder/terrakube-docker-compose.git

The selected SSH key is used to clone the workspace at runtime when the job runs inside Terrakube, and it is also injected into the job execution so that it can be used from your Terraform code.

For example, if you are using a module through a Git connection like the following:

When the job runs, Terraform internally uses the selected SSH key to clone the necessary module dependencies, as in the image below:

When using a VCS connection, you can select in the workspace settings which SSH key should be injected when downloading modules:

This allows modules in the git format to be used inside a workspace with a VCS connection, like the following:

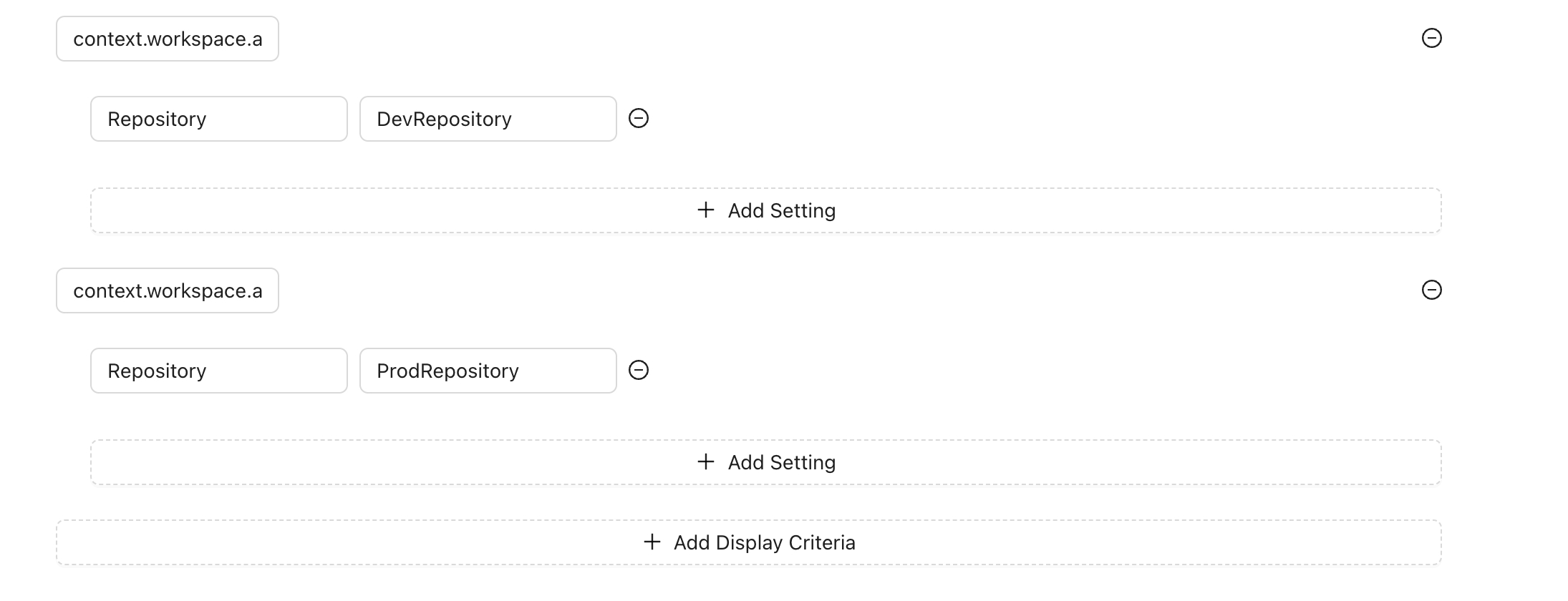

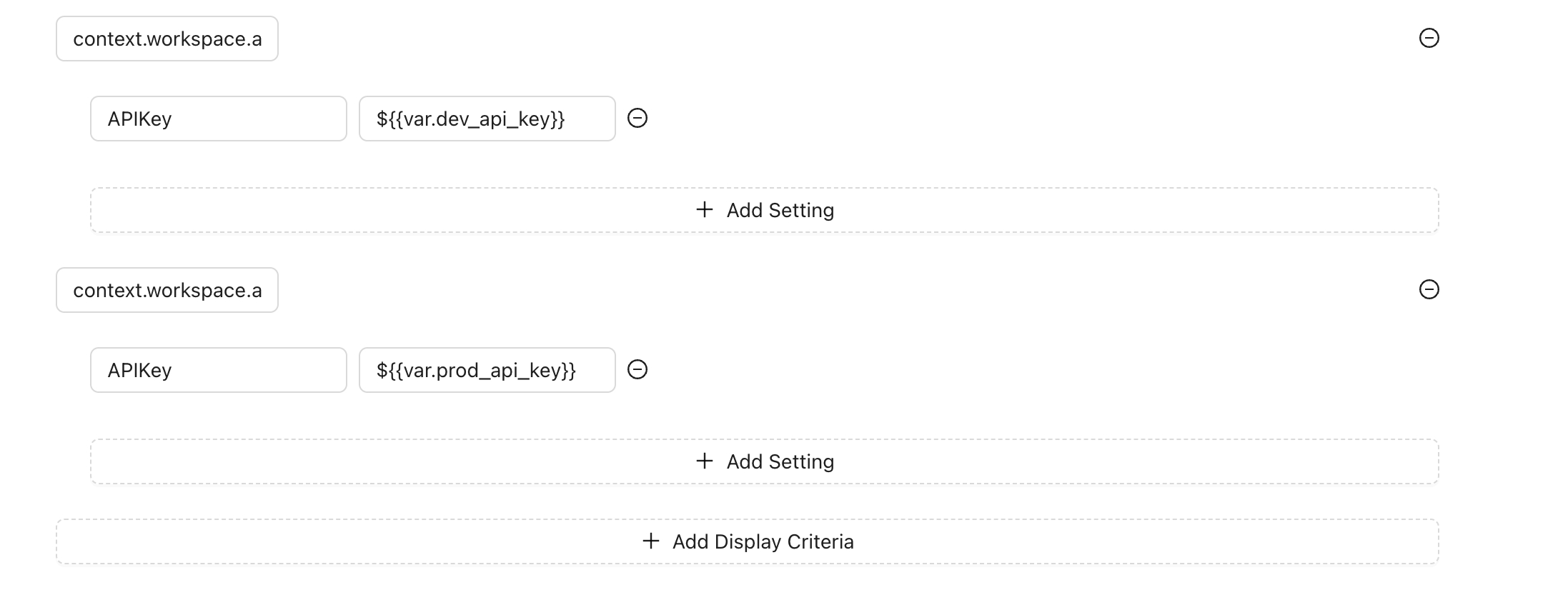

You can set specific settings for each display criteria, which is useful when the action provides configurable options. For example, suppose you have an action that queries GitHub issues using the GitHub API and you want to display the issues using GraphQL. Your issues are stored in two different repositories: one for production and one for non-production.

In this case, you can create settings based on the display criteria to select the repository name. This approach allows your action code to be reusable. You only need to change the settings based on the criteria, rather than modifying the action code itself.

For instance:

Production Environment: For workspaces starting with prod, use the repository name for production issues.

Non-Production Environment: For all other workspaces, use the repository name for non-production issues.

By setting these configurations, you ensure that your action dynamically adapts to different environments or conditions, enhancing reusability and maintainability.

You might be tempted to store secrets inside settings; however, display criteria settings don't provide a secure way to store sensitive data. For cases where you need to use different credentials for an action based on your workspace, organization or any other condition, you should use template notation instead. This approach allows you to use Workspace Variables or Global Variables to store sensitive settings securely.

For example, instead of directly storing an API key in the settings, you can reference a variable:

For the development environment: ${{var.dev_api_key}}

For the production environment: ${{var.prod_api_key}}

For the previous example, you will need to create the variables at workspace level, or use global variables, with the names dev_api_key and prod_api_key.

For more details about using settings in your action and template variables check Action Proxy.

You can define multiple display criteria for your actions. In this case, the first criteria to be met will be applied, and the corresponding settings will be provided via the context.
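The first-match rule can be pictured with a short sketch. The `selectSettings` function, the `matches` predicates, and the repository names below are hypothetical and only model the behavior described above. They are not Terrakube's internal API.

```javascript
// Hypothetical model of display criteria evaluation: criteria are checked in
// order, and the settings of the first one that matches are returned.
function selectSettings(criteria, context) {
  for (const criterion of criteria) {
    if (criterion.matches(context)) {
      return criterion.settings;
    }
  }
  return null; // nothing matched
}

const criteria = [
  {
    // Production workspaces: names starting with "prod".
    matches: (ctx) => ctx.workspace.attributes.name.startsWith('prod'),
    settings: { Repository: 'issues-production' },
  },
  {
    // Catch-all for every other workspace.
    matches: () => true,
    settings: { Repository: 'issues-non-production' },
  },
];

const context = { workspace: { attributes: { name: 'prod-network' } } };
console.log(selectSettings(criteria, context));
```

Order matters in this model: if the catch-all entry were listed first, the production entry would never be reached.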

Contains the workspace information in the Terrakube API format. See the docs for more details on the available properties.

context.workspace.id: Id of the workspace

context.workspace.attributes.name: Name of the workspace

context.workspace.attributes.terraformVersion: Terraform version

workspace/action

workspace/resourcedrawer/action

workspace/resourcedrawer/tab

Contains the settings that you configured in the display criteria.

context.settings.Repository: the value of repository that you set for the setting. Example:

workspace/action

workspace/resourcedrawer/action

workspace/resourcedrawer/tab

For workspace/action, this property contains the full Terraform or OpenTofu state. For workspace/resourcedrawer/action and workspace/resourcedrawer/tab, it contains only the state section of the selected resource.

workspace/action

workspace/resourcedrawer/action

workspace/resourcedrawer/tab

Contains the Terrakube API URL. Useful if you want to use the Action Proxy or call the Terrakube API.

workspace/action

workspace/resourcedrawer/action

workspace/resourcedrawer/tab

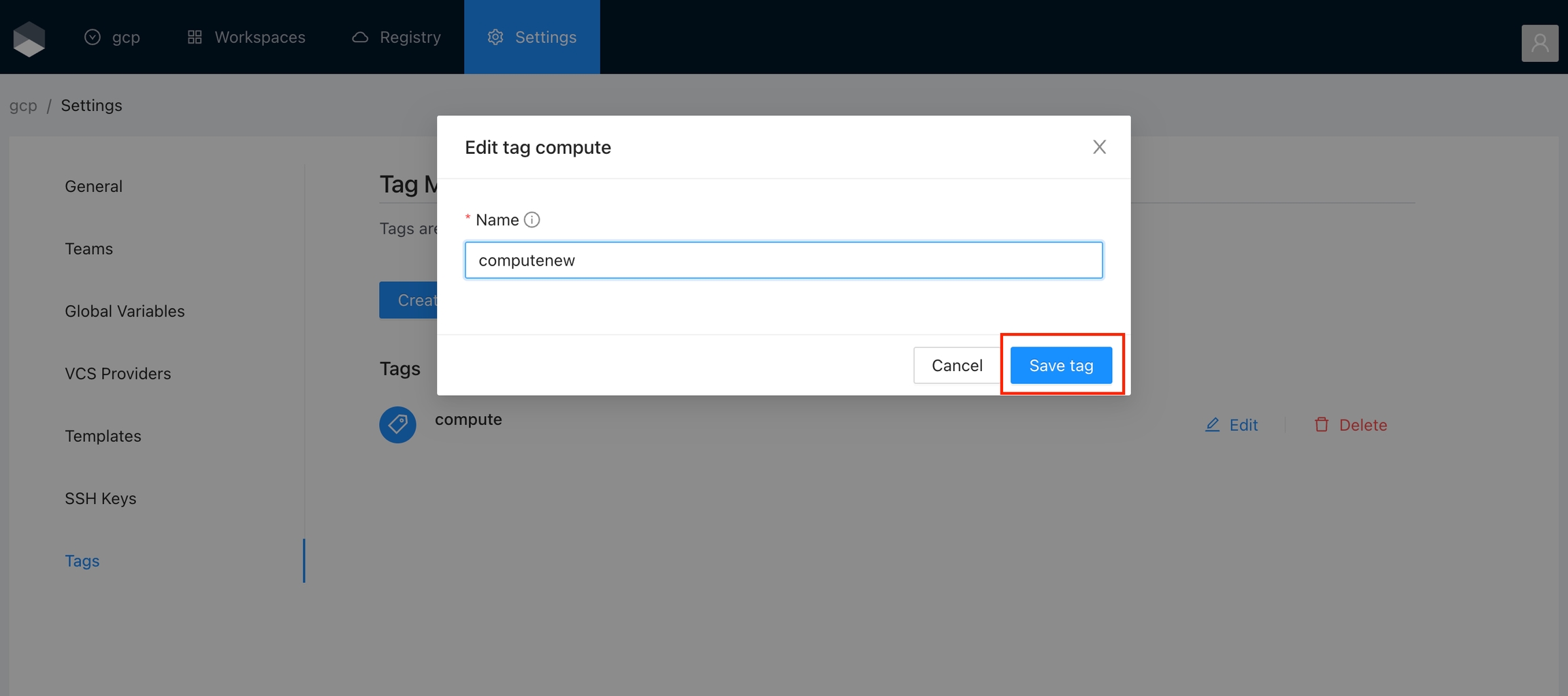

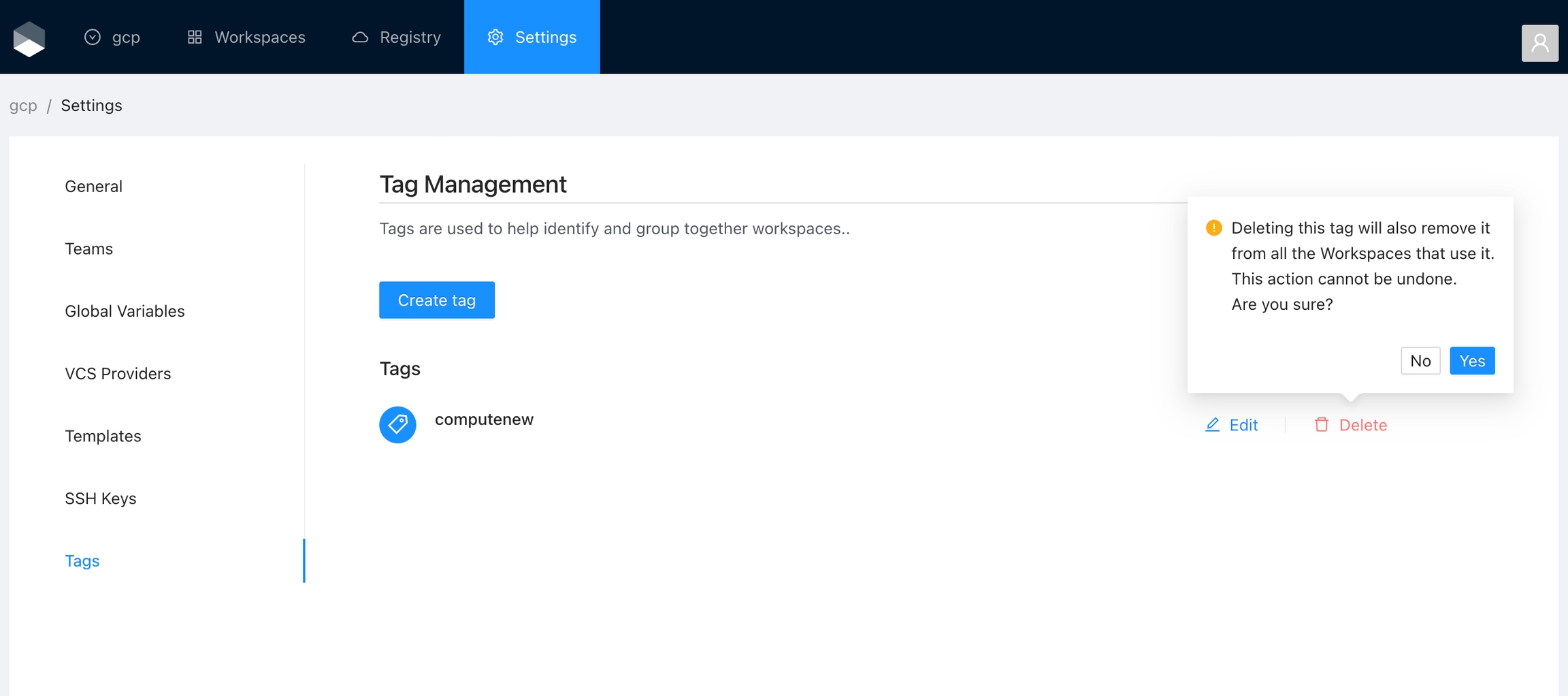

Name

Unique tag name

Finally, click the Save tag button and the tag will be created.

You will see the new tag in the list, and you can now use it in the workspaces within the organization.

Click the Edit button next to the tag you want to edit.

Change the fields you need and click the Save tag button.

Click the Delete button next to the tag you want to delete, and then click the Yes button to confirm the deletion. Note that the deletion is irreversible and the tag will be removed from all the workspaces using it.

Sensitive

Sensitive variables are never shown in the UI or API. They may appear in Terraform logs if your configuration is designed to output them.

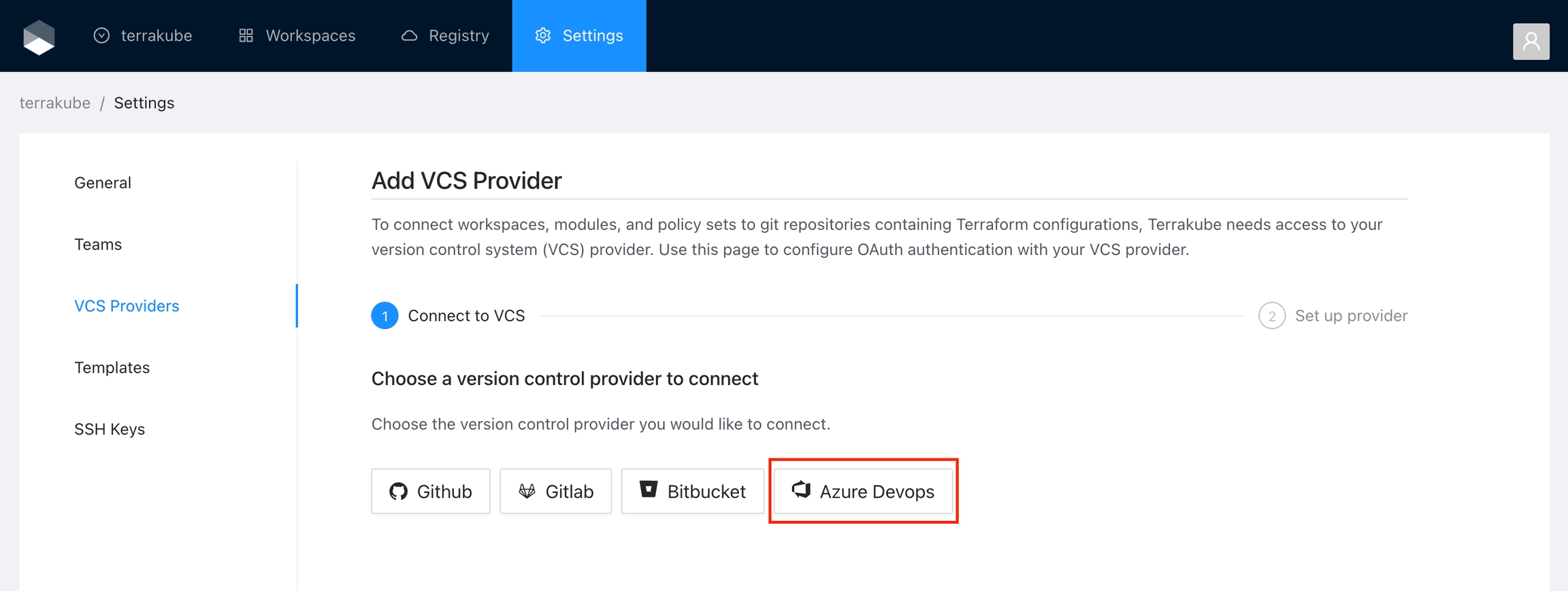

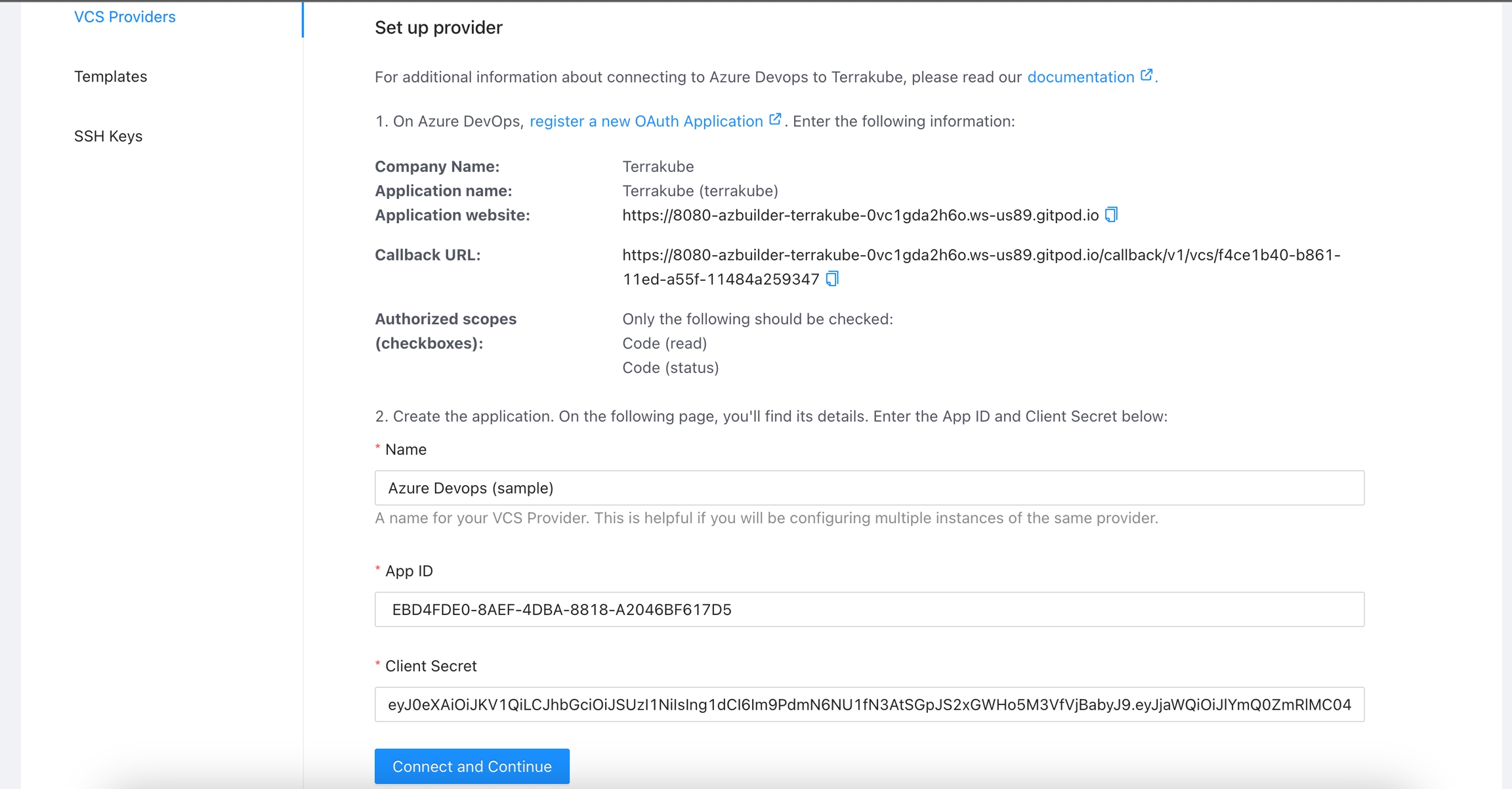

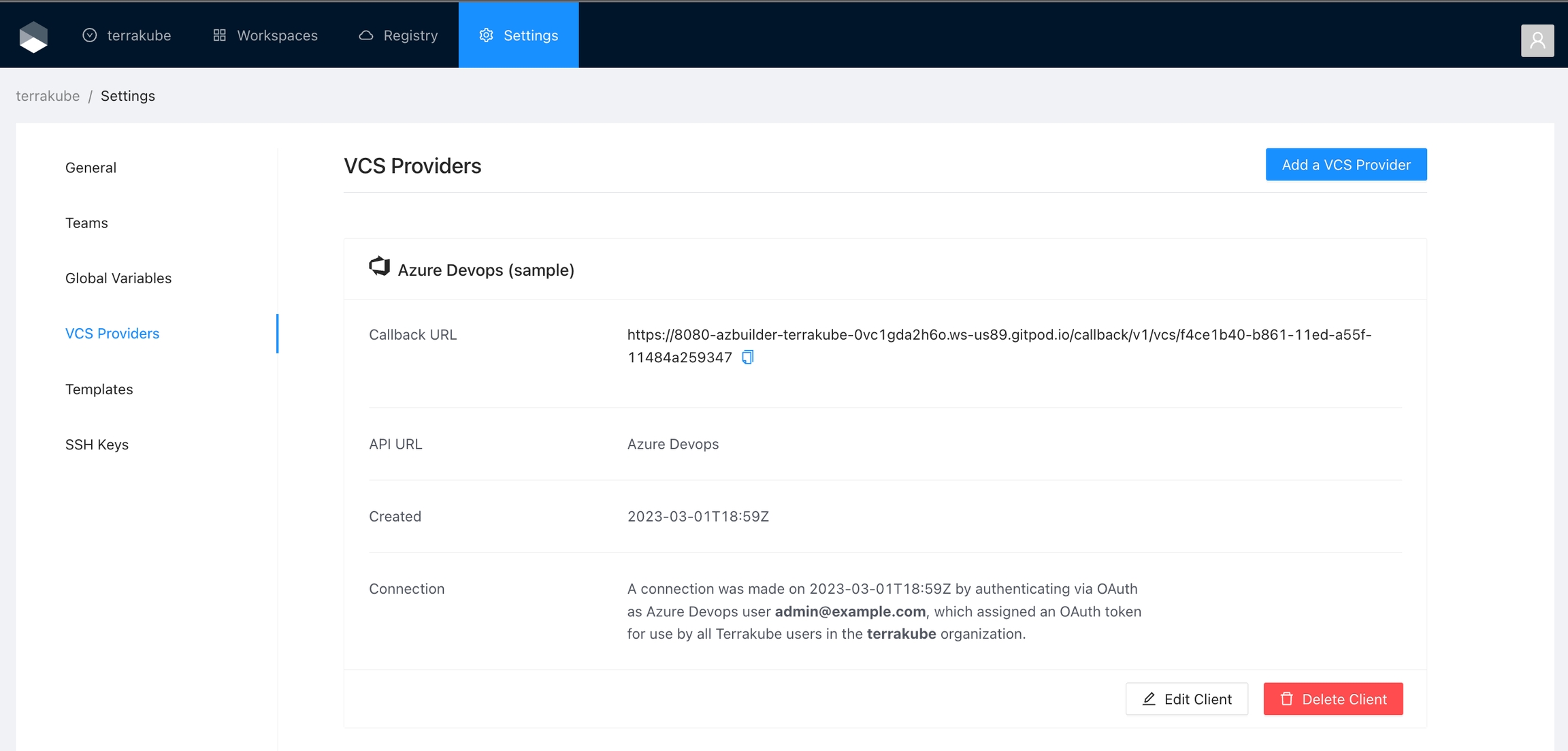

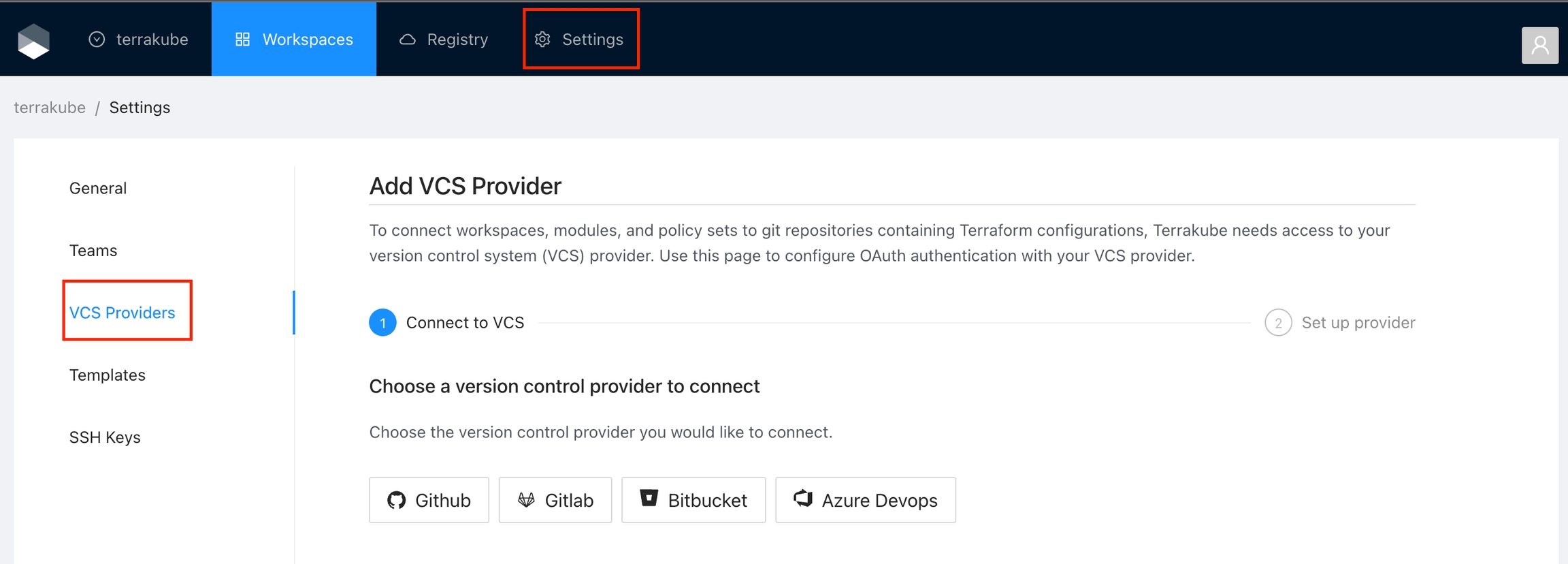

To use repositories from Azure DevOps with Terrakube workspaces and modules, follow these steps:

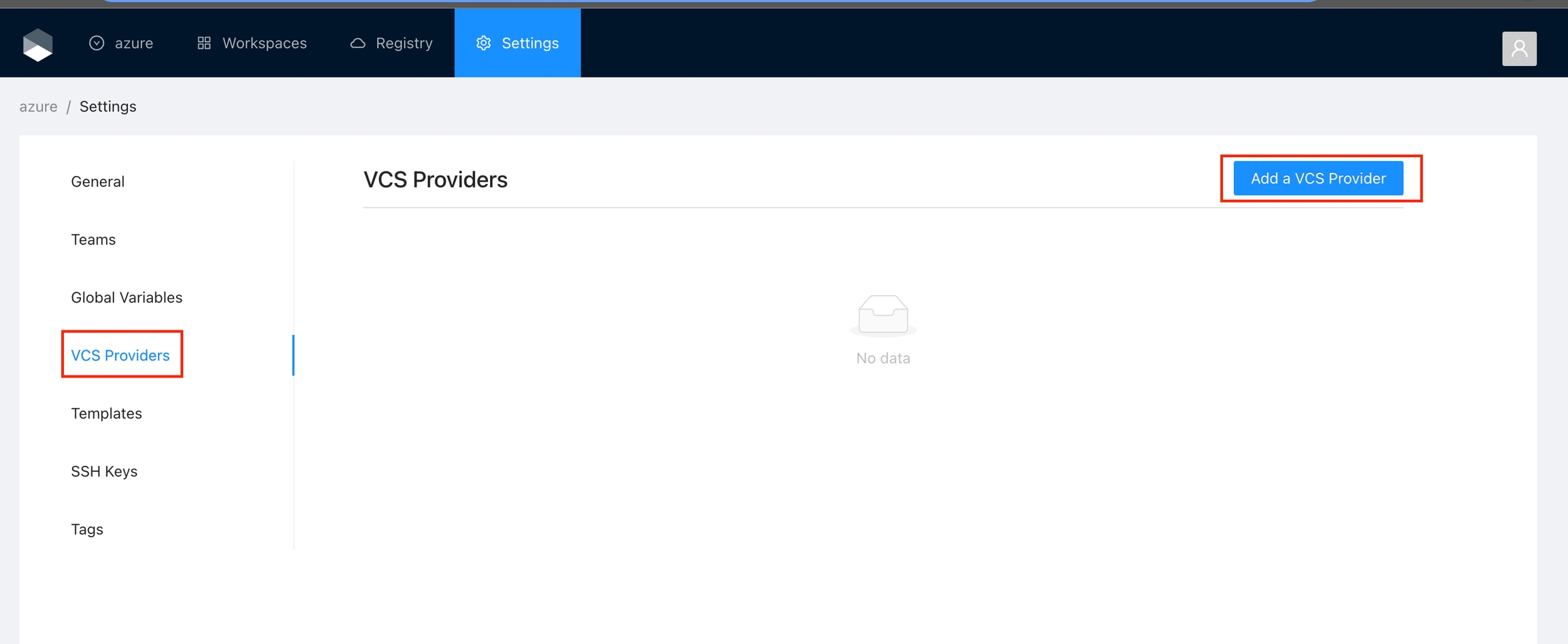

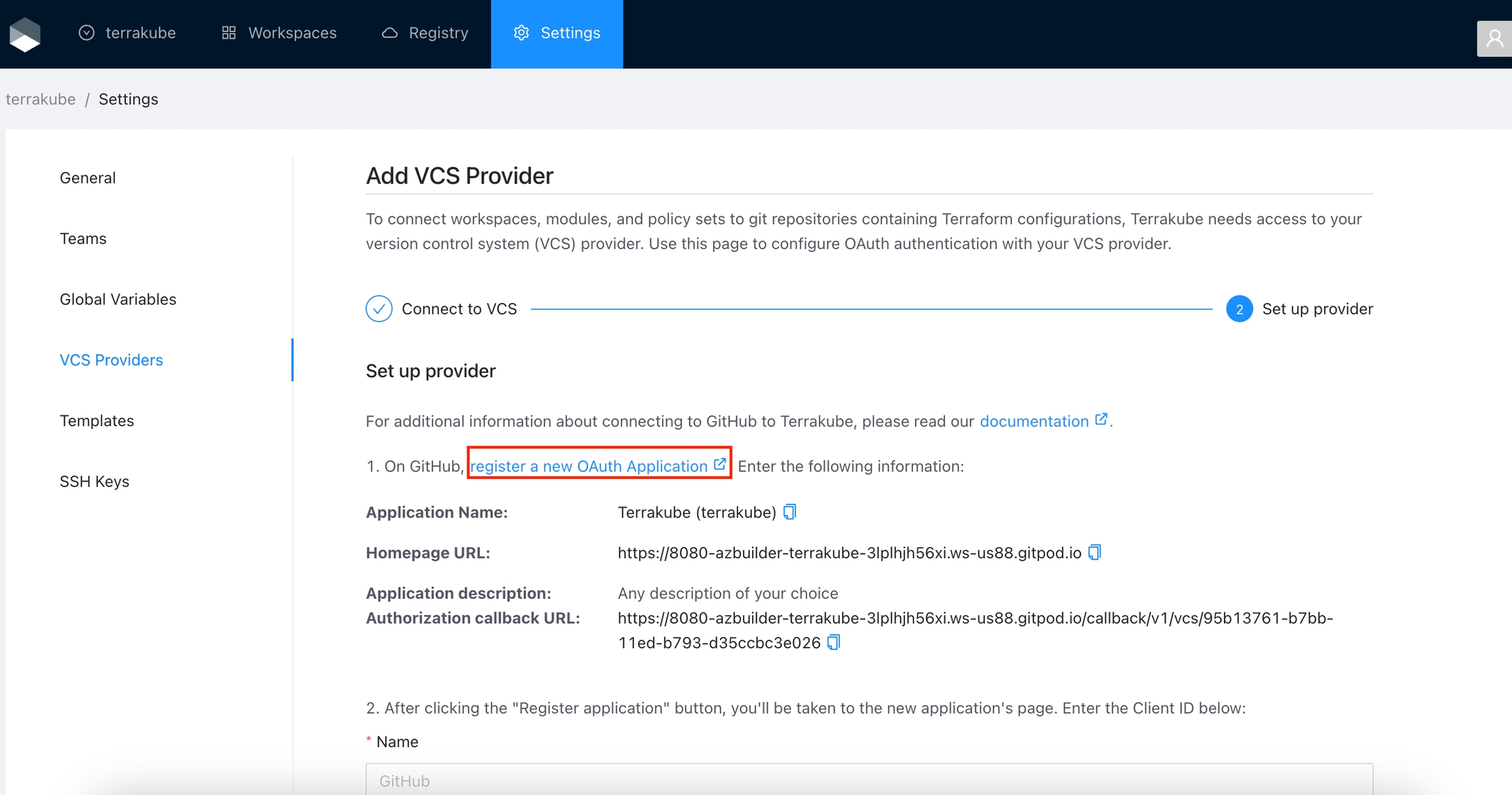

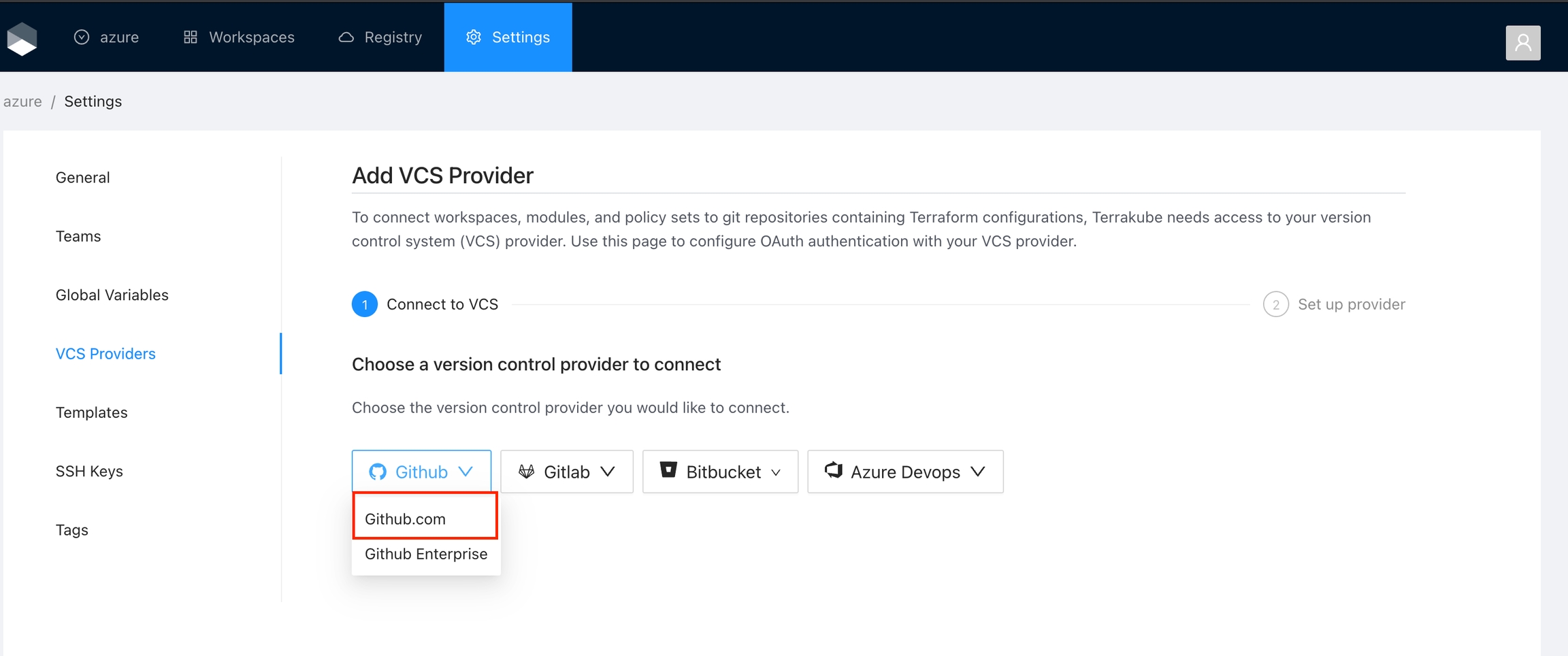

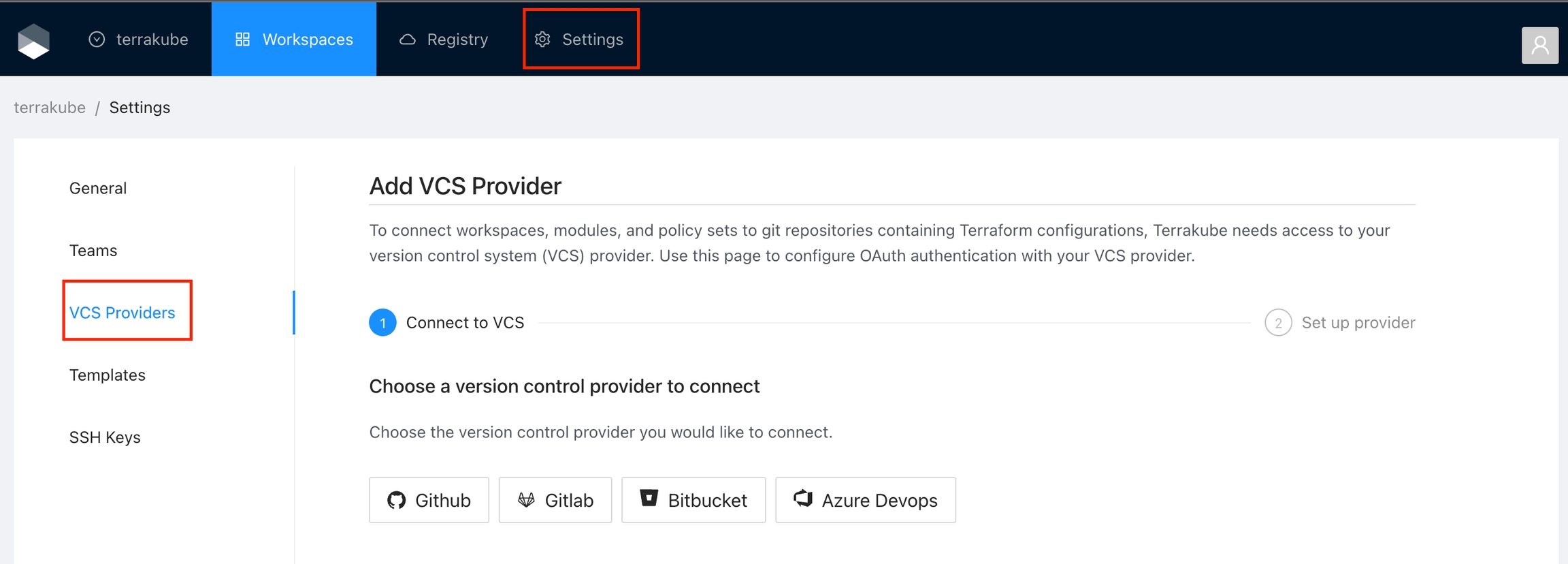

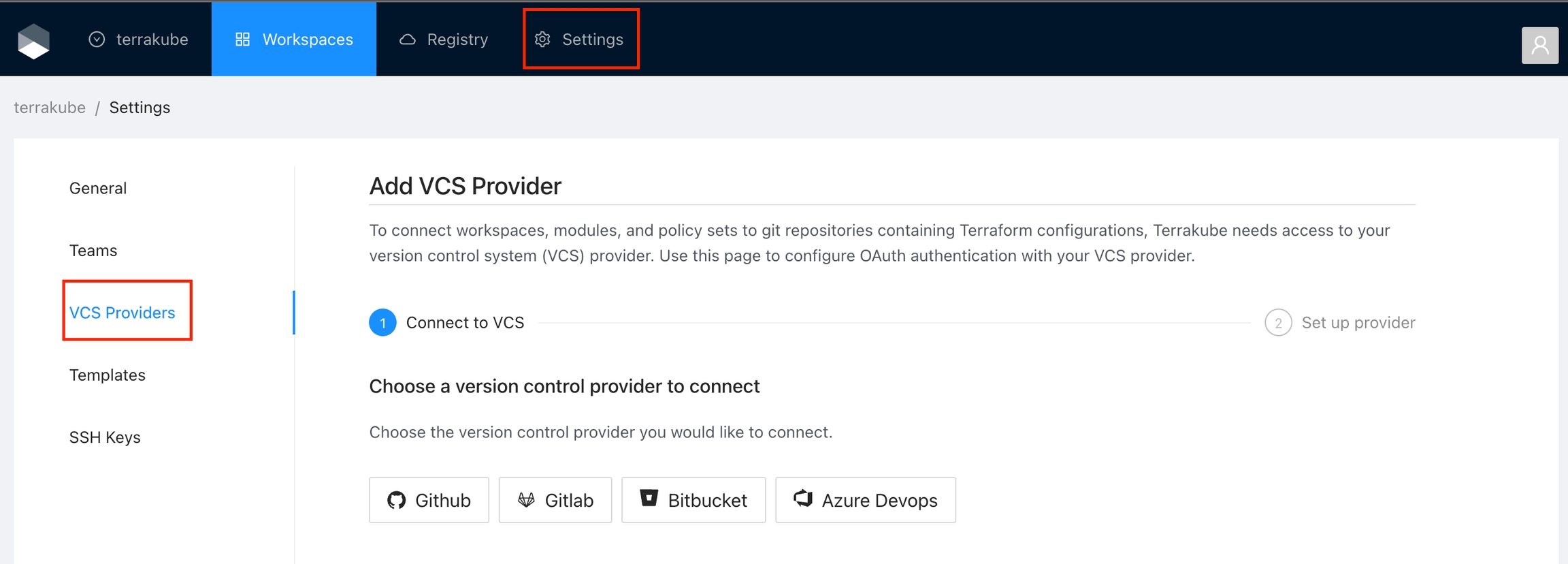

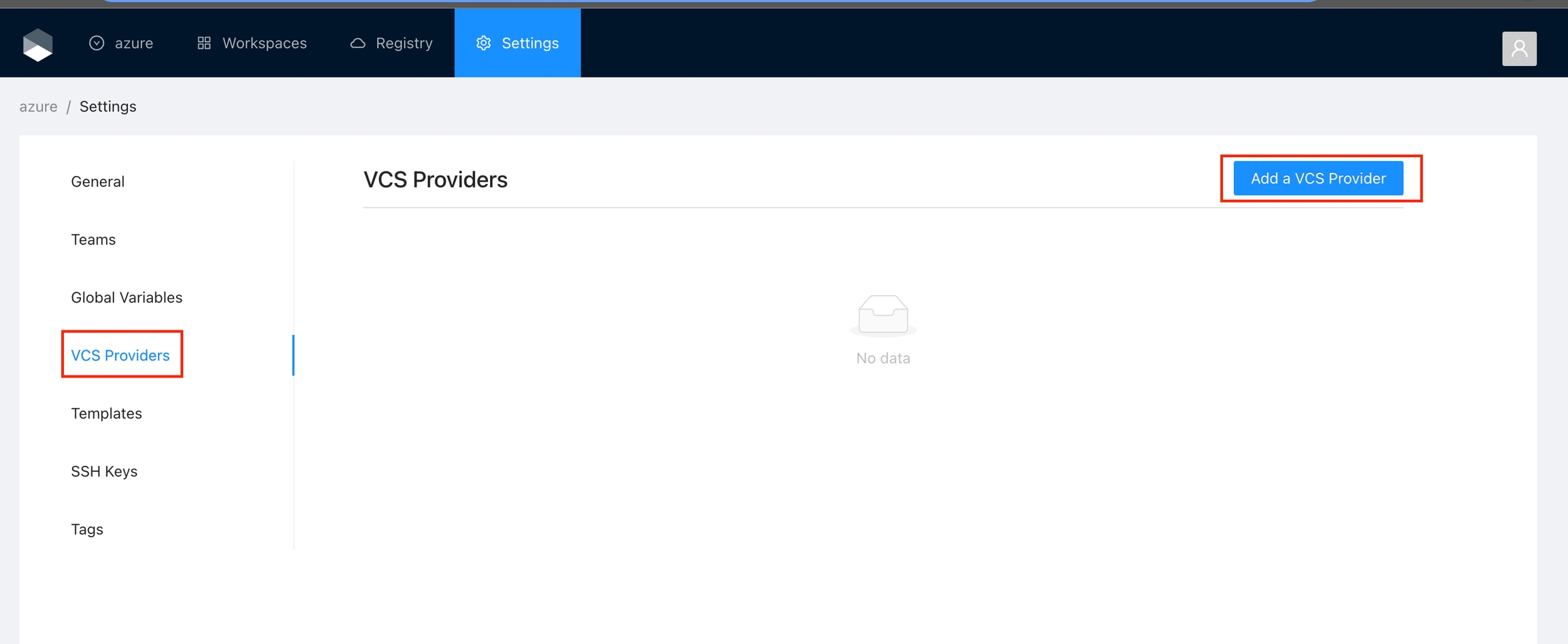

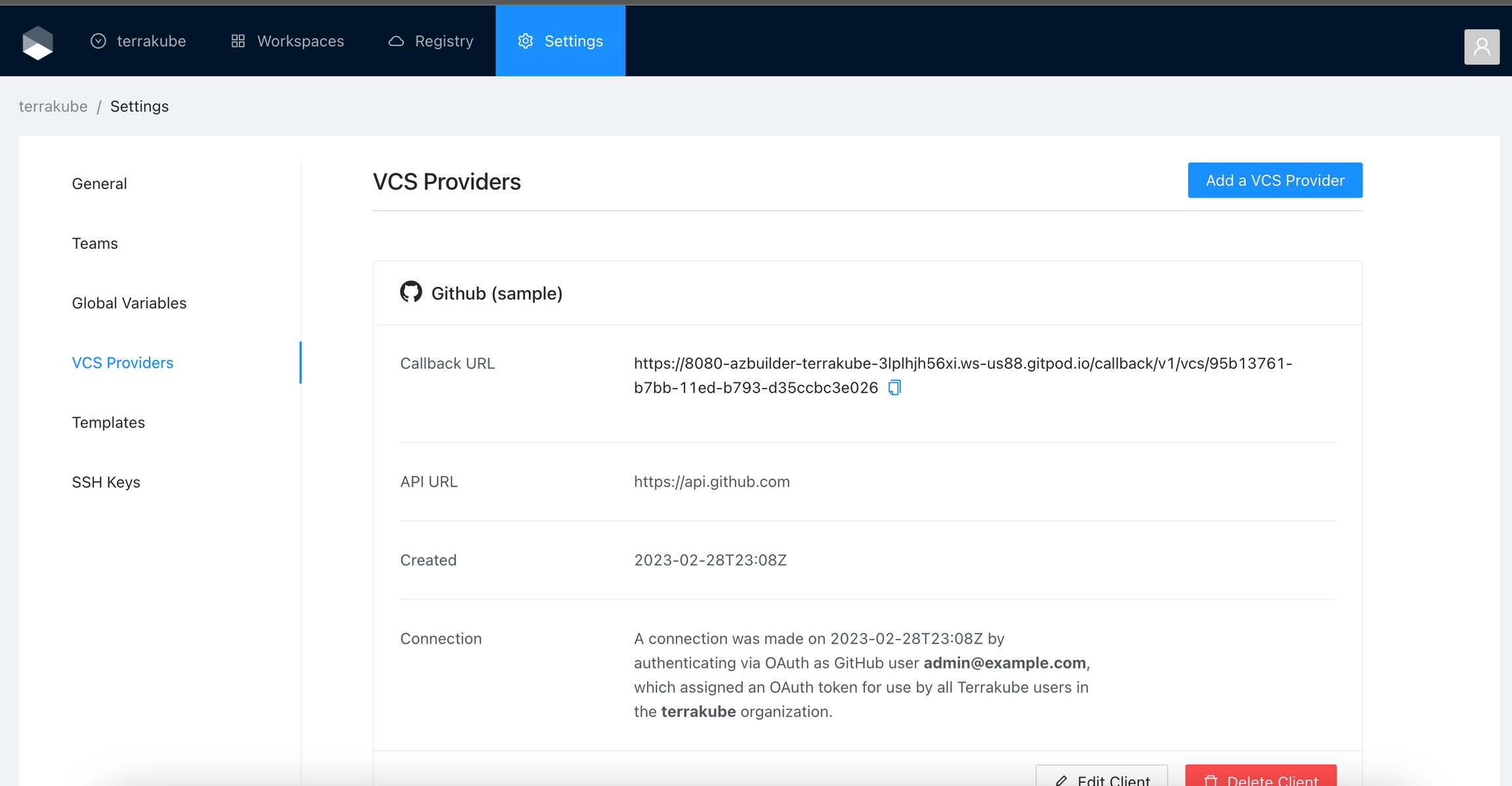

Navigate to the desired organization and click the Settings button, then on the left menu select VCS Providers

Click the Azure Devops button

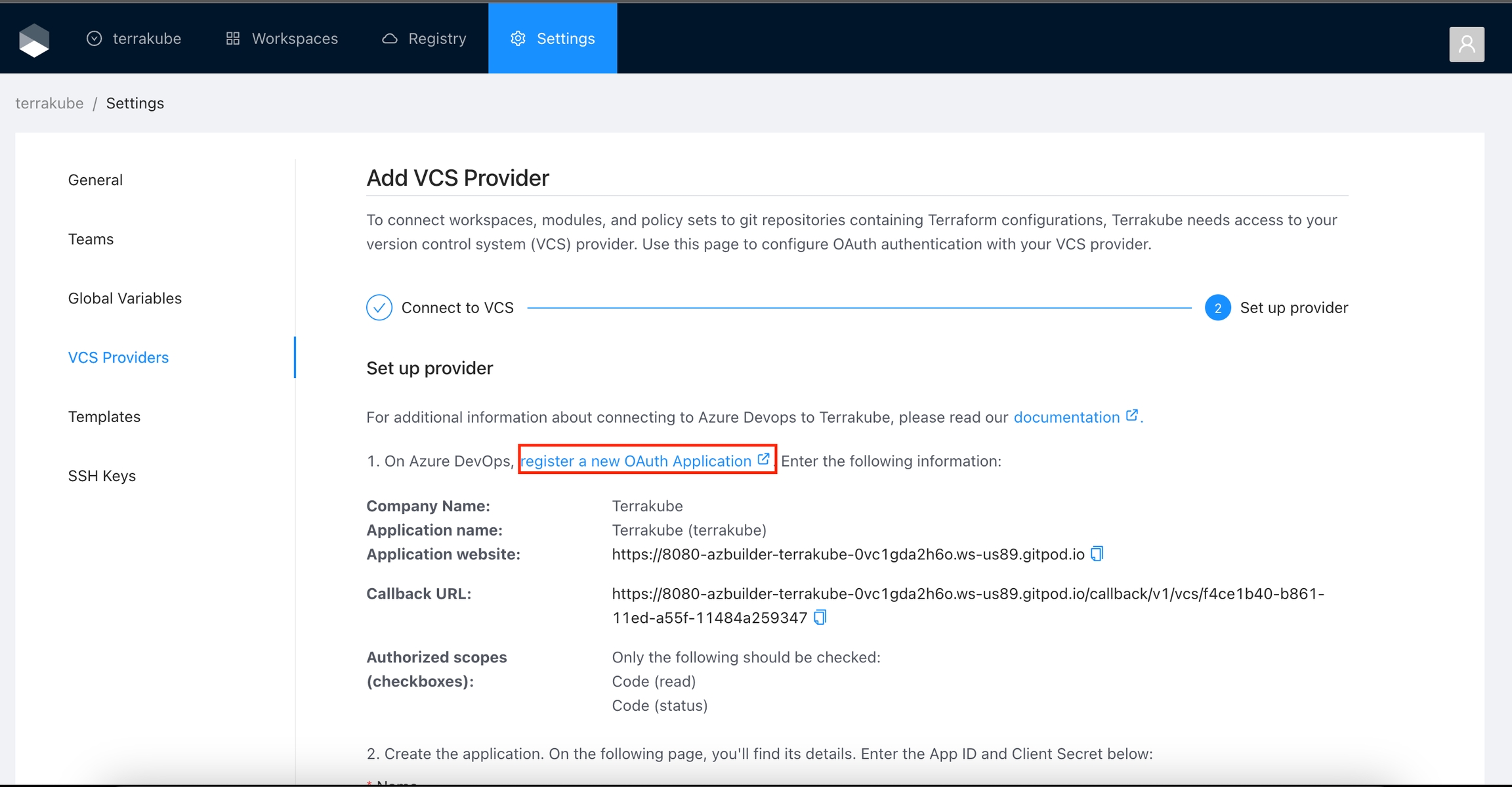

In the next screen, click the link to register a new application in Azure DevOps

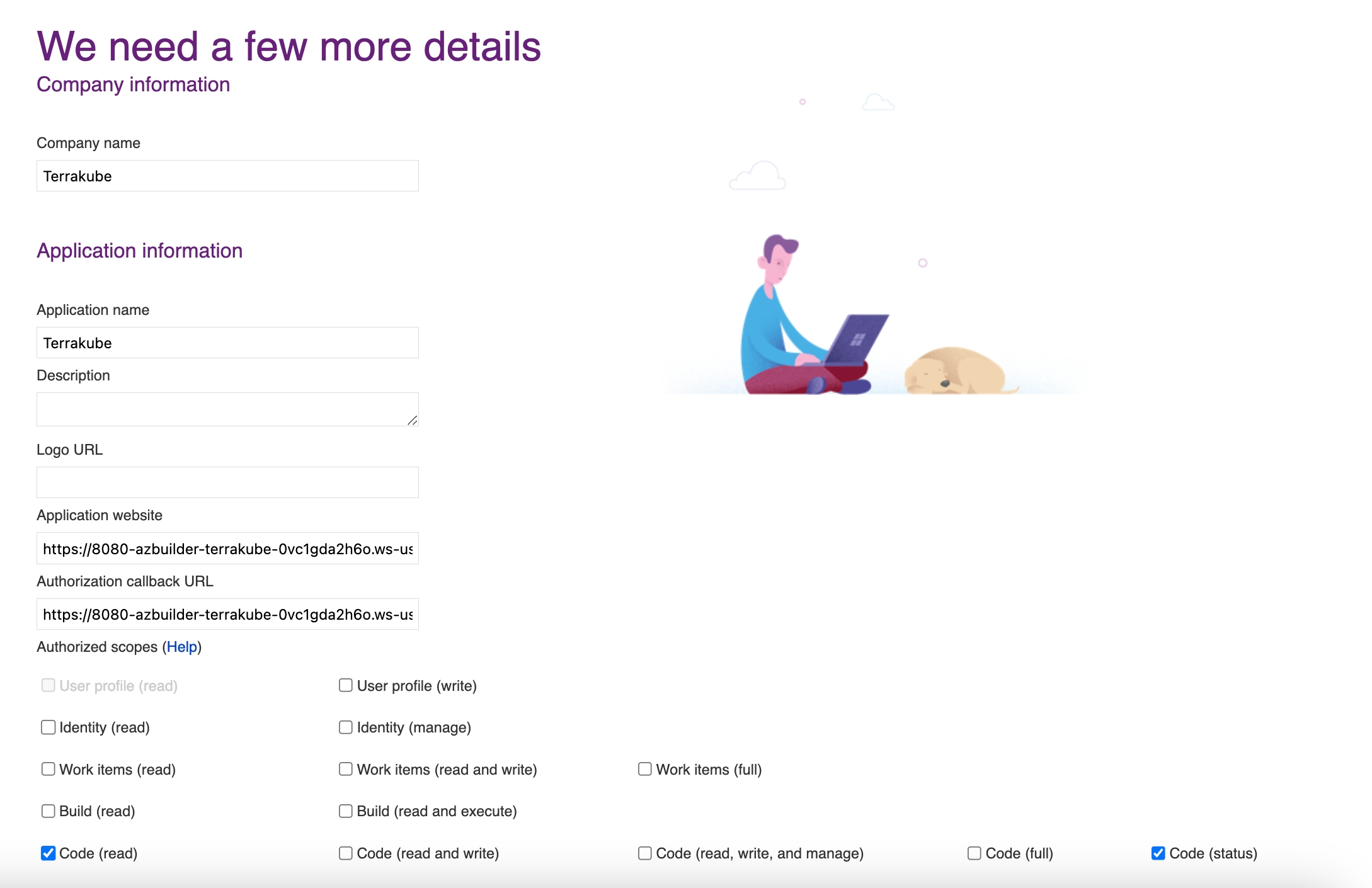

In the Azure Devops page, complete the required fields and click Create application

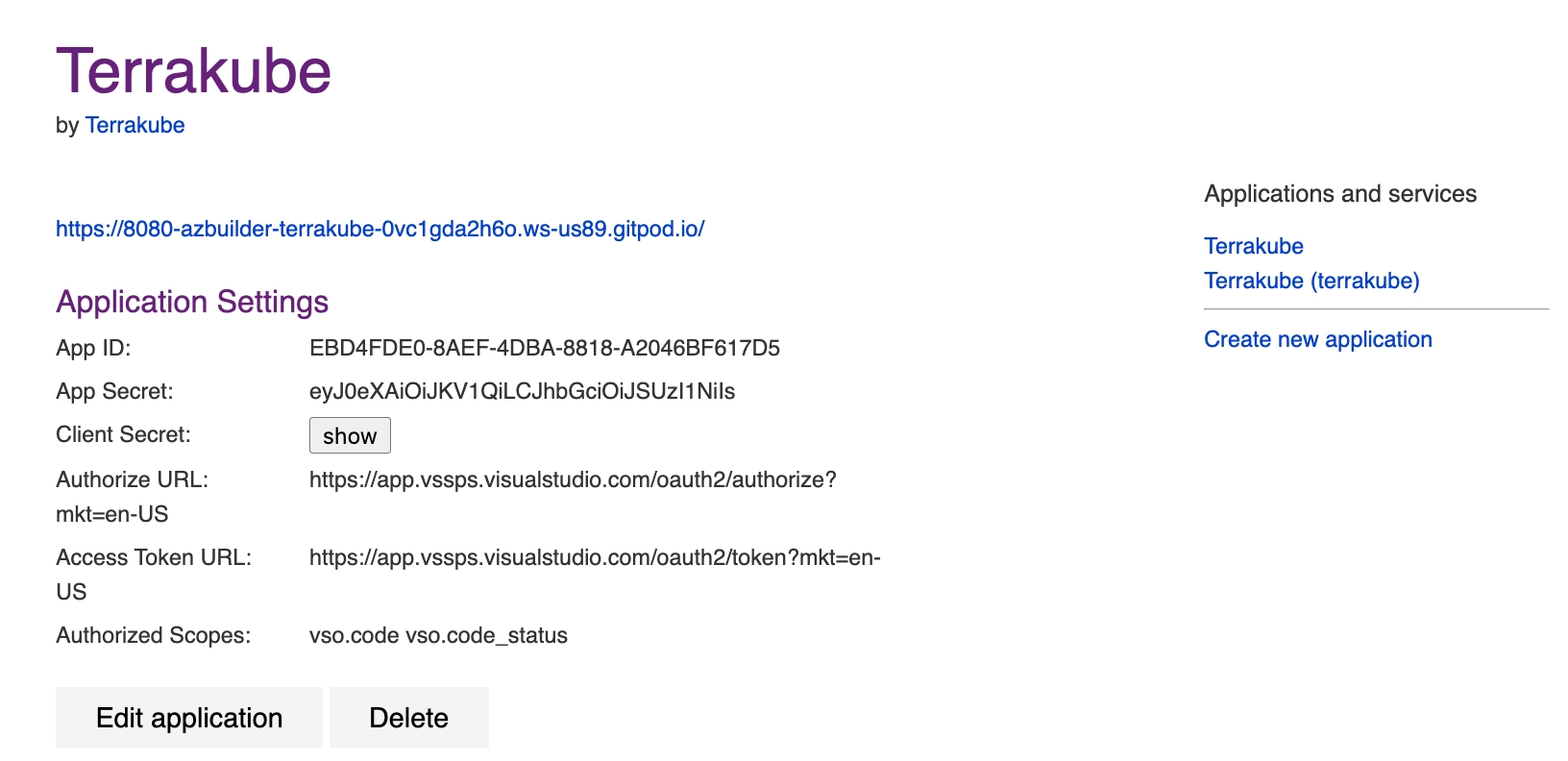

In the next screen, copy the App ID and Client Secret

Go back to Terrakube to enter the information you copied from the previous step. Then, click the Connect and Continue button.

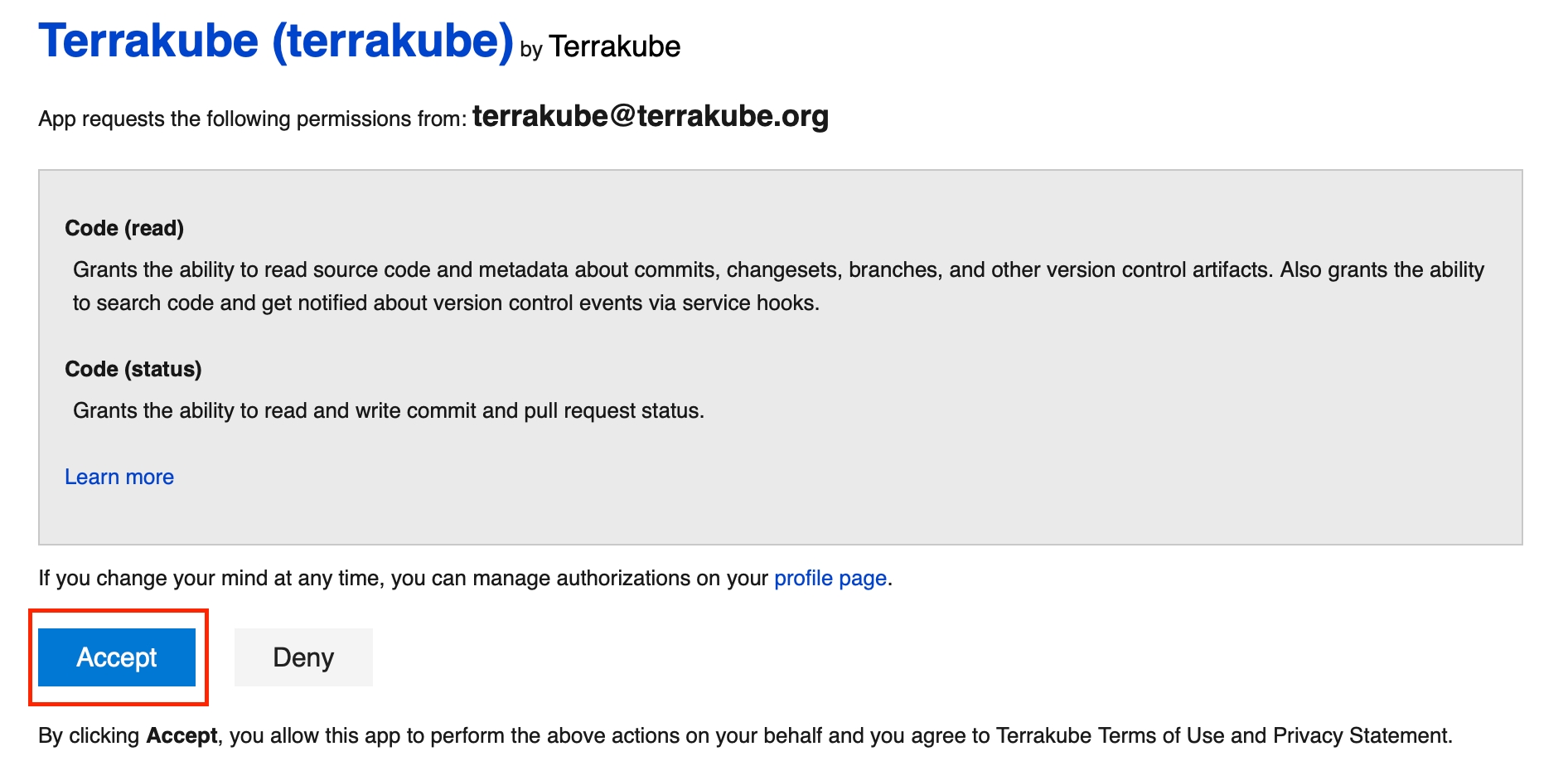

You will see an Azure DevOps window. Click the Accept button to complete the connection.

Finally, if the connection was established successfully, you will be redirected to the VCS provider’s page in your organization. You should see the connection status with the date and the user that created the connection.

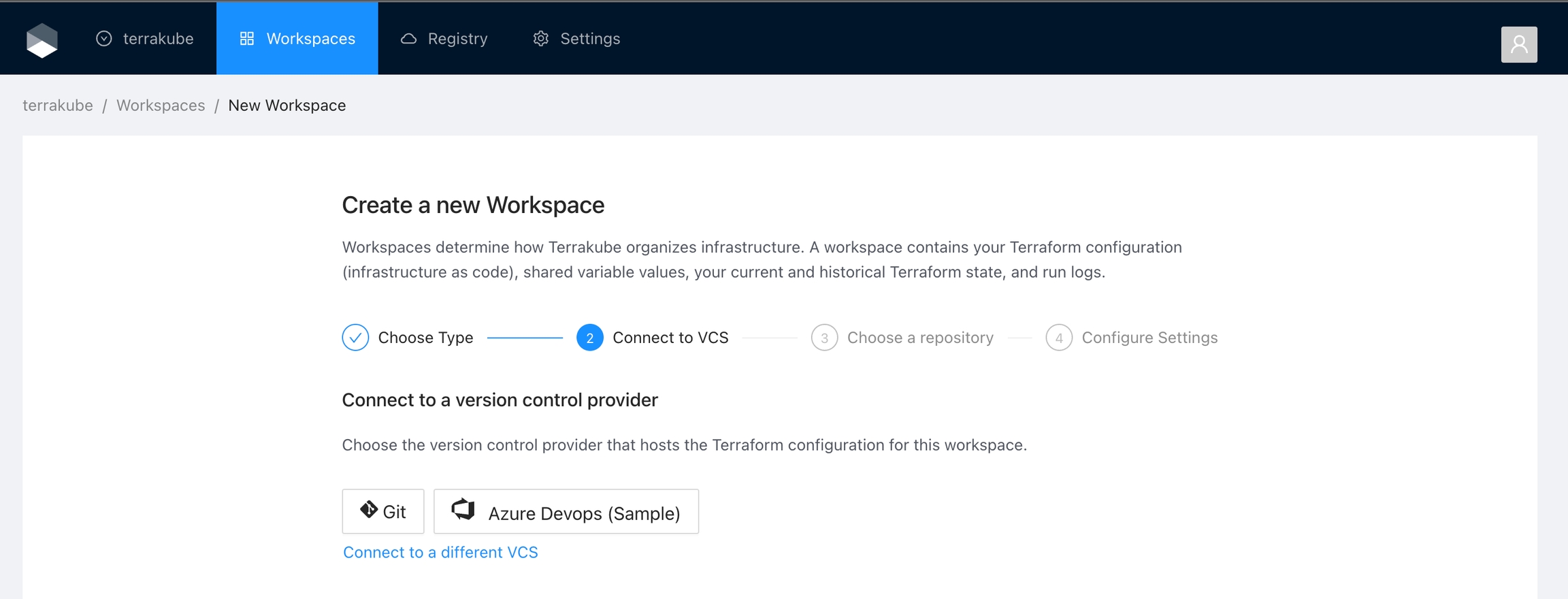

And now, you will be able to use the connection in your workspaces and modules:

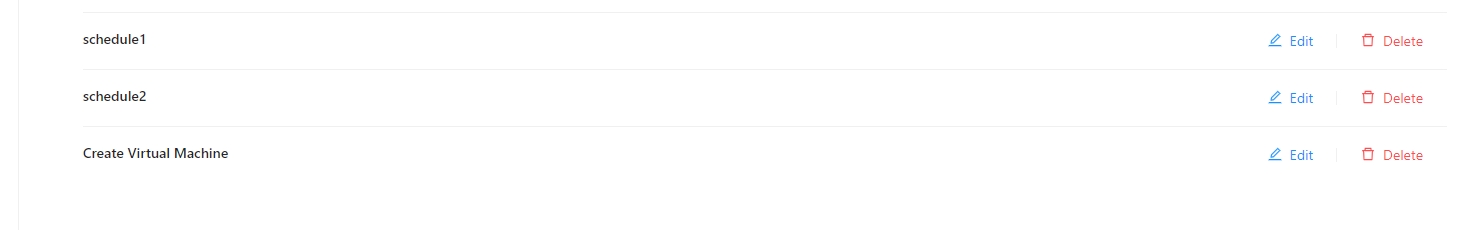

When we deploy infrastructure, we can schedule another template to run at a specific time, for example to destroy the infrastructure or resize it.

This example shows the basic logic, but it does not create a real VM; it only runs some scripts that print the injected Terraform variables when the job runs.

Define the sizes as global Terraform variables, for example SIZE_SMALL and SIZE_BIG. These variables will be injected dynamically as Terraform variables into the future templates "schedule1" and "schedule2".

We define a job template that creates the infrastructure using a small size and creates two schedules at the end of the template.

We will have three templates: one to create the virtual machine and two to resize it.

Once the workspace has run and created the infrastructure, two schedules are defined automatically.

Different jobs will be executed.

Inject the new size using global terraform variables

Inject the new size using global terraform variables

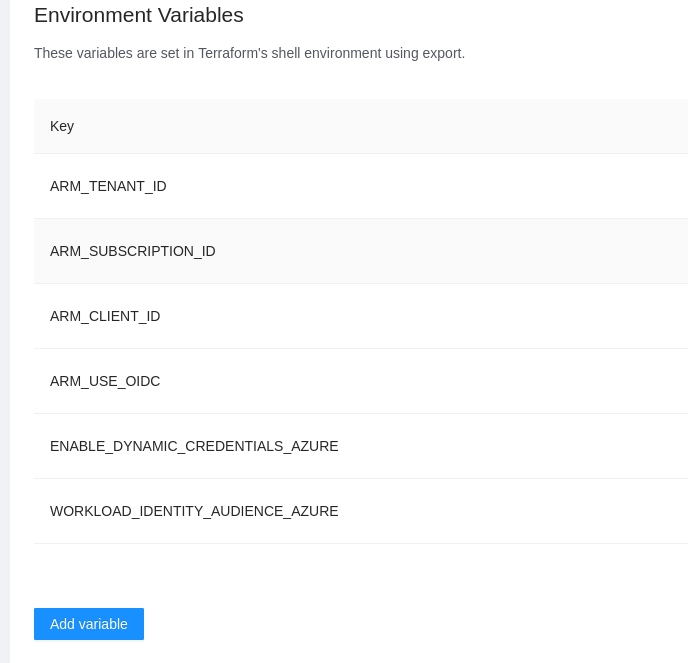

The dynamic provider credential setup in Azure can be done with the Terraform code available at the following link:

https://github.com/AzBuilder/terrakube/tree/main/dynamic-credential-setup/azure

The code also creates a sample workspace with all the required environment variables, which can be used to test the functionality using the CLI-driven workflow.

Make sure to mount your public and private key to the API container as explained

Validate that the following Terrakube API endpoints are working:

Set terraform variables using: "variables.auto.tfvars"

Run Terraform apply to create all the federated credential setup in AWS and a sample workspace in terrakube for testing

The following Terraform code can be used for testing:

When running a job, Terrakube will authenticate to Azure without any credentials stored inside the workspace.

To use Dynamic Provider credentials, we need to generate a public and private key pair that will be used to generate and validate the federated tokens. We can use the following commands:

Make sure the private key starts with "-----BEGIN PRIVATE KEY-----". If it does not, the following command can be used to convert the private key to the correct format:

The public and private keys need to be mounted inside the container, and their paths should be specified in the following environment variables:

DynamicCredentialPublicKeyPath

DynamicCredentialPrivateKeyPath

To use Dynamic Provider credentials, the following public endpoints were added. These endpoints need to be accessible to your cloud providers.

The following environment variables can be used to customize the dynamic credentials configuration:

DynamicCredentialId: The kid in the JWKS endpoint (default: 03446895-220d-47e1-9564-4eeaa3691b42)

DynamicCredentialTtl: The TTL for the federated token generated internally in Terrakube (default: 30)

DynamicCredentialPublicKeyPath: The path to the public key used to validate the federated tokens

DynamicCredentialPrivateKeyPath: The path to the private key used to generate the federated tokens

Terrakube generates a JWT token internally. This token is used to authenticate to your cloud provider.

The token structure looks like the following for Azure

The token structure looks like the following for GCP

The token structure looks like the following for AWS
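When debugging a trust configuration on the cloud provider side, it can help to decode a federated token's payload locally and inspect its claims. The sketch below runs in Node.js and assumes nothing about Terrakube's actual claims: the `decodeJwtPayload` helper, the `alg` value, and the `sub` and `aud` values are hypothetical placeholders. Only the `kid` is taken from the default listed above. The sample token is unsigned and built inline so the example is self-contained.

```javascript
// Decode the payload (second segment) of a JWT without verifying the signature.
// For inspection only; unverified claims must never be trusted.
function decodeJwtPayload(token) {
  const parts = token.split('.');
  if (parts.length !== 3) {
    throw new Error('expected a JWT with three dot-separated segments');
  }
  return JSON.parse(Buffer.from(parts[1], 'base64url').toString('utf8'));
}

// Build a throwaway, unsigned token with made-up claims.
const encode = (obj) => Buffer.from(JSON.stringify(obj)).toString('base64url');
const sampleToken = [
  // Header: alg is an assumption; kid is the documented DynamicCredentialId default.
  encode({ alg: 'RS256', typ: 'JWT', kid: '03446895-220d-47e1-9564-4eeaa3691b42' }),
  // Payload: hypothetical claim values for illustration.
  encode({ sub: 'example-subject', aud: 'example-audience' }),
  'signature-placeholder',
].join('.');

console.log(decodeJwtPayload(sampleToken));
```

Comparing the decoded claims with the subject and audience configured in the cloud provider is usually the quickest way to spot a mismatch.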

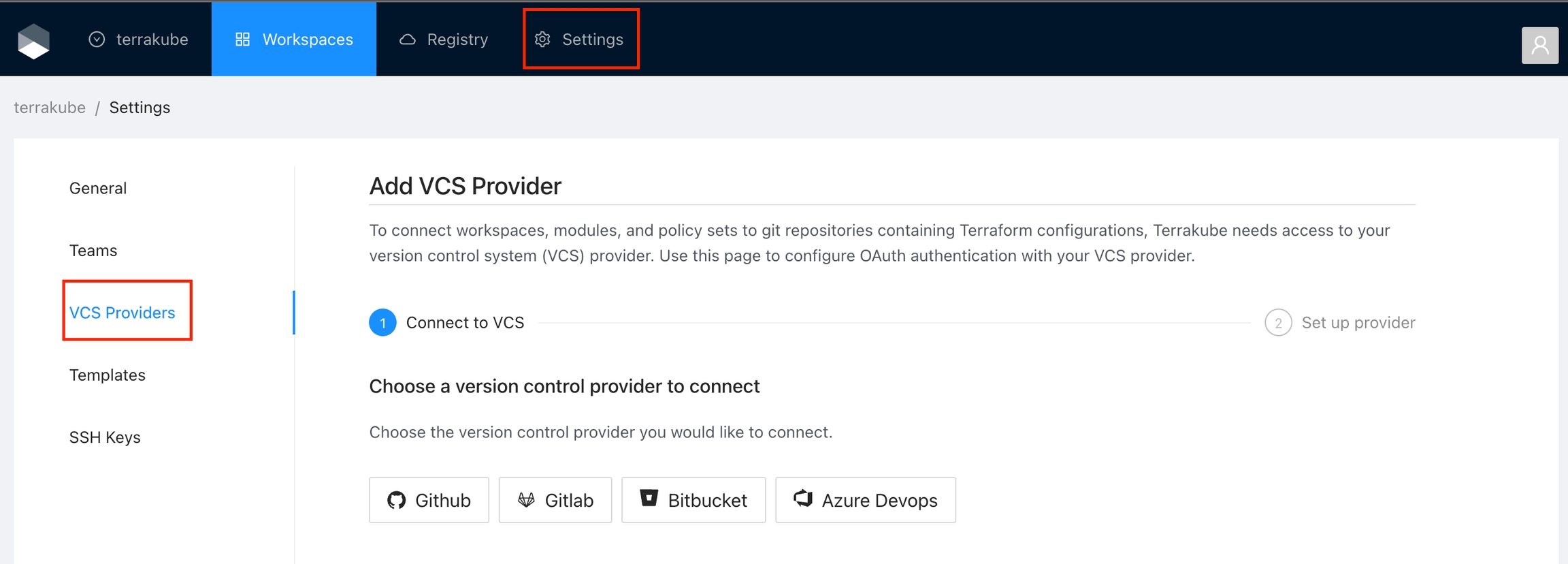

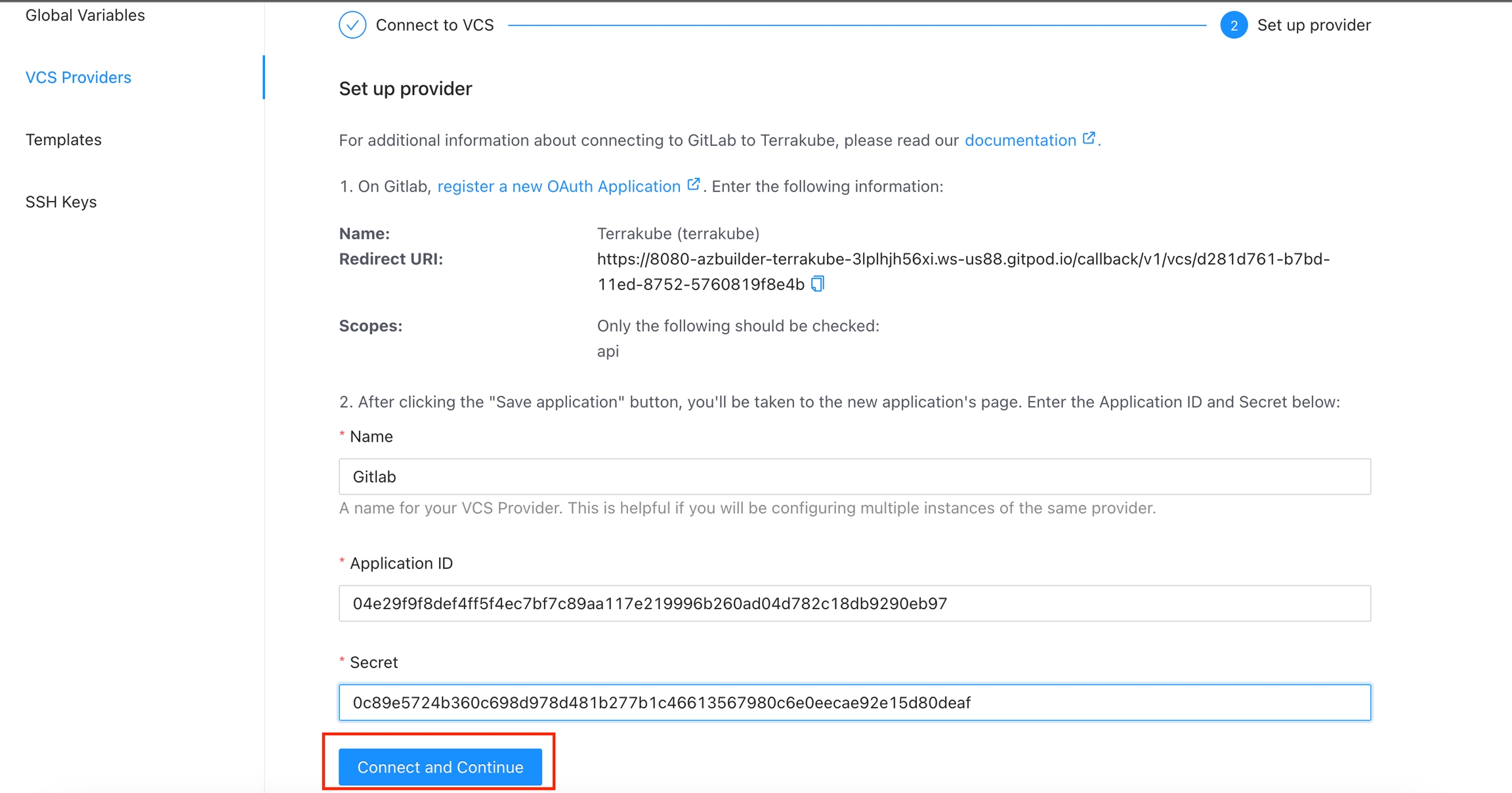

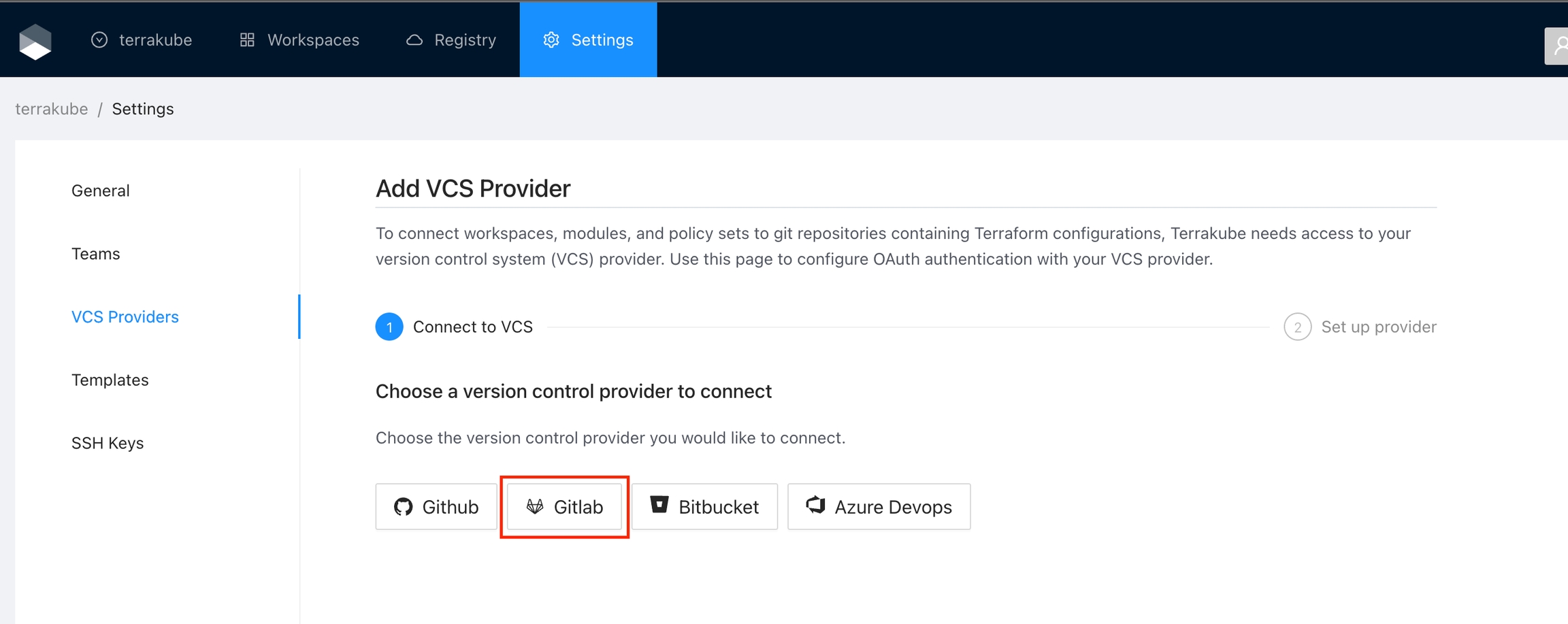

To use repositories from GitLab.com with Terrakube workspaces and modules, follow these steps:

Navigate to the desired organization and click the Settings button, then on the left menu select VCS Providers

Click the Gitlab button

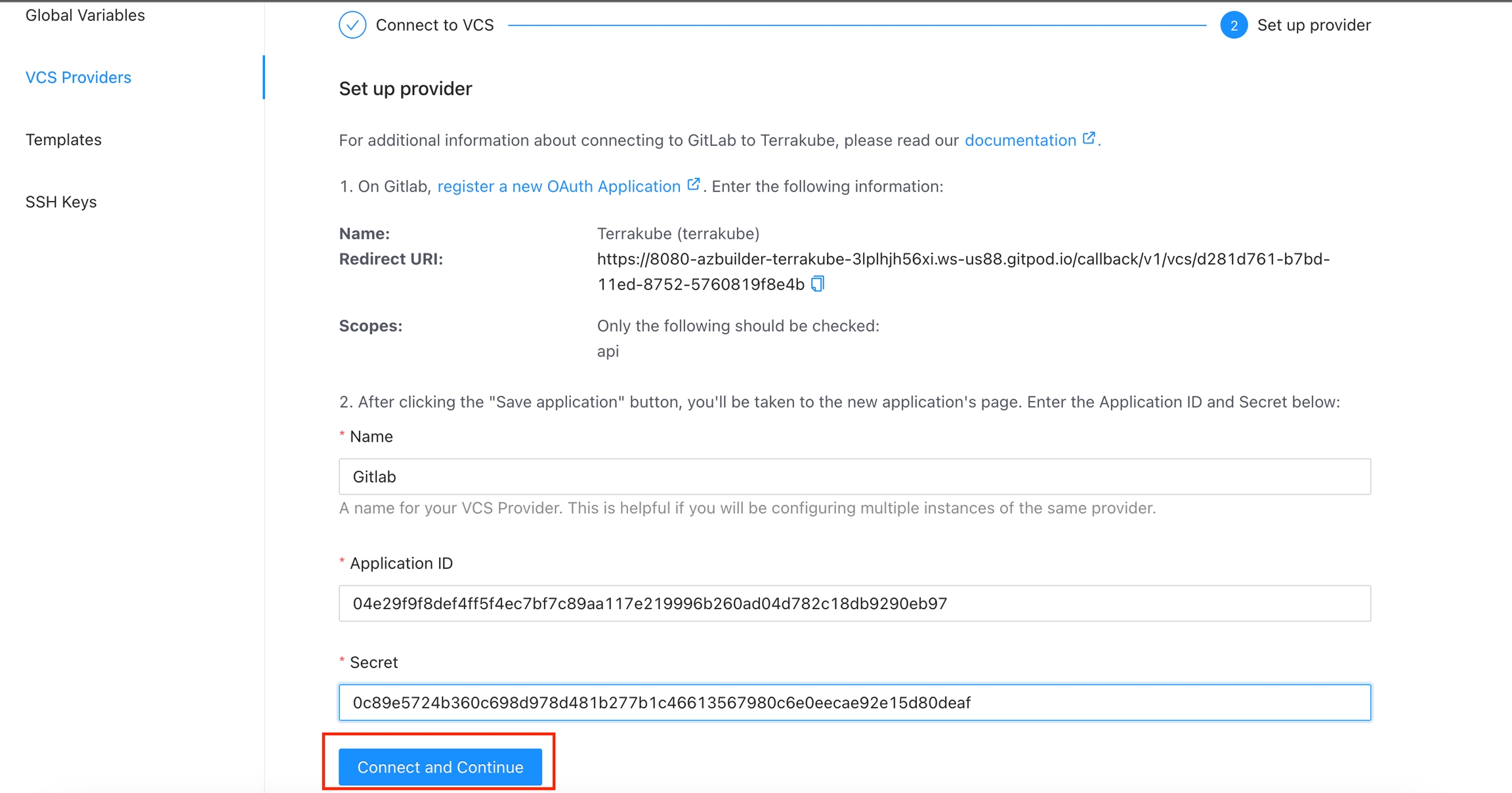

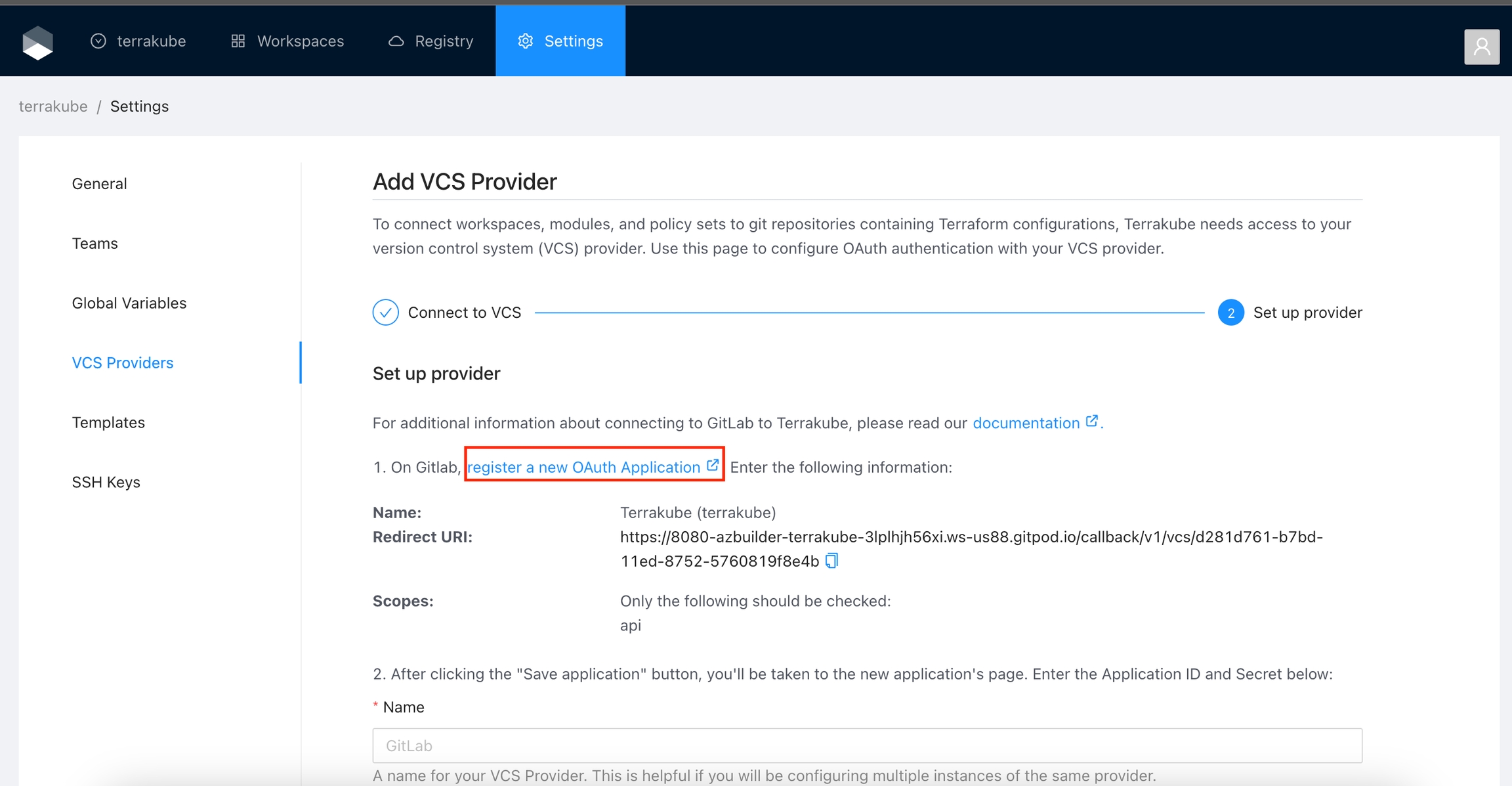

In the next screen, click the link to register a new application in GitLab

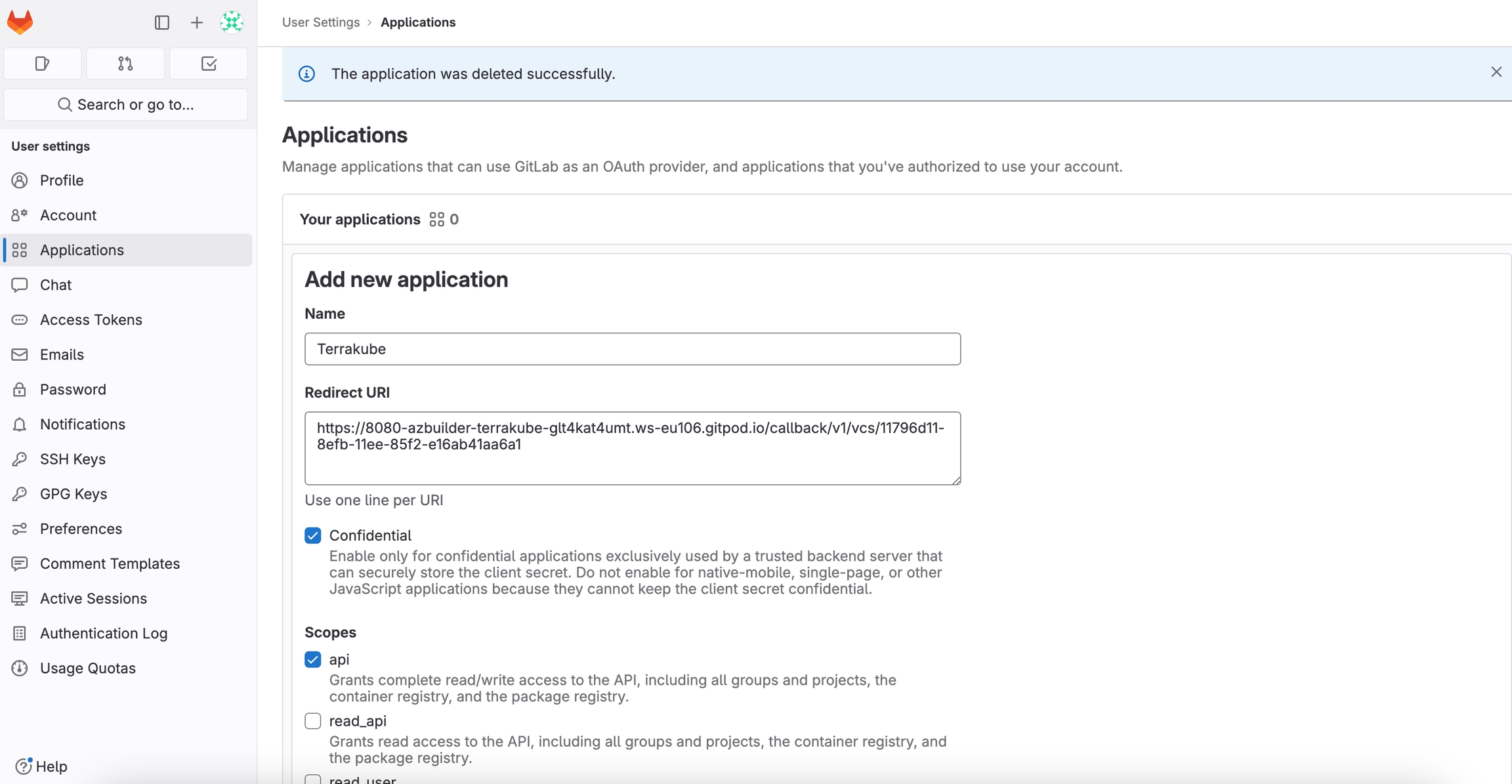

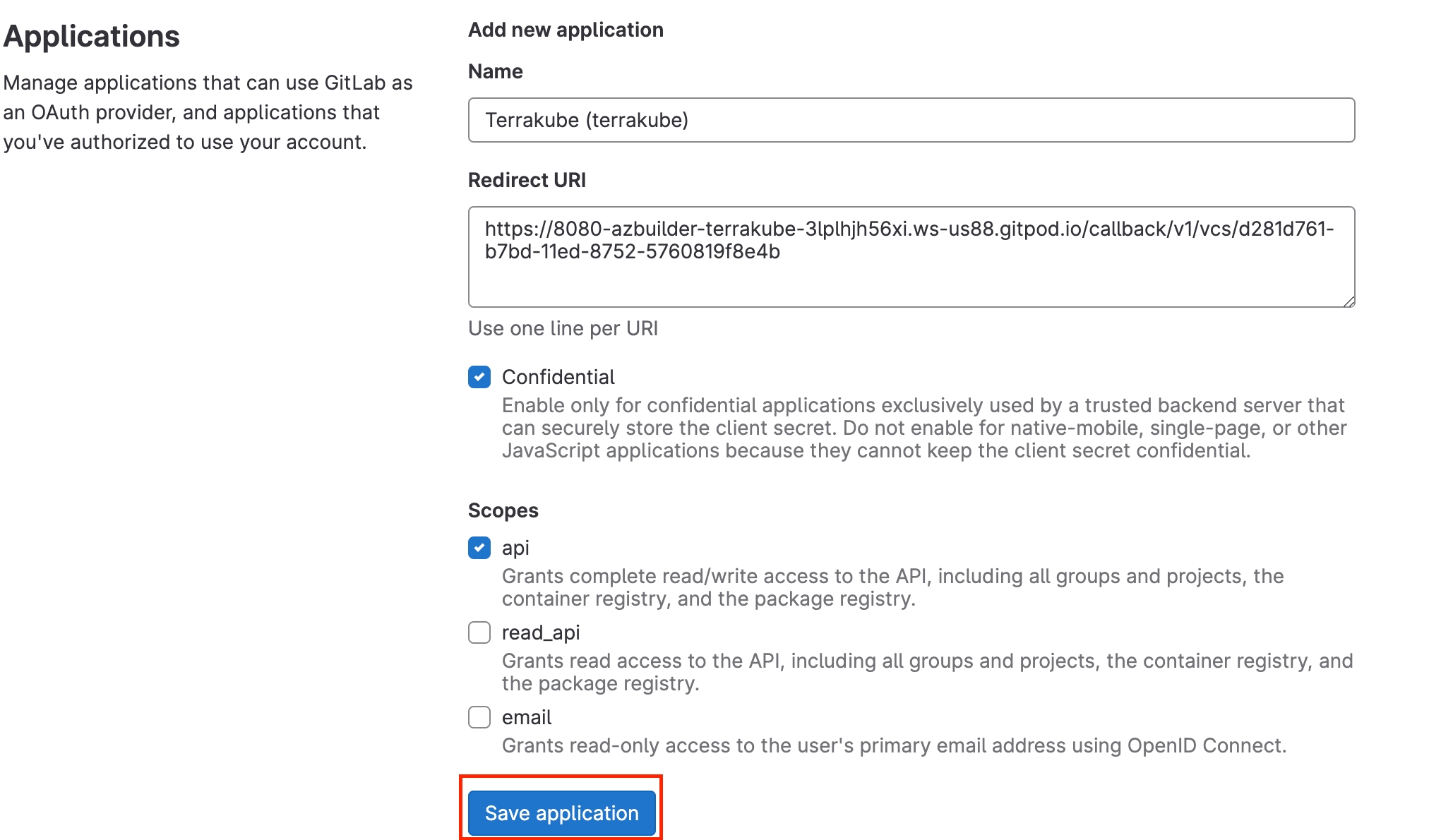

On GitLab, complete the required fields and click the Save application button

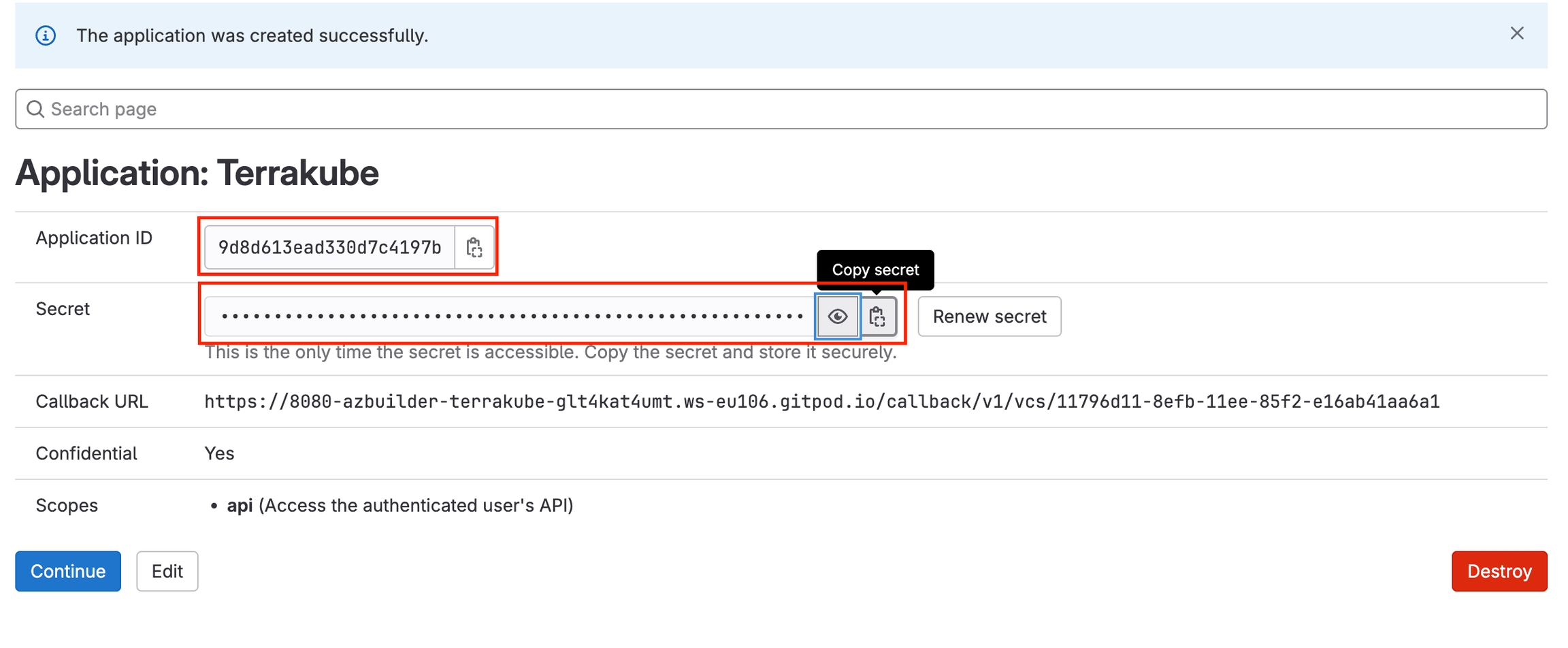

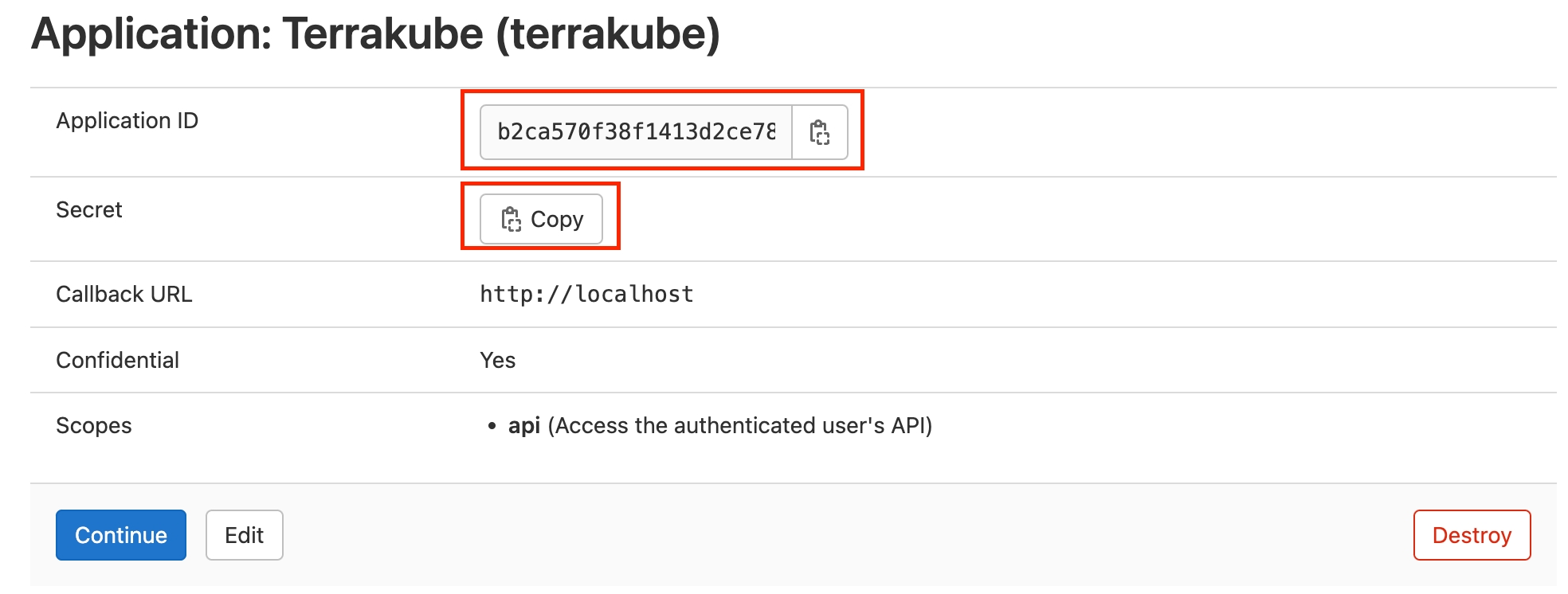

In the next screen, copy the Application ID and Secret

Go back to Terrakube to enter the information you copied from the previous step. Then, click the Connect and Continue button.

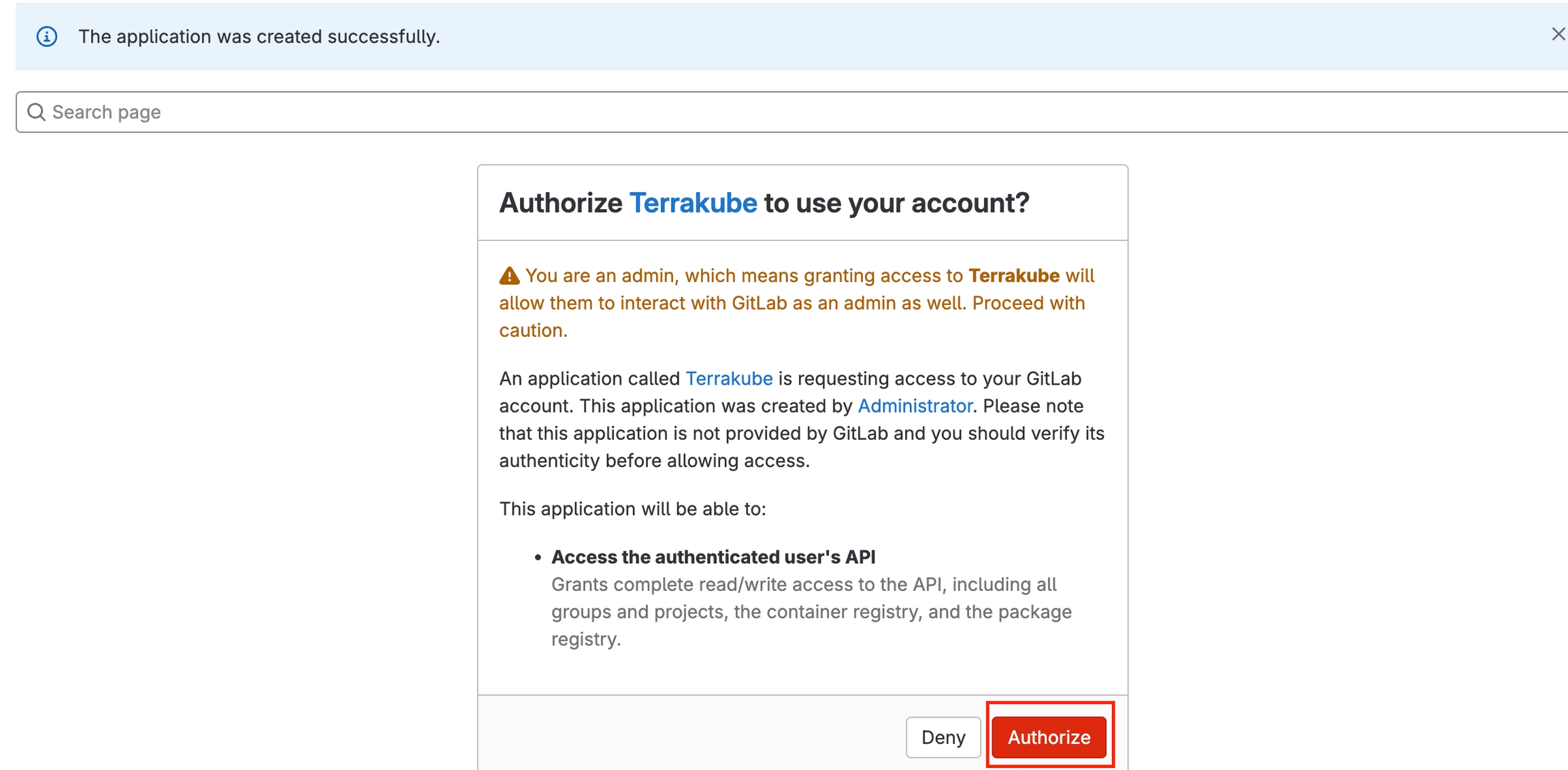

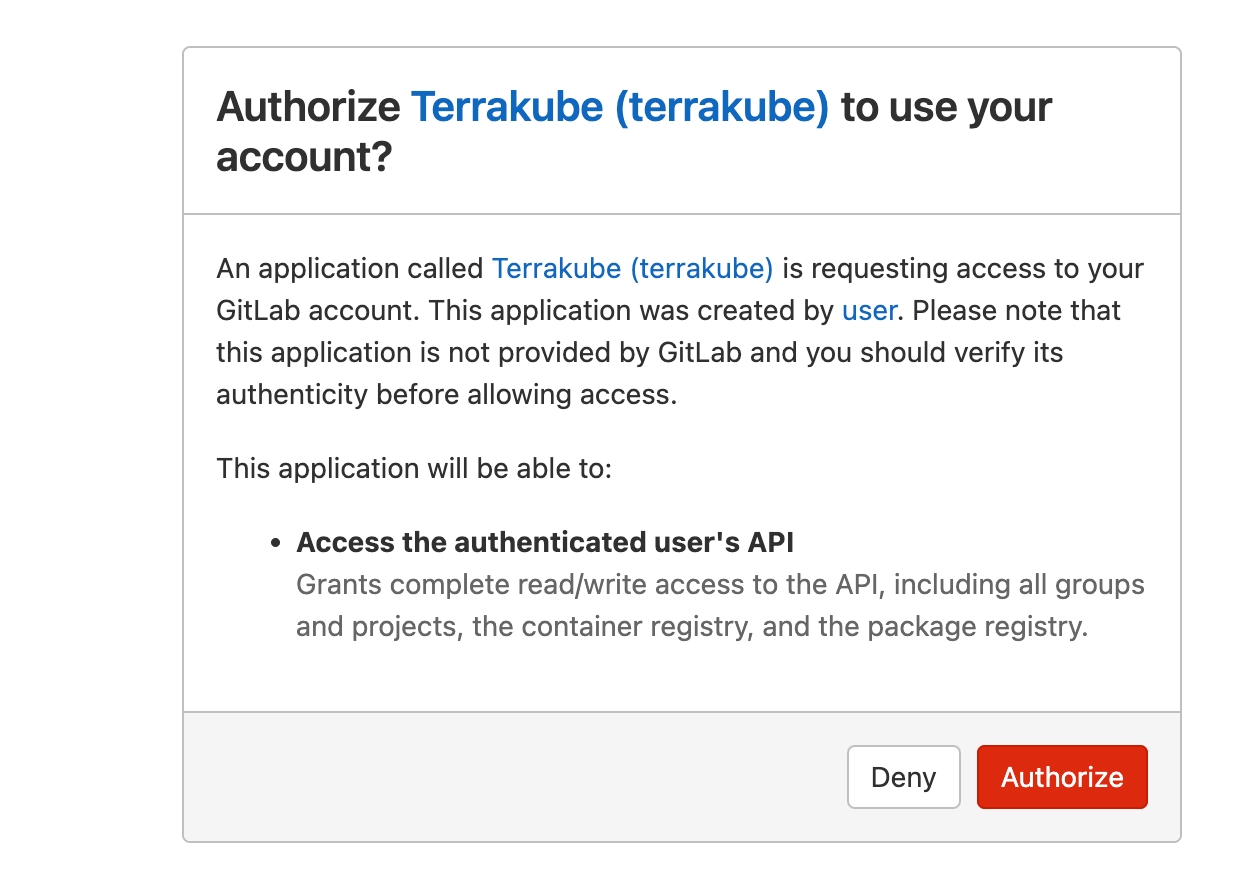

You will see a GitLab window. Click the Authorize button to complete the connection.

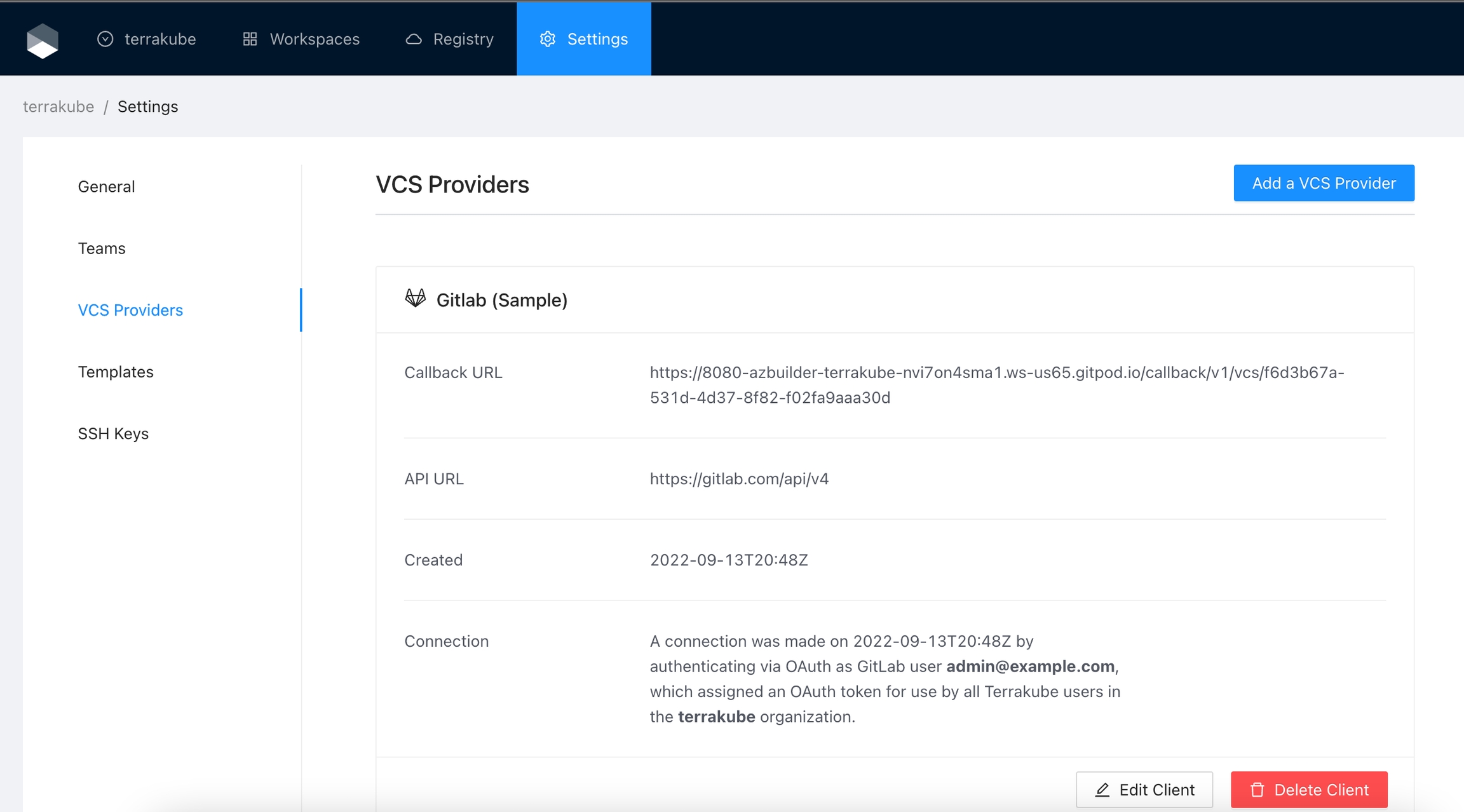

Finally, if the connection was established successfully, you will be redirected to the VCS provider’s page in your organization. You should see the connection status with the date and the user that created the connection.

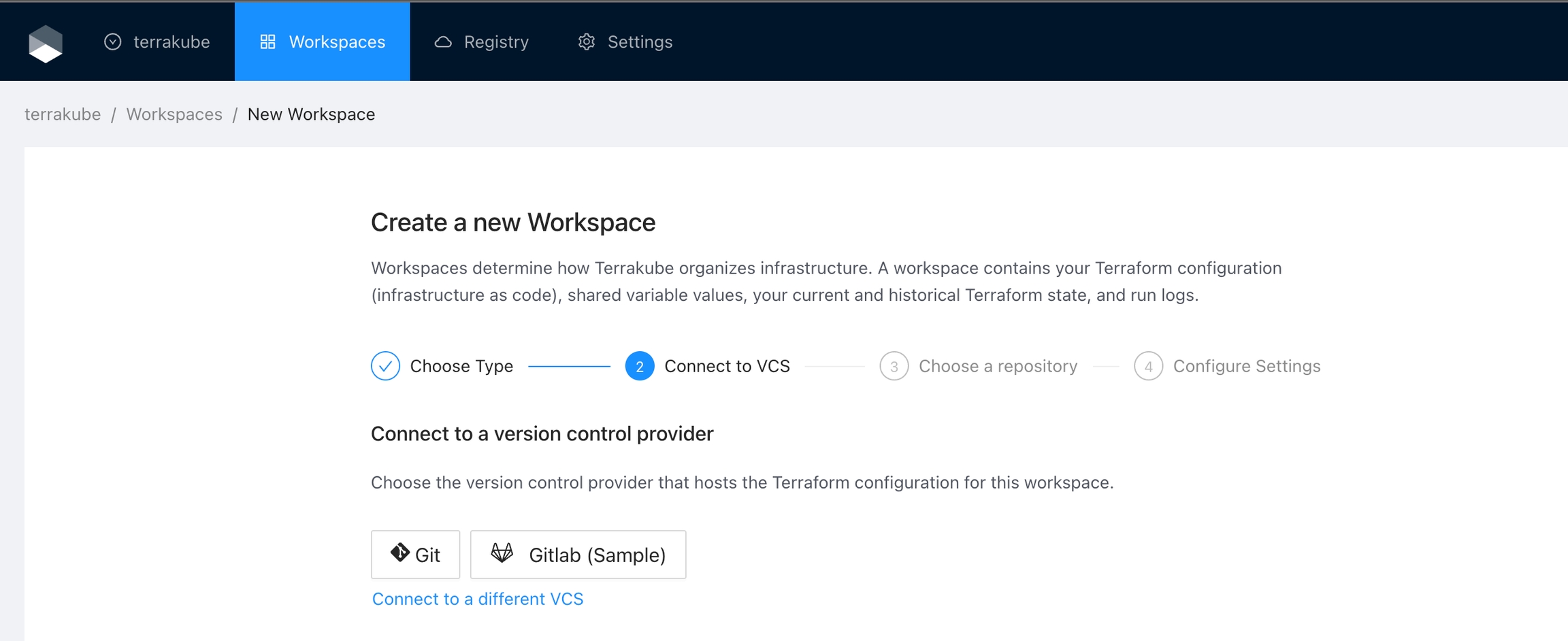

And now, you will be able to use the connection in your workspaces and modules:

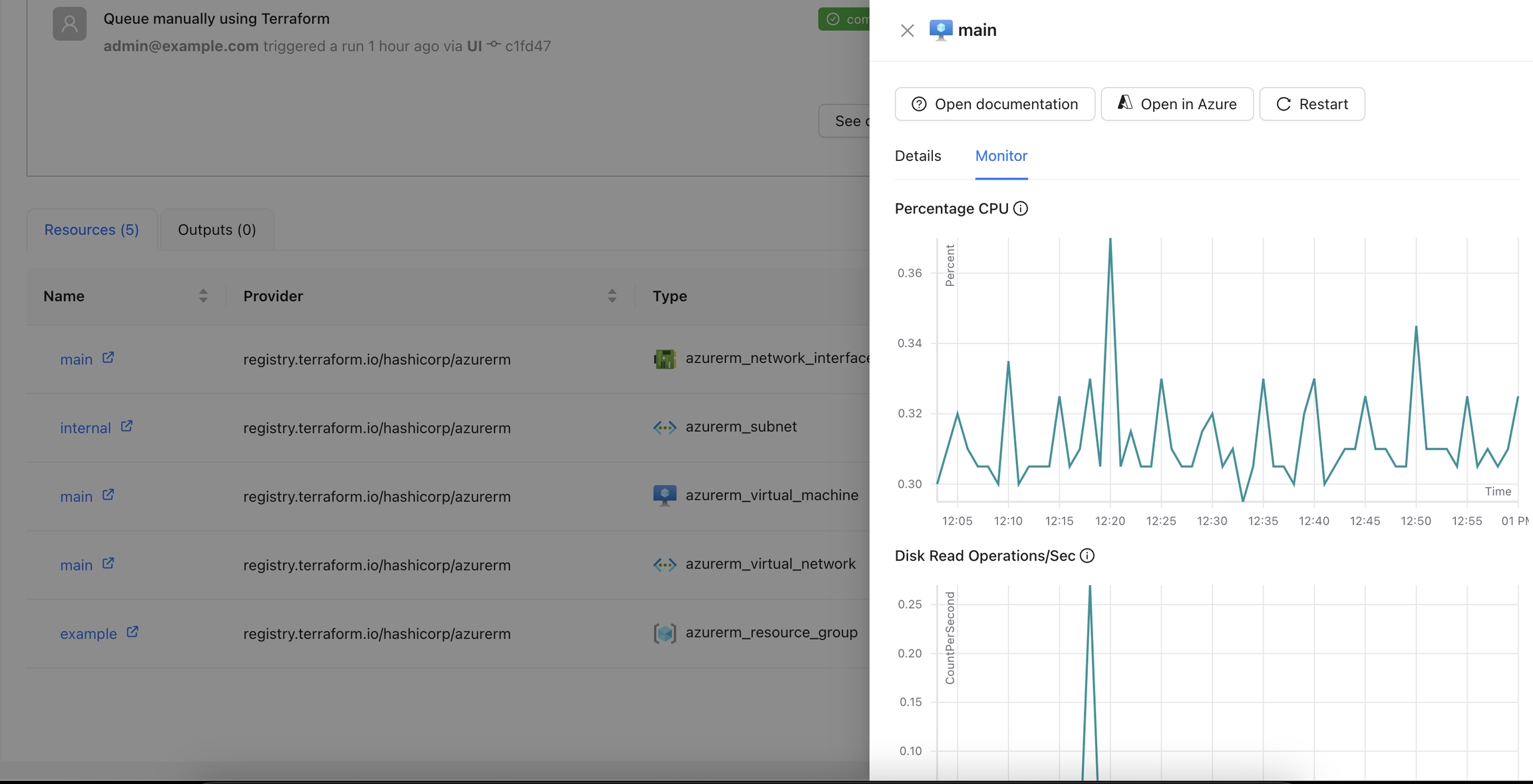

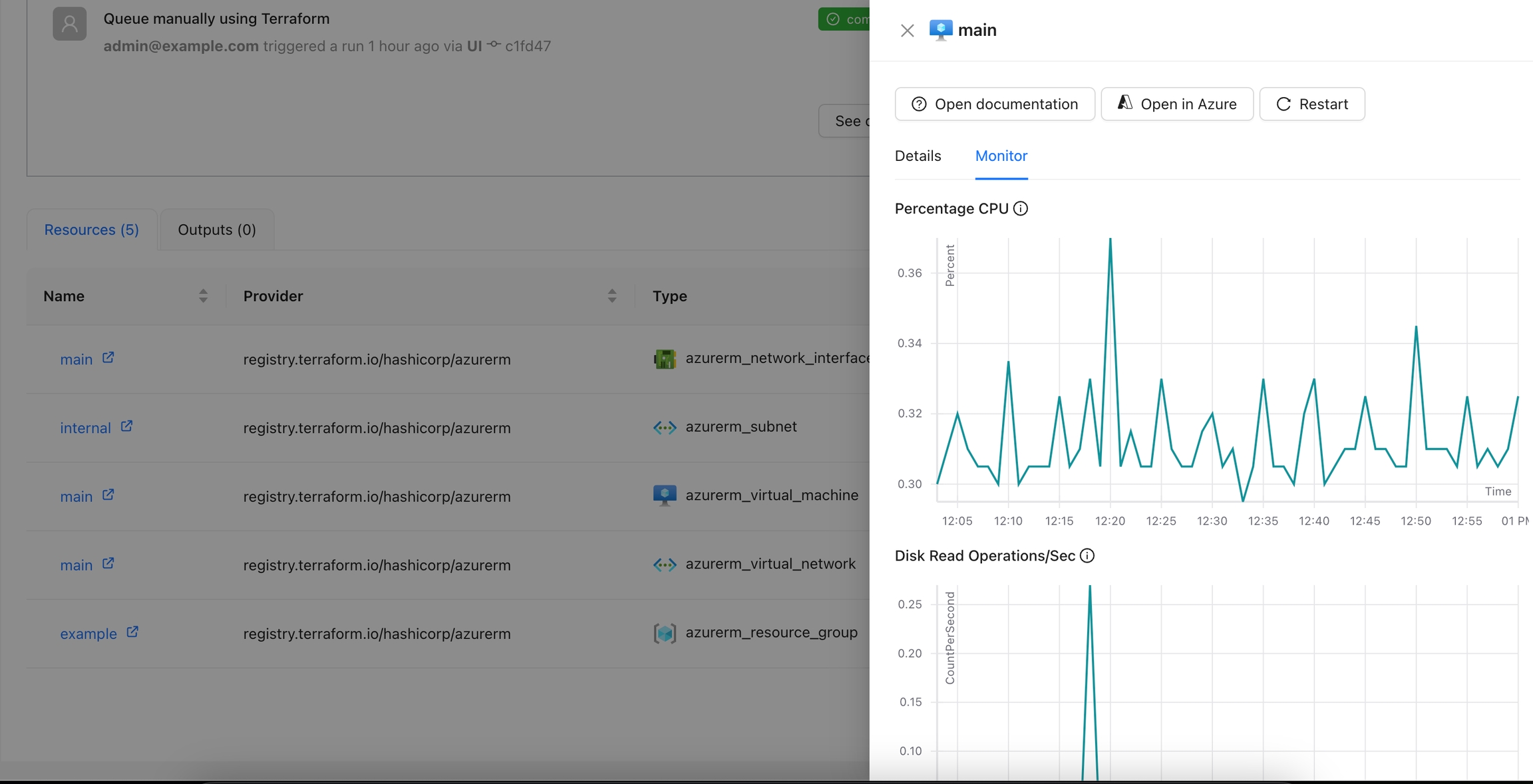

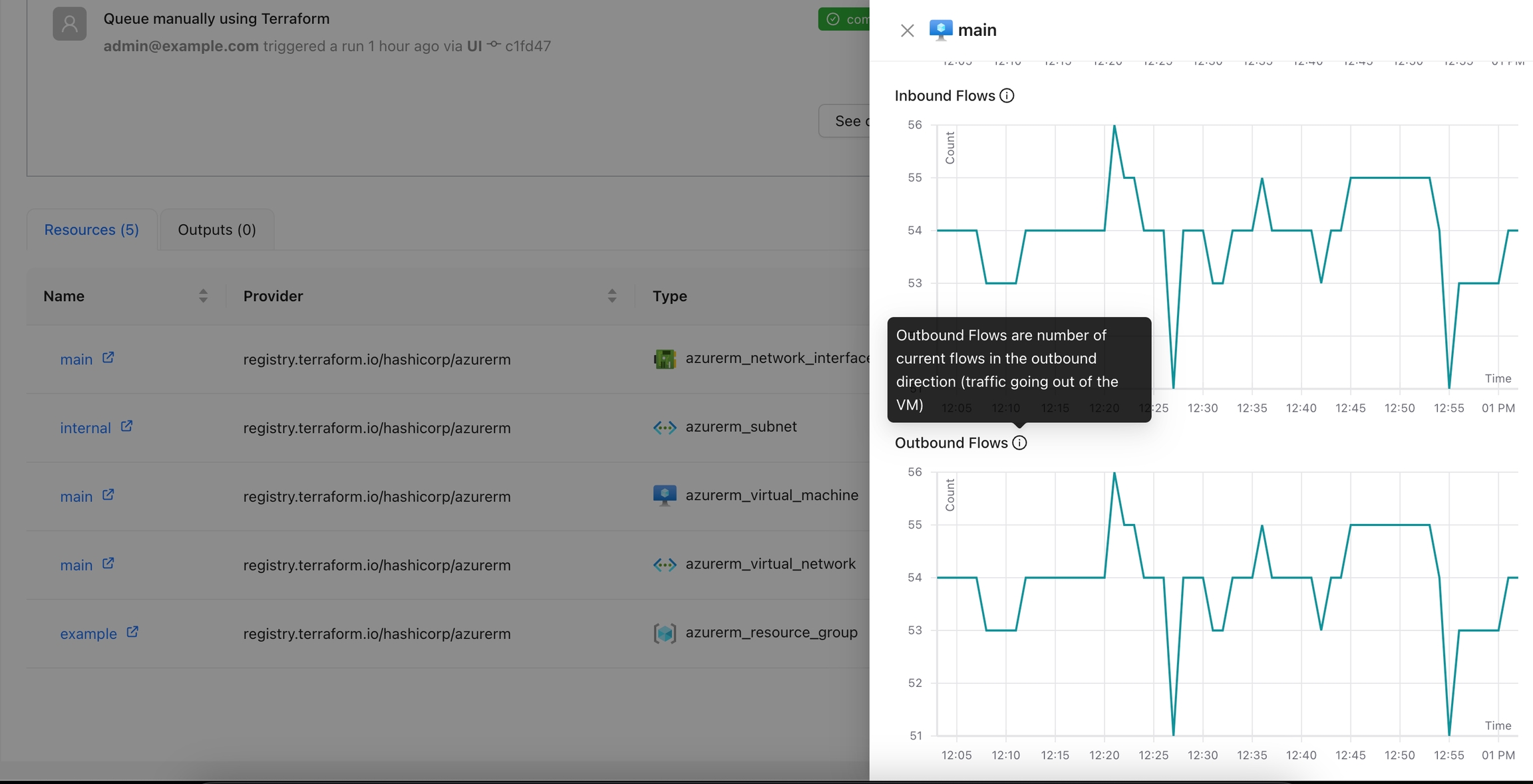

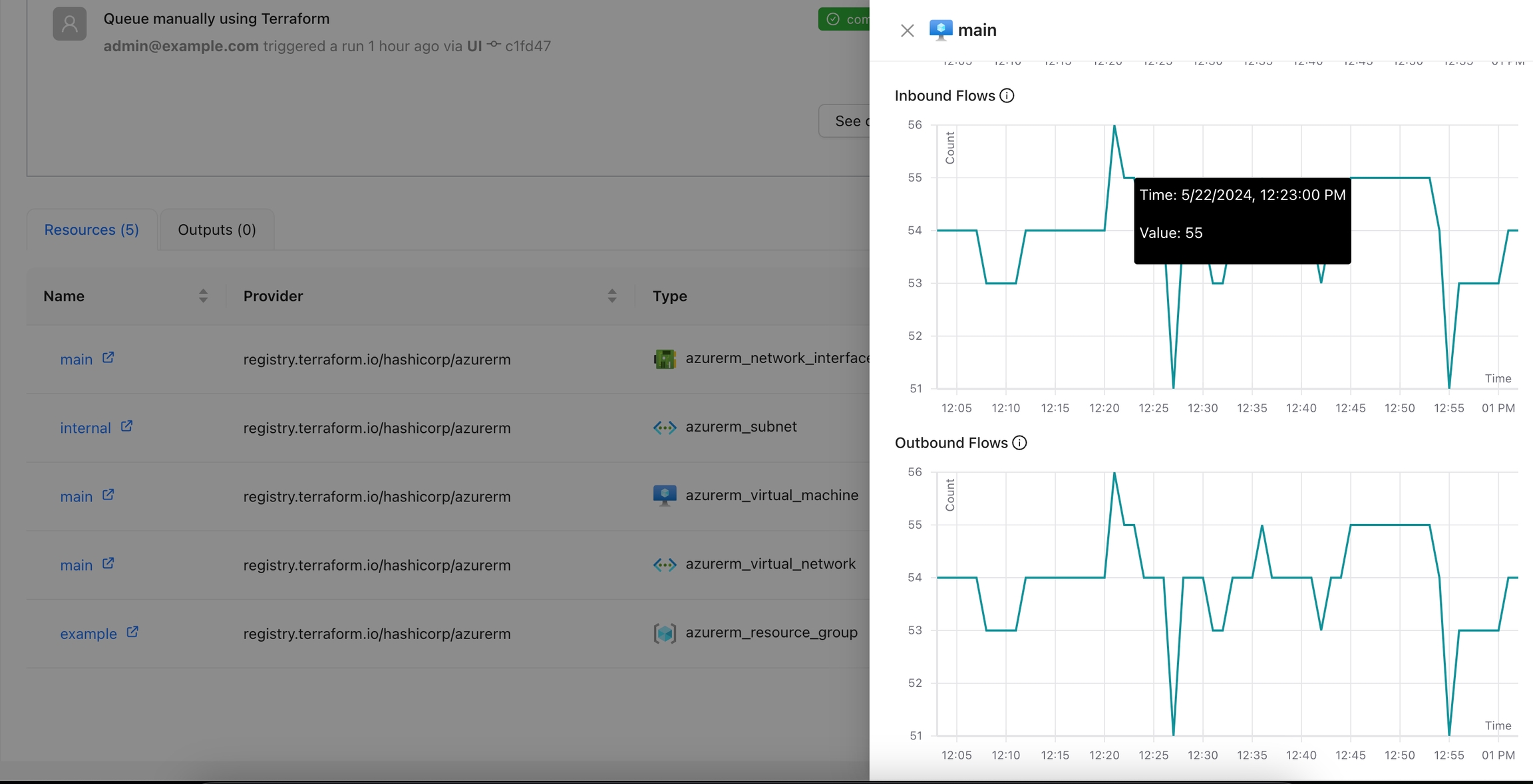

The Azure Monitor Metrics action allows users to fetch and visualize metrics from Azure Monitor for a specified resource.

By default, this action is disabled; when enabled, it appears for all azurerm resources. If you want to display this action only for certain resources, use display criteria.

This action requires the following variables, defined as Workspace Variables or as Global Variables in the workspace's organization:

ARM_CLIENT_ID: The Azure Client ID used for authentication.

ARM_CLIENT_SECRET: The Azure Client Secret used for authentication.

ARM_TENANT_ID: The Azure Tenant ID associated with your subscription.

Navigate to the Workspace Overview or the Visual State and click on a resource name.

Click the Monitor tab to view metrics for the selected resource.

You can view additional details for the metrics using the tooltip.

You can see more details for each chart by navigating through it.

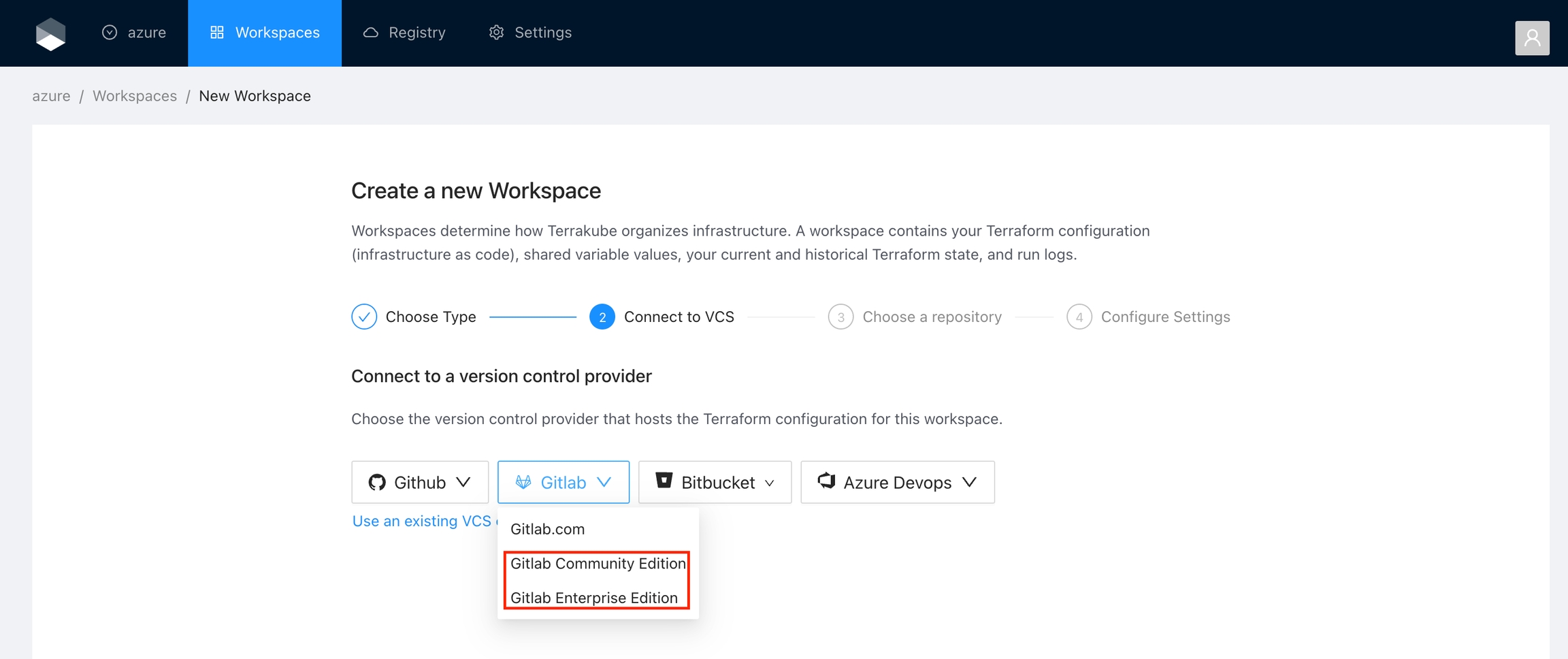

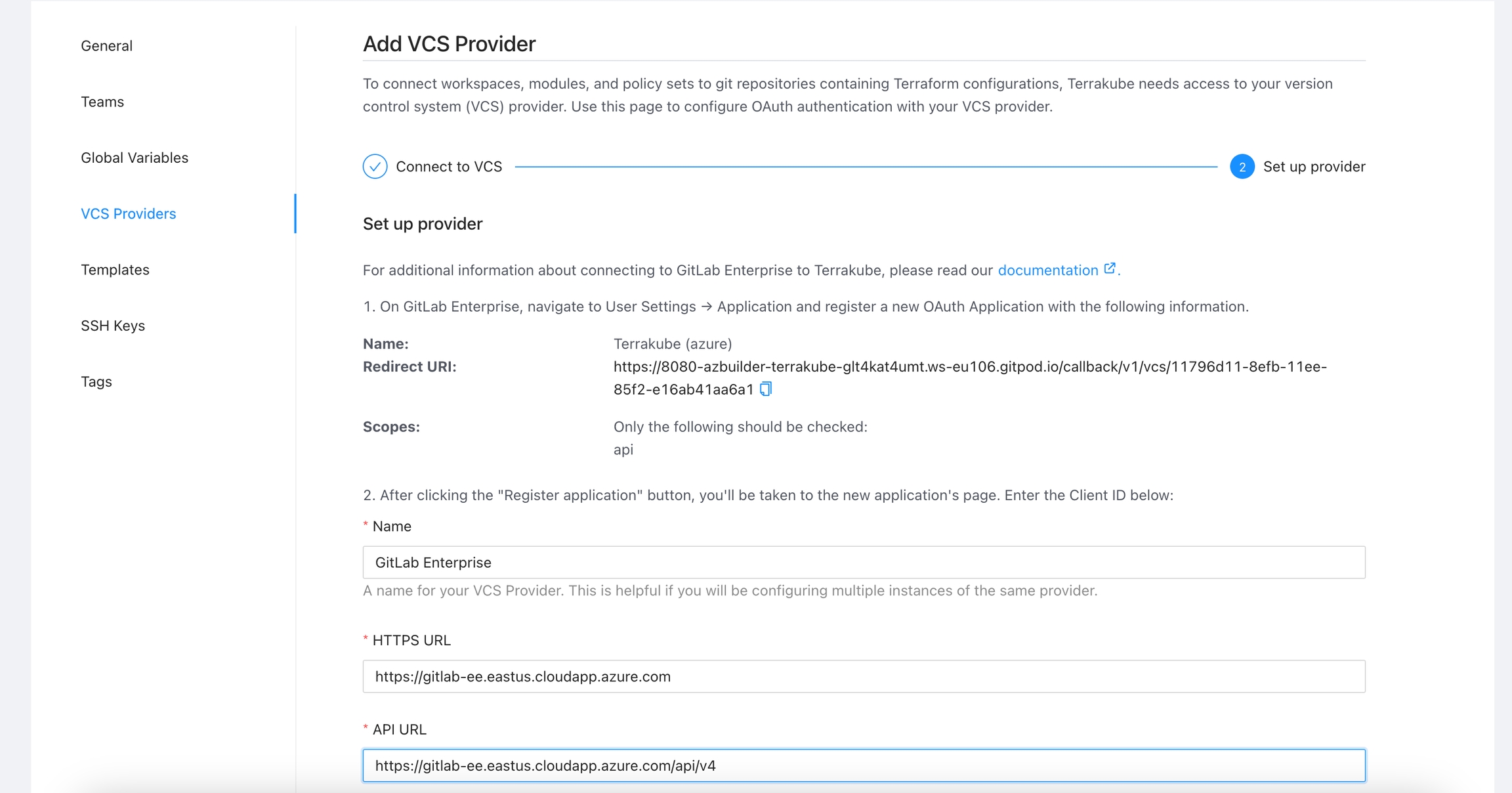

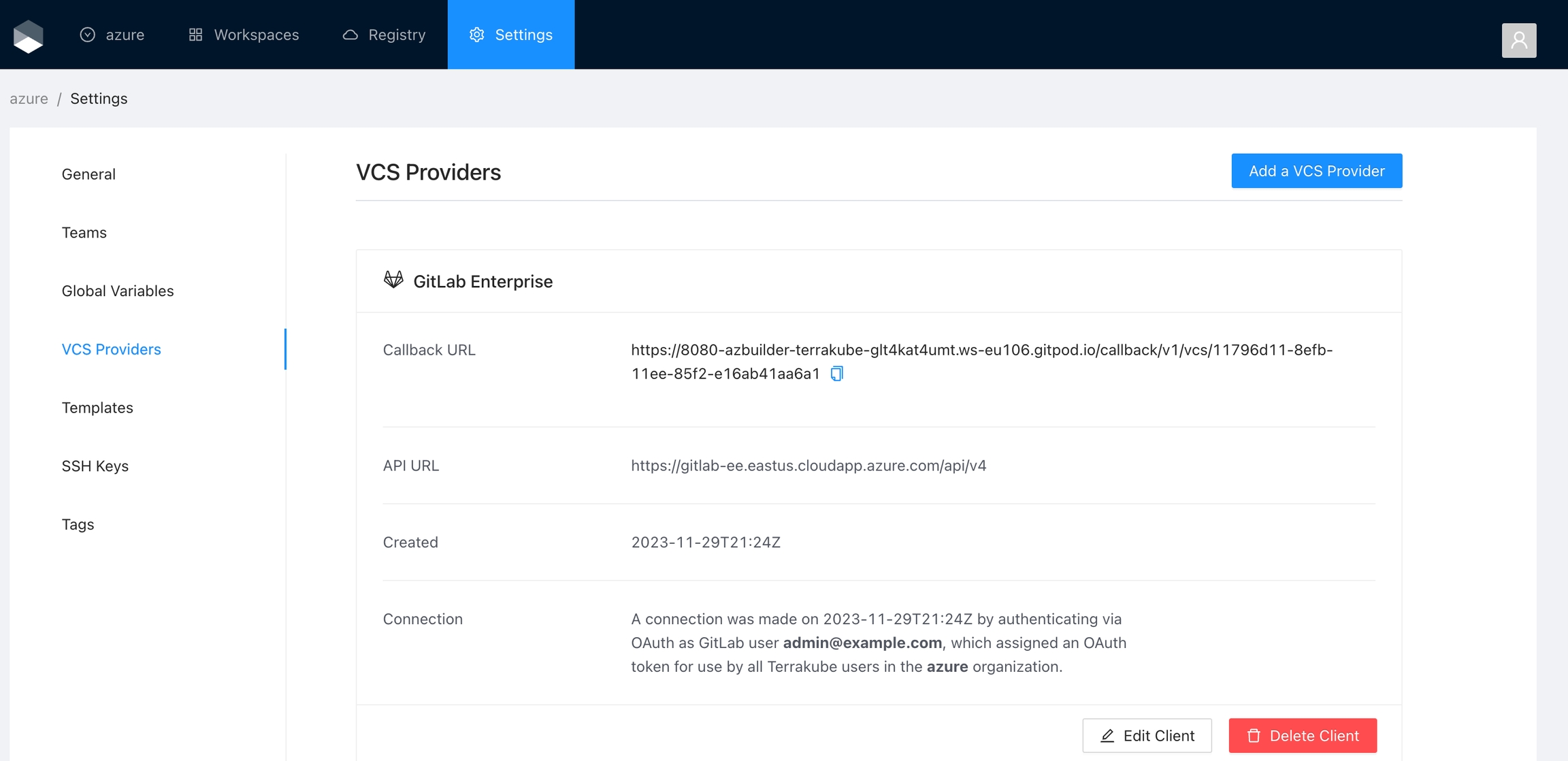

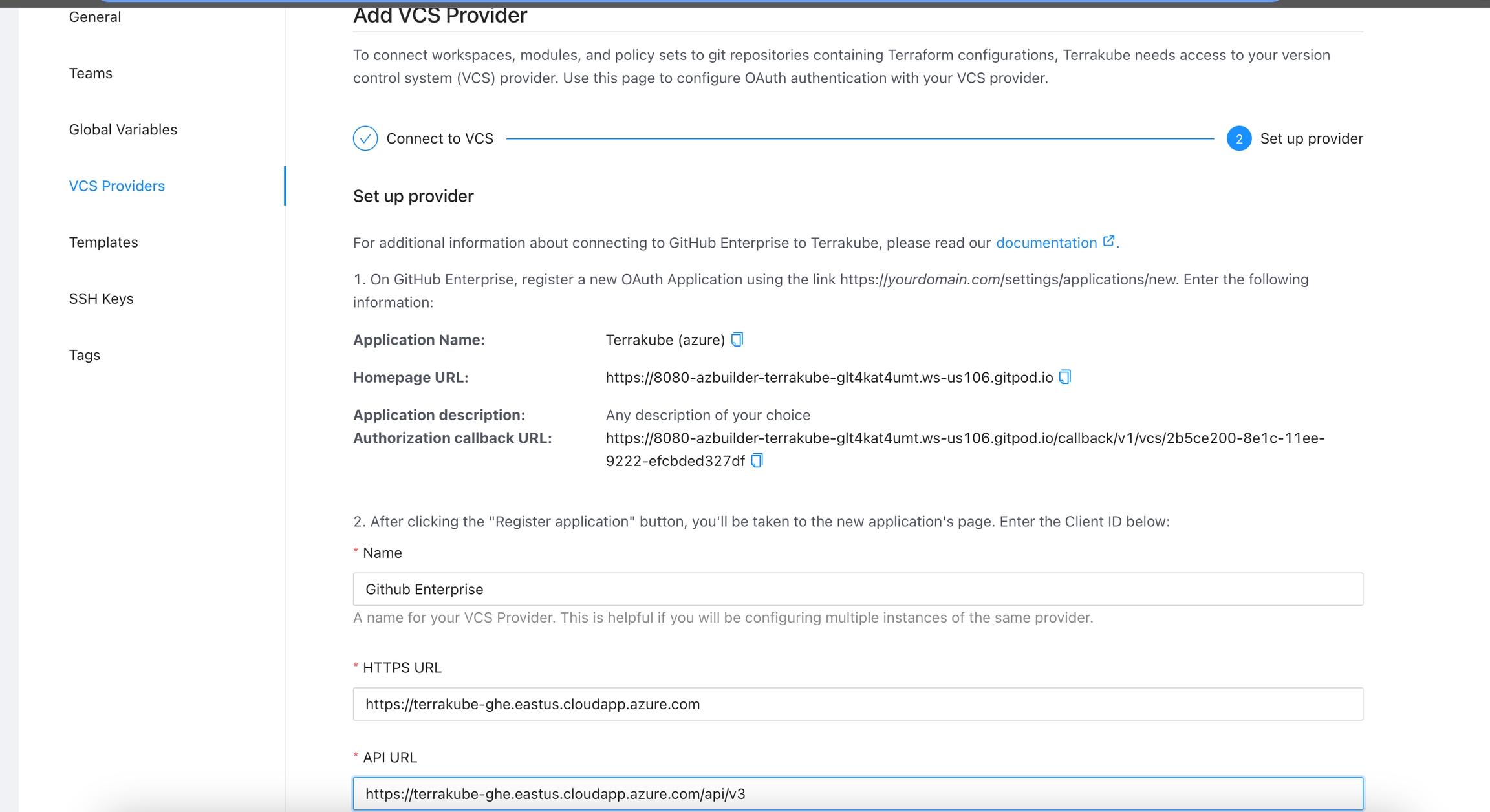

To use Terrakube’s VCS features with a self-hosted GitLab Enterprise Edition (EE) or GitLab Community Edition (CE), follow these instructions. For GitLab.com, see the GitLab.com instructions.

Go to the settings of the organization you want to configure.

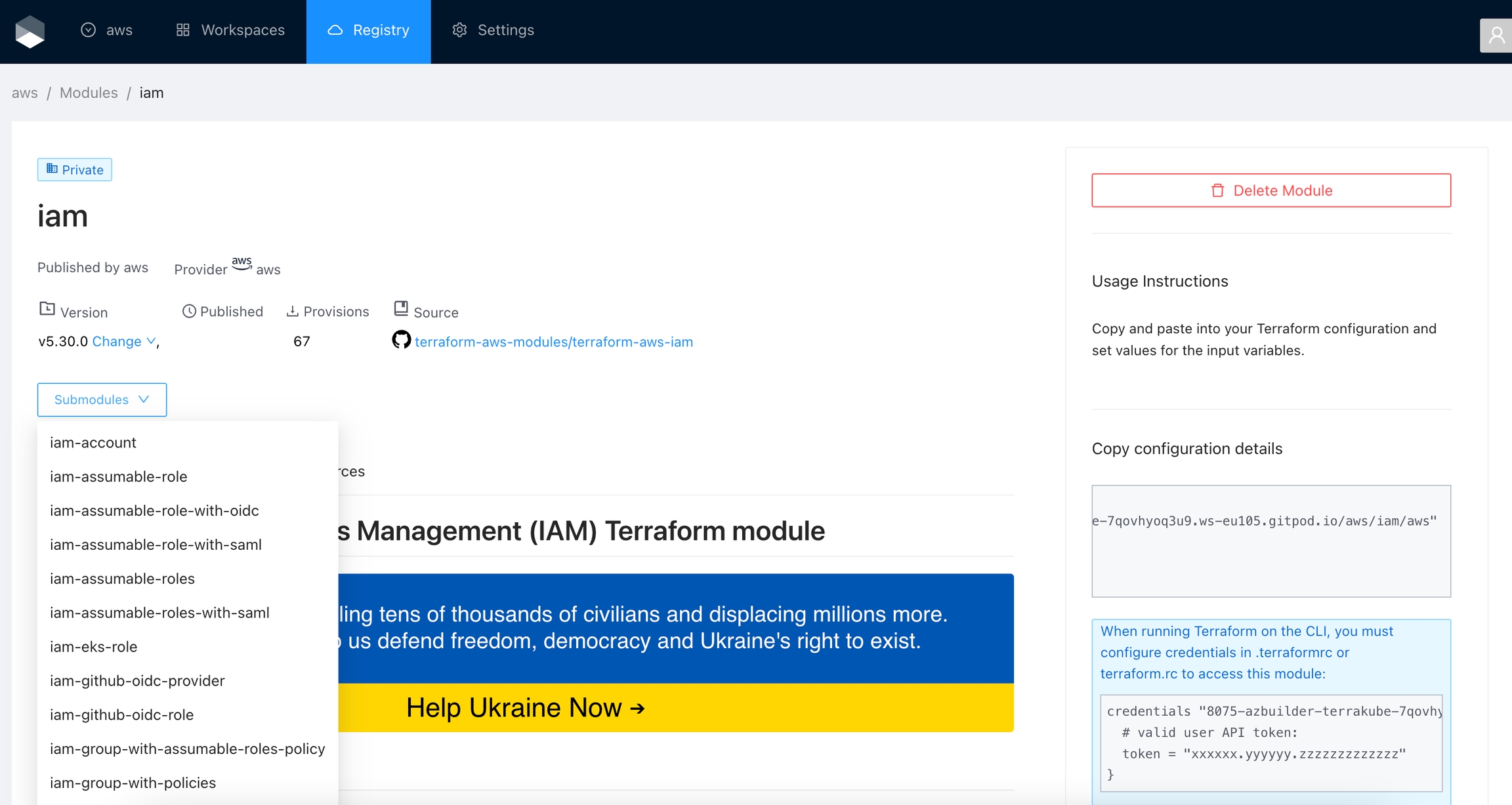

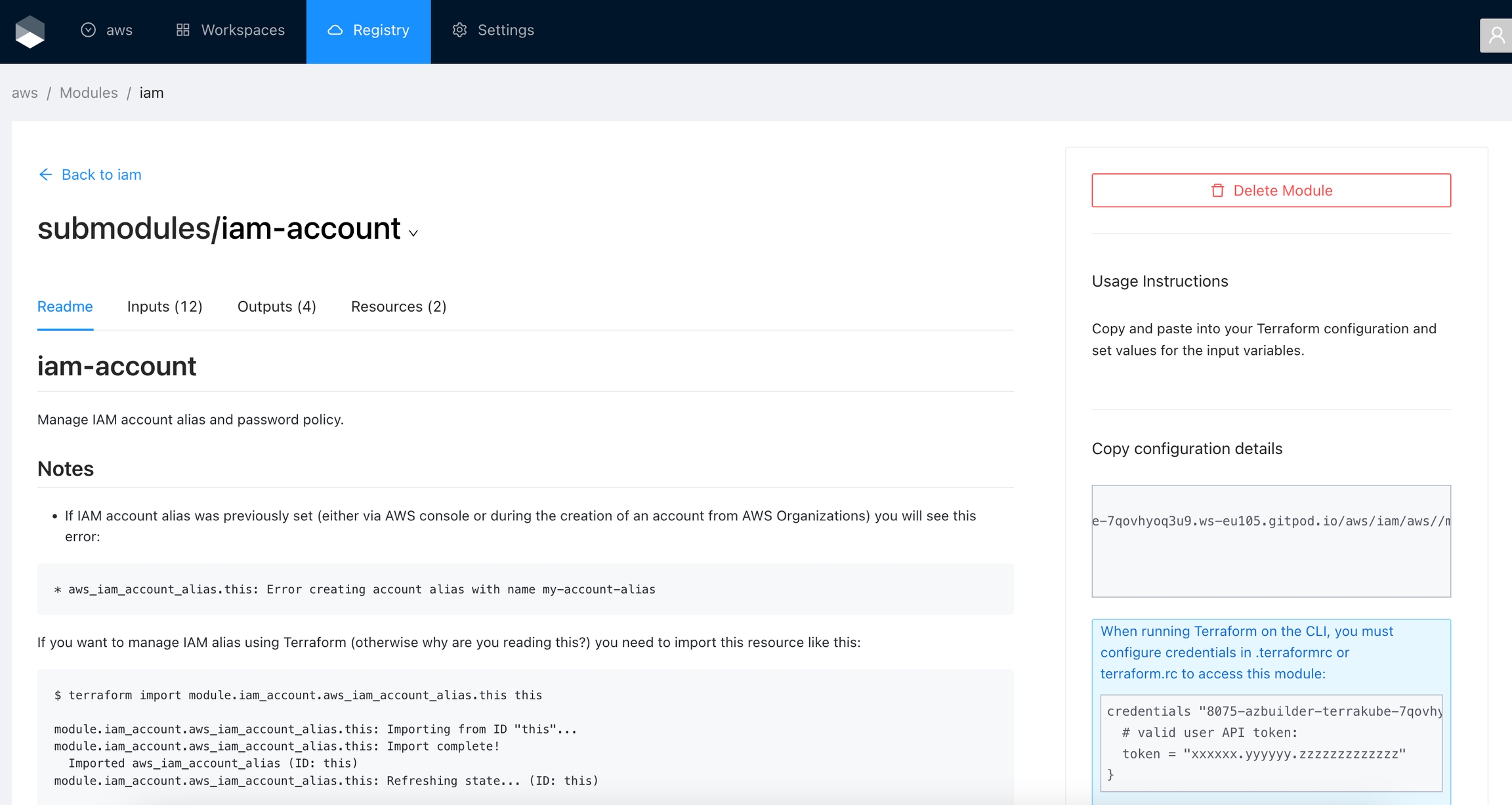

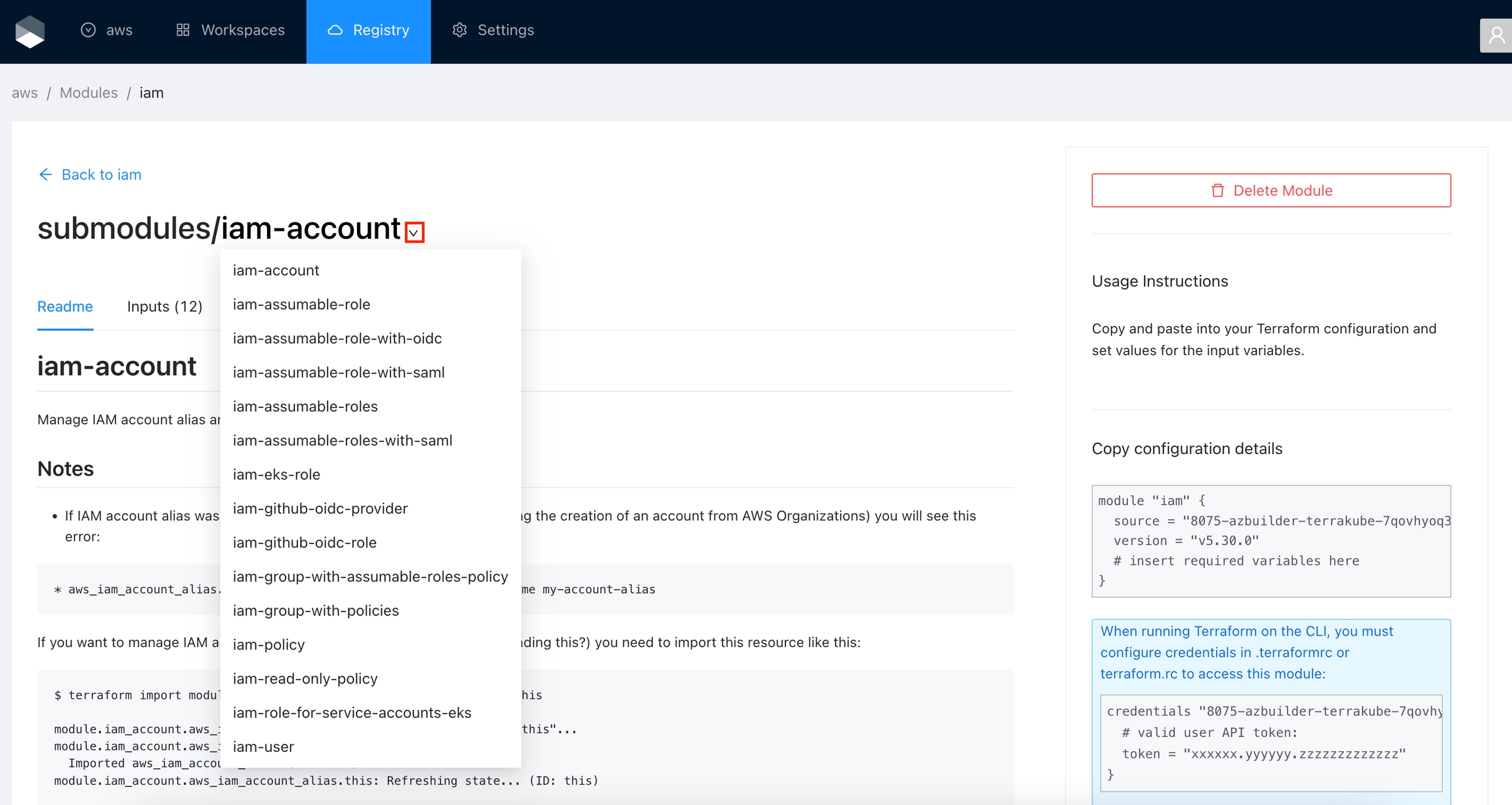

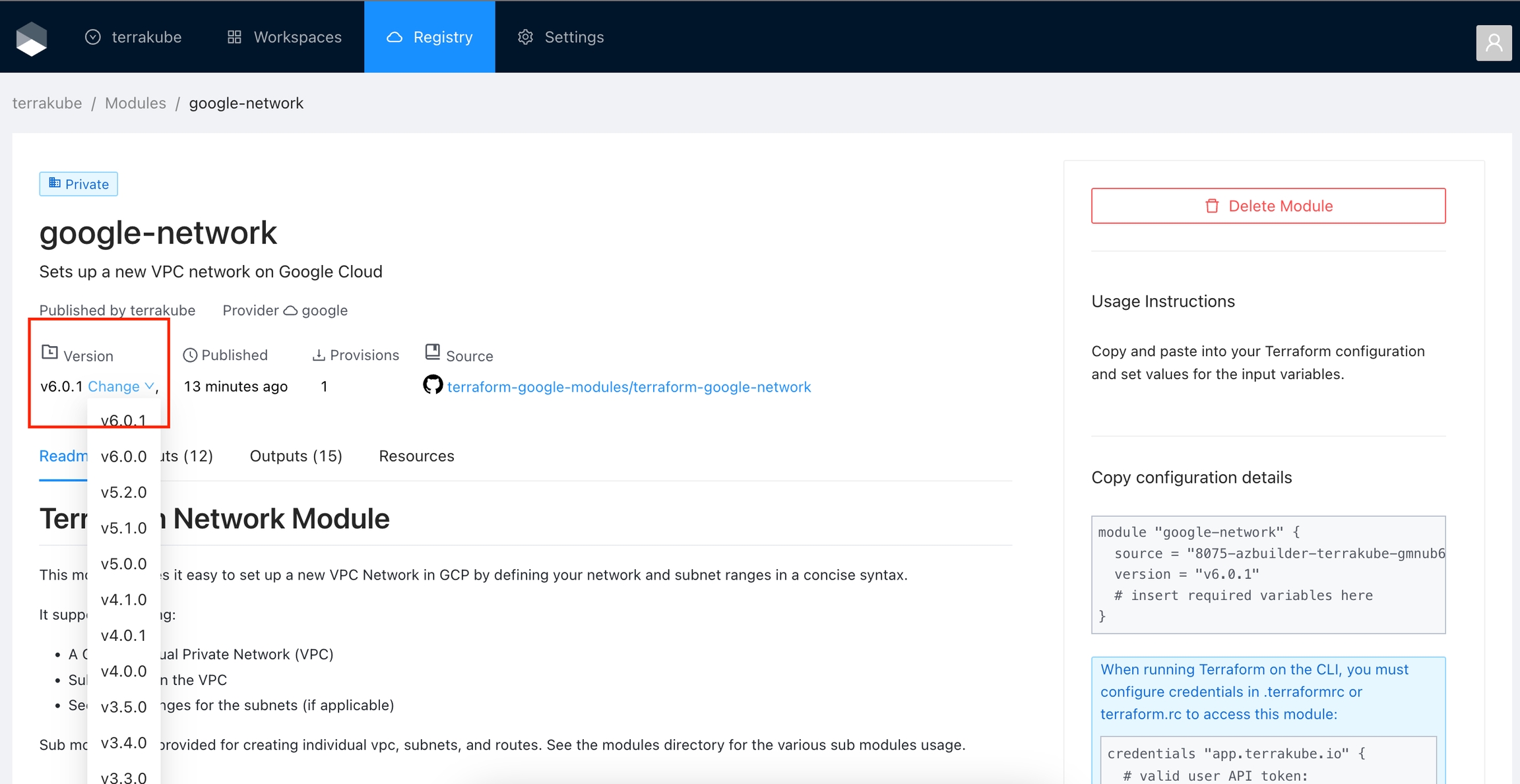

All users in an organization with Manage Modules permission can view the Terrakube private registry and use the available providers and modules.

Click Registry in the main menu, and then click the module you want to use.

On the module page, select the version in the dropdown list.

Terrakube actions allow you to extend the UI in Workspaces, functioning similarly to plugins. These actions enable you to add new functions to your Workspace, transforming it into a hub where you can monitor and manage your infrastructure and gain valuable insights. With Terrakube actions, you can quickly observe your infrastructure and apply necessary actions.

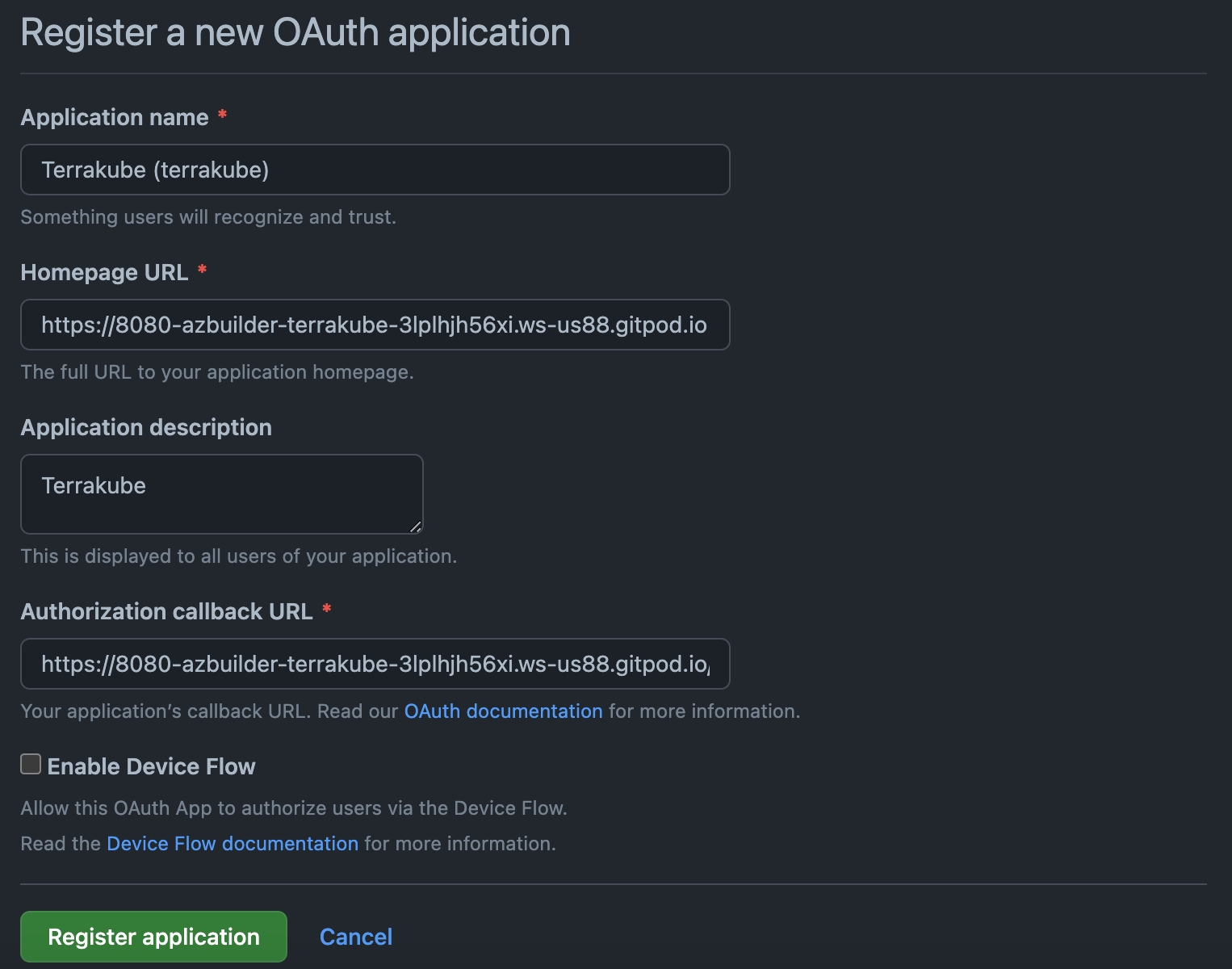

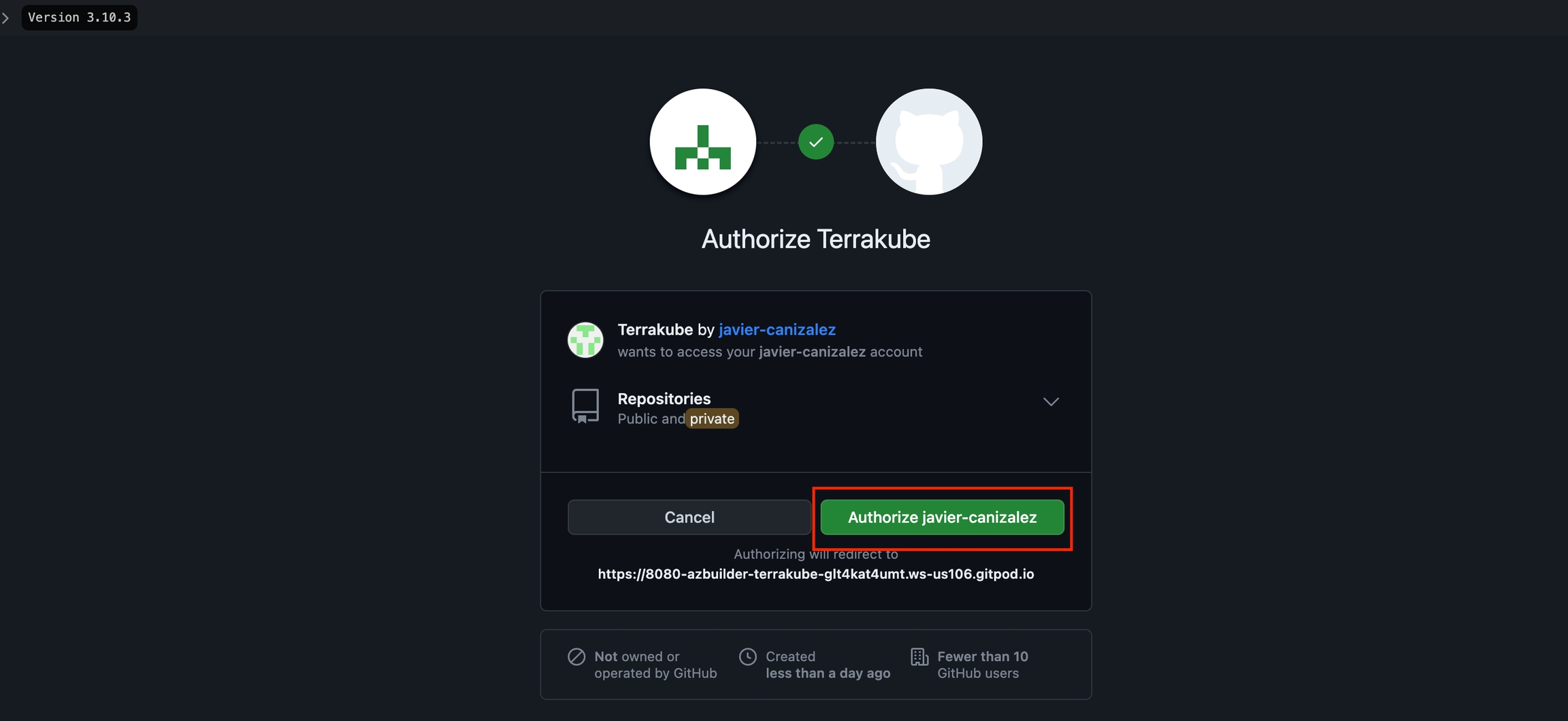

To use repositories from GitHub.com with Terrakube workspaces and modules, follow these steps:

Navigate to the desired organization and click the Settings button, then on the left menu select VCS Providers

If you need to manage your workspaces and execute jobs from an external CI/CD tool, you can use the Terrakube API; however, a simpler approach is to use the existing integrations:

    helm repo add terrakube-repo https://AzBuilder.github.io/terrakube-helm-chart
    helm repo update

    minikube start
    minikube addons enable ingress
    minikube addons enable storage-provisioner
    minikube ip

    kubectl create namespace terrakube

    sudo nano /etc/hosts

192.168.59.100 terrakube-ui.minikube.net